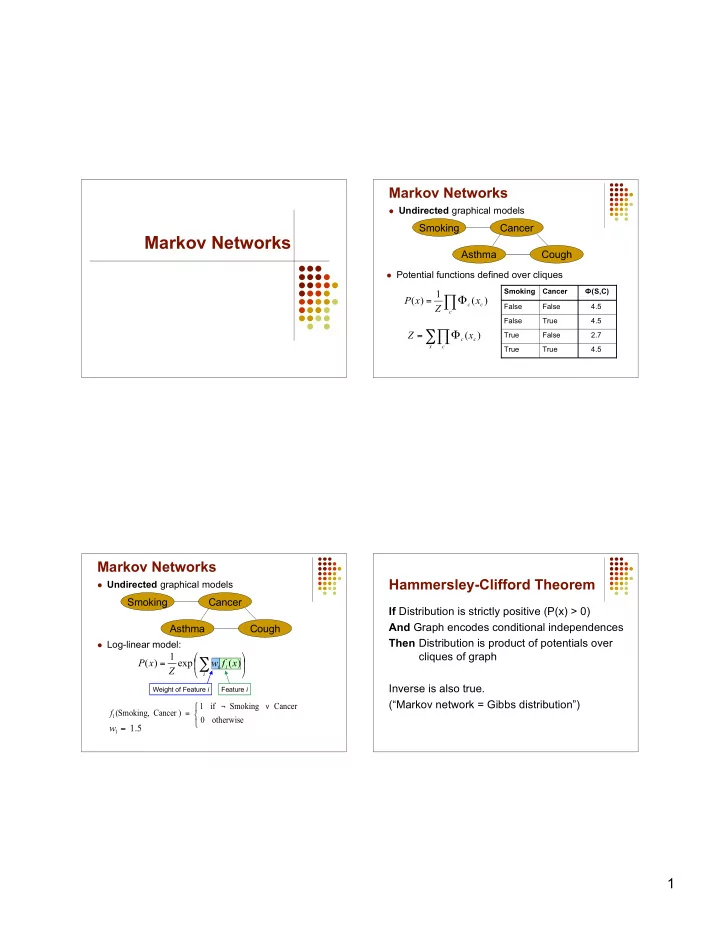

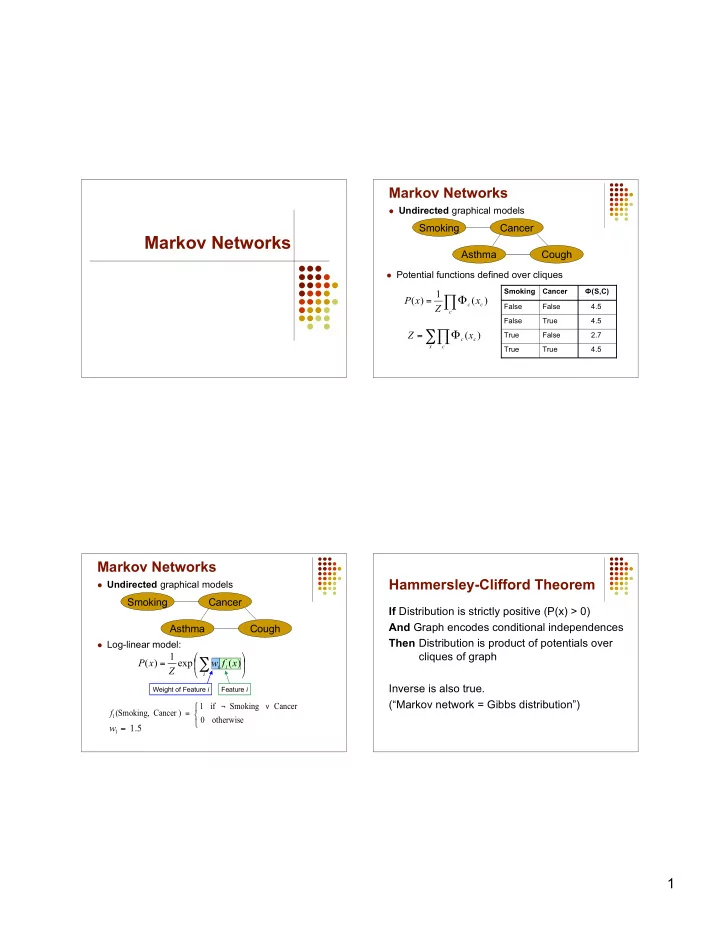

Markov Networks

- Undirected graphical models (example nodes: Smoking, Cancer, Asthma, Cough)
- Potential functions defined over cliques:

$$P(x) = \frac{1}{Z} \prod_c \Phi_c(x_c), \qquad Z = \sum_x \prod_c \Phi_c(x_c)$$

| Smoking | Cancer | Φ(S,C) |
|---|---|---|
| False | False | 4.5 |
| False | True | 4.5 |
| True | False | 2.7 |
| True | True | 4.5 |

Hammersley-Clifford Theorem

If the distribution is strictly positive ($P(x) > 0$) and the graph encodes its conditional independences, then the distribution is a product of potentials over the cliques of the graph. The converse is also true ("Markov network = Gibbs distribution").

Markov Networks (log-linear model)

$$P(x) = \frac{1}{Z} \exp\Big(\sum_i w_i f_i(x)\Big)$$

where $w_i$ is the weight of feature $i$ and $f_i(x)$ is feature $i$. Example:

$$f_1(\text{Smoking}, \text{Cancer}) = \begin{cases} 1 & \text{if } \neg\text{Smoking} \lor \text{Cancer} \\ 0 & \text{otherwise} \end{cases} \qquad w_1 = 1.5$$
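To make the two parameterizations concrete, here is a minimal Python sketch that normalizes the Φ(S,C) table into P(x) by enumerating all four joint states, and does the same for the log-linear model with the single feature f1 and w1 = 1.5. The helper names (`normalize_potentials`, `log_linear`) are illustrative, not from the slides, and brute-force enumeration of Z is only feasible for tiny models like this one.

```python
import itertools
import math

# Potential table Phi(Smoking, Cancer), copied from the slide.
PHI = {
    (False, False): 4.5,
    (False, True): 4.5,
    (True, False): 2.7,
    (True, True): 4.5,
}

# Helper names below are illustrative, not from the slides.


def normalize_potentials(phi):
    """Single-clique model: P(x) = Phi(x) / Z with Z = sum_x Phi(x)."""
    z = sum(phi.values())
    return {x: v / z for x, v in phi.items()}, z


def f1(smoking, cancer):
    """Feature from the slide: 1 if (not Smoking) or Cancer, else 0."""
    return 1.0 if (not smoking) or cancer else 0.0


def log_linear(weights, features, states):
    """P(x) = exp(sum_i w_i f_i(x)) / Z, with Z computed by enumeration."""
    scores = {
        x: math.exp(sum(w * f(*x) for w, f in zip(weights, features)))
        for x in states
    }
    z = sum(scores.values())
    return {x: s / z for x, s in scores.items()}, z


# All joint states (Smoking, Cancer), in the same order as the table.
STATES = list(itertools.product([False, True], repeat=2))

p_table, z_table = normalize_potentials(PHI)
p_loglin, z_loglin = log_linear([1.5], [f1], STATES)
print("table:      Z =", round(z_table, 3), "P(S=T, C=F) =", round(p_table[(True, False)], 3))
print("log-linear: Z =", round(z_loglin, 3), "P(S=T, C=F) =", round(p_loglin[(True, False)], 3))
```

The table gives Z = 16.2. The log-linear version assigns each state satisfying ¬Smoking ∨ Cancer the factor e^1.5 ≈ 4.48 and the state (True, False) the factor e^0 = 1, so it matches the table up to rounding in three rows but not in the (True, False) row, where the table has 2.7.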

Markov Nets vs. Bayes Nets

| Property | Markov Nets | Bayes Nets |
|---|---|---|
| Form | Product of potentials | Product of potentials |
| Potentials | Arbitrary | Conditional probabilities |
| Cycles | Allowed | Forbidden |
| Partition function | Z = ? | Z = 1 |
| Independence check | Graph separation | D-separation |
| Independence properties | Some | Some |
| Inference | MCMC, BP, etc. | Convert to Markov net |

Inference in Markov Networks

Goal: compute marginals and conditionals of

$$P(X) = \frac{1}{Z} \exp\Big(\sum_i w_i f_i(X)\Big), \qquad Z = \sum_X \exp\Big(\sum_i w_i f_i(X)\Big)$$

- Exact inference is #P-complete.
- Conditioning on the Markov blanket is easy:

$$P(x \mid MB(x)) = \frac{\exp\big(\sum_i w_i f_i(x)\big)}{\exp\big(\sum_i w_i f_i(x=0)\big) + \exp\big(\sum_i w_i f_i(x=1)\big)}$$

- Gibbs sampling exploits this.

MCMC: Gibbs Sampling

1. state ← random truth assignment
2. for i ← 1 to num-samples: for each variable x, sample x according to P(x | neighbors(x)), then set state ← state with the new value of x
3. P(F) ← fraction of states in which F is true

Other Inference Methods

- Belief propagation (sum-product)
- Mean field / variational approximations
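The sketch below illustrates these inference ideas on a small four-variable model: it computes a marginal exactly by enumerating all 2^4 states (exponential in the number of variables), computes the Markov-blanket conditional as the ratio above, and runs the Gibbs sampling loop. Only f1 with w1 = 1.5 comes from the slides; the other two features, all their weights, the burn-in period, the fixed seed, and counting one state per full sweep are hypothetical choices for illustration.

```python
import itertools
import math
import random

VARS = ["Smoking", "Cancer", "Asthma", "Cough"]

# (weight, feature) pairs. Only the first (f1, w1 = 1.5) is from the slides;
# the other two features and their weights are hypothetical.
FEATURES = [
    (1.5, lambda s: (not s["Smoking"]) or s["Cancer"]),  # f1: Smoking => Cancer
    (1.1, lambda s: (not s["Asthma"]) or s["Cough"]),    # hypothetical: Asthma => Cough
    (0.8, lambda s: s["Smoking"] == s["Asthma"]),        # hypothetical coupling
]


def score(state):
    """Weighted feature sum: sum_i w_i f_i(x) for one full truth assignment."""
    return sum(w * float(f(state)) for w, f in FEATURES)


def exact_prob(query):
    """P(query) by enumerating all 2^n states; cost grows exponentially in n."""
    z = 0.0
    num = 0.0
    for values in itertools.product([False, True], repeat=len(VARS)):
        state = dict(zip(VARS, values))
        weight = math.exp(score(state))
        z += weight
        if query(state):
            num += weight
    return num / z


def prob_true_given_rest(state, var):
    """P(var = True | all other variables). Features that do not mention var
    contribute the same factor to both terms and cancel, so in effect only
    var's Markov blanket matters."""
    on = math.exp(score({**state, var: True}))
    off = math.exp(score({**state, var: False}))
    return on / (on + off)


def gibbs(query, num_samples=20000, burn_in=1000, seed=0):
    """Estimate P(query) as the fraction of sampled states where it holds.
    burn_in and seed are illustrative additions, not part of the slide."""
    rng = random.Random(seed)
    state = {v: rng.random() < 0.5 for v in VARS}  # random truth assignment
    hits = 0
    for i in range(burn_in + num_samples):
        for var in VARS:  # resample each variable given the others
            state[var] = rng.random() < prob_true_given_rest(state, var)
        if i >= burn_in and query(state):  # count one state per full sweep
            hits += 1
    return hits / num_samples


def has_cancer(state):
    return state["Cancer"]


print("exact P(Cancer):", round(exact_prob(has_cancer), 3))
print("Gibbs P(Cancer):", round(gibbs(has_cancer), 3))
```

The Gibbs estimate should land close to the exact value; the exact version stops being an option as soon as the number of variables grows, which is why sampling is used at all.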

MAP/MPE Inference

Goal: find the most likely state of the world given evidence:

$$\max_y P(y \mid x)$$

where $y$ is the query and $x$ is the evidence.

MAP Inference Algorithms

- Iterated conditional modes
- Simulated annealing
- Graph cuts
- Belief propagation (max-product)
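A minimal sketch of the first algorithm listed, iterated conditional modes (ICM): fix the evidence, then repeatedly set each query variable to the value that maximizes the score with all other variables held fixed, until no single change helps. Because Z does not depend on the state, maximizing the weighted feature sum is equivalent to maximizing P(y | x). A brute-force search is included as a reference. Only f1 with w1 = 1.5 comes from the slides; the other features, their weights, and the helper names are hypothetical.

```python
import itertools

VARS = ["Smoking", "Cancer", "Asthma", "Cough"]

# (weight, feature) pairs. Only f1 (w1 = 1.5) is from the slides;
# the other two features and their weights are hypothetical.
FEATURES = [
    (1.5, lambda s: (not s["Smoking"]) or s["Cancer"]),  # f1: Smoking => Cancer
    (1.1, lambda s: (not s["Asthma"]) or s["Cough"]),    # hypothetical
    (0.8, lambda s: s["Smoking"] == s["Asthma"]),        # hypothetical
]


def score(state):
    """log P(state) + log Z; Z is constant, so it does not affect the argmax."""
    return sum(w * float(f(state)) for w, f in FEATURES)


def icm(evidence, init=False, max_sweeps=100):
    """Iterated conditional modes: greedy coordinate-wise maximization."""
    state = {v: evidence.get(v, init) for v in VARS}
    query = [v for v in VARS if v not in evidence]
    for _ in range(max_sweeps):
        changed = False
        for var in query:
            # Best value of var with everything else fixed (ties -> False).
            best = max([False, True], key=lambda val: score({**state, var: val}))
            if best != state[var]:
                state[var] = best
                changed = True
        if not changed:  # local maximum: no single flip improves the score
            break
    return state


def brute_force_map(evidence):
    """Exact MAP by enumerating every assignment of the query variables."""
    query = [v for v in VARS if v not in evidence]
    best = None
    for values in itertools.product([False, True], repeat=len(query)):
        state = {**evidence, **dict(zip(query, values))}
        if best is None or score(state) > score(best):
            best = state
    return best


evidence = {"Smoking": True}
print("ICM:        ", icm(evidence), score(icm(evidence)))
print("brute force:", brute_force_map(evidence), score(brute_force_map(evidence)))
```

With evidence Smoking = True and an all-False start, `icm` stops at Cancer = True, Asthma = False, Cough = False (score 2.6), while `brute_force_map` finds all variables True (score 3.4). No single flip improves the ICM state, which is the kind of local optimum that alternatives such as simulated annealing are meant to escape.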
