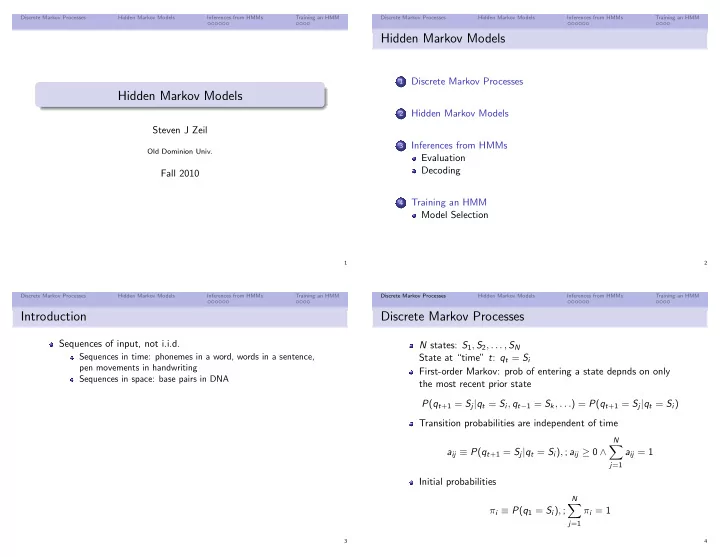

Hidden Markov Models

Steven J Zeil
Old Dominion Univ.
Fall 2010

1. Discrete Markov Processes
2. Hidden Markov Models
3. Inferences from HMMs
   - Evaluation
   - Decoding
4. Training an HMM
   - Model Selection

Introduction

- Sequences of input, not i.i.d.
- Sequences in time: phonemes in a word, words in a sentence, pen movements in handwriting
- Sequences in space: base pairs in DNA

Discrete Markov Processes

- $N$ states: $S_1, S_2, \ldots, S_N$
- State at “time” $t$: $q_t = S_i$
- First-order Markov: the probability of entering a state depends only on the most recent prior state

$$P(q_{t+1} = S_j \mid q_t = S_i, q_{t-1} = S_k, \ldots) = P(q_{t+1} = S_j \mid q_t = S_i)$$

- Transition probabilities are independent of time

$$a_{ij} \equiv P(q_{t+1} = S_j \mid q_t = S_i), \qquad a_{ij} \ge 0 \;\wedge\; \sum_{j=1}^{N} a_{ij} = 1$$

- Initial probabilities

$$\pi_i \equiv P(q_1 = S_i), \qquad \sum_{i=1}^{N} \pi_i = 1$$
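As an illustration of the definitions above, the following minimal sketch checks the constraints on $\pi$ and $A$ and samples a state sequence from a first-order chain. The two-state values of `pi` and `A` and the function names `is_valid_chain` and `sample_chain` are assumptions made for this example; they do not come from the slides.

```python
import random

def is_valid_chain(pi, A, tol=1e-9):
    """Check a_ij >= 0, each row of A sums to 1, and pi sums to 1."""
    rows = [pi] + list(A)
    nonnegative = all(p >= 0 for row in rows for p in row)
    normalized = all(abs(sum(row) - 1.0) <= tol for row in rows)
    return nonnegative and normalized

def sample_chain(pi, A, T, rng):
    """Draw q_1..q_T as 0-based state indices.

    The next state is drawn using only the current state (first-order Markov),
    and the same matrix A is used at every step (time-independent transitions).
    """
    states = list(range(len(pi)))
    q = [rng.choices(states, weights=pi)[0]]
    for _ in range(T - 1):
        q.append(rng.choices(states, weights=A[q[-1]])[0])
    return q

# Hypothetical two-state chain, chosen only for illustration.
pi = [0.6, 0.4]
A = [[0.7, 0.3],
     [0.2, 0.8]]

if __name__ == "__main__":
    print(is_valid_chain(pi, A))
    print(sample_chain(pi, A, 10, random.Random(0)))
```

Passing an explicit `random.Random` instance keeps the sampling reproducible without touching the global random state.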

Stochastic Automaton

[figure]

Example: Balls & Urns

- Three urns, each full of balls of one color. A “genie” moves randomly from urn to urn selecting balls.
- $S_1$: red, $S_2$: blue, $S_3$: green

$$\vec{\pi} = [0.5, 0.2, 0.3]^T, \qquad A = \begin{bmatrix} 0.4 & 0.3 & 0.3 \\ 0.2 & 0.6 & 0.2 \\ 0.1 & 0.1 & 0.8 \end{bmatrix}$$

- Suppose we observe $O = [\text{red}, \text{red}, \text{green}, \text{green}]$

$$P(O \mid A, \vec{\pi}) = P(S_1)\, P(S_1 \mid S_1)\, P(S_3 \mid S_1)\, P(S_3 \mid S_3) = \pi_1 a_{11} a_{13} a_{33} = 0.5 \cdot 0.4 \cdot 0.3 \cdot 0.8 = 0.048$$

Hiding the Model

Now suppose that

- The urns and the genie are hidden behind a screen.
- The urns start with different mixtures of all three colors
- and (if we’re really unlucky) we don’t even know how many urns there are

Suppose we observe $O = [\text{red}, \text{red}, \text{green}, \text{green}]$. Can we say anything at all?

Hidden Markov Models

- States are not observable
- Discrete observations $[v_1, v_2, \ldots, v_M]$ are recorded
- Each is a probabilistic function of the state
- Emission probabilities

$$b_j(m) \equiv P(O_t = v_m \mid q_t = S_j)$$

- For any given sequence of observations, there may be multiple possible state sequences.
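The Balls & Urns calculation (the fully observable case, before the model is hidden) can be reproduced with a few lines of code. This is a minimal sketch: `pi` and `A` are the values from the example, while the function name `sequence_probability` and the 0-based color-to-state mapping are assumptions introduced here.

```python
# Values from the Balls & Urns example; states are 0-based here
# (index 0 = S_1 red, 1 = S_2 blue, 2 = S_3 green).
pi = [0.5, 0.2, 0.3]
A = [[0.4, 0.3, 0.3],
     [0.2, 0.6, 0.2],
     [0.1, 0.1, 0.8]]
COLOR_TO_STATE = {"red": 0, "blue": 1, "green": 2}

def sequence_probability(states, pi, A):
    """P(q_1..q_T) = pi[q_1] * product over t of A[q_t][q_{t+1}]."""
    p = pi[states[0]]
    for prev, nxt in zip(states, states[1:]):
        p *= A[prev][nxt]
    return p

O = ["red", "red", "green", "green"]
# Each urn holds one color, so the observed colors identify the states directly.
q = [COLOR_TO_STATE[color] for color in O]
p = sequence_probability(q, pi, A)  # approximately 0.048, matching the slide

if __name__ == "__main__":
    print(p)
```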

HMM Unfolded in Time

[figure]

Elements of an HMM

An HMM $\lambda = (A, B, \vec{\pi})$

- $A = [a_{ij}]$: $N \times N$ state transition probability matrix
  - $N$ is the number of hidden states
- $B = b_j(m)$: $N \times M$ emission probability matrix
  - $M$ is the number of observation symbols
- $\vec{\pi} = [\pi_i]^T$: $N \times 1$ initial state probability vector

Making Inferences from an HMM

- Evaluation: Given $\lambda$ and $O$, calculate $P(O \mid \lambda)$
  - Example: Given several HMMs, each trained to recognize a different handwritten character, and given a sequence of pen strokes, which character is most likely denoted by that sequence?
- Decoding: Given $\lambda$ and $O$, what is the most probable sequence of states leading to that observation?
  - Example: Given an HMM trained on sentences and a sequence of words, some of which can belong to multiple syntactic classes (e.g., “green” can be an adjective, a noun, or a verb), determine the most likely syntactic class of each word from the surrounding context.
  - Related problems: most likely starting or ending state

Decoding Example

- What’s the weather been?
- States can be “labeled” even though “hidden”.

[figure]

Evaluation

- Given $\lambda$ and $O$, calculate $P(O \mid \lambda)$
- If we knew the state sequence $\vec{q}$, we could do

$$P(O \mid \lambda, \vec{q}) = \prod_{t=1}^{T} P(O_t \mid q_t, \lambda) = \prod_{t=1}^{T} b_{q_t}(O_t)$$

- The probability of a state sequence is

$$P(\vec{q} \mid \lambda) = \pi_{q_1} \prod_{t=1}^{T-1} a_{q_t q_{t+1}}$$

$$P(O, \vec{q} \mid \lambda) = \pi_{q_1} b_{q_1}(O_1) \prod_{t=1}^{T-1} a_{q_t q_{t+1}} b_{q_{t+1}}(O_{t+1})$$

$$P(O \mid \lambda) = \sum_{\text{all possible } \vec{q}} P(O, \vec{q} \mid \lambda)$$

which is totally impractical: the sum runs over $N^T$ state sequences.

Forward Variable

$$\alpha_t(i) \equiv P(O_1 \ldots O_t, q_t = S_i \mid \lambda)$$

$$P(O \mid \lambda) = \sum_{i=1}^{N} \alpha_T(i)$$

Computed recursively

- Initial: $\alpha_1(i) = \pi_i b_i(O_1)$
- Recursion:

$$\alpha_{t+1}(j) = \left[ \sum_{i=1}^{N} \alpha_t(i)\, a_{ij} \right] b_j(O_{t+1})$$

Decoding

- Given $\lambda$ and $O$, what is the most probable sequence of states leading to that observation?
- Start by introducing a backward variable:

$$\beta_t(i) \equiv P(O_{t+1} \ldots O_T \mid q_t = S_i, \lambda)$$

- Initial: $\beta_T(i) = 1$
- Recursion:

$$\beta_t(i) = \sum_{j=1}^{N} a_{ij}\, b_j(O_{t+1})\, \beta_{t+1}(j)$$

Viterbi’s Algorithm

- A constrained optimizer for state graph traversal
- Dynamic programming algorithm:
  - Assign a cost to each edge
  - Update path metrics by addition from shorter paths. Discard suboptimal cases.
  - Starting from the final state, trace back the optimal path.
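The forward and backward recursions can be sketched in plain Python and checked against the brute-force sum over all state sequences. In this sketch `pi` and `A` are taken from the Balls & Urns example, but the emission matrix `B` is a hypothetical set of urn mixtures invented for illustration, and the function names are assumptions. Observations are encoded as 0-based symbol indices (0 = red, 1 = blue, 2 = green).

```python
from itertools import product

def forward(O, pi, A, B):
    """alpha[t][i] = P(O_1..O_{t+1}, state i at that time | lambda), 0-based t."""
    N = len(pi)
    alpha = [[pi[i] * B[i][O[0]] for i in range(N)]]
    for t in range(1, len(O)):
        prev = alpha[-1]
        alpha.append([sum(prev[i] * A[i][j] for i in range(N)) * B[j][O[t]]
                      for j in range(N)])
    return alpha

def backward(O, A, B):
    """beta[t][i] = P(remaining observations | state i at time t, lambda)."""
    N, T = len(A), len(O)
    beta = [[1.0] * N for _ in range(T)]  # last row is the initial condition
    for t in range(T - 2, -1, -1):
        for i in range(N):
            beta[t][i] = sum(A[i][j] * B[j][O[t + 1]] * beta[t + 1][j]
                             for j in range(N))
    return beta

def brute_force(O, pi, A, B):
    """Sum P(O, q | lambda) over all N**T state sequences (for checking only)."""
    N, total = len(pi), 0.0
    for q in product(range(N), repeat=len(O)):
        p = pi[q[0]] * B[q[0]][O[0]]
        for t in range(len(O) - 1):
            p *= A[q[t]][q[t + 1]] * B[q[t + 1]][O[t + 1]]
        total += p
    return total

pi = [0.5, 0.2, 0.3]
A = [[0.4, 0.3, 0.3], [0.2, 0.6, 0.2], [0.1, 0.1, 0.8]]
# Hypothetical emission probabilities (rows: urns, columns: red, blue, green).
B = [[0.6, 0.2, 0.2], [0.1, 0.7, 0.2], [0.2, 0.2, 0.6]]
O = [0, 0, 2, 2]  # red, red, green, green

likelihood = sum(forward(O, pi, A, B)[-1])  # P(O | lambda) from the last alpha row

if __name__ == "__main__":
    print(likelihood, brute_force(O, pi, A, B))
```

The forward pass costs on the order of $N^2 T$ multiplications, whereas the brute-force check enumerates all $N^T$ sequences and is only usable for tiny examples like this one.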

The HMM Trellis

[figure]

Viterbi’s Algorithm for HMMs

$$\delta_t(i) \equiv \max_{q_1 q_2 \ldots q_{t-1}} p(q_1 q_2 \ldots q_{t-1}, q_t = S_i, O_1 \ldots O_t \mid \lambda)$$

- Initial: $\delta_1(i) = \pi_i b_i(O_1)$, $\psi_1(i) = 0$
- Iterate:

$$\delta_t(j) = \max_i \delta_{t-1}(i)\, a_{ij}\, b_j(O_t), \qquad \psi_t(j) = \arg\max_i \delta_{t-1}(i)\, a_{ij}$$

- Optimum: $p^* = \max_i \delta_T(i)$, $q^*_T = \arg\max_i \delta_T(i)$
- Backtrack: $q^*_t = \psi_{t+1}(q^*_{t+1})$, $t = T-1, \ldots, 1$

Examples:

- Numeric sequence, fixed problem
- Coin Flipping, Customizable
- Spelling Correction as a decoding problem

Training an HMM

Need to estimate $a_{ij}$, $\pi_i$, $b_j(m)$ that maximize the likelihood of observing a set of training instances $X = \{O^k\}_{k=1}^{K}$

Baum-Welch Algorithm - Overview

An E-M style algorithm. Repeatedly apply the steps

- E: Use the current $\lambda = (A, B, \vec{\pi})$ to compute, for each training instance,
  - the probability of being in $S_i$ at time $t$
  - the probability of making the transition from $S_i$ to $S_j$ at time $t + 1$
- M: Update the values of $\lambda = (A, B, \vec{\pi})$ to maximize the likelihood of matching those probabilities.
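The Viterbi recursion for HMMs (initial, iterate, optimum, backtrack) can be sketched as follows. `pi` and `A` come from the Balls & Urns example; the emission matrix `B` is hypothetical, and the function name `viterbi` and the 0-based indexing are assumptions of this sketch. A production version would normally work with log probabilities to avoid underflow on long sequences, which this sketch does not do.

```python
def viterbi(O, pi, A, B):
    """Return (p_star, path): the best joint probability and a most probable
    state sequence (0-based indices) for the observation indices in O."""
    N, T = len(pi), len(O)
    # Initial: delta_1(i) = pi_i * b_i(O_1), psi_1(i) = 0
    delta = [[pi[i] * B[i][O[0]] for i in range(N)]]
    psi = [[0] * N]
    # Iterate: keep only the best predecessor of each state j at each time t
    for t in range(1, T):
        d_row, p_row = [], []
        for j in range(N):
            best_i = max(range(N), key=lambda i: delta[t - 1][i] * A[i][j])
            p_row.append(best_i)
            d_row.append(delta[t - 1][best_i] * A[best_i][j] * B[j][O[t]])
        delta.append(d_row)
        psi.append(p_row)
    # Optimum: best final state
    last = max(range(N), key=lambda i: delta[T - 1][i])
    # Backtrack: follow psi from the final state to the first
    path = [last]
    for t in range(T - 1, 0, -1):
        path.append(psi[t][path[-1]])
    path.reverse()
    return delta[T - 1][last], path

pi = [0.5, 0.2, 0.3]
A = [[0.4, 0.3, 0.3], [0.2, 0.6, 0.2], [0.1, 0.1, 0.8]]
# Hypothetical emission probabilities (rows: urns, columns: red, blue, green).
B = [[0.6, 0.2, 0.2], [0.1, 0.7, 0.2], [0.2, 0.2, 0.6]]
O = [0, 0, 2, 2]  # red, red, green, green

if __name__ == "__main__":
    p_star, path = viterbi(O, pi, A, B)
    print(p_star, path)
```

When several paths tie for the maximum, `max` returns the first candidate it encounters, so the returned path is one optimal path rather than the only one.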

From the Training Data

$$z_i^t = \begin{cases} 1 & \text{if } q_t = S_i \\ 0 & \text{otherwise} \end{cases}$$

Note that

$$\frac{\sum_k z_i^k}{K} = \hat{P}(q_t = S_i \mid \lambda)$$

$$z_{ij}^t = \begin{cases} 1 & \text{if } q_t = S_i \wedge q_{t+1} = S_j \\ 0 & \text{otherwise} \end{cases}$$

Note that

$$\frac{\sum_k z_{ij}^k}{K} = \hat{P}(q_t = S_i, q_{t+1} = S_j \mid \lambda)$$

From the HMM

$$\gamma_t(i) \equiv P(q_t = S_i \mid O, \lambda) = \frac{\alpha_t(i)\, \beta_t(i)}{\sum_{j=1}^{N} \alpha_t(j)\, \beta_t(j)}$$

During Baum-Welch, we estimate as

$$\gamma_t^k(i) \approx E[z_i^t]$$

From the HMM

$$\xi_t(i, j) \equiv P(q_t = S_i, q_{t+1} = S_j \mid O, \lambda) = \frac{\alpha_t(i)\, a_{ij}\, b_j(O_{t+1})\, \beta_{t+1}(j)}{\sum_k \sum_m \alpha_t(k)\, a_{km}\, b_m(O_{t+1})\, \beta_{t+1}(m)}$$

During Baum-Welch, we estimate as

$$\xi_t^k(i, j) \approx E[z_{ij}^t]$$

Baum-Welch Algorithm - E

Repeatedly apply the steps

- E: For each $O^k$,

$$\gamma_t^k(i) \leftarrow E[z_i^t], \qquad \xi_t^k(i, j) \leftarrow E[z_{ij}^t]$$

  Then average over all observations:

$$\gamma_t(i) \leftarrow \frac{\sum_{k=1}^{K} \gamma_t^k(i)}{K}, \qquad \xi_t(i, j) \leftarrow \frac{\sum_{k=1}^{K} \xi_t^k(i, j)}{K}$$

- M: Update the values of $\lambda = (A, B, \vec{\pi})$ to maximize the likelihood of matching those probabilities.
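The E step can be sketched by computing $\gamma_t(i)$ and $\xi_t(i, j)$ from the forward and backward variables and then averaging over training instances. This is a minimal sketch under stated assumptions: the emission matrix `B` is hypothetical, the function names are invented for this example, and the averaging helper assumes all training sequences have the same length so that the per-time-step averages are well defined. The M step is not shown.

```python
def forward(O, pi, A, B):
    N = len(pi)
    alpha = [[pi[i] * B[i][O[0]] for i in range(N)]]
    for t in range(1, len(O)):
        prev = alpha[-1]
        alpha.append([sum(prev[i] * A[i][j] for i in range(N)) * B[j][O[t]]
                      for j in range(N)])
    return alpha

def backward(O, A, B):
    N, T = len(A), len(O)
    beta = [[1.0] * N for _ in range(T)]
    for t in range(T - 2, -1, -1):
        for i in range(N):
            beta[t][i] = sum(A[i][j] * B[j][O[t + 1]] * beta[t + 1][j]
                             for j in range(N))
    return beta

def e_step(O, pi, A, B):
    """Return (gamma, xi) for one observation sequence O (0-based indices)."""
    N, T = len(pi), len(O)
    alpha, beta = forward(O, pi, A, B), backward(O, A, B)
    gamma = []
    for t in range(T):
        norm = sum(alpha[t][j] * beta[t][j] for j in range(N))
        gamma.append([alpha[t][i] * beta[t][i] / norm for i in range(N)])
    xi = []
    for t in range(T - 1):
        raw = [[alpha[t][i] * A[i][j] * B[j][O[t + 1]] * beta[t + 1][j]
                for j in range(N)] for i in range(N)]
        norm = sum(map(sum, raw))  # the double sum in the denominator
        xi.append([[v / norm for v in row] for row in raw])
    return gamma, xi

def average(items):
    """Element-wise mean of K equally shaped nested lists."""
    if isinstance(items[0], list):
        return [average(list(group)) for group in zip(*items)]
    return sum(items) / len(items)

pi = [0.5, 0.2, 0.3]
A = [[0.4, 0.3, 0.3], [0.2, 0.6, 0.2], [0.1, 0.1, 0.8]]
# Hypothetical emission probabilities (rows: urns, columns: red, blue, green).
B = [[0.6, 0.2, 0.2], [0.1, 0.7, 0.2], [0.2, 0.2, 0.6]]
X = [[0, 0, 2, 2], [1, 0, 2, 1]]  # two hypothetical training sequences

per_instance = [e_step(O, pi, A, B) for O in X]
gamma_avg = average([g for g, _ in per_instance])
xi_avg = average([x for _, x in per_instance])
```

A useful consistency check is that summing $\xi_t(i, j)$ over $j$ recovers $\gamma_t(i)$ for every $t < T$, which follows from the backward recursion.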
