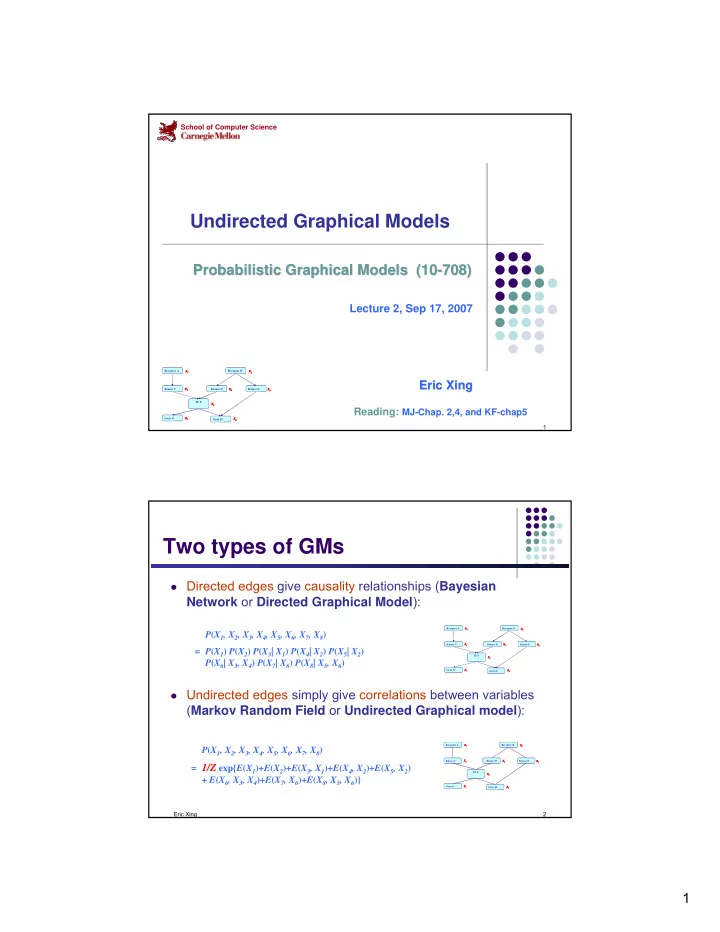

School of Computer Science

Undirected Graphical Models
Probabilistic Graphical Models (10-708)
Lecture 2, Sep 17, 2007
Eric Xing

Reading: MJ-Chap. 2,4, and KF-chap5

[Figure: example network over eight variables: Receptor A ($X_1$), Receptor B ($X_2$), Kinase C ($X_3$), Kinase D ($X_4$), Kinase E ($X_5$), TF F ($X_6$), Gene G ($X_7$), Gene H ($X_8$)]

Two types of GMs

- Directed edges give causality relationships (Bayesian Network or Directed Graphical Model):

$$P(X_1, X_2, X_3, X_4, X_5, X_6, X_7, X_8) = P(X_1)\,P(X_2)\,P(X_3 \mid X_1)\,P(X_4 \mid X_2)\,P(X_5 \mid X_2)\,P(X_6 \mid X_3, X_4)\,P(X_7 \mid X_6)\,P(X_8 \mid X_5, X_6)$$

- Undirected edges simply give correlations between variables (Markov Random Field or Undirected Graphical model):

$$P(X_1, X_2, X_3, X_4, X_5, X_6, X_7, X_8) = \frac{1}{Z} \exp\{ E(X_1) + E(X_2) + E(X_3, X_1) + E(X_4, X_2) + E(X_5, X_2) + E(X_6, X_3, X_4) + E(X_7, X_6) + E(X_8, X_5, X_6) \}$$

Review: independence properties of DAGs

- Defn: let $I_\ell(G)$ be the set of local independence properties encoded by DAG $G$, namely: $\{ X_i \perp \text{NonDescendants}(X_i) \mid \text{Parents}(X_i) \}$
- Defn: A DAG $G$ is an I-map (independence-map) of $P$ if $I_\ell(G) \subseteq I(P)$
- A fully connected DAG $G$ is an I-map for any distribution, since $I_\ell(G) = \emptyset \subseteq I(P)$ for any $P$.
- Defn: A DAG $G$ is a minimal I-map for $P$ if it is an I-map for $P$, and if the removal of even a single edge from $G$ renders it not an I-map.
- A distribution may have several minimal I-maps
  - Each corresponding to a specific node-ordering

P-maps

- Defn: A DAG $G$ is a perfect map (P-map) for a distribution $P$ if $I(P) = I(G)$.
- Thm: not every distribution has a perfect map as DAG.
  - Pf by counterexample. Suppose we have a model where $A \perp C \mid \{B, D\}$, and $B \perp D \mid \{A, C\}$. This cannot be represented by any Bayes net.
  - e.g., BN1 wrongly says $B \perp D \mid A$, BN2 wrongly says $B \perp D$.

[Figure: three graphs over A, B, C, D: the candidate Bayes nets BN1 and BN2, and the MRF]
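The counterexample can be checked numerically. The sketch below is illustrative only: it assumes binary variables and arbitrary, made-up pairwise potentials on the four-cycle A - B - C - D - A (the function names `psi`, `unnormalized`, and `cond_indep` are hypothetical). It confirms that such an MRF satisfies both target independencies, while the two statements attributed to BN1 and BN2 fail for this distribution.

```python
from itertools import product

def psi(u, v, strength):
    # Hypothetical pairwise potential: favors agreement by a factor `strength`.
    return strength if u == v else 1.0

def unnormalized(a, b, c, d):
    # Product of potentials over the four edges of the cycle A-B-C-D-A.
    return psi(a, b, 2.0) * psi(b, c, 3.0) * psi(c, d, 1.5) * psi(d, a, 4.0)

states = list(product([0, 1], repeat=4))
Z = sum(unnormalized(*s) for s in states)
joint = {s: unnormalized(*s) / Z for s in states}

def cond_indep(joint, i, j, given, tol=1e-12):
    """Check X_i independent of X_j given X_given by testing
    p(i, j, g) * p(g) == p(i, g) * p(j, g) for every assignment."""
    def marg(idx):
        out = {}
        for s, p in joint.items():
            key = tuple(s[k] for k in idx)
            out[key] = out.get(key, 0.0) + p
        return out
    g = list(given)
    p_ijg, p_ig, p_jg, p_g = marg([i, j] + g), marg([i] + g), marg([j] + g), marg(g)
    for key, p in p_ijg.items():
        xi, xj, xg = key[0], key[1], key[2:]
        if abs(p * p_g[xg] - p_ig[(xi,) + xg] * p_jg[(xj,) + xg]) > tol:
            return False
    return True

A, B, C, D = 0, 1, 2, 3
print(cond_indep(joint, A, C, (B, D)))  # independence required by the model
print(cond_indep(joint, B, D, (A, C)))  # independence required by the model
print(cond_indep(joint, B, D, (A,)))    # statement attributed to BN1
print(cond_indep(joint, B, D, ()))      # statement attributed to BN2
```

Brute-force enumeration is used here only because the example has 16 joint states; it does not scale to larger models.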

Undirected graphical models

[Figure: undirected graph over $X_1$, $X_2$, $X_3$, $X_4$, $X_5$]

- Pairwise (non-causal) relationships
- Can write down model, and score specific configurations of the graph, but no explicit way to generate samples
- Contingency constraints on node configurations

Canonical examples

- The grid model
  - Naturally arises in image processing, lattice physics, etc.
  - Each node may represent a single "pixel", or an atom
  - The states of adjacent or nearby nodes are "coupled" due to pattern continuity or electro-magnetic force, etc.
  - Most likely joint-configurations usually correspond to a "low-energy" state
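To make "scoring a configuration" and "low-energy state" concrete, here is a minimal sketch that assumes an Ising-style energy on a small grid with spins in {-1, +1}. The energy form, the `coupling` parameter, and the helper names are assumptions for illustration and are not taken from the slides.

```python
import math

def grid_edges(rows, cols):
    """Edges between horizontally and vertically adjacent grid cells."""
    edges = []
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:
                edges.append(((r, c), (r, c + 1)))
            if r + 1 < rows:
                edges.append(((r, c), (r + 1, c)))
    return edges

def energy(config, coupling=1.0):
    """Assumed Ising-style energy: each agreeing neighbor pair lowers
    the energy by `coupling`, each disagreeing pair raises it."""
    rows, cols = len(config), len(config[0])
    total = sum(config[r1][c1] * config[r2][c2]
                for (r1, c1), (r2, c2) in grid_edges(rows, cols))
    return -coupling * total

def score(config):
    """Unnormalized score exp(-energy); no partition function is needed
    to compare two configurations."""
    return math.exp(-energy(config))

smooth = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
checkerboard = [[1, -1, 1], [-1, 1, -1], [1, -1, 1]]

print(energy(smooth), energy(checkerboard))
print(score(smooth) > score(checkerboard))
```

The smooth configuration gets the lower energy and therefore the higher score, which matches the intuition that coupled neighbors prefer pattern continuity.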

Social networks

[Figure: The New Testament Social Networks]

Protein interaction networks

[Figure: protein interaction network]

Modeling Go

[Figure: Go board]

Information retrieval

[Figure: model linking topic, text, and image nodes]

- First hw out today! Start now!
- Auditing students: please fill out forms
- Recitation:
- questions:

Distributional equivalence and I-equivalence

- All independence in $I_d(G)$ will be captured in $I_f(G)$; is the reverse true?
- Are "not-independence" from $G$ all honored in $P_f$?

Global Markov Independencies

- Let $H$ be an undirected graph:
- $B$ separates $A$ and $C$ if every path from a node in $A$ to a node in $C$ passes through a node in $B$: $\text{sep}_H(A; C \mid B)$
- A probability distribution satisfies the global Markov property if for any disjoint $A$, $B$, $C$, such that $B$ separates $A$ and $C$, $A$ is independent of $C$ given $B$:

$$I(H) = \{ A \perp C \mid B : \text{sep}_H(A; C \mid B) \}$$

Soundness of separation criterion

- The independencies in $I(H)$ are precisely those that are guaranteed to hold for every MRF distribution $P$ over $H$.
- In other words, the separation criterion is sound for detecting independence properties in MRF distributions over $H$.
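The separation test is a plain graph-reachability question: remove the nodes in B and ask whether any node of C can still be reached from A. The sketch below assumes an adjacency-list representation and uses a breadth-first search; the function name `separated` and the example graph (the four-cycle from the P-map counterexample) are chosen for illustration.

```python
from collections import deque

def separated(adj, A, C, B):
    """Return True if every path from a node in A to a node in C
    passes through a node in B (A, B, C assumed disjoint)."""
    A, C, B = set(A), set(C), set(B)
    frontier = deque(A - B)
    seen = set(frontier)
    while frontier:
        u = frontier.popleft()
        if u in C:
            return False          # reached C without crossing B
        for v in adj[u]:
            if v not in seen and v not in B:
                seen.add(v)
                frontier.append(v)
    return True

# Four-cycle A - B - C - D - A.
cycle = {"A": ["B", "D"], "B": ["A", "C"], "C": ["B", "D"], "D": ["A", "C"]}

print(separated(cycle, {"A"}, {"C"}, {"B", "D"}))  # B and D block both paths
print(separated(cycle, {"B"}, {"D"}, {"A"}))       # path B - C - D stays open
```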

Local Markov independencies

- For each node $X_i \in V$, there is a unique Markov blanket of $X_i$, denoted $MB_{X_i}$, which is the set of neighbors of $X_i$ in the graph (those that share an edge with $X_i$)
- Defn (5.5.4): The local Markov independencies associated with $H$ are:

$$I_\ell(H) = \{ X_i \perp V - \{X_i\} - MB_{X_i} \mid MB_{X_i} : \forall i \}$$

In other words, $X_i$ is independent of the rest of the nodes in the graph given its immediate neighbors

Summary: Conditional Independence Semantics in an MRF

[Figure: node X with neighbors $Y_1$ and $Y_2$]

Structure: an undirected graph

- Meaning: a node is conditionally independent of every other node in the network given its direct neighbors
- Local contingency functions (potentials) and the cliques in the graph completely determine the joint dist.
- Give correlations between variables, but no explicit way to generate samples
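As a small illustration of the definition, the following sketch lists the local Markov independencies of a graph by reading each Markov blanket off the adjacency list. The example graph (edges A-B, A-D, B-D, B-C, C-D) and the function names are assumptions made for this sketch.

```python
def markov_blanket(adj, node):
    """In an undirected graph the Markov blanket is the set of neighbors."""
    return set(adj[node])

def local_independencies(adj):
    """Return (X_i, rest, MB) triples meaning X_i is independent of `rest`
    given MB. Nodes whose blanket covers all other nodes are skipped,
    since the statement is then vacuous."""
    nodes = set(adj)
    statements = []
    for x in sorted(nodes):
        mb = markov_blanket(adj, x)
        rest = nodes - {x} - mb
        if rest:
            statements.append((x, rest, mb))
    return statements

# Assumed example graph with edges A-B, A-D, B-D, B-C, C-D.
graph = {
    "A": ["B", "D"],
    "B": ["A", "C", "D"],
    "C": ["B", "D"],
    "D": ["A", "B", "C"],
}

for x, rest, mb in local_independencies(graph):
    print(f"{x} independent of {sorted(rest)} given {sorted(mb)}")
```

For this graph only A and C yield non-trivial statements, because B and D are each adjacent to every other node.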

Cliques

- For $G = \{V, E\}$, a complete subgraph (clique) is a subgraph $G' = \{V' \subseteq V, E' \subseteq E\}$ such that nodes in $V'$ are fully interconnected
- A (maximal) clique is a complete subgraph s.t. any superset $V'' \supset V'$ is not complete.
- A sub-clique is a not-necessarily-maximal clique.
- Example:
  - max-cliques = $\{A, B, D\}$, $\{B, C, D\}$
  - sub-cliques = $\{A, B\}$, $\{C, D\}$, … (all edges and singletons)

[Figure: graph over A, B, C, D]

Quantitative Specification

- Defn: an undirected graphical model represents a distribution $P(X_1, \dots, X_n)$ defined by an undirected graph $H$, and a set of positive potential functions $\psi_c$ associated with cliques of $H$, s.t.

$$P(x_1, \dots, x_n) = \frac{1}{Z} \prod_{c \in C} \psi_c(\mathbf{x}_c) \quad \text{(a Gibbs distribution)}$$

where $Z$ is known as the partition function:

$$Z = \sum_{x_1, \dots, x_n} \prod_{c \in C} \psi_c(\mathbf{x}_c)$$

- Also known as Markov Random Fields, Markov networks …
- The potential function can be understood as a contingency function of its arguments assigning a "pre-probabilistic" score to their joint configuration.
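The two definitions above can be exercised together on the example graph. The sketch below finds the maximal cliques by brute force, then builds a Gibbs distribution over binary variables and computes the partition function by enumeration. The potential function is a made-up positive function chosen only for illustration, and exhaustive enumeration is feasible only for tiny graphs.

```python
from itertools import combinations, product

nodes = ["A", "B", "C", "D"]
edges = {("A", "B"), ("A", "D"), ("B", "D"), ("B", "C"), ("C", "D")}

def is_complete(subset):
    """A node set is a clique if every pair in it shares an edge."""
    return all((u, v) in edges or (v, u) in edges
               for u, v in combinations(subset, 2))

def maximal_cliques():
    cliques = [set(s) for r in range(1, len(nodes) + 1)
               for s in combinations(nodes, r) if is_complete(s)]
    # Keep only cliques that are not strictly contained in another clique.
    return [c for c in cliques if not any(c < other for other in cliques)]

max_cliques = sorted(tuple(sorted(c)) for c in maximal_cliques())

def potential(values):
    # Hypothetical positive potential: doubles for each agreeing pair.
    return 2.0 ** sum(u == v for u, v in combinations(values, 2))

def unnormalized(assignment):
    score = 1.0
    for clique in max_cliques:
        score *= potential(tuple(assignment[v] for v in clique))
    return score

assignments = [dict(zip(nodes, vals))
               for vals in product([0, 1], repeat=len(nodes))]
Z = sum(unnormalized(a) for a in assignments)  # partition function
P = {tuple(a[v] for v in nodes): unnormalized(a) / Z for a in assignments}

print(max_cliques)
print(Z, sum(P.values()))
```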

Interpretation of Clique Potentials

[Figure: chain X - Y - Z]

- The model implies $X \perp Z \mid Y$. This independence statement implies (by definition) that the joint must factorize as:

$$p(x, y, z) = p(y)\,p(x \mid y)\,p(z \mid y)$$

- We can write this as $p(x, y, z) = p(x, y)\,p(z \mid y)$ or as $p(x, y, z) = p(x \mid y)\,p(z, y)$, but
  - cannot have all potentials be marginals
  - cannot have all potentials be conditionals
- The positive clique potentials can only be thought of as general "compatibility", "goodness" or "happiness" functions over their variables, but not as probability distributions.

Example UGM – using max cliques

[Figure: graph over A, B, C, D with max cliques {A, B, D} and {B, C, D}, carrying potentials $\psi_c(\mathbf{x}_{124})$ and $\psi_c(\mathbf{x}_{234})$]

$$P'(x_1, x_2, x_3, x_4) = \frac{1}{Z}\, \psi_c(\mathbf{x}_{124}) \times \psi_c(\mathbf{x}_{234})$$

$$Z = \sum_{x_1, x_2, x_3, x_4} \psi_c(\mathbf{x}_{124}) \times \psi_c(\mathbf{x}_{234})$$

- For discrete nodes, we can represent $P(X_{1:4})$ as two 3D tables instead of one 4D table
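A small numeric check of the chain argument, under assumed (made-up) conditional probability tables for binary X, Y, Z: the two mixed assignments of potentials reproduce the joint exactly, while using marginals on both cliques, or conditionals on both, yields a different distribution even after normalization (here because p(y) is not uniform).

```python
from itertools import product

# Hypothetical tables for the chain X - Y - Z.
p_y = {0: 0.3, 1: 0.7}
p_x_given_y = {0: {0: 0.9, 1: 0.1}, 1: {0: 0.2, 1: 0.8}}  # indexed [y][x]
p_z_given_y = {0: {0: 0.6, 1: 0.4}, 1: {0: 0.5, 1: 0.5}}  # indexed [y][z]

def joint(x, y, z):
    return p_y[y] * p_x_given_y[y][x] * p_z_given_y[y][z]

def marginal_then_conditional(x, y, z):   # psi_xy = p(x, y), psi_yz = p(z | y)
    return (p_y[y] * p_x_given_y[y][x]) * p_z_given_y[y][z]

def conditional_then_marginal(x, y, z):   # psi_xy = p(x | y), psi_yz = p(y, z)
    return p_x_given_y[y][x] * (p_y[y] * p_z_given_y[y][z])

def both_marginals(x, y, z):              # psi_xy = p(x, y), psi_yz = p(y, z)
    return (p_y[y] * p_x_given_y[y][x]) * (p_y[y] * p_z_given_y[y][z])

def both_conditionals(x, y, z):           # psi_xy = p(x | y), psi_yz = p(z | y)
    return p_x_given_y[y][x] * p_z_given_y[y][z]

states = list(product([0, 1], repeat=3))

def max_gap(score):
    """Largest difference between the normalized score and the true joint."""
    z = sum(score(*s) for s in states)
    return max(abs(score(*s) / z - joint(*s)) for s in states)

for f in (marginal_then_conditional, conditional_then_marginal,
          both_marginals, both_conditionals):
    print(f.__name__, max_gap(f))
```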

Example UGM – using subcliques

[Figure: graph over A, B, C, D with pairwise cliques {A, B}, {A, D}, {B, D}, {B, C}, {C, D}]

$$P''(x_1, x_2, x_3, x_4) = \frac{1}{Z} \prod_{ij} \psi_{ij}(\mathbf{x}_{ij}) = \frac{1}{Z}\, \psi_{12}(\mathbf{x}_{12})\, \psi_{14}(\mathbf{x}_{14})\, \psi_{23}(\mathbf{x}_{23})\, \psi_{24}(\mathbf{x}_{24})\, \psi_{34}(\mathbf{x}_{34})$$

$$Z = \sum_{x_1, x_2, x_3, x_4} \prod_{ij} \psi_{ij}(\mathbf{x}_{ij})$$

- We can represent $P(X_{1:4})$ as 5 2D tables instead of one 4D table
- Pair MRFs, a popular and simple special case
- $I(P')$ vs. $I(P'')$? $D(P')$ vs. $D(P'')$?

Factored graph

[Figure: factor graph for the model over A, B, C, D]
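One way to approach the comparison of the two parameterizations is to note that any pairwise model on this graph can be rewritten as a max-clique model by multiplying each pairwise potential into one clique that contains its edge. The sketch below assumes binary variables and made-up pairwise potentials (`psi_pair` is hypothetical), absorbs them into $\psi_{124}$ and $\psi_{234}$, and checks that both forms give the same distribution. It also tabulates the table sizes for k-state variables. The converse does not hold in general: an arbitrary three-variable potential need not factor into pairwise terms.

```python
from itertools import product

PAIR_EDGES = [(1, 2), (1, 4), (2, 3), (2, 4), (3, 4)]

def psi_pair(i, j, xi, xj):
    # Hypothetical edge-specific potential that favors agreement.
    if xi == xj:
        return 1.0 + 0.5 * (i + j)
    return 1.0

def pairwise_score(x1, x2, x3, x4):
    x = {1: x1, 2: x2, 3: x3, 4: x4}
    result = 1.0
    for i, j in PAIR_EDGES:
        result *= psi_pair(i, j, x[i], x[j])
    return result

# Absorb each pairwise potential into exactly one max clique.
def psi_124(x1, x2, x4):
    return psi_pair(1, 2, x1, x2) * psi_pair(1, 4, x1, x4) * psi_pair(2, 4, x2, x4)

def psi_234(x2, x3, x4):
    return psi_pair(2, 3, x2, x3) * psi_pair(3, 4, x3, x4)

def clique_score(x1, x2, x3, x4):
    return psi_124(x1, x2, x4) * psi_234(x2, x3, x4)

def normalize(score):
    states = list(product([0, 1], repeat=4))
    z = sum(score(*s) for s in states)
    return {s: score(*s) / z for s in states}

def table_sizes(k):
    """Number of table entries for k-state variables."""
    return {"joint_4d": k ** 4, "max_cliques": 2 * k ** 3, "pairwise": 5 * k ** 2}

P_pair, P_clique = normalize(pairwise_score), normalize(clique_score)
print(max(abs(P_pair[s] - P_clique[s]) for s in P_pair))
print(table_sizes(10))
```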
