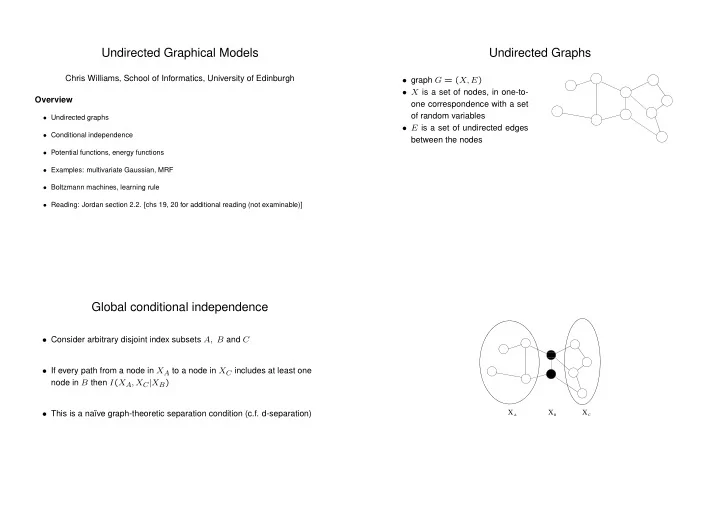

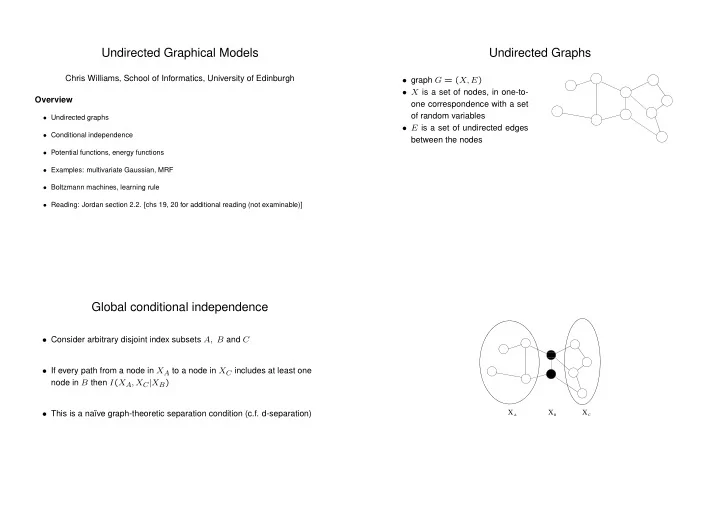

Undirected Graphical Models

Chris Williams, School of Informatics, University of Edinburgh

Overview

• Undirected graphs
• Conditional independence
• Potential functions, energy functions
• Examples: multivariate Gaussian, MRF
• Boltzmann machines, learning rule
• Reading: Jordan section 2.2. [chs 19, 20 for additional reading (not examinable)]

Undirected Graphs

• Graph $G = (X, E)$
• $X$ is a set of nodes, in one-to-one correspondence with a set of random variables
• $E$ is a set of undirected edges between the nodes

Global conditional independence

• Consider arbitrary disjoint index subsets $A$, $B$ and $C$
• If every path from a node in $X_A$ to a node in $X_C$ includes at least one node in $X_B$, then $I(X_A, X_C \mid X_B)$, i.e. $X_A$ is conditionally independent of $X_C$ given $X_B$
• This is a naïve graph-theoretic separation condition (cf. d-separation for directed graphs)

[Figure: node sets $X_A$, $X_B$, $X_C$, with $X_B$ separating $X_A$ from $X_C$]
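The separation condition is a plain reachability test: delete the nodes in $X_B$ and check whether any node of $X_C$ can still be reached from $X_A$. A minimal sketch, assuming the graph is stored as a dict of neighbour sets (a hypothetical representation, not from the slides) and using the six-node example graph whose cliques $\{x_1,x_2\}, \{x_1,x_3\}, \{x_3,x_5\}, \{x_2,x_5,x_6\}, \{x_2,x_4\}$ appear on the Graphs and Cliques slide:

```python
from collections import deque

def separates(adj, A, B, C):
    """Return True if every path from a node in A to a node in C passes through B.

    adj: dict mapping each node to the set of its neighbours (undirected graph).
    A, B, C: disjoint sets of nodes.
    """
    blocked = set(B)
    # Breadth-first search from A that is never allowed to enter B.
    frontier = deque(a for a in A if a not in blocked)
    seen = set(frontier)
    while frontier:
        u = frontier.popleft()
        if u in C:
            return False          # found a path from A to C that avoids B
        for v in adj[u]:
            if v not in blocked and v not in seen:
                seen.add(v)
                frontier.append(v)
    return True

# Six-node example graph: edges read off the clique list.
edges = [(1, 2), (1, 3), (3, 5), (2, 5), (2, 6), (5, 6), (2, 4)]
adj = {n: set() for n in range(1, 7)}
for u, v in edges:
    adj[u].add(v)
    adj[v].add(u)
```

For example, `separates(adj, {1}, {2, 3}, {5, 6})` is `True`, so $I(X_1, X_{\{5,6\}} \mid X_{\{2,3\}})$ holds, while `separates(adj, {1}, {2}, {5})` is `False` because of the path $x_1 - x_3 - x_5$.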

Graphs and Cliques

• For directed graphs, $P(X) = \prod_i P(X_i \mid \mathrm{Pa}_i)$ gives a notion of locality
• For undirected graphs, locality depends on the notion of cliques
• A clique of a graph is a fully-connected set of nodes
• A maximal clique is a clique which cannot be extended to include additional nodes without losing the property of being fully connected

[Figure: undirected graph on $X_1, \dots, X_6$ with maximal cliques $\{X_1,X_2\}$, $\{X_1,X_3\}$, $\{X_3,X_5\}$, $\{X_2,X_5,X_6\}$, $\{X_2,X_4\}$, so that $P(x) = \psi(x_1,x_2)\,\psi(x_1,x_3)\,\psi(x_3,x_5)\,\psi(x_2,x_5,x_6)\,\psi(x_2,x_4)/Z$]

Parameterization

• Conditional independence properties of undirected graphs imply a representation of the joint probability as a product of local functions defined on the maximal cliques $\mathcal{C}$ of the graph:

$$p(x) = \frac{1}{Z} \prod_{C \in \mathcal{C}} \psi_{X_C}(x_C), \qquad Z = \sum_{x} \prod_{C \in \mathcal{C}} \psi_{X_C}(x_C)$$

(for continuous $x$ the sum is an integral)

• Each $\psi_{X_C}(x_C)$ is a strictly positive, real-valued function, otherwise arbitrary
• $Z$ is called the partition function
• The equivalence of conditional independence and the clique factorization form is the Hammersley-Clifford theorem
• Potential functions are in general neither conditional nor marginal probabilities
• Natural interpretations: agreement, constraint, energy
• A potential function favours certain local configurations by assigning them larger values
• Global configurations that have high probability are, roughly speaking, those that satisfy as many of the favoured local configurations as possible
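For small discrete models the partition function can be computed by brute force, which makes the "agreement" reading of potentials concrete. A minimal sketch for the six-node example graph with binary variables; the potential `psi` (2.0 when all variables in a clique agree, 1.0 otherwise) is a hypothetical choice for illustration only:

```python
import itertools
import math

# Maximal cliques of the six-node example graph (variables indexed 1..6).
cliques = [(1, 2), (1, 3), (3, 5), (2, 5, 6), (2, 4)]

def psi(values):
    """Hypothetical potential: favours cliques whose variables all agree."""
    return 2.0 if len(set(values)) == 1 else 1.0

def unnormalised(x):
    """Product of clique potentials for a configuration x (dict: variable -> 0/1)."""
    return math.prod(psi([x[i] for i in c]) for c in cliques)

# Enumerate all 2^6 joint configurations.
configs = [dict(zip(range(1, 7), bits)) for bits in itertools.product([0, 1], repeat=6)]
Z = sum(unnormalised(x) for x in configs)     # partition function
p = {tuple(x.values()): unnormalised(x) / Z for x in configs}
```

The most probable global configurations are the two that satisfy every favoured local configuration at once (all zeros and all ones). The enumeration costs $2^n$ terms, which is why $Z$ is rarely computed this way in practice.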

Energy functions

• Enforce positivity by defining $\psi_{X_C}(x_C) = \exp\{-H_{X_C}(x_C)\}$, so that

$$p(x) = \frac{1}{Z} \prod_{C \in \mathcal{C}} \psi_{X_C}(x_C) = \frac{1}{Z} \prod_{C \in \mathcal{C}} \exp\{-H_{X_C}(x_C)\}$$

• The negative sign is conventional (high probability, low energy)
• Energy $H(x) = \sum_{C \in \mathcal{C}} H_{X_C}(x_C)$
• Boltzmann distribution $p(x) = \frac{1}{Z} \exp\{-H(x)\}$

Local Markov Property

• Denote all nodes by $V$
• For a vertex $a$, let $\partial a$ denote the boundary of $a$, i.e. the set of vertices in $V \setminus a$ that are neighbours of $a$
• Local Markov property: for any vertex $a$, the conditional distribution of $X_a$ given $X_{V \setminus a}$ depends only on $X_{\partial a}$

Example I: Multivariate Gaussian

$$p(x) \propto \exp\{-\tfrac{1}{2} x^T \Sigma^{-1} x\}$$

• It is the zeros in $\Sigma^{-1}$ that define the missing edges in the graph and hence the conditional independence structure

[Figure: the six-node example graph, $P(x) = \psi(x_1,x_2)\,\psi(x_1,x_3)\,\psi(x_3,x_5)\,\psi(x_2,x_5,x_6)\,\psi(x_2,x_4)/Z$]
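The Gaussian claim can be checked numerically. For a Gaussian, the covariance of $x_A$ given all other variables equals $(\Lambda_{AA})^{-1}$ with $\Lambda = \Sigma^{-1}$, so a zero $\Lambda_{ij}$ means $x_i$ and $x_j$ are uncorrelated (hence independent) given the rest, even though $\Sigma_{ij}$ itself is non-zero. A minimal sketch with NumPy; the numerical entries of the precision matrix are arbitrary assumptions chosen only to respect the example graph and keep $\Lambda$ positive definite:

```python
import numpy as np

# Edges of the six-node example graph, re-indexed to 0..5 (x1 -> 0, ..., x6 -> 5).
edges = [(0, 1), (0, 2), (2, 4), (1, 4), (1, 5), (4, 5), (1, 3)]

# Precision matrix Lambda = Sigma^{-1}: non-zero only on the diagonal and on edges.
# The large diagonal makes Lambda diagonally dominant, hence positive definite.
Lam = 2.0 * np.eye(6)
for i, j in edges:
    Lam[i, j] = Lam[j, i] = -0.2
Sigma = np.linalg.inv(Lam)

def conditional_cov(Sigma, A):
    """Covariance of x_A given all remaining variables (Schur complement of Sigma)."""
    B = [k for k in range(Sigma.shape[0]) if k not in A]
    S_AA = Sigma[np.ix_(A, A)]
    S_AB = Sigma[np.ix_(A, B)]
    S_BB = Sigma[np.ix_(B, B)]
    return S_AA - S_AB @ np.linalg.solve(S_BB, S_AB.T)

# x4 and x6 (indices 3 and 5) share no edge: marginally correlated, conditionally not.
print(Sigma[3, 5], conditional_cov(Sigma, [3, 5])[0, 1])
```

The same computation for an edge such as $x_2 - x_4$ (indices 1 and 3) gives a non-zero conditional covariance, matching the graph.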

Example II: Markov Random Field

• Discrete random variables
• Ising model in statistical physics (spins up/down)
• MRF models are used in image analysis, e.g. segmentation of regions. Define energies such that blocks of the same labels are preferred (Geman and Geman, 1984)

Boltzmann machines

• Hinton and Sejnowski, 1983
• Binary units $x_i = \pm 1$

$$p(x) = \frac{1}{Z} \exp\Big\{\frac{1}{2} \sum_{ij} w_{ij} x_i x_j\Big\}$$

• $w_{ij} = w_{ji}$ and $w_{ii} = 0$
• Set $x_0 = 1$ (bias unit)
• $\frac{1}{2} \sum_{ij} w_{ij} x_i x_j = \sum_{i<j} w_{ij} x_i x_j$
• Can have hidden units
• The potential function is not an arbitrary function of the cliques, but is based only on pairwise links (can generalize)
• $P(X_i = 1 \mid \text{rest}) = \sigma(2 h_i)$, where $h_i = \sum_j w_{ij} x_j$ and $\sigma(z) = 1/(1 + e^{-z})$ is the logistic sigmoid

[Figure: network of hidden units and output (visible) units]
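The conditional $P(X_i = 1 \mid \text{rest}) = \sigma(2h_i)$ follows because flipping $x_i$ from $-1$ to $+1$ changes the exponent by $2h_i$, and $Z$ cancels in the ratio. A minimal sketch with NumPy that checks this by brute force on a small machine; the random weights and the size `n = 4` (unit 0 being the bias unit) are arbitrary assumptions:

```python
import itertools
import numpy as np

rng = np.random.default_rng(0)
n = 4                                   # unit 0 is the bias unit, fixed at x_0 = 1
W = rng.normal(size=(n, n))
W = (W + W.T) / 2                       # symmetric weights: w_ij = w_ji
np.fill_diagonal(W, 0.0)                # no self-connections: w_ii = 0

def unnorm(x):
    """exp{ 1/2 sum_ij w_ij x_i x_j } for a +/-1 state vector x (Z omitted)."""
    return np.exp(0.5 * x @ W @ x)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def exact_conditional(x, i):
    """P(X_i = 1 | rest) from the two states that differ only at unit i."""
    up, down = x.copy(), x.copy()
    up[i], down[i] = 1.0, -1.0
    return unnorm(up) / (unnorm(up) + unnorm(down))

# Compare against sigma(2 h_i), h_i = sum_j w_ij x_j, for every state and unit.
max_err = 0.0
for rest in itertools.product([-1.0, 1.0], repeat=n - 1):
    x = np.array((1.0,) + rest)         # bias unit first, then units 1..n-1
    for i in range(1, n):
        h = W[i] @ x                    # w_ii = 0, so x_i itself does not enter h_i
        max_err = max(max_err, abs(exact_conditional(x, i) - sigmoid(2.0 * h)))
print(max_err)
```

Because the bias unit is clamped at $x_0 = 1$, the bias weight $w_{i0}$ enters $h_i$ like any other weight, with no separate bias term needed.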

Boltzmann machine learning rule

Denote visible units by $x$ and hidden units by $y$:

$$p(x, y) = \frac{1}{Z} \exp\Big\{\sum_k \theta_k \phi_k(x, y)\Big\}$$

This is the general form of a log-linear model.

• Features $\phi_k(x, y)$ are the pairwise potentials for a Boltzmann machine
• Parameters $\theta_k$ correspond to weights in the Boltzmann machine

$$p(x) = \frac{1}{Z} \sum_y \exp\Big\{\sum_k \theta_k \phi_k(x, y)\Big\}$$

$$\log p(x) = \log \sum_y \exp\Big\{\sum_k \theta_k \phi_k(x, y)\Big\} - \log Z$$

$$\frac{\partial \log p(x)}{\partial \theta_l} = \sum_y \phi_l(x, y)\, p(y \mid x) - \sum_{x, y} \phi_l(x, y)\, p(x, y) \stackrel{\text{def}}{=} \langle \phi_l(x, y) \rangle^+ - \langle \phi_l(x, y) \rangle^-$$

• $+$ denotes the clamped phase (with $x$ clamped on the visible units), $-$ denotes the free-running phase (all units unclamped)
• Learning stops when the statistics match in both phases
• The statistics could be computed exactly (using the junction tree algorithm), but often this is intractable; use stochastic sampling instead
• The Boltzmann machine learning rule is gradient based; one can also use Iterative Scaling algorithms (see Jordan ch 20) to update the $\theta_k$'s

Gibbs sampler

• Loop $T$ times: for each unit $i$ to be sampled, compute $h_i$ and sample from $P(X_i \mid \text{rest})$
• This is a Markov chain Monte Carlo (MCMC) method. Under general conditions it will converge to the correct distribution as $T \to \infty$
• Boltzmann machine learning can be slow due to the need to use MCMC techniques: the gradient is the difference of two noisy estimates
• Hinton (1999, 2000) has introduced the Products of Experts (PoE) architecture, which may get round some of these difficulties
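The Gibbs sampler loop and the two-phase learning rule can be sketched together. To keep the clamped phase trivial, this sketch assumes a fully visible Boltzmann machine (no hidden units $y$), so $\langle x_i x_j \rangle^+$ is just the data average and only the free-running phase needs MCMC. The 10% burn-in, learning rate and function names are hypothetical choices, not from the slides:

```python
import numpy as np

def gibbs_sample(W, n_sweeps, rng, x=None):
    """Gibbs sampler for a Boltzmann machine with +/-1 units.

    Unit 0 is the bias unit and stays fixed at +1; units 1..n-1 are resampled in
    turn from P(X_i = 1 | rest) = sigma(2 h_i). Returns the state after each sweep.
    """
    n = W.shape[0]
    if x is None:
        x = rng.choice([-1.0, 1.0], size=n)    # random initial state
    x = np.array(x, dtype=float)                # copy so the caller's array is untouched
    x[0] = 1.0
    samples = np.empty((n_sweeps, n))
    for t in range(n_sweeps):                   # "Loop T times"
        for i in range(1, n):                   # "for each unit i to be sampled"
            h = W[i] @ x                        # h_i = sum_j w_ij x_j (w_ii = 0)
            p_up = 1.0 / (1.0 + np.exp(-2.0 * h))
            x[i] = 1.0 if rng.random() < p_up else -1.0
        samples[t] = x
    return samples

def learning_step(W, data, n_sweeps, lr, rng):
    """One gradient-ascent step on the average log-likelihood of a fully visible
    Boltzmann machine (no hidden units, so the clamped phase needs no sampling).

    data: array of shape (N, n) of +/-1 states whose column 0 is all ones (bias).
    """
    pos = data.T @ data / len(data)                         # clamped <x_i x_j>+
    free = gibbs_sample(W, n_sweeps, rng)[n_sweeps // 10:]  # drop 10% as burn-in
    neg = free.T @ free / len(free)                         # free-running <x_i x_j>-
    grad = pos - neg
    np.fill_diagonal(grad, 0.0)                             # keep w_ii = 0
    return W + lr * grad
```

Since `grad` is symmetric, the update preserves $w_{ij} = w_{ji}$, and it vanishes exactly when the two phases' statistics match. With hidden units, the clamped phase would itself need a Gibbs run over $y$ with $x$ held fixed, which is where the "difference of two noisy estimates" comes from.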
