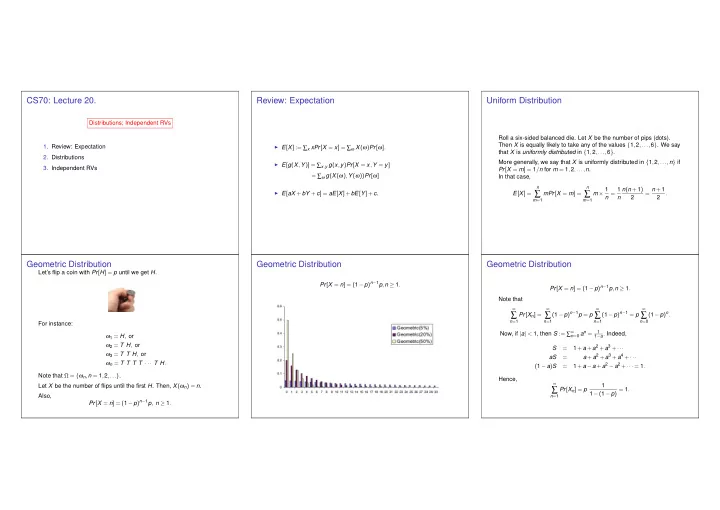

CS70: Lecture 20.

Distributions; Independent RVs

1. Review: Expectation
2. Distributions
3. Independent RVs

Review: Expectation

◮ $E[X] := \sum_x x \Pr[X = x] = \sum_\omega X(\omega) \Pr[\omega]$.

◮ $E[g(X, Y)] = \sum_{x, y} g(x, y) \Pr[X = x, Y = y] = \sum_\omega g(X(\omega), Y(\omega)) \Pr[\omega]$.

◮ $E[aX + bY + c] = aE[X] + bE[Y] + c$.

Uniform Distribution

Roll a six-sided balanced die. Let $X$ be the number of pips (dots). Then $X$ is equally likely to take any of the values $\{1, 2, \ldots, 6\}$. We say that $X$ is uniformly distributed in $\{1, 2, \ldots, 6\}$.

More generally, we say that $X$ is uniformly distributed in $\{1, 2, \ldots, n\}$ if $\Pr[X = m] = 1/n$ for $m = 1, 2, \ldots, n$. In that case,

$$E[X] = \sum_{m=1}^{n} m \Pr[X = m] = \sum_{m=1}^{n} m \times \frac{1}{n} = \frac{1}{n} \cdot \frac{n(n+1)}{2} = \frac{n+1}{2}.$$

Geometric Distribution

Let's flip a coin with $\Pr[H] = p$ until we get $H$. For instance:

$\omega_1 = H$, or
$\omega_2 = TH$, or
$\omega_3 = TTH$, or
$\omega_n = TTT \cdots TH$.

Note that $\Omega = \{\omega_n, n = 1, 2, \ldots\}$.

Let $X$ be the number of flips until the first $H$. Then, $X(\omega_n) = n$. Also,

$$\Pr[X = n] = (1 - p)^{n-1} p, \quad n \ge 1.$$

Note that

$$\sum_{n=1}^{\infty} \Pr[X = n] = \sum_{n=1}^{\infty} (1 - p)^{n-1} p = p \sum_{n=1}^{\infty} (1 - p)^{n-1} = p \sum_{n=0}^{\infty} (1 - p)^{n}.$$

Now, if $|a| < 1$, then $S := \sum_{n=0}^{\infty} a^n = \frac{1}{1 - a}$. Indeed,

$$\begin{aligned}
S &= 1 + a + a^2 + a^3 + \cdots \\
aS &= a + a^2 + a^3 + a^4 + \cdots \\
(1 - a)S &= 1 + a - a + a^2 - a^2 + \cdots = 1.
\end{aligned}$$

Hence,

$$\sum_{n=1}^{\infty} \Pr[X = n] = p \cdot \frac{1}{1 - (1 - p)} = 1.$$
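The two computations above (the mean of a uniform random variable and the fact that the geometric probabilities sum to 1) can be checked numerically. The following is a minimal sketch, not part of the lecture; the function names `uniform_mean`, `geometric_pmf`, and `geometric_partial_sum` are made up for illustration, and the infinite sum is truncated after a finite number of terms.

```python
from fractions import Fraction


def uniform_mean(n):
    """E[X] for X uniform on {1, ..., n}, straight from the definition sum_m m * (1/n)."""
    return sum(Fraction(m, n) for m in range(1, n + 1))


def geometric_pmf(n, p):
    """Pr[X = n] = (1 - p)^(n - 1) * p for n >= 1."""
    return (1 - p) ** (n - 1) * p


def geometric_partial_sum(p, terms):
    """Sum of Pr[X = n] for n = 1, ..., terms (a truncation of the infinite series)."""
    return sum(geometric_pmf(n, p) for n in range(1, terms + 1))


if __name__ == "__main__":
    print(uniform_mean(6))                   # 7/2, i.e. (n + 1) / 2 with n = 6
    print(geometric_partial_sum(0.3, 200))   # very close to 1
```

Exact fractions are used for the uniform case so the result can be compared with $(n+1)/2$ without rounding; the geometric case uses floats because only closeness to 1 matters there.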

Geometric Distribution: Expectation

$X =_D G(p)$, i.e., $\Pr[X = n] = (1 - p)^{n-1} p$, $n \ge 1$.

One has

$$E[X] = \sum_{n=1}^{\infty} n \Pr[X = n] = \sum_{n=1}^{\infty} n (1 - p)^{n-1} p.$$

Thus,

$$\begin{aligned}
E[X] &= p + 2(1 - p)p + 3(1 - p)^2 p + 4(1 - p)^3 p + \cdots \\
(1 - p)E[X] &= (1 - p)p + 2(1 - p)^2 p + 3(1 - p)^3 p + \cdots \\
pE[X] &= p + (1 - p)p + (1 - p)^2 p + (1 - p)^3 p + \cdots \quad \text{(by subtracting the previous two identities)} \\
&= \sum_{n=1}^{\infty} \Pr[X = n] = 1.
\end{aligned}$$

Hence, $E[X] = \frac{1}{p}$.

Coupon Collectors Problem.

Experiment: Get coupons at random from $n$ until collect all $n$ coupons.

Outcomes: $\{123145\ldots, 56765\ldots\}$

Random Variable: $X$ - length of outcome.

Before: $\Pr[X \ge n \ln 2n] \le \frac{1}{2}$.

Today: $E[X]$?

Time to collect coupons

$X$ - time to get $n$ coupons.

$X_1$ - time to get first coupon. Note: $X_1 = 1$. $E[X_1] = 1$.

$X_2$ - time to get second coupon after getting first.

$$\Pr[\text{"get second coupon"} \mid \text{"got first coupon"}] = \frac{n-1}{n}$$

$E[X_2]$? Geometric!!! $\Rightarrow E[X_2] = \frac{1}{p} = \frac{1}{(n-1)/n} = \frac{n}{n-1}$.

$$\Pr[\text{"getting } i\text{th coupon"} \mid \text{"got first } i-1 \text{ coupons"}] = \frac{n - (i - 1)}{n} = \frac{n - i + 1}{n}$$

$$E[X_i] = \frac{1}{p} = \frac{n}{n - i + 1}, \quad i = 1, 2, \ldots, n.$$

$$\begin{aligned}
E[X] = E[X_1] + \cdots + E[X_n] &= \frac{n}{n} + \frac{n}{n-1} + \frac{n}{n-2} + \cdots + \frac{n}{1} \\
&= n\left(1 + \frac{1}{2} + \cdots + \frac{1}{n}\right) =: nH(n) \approx n(\ln n + \gamma).
\end{aligned}$$

Review: Harmonic sum

$$H(n) = 1 + \frac{1}{2} + \cdots + \frac{1}{n} \approx \int_1^n \frac{1}{x}\,dx = \ln(n).$$

A good approximation is

$$H(n) \approx \ln(n) + \gamma \quad \text{where } \gamma \approx 0.58 \text{ (Euler-Mascheroni constant).}$$

Harmonic sum: Paradox

Consider this stack of cards (no glue!).

If each card has length 2, the stack can extend $H(n)$ to the right of the table. As $n$ increases, you can go as far as you want!
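The coupon collector result $E[X] = nH(n) \approx n(\ln n + \gamma)$ can be sanity-checked with a small script. This is only a sketch under stated assumptions: the helper names (`harmonic`, `expected_collection_time`, `approx_collection_time`, `simulate_collection_time`) are hypothetical, coupons are modeled as uniform draws from `range(n)`, and the value of $\gamma$ is hard-coded to more digits than the 0.58 quoted above.

```python
import math
import random
from fractions import Fraction

# Euler-Mascheroni constant (the slides round this to 0.58).
EULER_GAMMA = 0.5772156649015329


def harmonic(n):
    """H(n) = 1 + 1/2 + ... + 1/n as an exact fraction."""
    return sum(Fraction(1, k) for k in range(1, n + 1))


def expected_collection_time(n):
    """Exact E[X] = n * H(n), the sum of the geometric means n / (n - i + 1)."""
    return n * harmonic(n)


def approx_collection_time(n):
    """The approximation n * (ln n + gamma)."""
    return n * (math.log(n) + EULER_GAMMA)


def simulate_collection_time(n, rng):
    """Draw uniform coupons until all n distinct ones are seen; return the number of draws."""
    seen = set()
    draws = 0
    while len(seen) < n:
        seen.add(rng.randrange(n))
        draws += 1
    return draws


if __name__ == "__main__":
    n = 10
    rng = random.Random(0)  # fixed seed, chosen arbitrarily for repeatability
    trials = 2000
    empirical = sum(simulate_collection_time(n, rng) for _ in range(trials)) / trials
    print(float(expected_collection_time(n)), approx_collection_time(n), empirical)
```

The exact value and the approximation differ by roughly $1/2$ for moderate $n$, since $H(n) - \ln n - \gamma$ is about $1/(2n)$; the simulated average should land near both.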

Stacking

The cards have width 2. Induction shows that the center of gravity after $n$ cards is $H(n)$ away from the right-most edge.

Geometric Distribution: Memoryless

Let $X$ be $G(p)$. Then, for $n \ge 0$,

$$\Pr[X > n] = \Pr[\text{first } n \text{ flips are } T] = (1 - p)^n.$$

Theorem

$$\Pr[X > n + m \mid X > n] = \Pr[X > m], \quad m, n \ge 0.$$

Proof:

$$\begin{aligned}
\Pr[X > n + m \mid X > n] &= \frac{\Pr[X > n + m \text{ and } X > n]}{\Pr[X > n]} \\
&= \frac{\Pr[X > n + m]}{\Pr[X > n]} \\
&= \frac{(1 - p)^{n+m}}{(1 - p)^n} = (1 - p)^m \\
&= \Pr[X > m].
\end{aligned}$$

Geometric Distribution: Memoryless - Interpretation

$$\Pr[X > n + m \mid X > n] = \Pr[X > m], \quad m, n \ge 0.$$

Let $B$ be the event that the first $n$ flips are $T$ and $A$ the event that the next $m$ flips are $T$. The flips are independent, so

$$\Pr[X > n + m \mid X > n] = \Pr[A \mid B] = \Pr[A] = \Pr[X > m].$$

The coin is memoryless, therefore, so is $X$.

Geometric Distribution: Yet another look

Theorem: For a r.v. $X$ that takes the values $\{0, 1, 2, \ldots\}$, one has

$$E[X] = \sum_{i=1}^{\infty} \Pr[X \ge i].$$

[See later for a proof.]

If $X = G(p)$, then $\Pr[X \ge i] = \Pr[X > i - 1] = (1 - p)^{i-1}$. Hence,

$$E[X] = \sum_{i=1}^{\infty} (1 - p)^{i-1} = \sum_{i=0}^{\infty} (1 - p)^{i} = \frac{1}{1 - (1 - p)} = \frac{1}{p}.$$

Expected Value of Integer RV

Theorem: For a r.v. $X$ that takes values in $\{0, 1, 2, \ldots\}$, one has

$$E[X] = \sum_{i=1}^{\infty} \Pr[X \ge i].$$

Proof: One has

$$\begin{aligned}
E[X] &= \sum_{i=1}^{\infty} i \times \Pr[X = i] \\
&= \sum_{i=1}^{\infty} i \,\{\Pr[X \ge i] - \Pr[X \ge i + 1]\} \\
&= \sum_{i=1}^{\infty} \{i \times \Pr[X \ge i] - i \times \Pr[X \ge i + 1]\} \\
&= \sum_{i=1}^{\infty} \{i \times \Pr[X \ge i] - (i - 1) \times \Pr[X \ge i]\} \\
&= \sum_{i=1}^{\infty} \Pr[X \ge i].
\end{aligned}$$

In pictures: stack the tails $\Pr[X \ge 1]$, $\Pr[X \ge 2]$, $\Pr[X \ge 3]$, $\ldots$ over the values $0, 1, 2, 3, \ldots$ The probability mass at $i$ is counted $i$ times, which is the same as $\sum_{i=1}^{\infty} i \times \Pr[X = i]$.
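Both the memoryless property and the tail-sum formula for $E[X]$ can be checked numerically for a geometric random variable. The sketch below is illustrative only: the function names are invented, and every infinite sum is truncated after `terms` terms, which is harmless as long as $(1-p)^{\text{terms}}$ is negligible.

```python
def geometric_pmf(n, p):
    """Pr[X = n] = (1 - p)^(n - 1) * p for n >= 1."""
    return (1 - p) ** (n - 1) * p


def tail_from_pmf(n, p, terms=2000):
    """Pr[X > n], computed by summing the PMF over n + 1, ..., n + terms (truncated)."""
    return sum(geometric_pmf(k, p) for k in range(n + 1, n + 1 + terms))


def conditional_tail(n, m, p):
    """Pr[X > n + m | X > n] = Pr[X > n + m] / Pr[X > n]."""
    return tail_from_pmf(n + m, p) / tail_from_pmf(n, p)


def expectation_by_tail_sum(p, terms=2000):
    """E[X] as the sum over i >= 1 of Pr[X >= i] = (1 - p)^(i - 1), truncated."""
    return sum((1 - p) ** (i - 1) for i in range(1, terms + 1))


def expectation_by_definition(p, terms=2000):
    """E[X] as the sum over n >= 1 of n * Pr[X = n], truncated."""
    return sum(n * geometric_pmf(n, p) for n in range(1, terms + 1))


if __name__ == "__main__":
    p = 0.25
    # Memoryless: conditioning on X > 3 should not change the chance of 4 more tails.
    print(conditional_tail(3, 4, p), (1 - p) ** 4)
    # Both ways of computing E[X] should agree with 1 / p.
    print(expectation_by_tail_sum(p), expectation_by_definition(p), 1 / p)
```

The conditional tail is computed from the PMF rather than from the closed form $(1-p)^n$, so the comparison with $(1-p)^m$ is a genuine check and not a tautology.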

Poisson

Experiment: flip a coin $n$ times. The coin is such that $\Pr[H] = \lambda / n$.

Random Variable: $X$ - number of heads. Thus, $X = B(n, \lambda / n)$.

Poisson Distribution is distribution of $X$ "for large $n$."

We expect $X \ll n$. For $m \ll n$ one has

$$\begin{aligned}
\Pr[X = m] &= \binom{n}{m} p^m (1 - p)^{n-m}, \quad \text{with } p = \lambda / n \\
&= \frac{n(n-1) \cdots (n-m+1)}{m!} \left(\frac{\lambda}{n}\right)^m \left(1 - \frac{\lambda}{n}\right)^{n-m} \\
&= \frac{n(n-1) \cdots (n-m+1)}{n^m} \, \frac{\lambda^m}{m!} \left(1 - \frac{\lambda}{n}\right)^{n-m} \\
&\approx^{(1)} \frac{\lambda^m}{m!} \left(1 - \frac{\lambda}{n}\right)^{n-m} \approx \frac{\lambda^m}{m!} \left(1 - \frac{\lambda}{n}\right)^{n} \approx^{(2)} \frac{\lambda^m}{m!} e^{-\lambda}.
\end{aligned}$$

For (1) we used $m \ll n$; for (2) we used $(1 - a/n)^n \approx e^{-a}$.

Poisson Distribution: Definition and Mean

Definition: Poisson Distribution with parameter $\lambda > 0$

$$X = P(\lambda) \Leftrightarrow \Pr[X = m] = \frac{\lambda^m}{m!} e^{-\lambda}, \quad m \ge 0.$$

Fact: $E[X] = \lambda$.

Proof:

$$\begin{aligned}
E[X] &= \sum_{m=1}^{\infty} m \times \frac{\lambda^m}{m!} e^{-\lambda} = e^{-\lambda} \sum_{m=1}^{\infty} \frac{\lambda^m}{(m-1)!} \\
&= e^{-\lambda} \sum_{m=0}^{\infty} \frac{\lambda^{m+1}}{m!} = e^{-\lambda} \lambda \sum_{m=0}^{\infty} \frac{\lambda^m}{m!} \\
&= e^{-\lambda} \lambda e^{\lambda} = \lambda.
\end{aligned}$$

Simeon Poisson

The Poisson distribution is named after: [picture of Simeon Poisson]

Equal Time: B. Geometric

The geometric distribution is named after: [picture]

Prof. Walrand could not find a picture of D. Binomial, sorry.

Review: Distributions

◮ $U[1, \ldots, n]$: $\Pr[X = m] = \frac{1}{n}$, $m = 1, \ldots, n$; $E[X] = \frac{n+1}{2}$;

◮ $B(n, p)$: $\Pr[X = m] = \binom{n}{m} p^m (1 - p)^{n-m}$, $m = 0, \ldots, n$; $E[X] = np$;

◮ $G(p)$: $\Pr[X = n] = (1 - p)^{n-1} p$, $n = 1, 2, \ldots$; $E[X] = \frac{1}{p}$;

◮ $P(\lambda)$: $\Pr[X = n] = \frac{\lambda^n}{n!} e^{-\lambda}$, $n \ge 0$; $E[X] = \lambda$.
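The claim that $B(n, \lambda/n)$ approaches $P(\lambda)$ for large $n$, and the fact $E[X] = \lambda$, can be illustrated numerically. This is a rough sketch rather than anything from the lecture: the function names are hypothetical, the values $\lambda = 2$ and $n = 10000$ are arbitrary choices, and the Poisson mean is computed from a truncated sum. It relies on `math.comb`, which needs Python 3.8 or later.

```python
import math


def binomial_pmf(n, p, m):
    """Pr[X = m] for X = B(n, p): C(n, m) * p^m * (1 - p)^(n - m)."""
    return math.comb(n, m) * p ** m * (1 - p) ** (n - m)


def poisson_pmf(lam, m):
    """Pr[X = m] for X = P(lam): lam^m / m! * e^(-lam)."""
    return lam ** m / math.factorial(m) * math.exp(-lam)


def poisson_mean(lam, terms=60):
    """E[X] = sum over m of m * Pr[X = m], truncated after `terms` terms."""
    return sum(m * poisson_pmf(lam, m) for m in range(terms))


def max_pmf_gap(n, lam, m_max=10):
    """Largest |B(n, lam/n) - P(lam)| over m = 0, ..., m_max."""
    return max(
        abs(binomial_pmf(n, lam / n, m) - poisson_pmf(lam, m))
        for m in range(m_max + 1)
    )


if __name__ == "__main__":
    lam = 2.0
    for n in (10, 100, 10000):
        # The gap should shrink as n grows.
        print(n, max_pmf_gap(n, lam))
    print(poisson_mean(lam))  # close to lam
```

Comparing the gap across several values of $n$ makes the "for large $n$" qualifier concrete: the approximation is visibly poor at $n = 10$ and very tight at $n = 10000$.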
