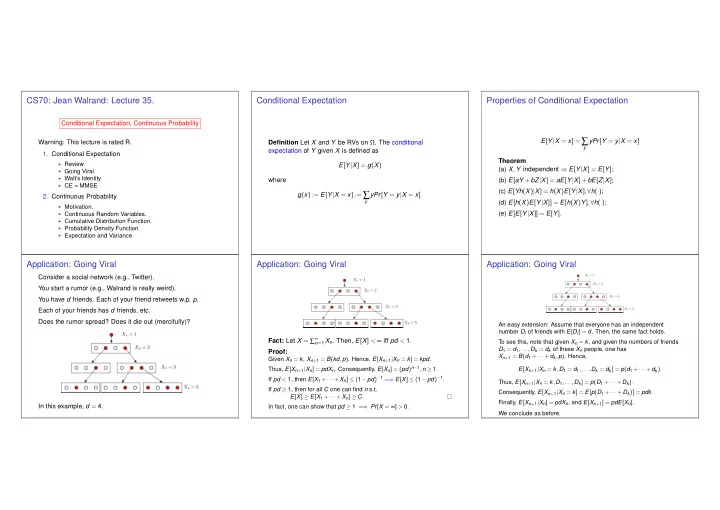

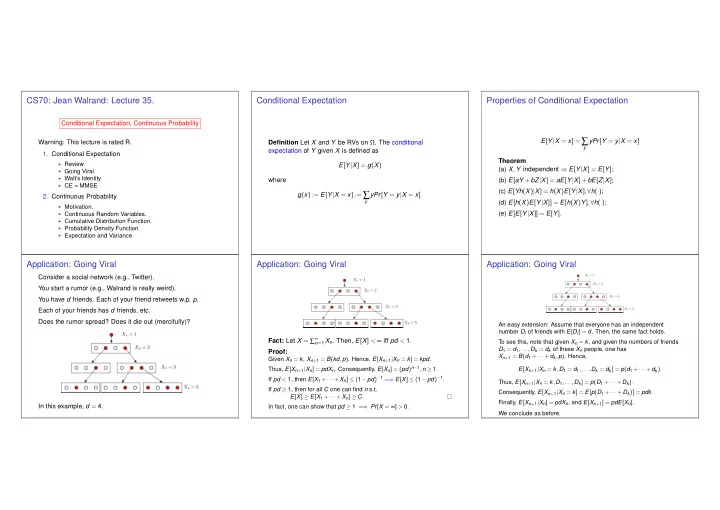

CS70: Jean Walrand: Lecture 35. Conditional Expectation, Continuous Probability

Warning: This lecture is rated R.

1. Conditional Expectation
   - Review
   - Going Viral
   - Wald's Identity
   - CE = MMSE
2. Continuous Probability
   - Motivation
   - Continuous Random Variables
   - Cumulative Distribution Function
   - Probability Density Function
   - Expectation and Variance

Conditional Expectation

Definition: Let $X$ and $Y$ be RVs on $\Omega$. The conditional expectation of $Y$ given $X$ is defined as

$$E[Y \mid X] = g(X), \quad \text{where} \quad g(x) := E[Y \mid X = x] := \sum_y y \Pr[Y = y \mid X = x].$$

Properties of Conditional Expectation

Theorem:

- (a) $X, Y$ independent $\Rightarrow E[Y \mid X] = E[Y]$;
- (b) $E[aY + bZ \mid X] = aE[Y \mid X] + bE[Z \mid X]$;
- (c) $E[Y h(X) \mid X] = h(X) E[Y \mid X], \ \forall h(\cdot)$;
- (d) $E[h(X) E[Y \mid X]] = E[h(X) Y], \ \forall h(\cdot)$;
- (e) $E[E[Y \mid X]] = E[Y]$.

Application: Going Viral

Consider a social network (e.g., Twitter). You start a rumor (e.g., "Walrand is really weird"). You have $d$ friends. Each of your friends retweets w.p. $p$. Each of your friends has $d$ friends, etc. Does the rumor spread? Does it die out (mercifully)? In this example, $d = 4$.

Let $X_n$ be the number of people in generation $n$ who tweet the rumor, with $X_1 = 1$ (you).

Fact: Let $X = \sum_{n=1}^{\infty} X_n$. Then, $E[X] < \infty$ iff $pd < 1$.

Proof: Given $X_n = k$, $X_{n+1} = B(kd, p)$. Hence, $E[X_{n+1} \mid X_n = k] = kpd$. Thus, $E[X_{n+1} \mid X_n] = pdX_n$, and taking expectations (property (e)) gives $E[X_{n+1}] = pd\,E[X_n]$. Consequently, $E[X_n] = (pd)^{n-1}$, $n \geq 1$.

If $pd < 1$, then $E[X_1 + \cdots + X_n] \leq (1 - pd)^{-1}$ for every $n$, so $E[X] \leq (1 - pd)^{-1}$.

If $pd \geq 1$, then for all $C$ one can find $n$ s.t. $E[X] \geq E[X_1 + \cdots + X_n] \geq C$.

In fact, one can show that $pd > 1 \Rightarrow \Pr[X = \infty] > 0$.

An easy extension: Assume that everyone has an independent number $D_i$ of friends with $E[D_i] = d$. Then, the same fact holds. To see this, note that given $X_n = k$, and given the numbers of friends $D_1 = d_1, \ldots, D_k = d_k$ of these $X_n$ people, one has $X_{n+1} = B(d_1 + \cdots + d_k, p)$. Hence,

$$E[X_{n+1} \mid X_n = k, D_1 = d_1, \ldots, D_k = d_k] = p(d_1 + \cdots + d_k).$$

Thus,

$$E[X_{n+1} \mid X_n = k, D_1, \ldots, D_k] = p(D_1 + \cdots + D_k).$$

Consequently,

$$E[X_{n+1} \mid X_n = k] = E[p(D_1 + \cdots + D_k)] = pdk.$$

Finally, $E[X_{n+1} \mid X_n] = pdX_n$, and $E[X_{n+1}] = pd\,E[X_n]$. We conclude as before.
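The Going Viral argument can be checked numerically. This is a minimal Monte Carlo sketch of the basic model, in which everyone has exactly $d$ friends. It assumes $X_n$ counts the people in generation $n$, with $X_1 = 1$, which is consistent with $E[X_n] = (pd)^{n-1}$. The function names `simulate_total`, `mean_total` and `expected_total` are hypothetical. The sketch compares the simulated mean of $X_1 + \cdots + X_n$ with the geometric sum $\sum_{m=0}^{n-1} (pd)^m$.

```python
import random


def simulate_total(p, d, generations, rng):
    """One sample of X_1 + ... + X_n, where n = generations.

    Assumes X_1 = 1 (the originator); given X_n = k, X_{n+1} ~ Binomial(k*d, p).
    """
    x_n = 1
    total = 1
    for _ in range(generations - 1):
        # Each of the k*d friends retweets independently with probability p.
        x_n = sum(1 for _ in range(x_n * d) if rng.random() < p)
        total += x_n
        if x_n == 0:  # the rumor has died out
            break
    return total


def mean_total(p, d, generations, trials, seed=0):
    """Monte Carlo estimate of E[X_1 + ... + X_n]."""
    rng = random.Random(seed)
    return sum(simulate_total(p, d, generations, rng) for _ in range(trials)) / trials


def expected_total(p, d, generations):
    """Exact E[X_1 + ... + X_n] = sum_{m=0}^{n-1} (pd)^m, using E[X_n] = (pd)^(n-1)."""
    return sum((p * d) ** m for m in range(generations))
```

Take $p = 0.2$ and $d = 4$, so $pd = 0.8$. Then `expected_total(0.2, 4, 30)` is just below the bound $(1 - pd)^{-1} = 5$, and `mean_total` with a few thousand trials should land close to it. Keep $pd < 1$, or use only a few generations, when running the simulation. For $pd \geq 1$ the generation sizes can grow geometrically and the loop becomes slow.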

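The definition of $E[Y \mid X]$ and properties (d) and (e) can be verified exactly on a small example. This sketch uses a made-up joint pmf, chosen only for illustration. It computes $g(x) = \sum_y y \Pr[Y = y \mid X = x]$ with exact fractions. The helper names `conditional_expectation` and `expect` are hypothetical.

```python
from fractions import Fraction

# A made-up joint pmf Pr[X = x, Y = y] (illustration only; probabilities sum to 1).
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 8),
    (1, 0): Fraction(1, 4), (1, 2): Fraction(1, 4),
    (2, 1): Fraction(1, 8), (2, 3): Fraction(1, 8),
}


def conditional_expectation(joint):
    """Return (g, px), where g[x] = E[Y | X = x] and px[x] = Pr[X = x]."""
    px = {}
    for (x, _), pr in joint.items():
        px[x] = px.get(x, 0) + pr
    g = {}
    for (x, y), pr in joint.items():
        # Pr[Y = y | X = x] = Pr[X = x, Y = y] / Pr[X = x]
        g[x] = g.get(x, 0) + y * pr / px[x]
    return g, px


def expect(f, joint):
    """E[f(X, Y)] under the joint pmf."""
    return sum(f(x, y) * pr for (x, y), pr in joint.items())


g, px = conditional_expectation(joint)
# Property (e): E[E[Y | X]] = E[Y].
tower_lhs = expect(lambda x, y: g[x], joint)
tower_rhs = expect(lambda x, y: y, joint)
# Property (d) with h(x) = x^2: E[h(X) E[Y | X]] = E[h(X) Y].
prop_d_lhs = expect(lambda x, y: x * x * g[x], joint)
prop_d_rhs = expect(lambda x, y: x * x * y, joint)
```

For this pmf, $g(0) = 1/2$, $g(1) = 1$ and $g(2) = 2$. Both sides of (e) equal $9/8$, and both sides of (d) equal $5/2$.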
Application: Wald's Identity

Here is an extension of an identity we used in the last slide.

Theorem (Wald's Identity): Assume that $X_1, X_2, \ldots$ and $Z$ are independent, where $Z$ takes values in $\{0, 1, 2, \ldots\}$ and $E[X_n] = \mu$ for all $n \geq 1$. Then,

$$E[X_1 + \cdots + X_Z] = \mu E[Z].$$

Proof: Given $Z = k$, the sum is $X_1 + \cdots + X_k$, and by independence $E[X_1 + \cdots + X_Z \mid Z = k] = \mu k$. Thus, $E[X_1 + \cdots + X_Z \mid Z] = \mu Z$. Hence, $E[X_1 + \cdots + X_Z] = E[\mu Z] = \mu E[Z]$.

CE = MMSE

$E[Y \mid X]$ is the "best" guess about $Y$ based on $X$. Specifically, it is the function $g(X)$ of $X$ that minimizes $E[(Y - g(X))^2]$.

Theorem (CE = MMSE): $g(X) := E[Y \mid X]$ is the function of $X$ that minimizes $E[(Y - g(X))^2]$.

Proof: First recall the projection property of CE:

$$E[(Y - E[Y \mid X])\,h(X)] = 0, \quad \forall h(\cdot).$$

That is, the error $Y - E[Y \mid X]$ is orthogonal to any $h(X)$. Now let $h(X)$ be any function of $X$. Then

$$\begin{aligned}
E[(Y - h(X))^2] &= E[(Y - g(X) + g(X) - h(X))^2] \\
&= E[(Y - g(X))^2] + E[(g(X) - h(X))^2] + 2E[(Y - g(X))(g(X) - h(X))].
\end{aligned}$$

But $E[(Y - g(X))(g(X) - h(X))] = 0$ by the projection property, since $g(X) - h(X)$ is itself a function of $X$. Thus, $E[(Y - h(X))^2] \geq E[(Y - g(X))^2]$.

$E[Y \mid X]$ and $L[Y \mid X]$ as projections

- $L[Y \mid X]$ is the projection of $Y$ on $\{a + bX : a, b \in \Re\}$: LLSE.
- $E[Y \mid X]$ is the projection of $Y$ on $\{g(X) : g(\cdot): \Re \to \Re\}$: MMSE.

Continuous Probability: James Bond

- Escapes from SPECTRE sometime during a 1,000 mile flight.
- Uniformly likely to be at any point along the path.

What is the chance he is at any point along the path?

Discrete setting: uniform over $\Omega = \{1, \ldots, 1000\}$.

Continuous setting: probability at any point in $[0, 1000]$? The probability at any one of an infinite number of points is ... uh ... 0?
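Wald's identity can be checked with a quick simulation. This sketch uses an assumed setup that is not from the lecture. The $X_n$ are fair die rolls, so $\mu = 3.5$. $Z$ is uniform on $\{0, \ldots, 4\}$ and drawn independently of the rolls, so $E[Z] = 2$. The identity then predicts $E[X_1 + \cdots + X_Z] = 7$. The name `wald_check` is hypothetical.

```python
import random


def wald_check(trials=100_000, seed=1):
    """Monte Carlo estimate of E[X_1 + ... + X_Z] with Z independent of the X_n.

    Assumed setup: X_n ~ fair die (mu = 3.5), Z ~ uniform on {0, 1, 2, 3, 4} (E[Z] = 2).
    """
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        z = rng.randint(0, 4)                              # draw Z independently of the X_n
        total += sum(rng.randint(1, 6) for _ in range(z))  # X_1 + ... + X_Z (0 when Z = 0)
    return total / trials
```

`wald_check()` should return a value close to $\mu E[Z] = 3.5 \times 2 = 7$.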

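The CE = MMSE theorem and the projection picture can be checked exactly on a toy model where $E[Y \mid X]$ and $L[Y \mid X]$ differ. In this assumed example, $X$ is uniform on $\{-1, 0, 1\}$, $W$ is uniform on $\{-1, +1\}$ and independent of $X$, and $Y = X^2 + W$. Then $E[Y \mid X] = X^2$, with mean squared error $E[W^2] = 1$. Because $\text{cov}(X, Y) = 0$, however, the best linear estimator is the constant $E[Y] = 2/3$, with mean squared error $11/9$. The names `outcomes`, `mse`, `cond_mean` and `llse` are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Assumed toy model: X uniform on {-1, 0, 1}, W uniform on {-1, 1}, independent; Y = X^2 + W.
# Each entry is (x, y, probability).
outcomes = [(x, x * x + w, Fraction(1, 6)) for x, w in product((-1, 0, 1), (-1, 1))]


def mse(estimator):
    """E[(Y - estimator(X))^2], computed exactly over the finite outcome list."""
    return sum(pr * (y - estimator(x)) ** 2 for x, y, pr in outcomes)


def cond_mean(x0):
    """E[Y | X = x0] = sum_y y Pr[Y = y | X = x0]."""
    px = sum(pr for x, _, pr in outcomes if x == x0)
    return sum(y * pr for x, y, pr in outcomes if x == x0) / px


def llse():
    """Return (a, b) with L[Y|X] = a + bX, where b = cov(X, Y)/var(X) and a = E[Y] - b E[X]."""
    ex = sum(pr * x for x, _, pr in outcomes)
    ey = sum(pr * y for _, y, pr in outcomes)
    cov = sum(pr * (x - ex) * (y - ey) for x, y, pr in outcomes)
    var = sum(pr * (x - ex) ** 2 for x, _, pr in outcomes)
    b = cov / var
    return ey - b * ex, b
```

Here `mse(cond_mean)` is 1. The linear estimator from `llse()` gives $11/9$. Any other function of $X$, such as $X^2 + 1/2$, does no better than $E[Y \mid X]$.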
Continuous Probability: the interval!

Consider $[a, b] \subseteq [0, \ell]$ (for James, $\ell = 1000$). Let $[a, b]$ also denote the event that the point is in the interval $[a, b]$.

$$\Pr[[a, b]] = \frac{\text{length of } [a, b]}{\text{length of } [0, \ell]} = \frac{b - a}{\ell} = \frac{b - a}{1000}.$$

Again, the intervals $[a, b] \subseteq \Omega = [0, \ell]$ are events. Events in this space are unions of intervals.

Example: the event $A$, "within 50 miles of base", is $[0, 50] \cup [950, 1000]$. Then

$$\Pr[A] = \Pr[[0, 50]] + \Pr[[950, 1000]] = \frac{50}{1000} + \frac{50}{1000} = \frac{1}{10}.$$

Shooting..

Another Bond example: SPECTRE is chasing him in a buggy. Bond shoots at the buggy and hits it at a random spot. What is the chance he hits the gas tank? The gas tank is a circle of radius one foot, and the buggy is a $4 \times 5$ rectangle.

$$\Omega = \{(x, y) : x \in [0, 4],\ y \in [0, 5]\}.$$

The size of the event is $\pi (1)^2 = \pi$. The "size" of the sample space is $4 \times 5 = 20$. Since the hit is uniform, the probability of the event is $\pi / 20$.

Buffon's needle

Throw a needle at random on a board with horizontal lines. The lines are 1 unit apart, and the needle has length 1. What is the probability that the needle hits a line? Clearly... $2/\pi$.

Sample space: possible positions of the needle. Position: center position $(X, Y)$ and orientation $\Theta$.

Relevant: the $X$ coordinate doesn't matter. Let $Y :=$ the distance from the center to the closest line, so $Y \in [0, \tfrac{1}{2}]$. Let $\Theta :=$ the angle to vertical, so $\Theta \in [-\tfrac{\pi}{2}, \tfrac{\pi}{2}]$. Both are uniform on their ranges and independent. When $Y \leq \tfrac{1}{2}\cos\Theta$, the needle intersects a line. Hence

$$\Pr[\text{intersects}] = \int_{-\pi/2}^{\pi/2} \Pr[\Theta \in [\theta, \theta + d\theta]]\,\Pr[Y \leq \tfrac{1}{2}\cos\theta] = \int_{-\pi/2}^{\pi/2} \frac{d\theta}{\pi} \cdot \frac{(1/2)\cos\theta}{1/2} = \frac{1}{\pi}\big[\sin\theta\big]_{-\pi/2}^{\pi/2} = \frac{2}{\pi}.$$

Continuous Random Variables: CDF

Use $\Pr[a \leq X \leq b]$ instead of $\Pr[X = a]$. Knowing this for all $a$ and $b$ specifies the behavior! Simpler: $\Pr[X \leq x]$ for all $x$.

The cumulative probability distribution function (CDF) of $X$ is $F(x) = \Pr[X \leq x]$. Then

$$\Pr[a < X \leq b] = \Pr[X \leq b] - \Pr[X \leq a] = F(b) - F(a).$$

Idea: consider the two events $X \leq b$ and $X \leq a$. Their difference is the event $a < X \leq b$.

Example: CDF

Example: Bond's position.

$$F(x) = \Pr[X \leq x] = \begin{cases} 0 & \text{for } x < 0 \\ \dfrac{x}{1000} & \text{for } 0 \leq x \leq 1000 \\ 1 & \text{for } x > 1000 \end{cases}$$

Probability that Bond is within 50 miles of the center:

$$\Pr[450 < X \leq 550] = \Pr[X \leq 550] - \Pr[X \leq 450] = \frac{550}{1000} - \frac{450}{1000} = \frac{100}{1000} = \frac{1}{10}.$$
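Buffon's needle lends itself to simulation. This sketch draws the parameters used in the derivation: $Y$ uniform on $[0, \tfrac{1}{2}]$ and $\Theta$ uniform on $[-\tfrac{\pi}{2}, \tfrac{\pi}{2}]$, independently. It counts how often $Y \leq \tfrac{1}{2}\cos\Theta$. The function name `buffon` and its defaults are hypothetical.

```python
import math
import random


def buffon(trials=200_000, seed=2):
    """Estimate Pr[a length-1 needle crosses a line] when the lines are 1 unit apart.

    Y = distance from the needle's center to the closest line, uniform on [0, 1/2].
    Theta = angle of the needle to vertical, uniform on [-pi/2, pi/2].
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        y = rng.uniform(0.0, 0.5)
        theta = rng.uniform(-math.pi / 2, math.pi / 2)
        if y <= 0.5 * math.cos(theta):  # half the needle's vertical extent reaches the line
            hits += 1
    return hits / trials
```

The estimate should be close to $2/\pi \approx 0.637$. Rearranged as `2 / buffon()`, the same experiment gives a (slowly converging) estimate of $\pi$.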

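The CDF recipe $\Pr[a < X \leq b] = F(b) - F(a)$ can be written out for Bond's position, uniform on $[0, 1000]$. This is a small sketch, and the names `bond_cdf` and `prob_interval` are hypothetical. Since $\Pr[X = 0] = 0$, $\Pr[0 < X \leq 50]$ equals $\Pr[[0, 50]]$.

```python
def bond_cdf(x, length=1000.0):
    """F(x) = Pr[X <= x] for X uniform on [0, length]."""
    if x < 0:
        return 0.0
    if x <= length:
        return x / length
    return 1.0


def prob_interval(a, b, cdf=bond_cdf):
    """Pr[a < X <= b] = F(b) - F(a)."""
    return cdf(b) - cdf(a)


within_50_of_center = prob_interval(450, 550)                        # Pr[450 < X <= 550]
within_50_of_base = prob_interval(0, 50) + prob_interval(950, 1000)  # event A
```

Both probabilities come out as $1/10$, up to floating-point rounding.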
Example: CDF

Example: hitting a random location on the gas tank (a "dart board"). The location is uniform on a circle of radius 1. Random variable: $Y$, the distance from the center. The probability of landing within $y$ of the center, for $0 \leq y \leq 1$, is

$$\Pr[Y \leq y] = \frac{\text{area of small circle}}{\text{area of dartboard}} = \frac{\pi y^2}{\pi} = y^2.$$

Hence,

$$F_Y(y) = \Pr[Y \leq y] = \begin{cases} 0 & \text{for } y < 0 \\ y^2 & \text{for } 0 \leq y \leq 1 \\ 1 & \text{for } y > 1 \end{cases}$$

Calculation of event with dartboard..

What is the probability of landing between $.5$ and $.6$ from the center? Recall the CDF:

$$\Pr[0.5 < Y \leq 0.6] = \Pr[Y \leq 0.6] - \Pr[Y \leq 0.5] = F_Y(0.6) - F_Y(0.5) = .36 - .25 = .11.$$

Density function

Is the dart more likely to be (near) $.5$ or $.1$? The probability of "near $x$" is $\Pr[x < X \leq x + \delta]$, which goes to 0 as $\delta$ goes to zero. Try $\Pr[x < X \leq x + \delta] / \delta$ and take the limit as $\delta$ goes to zero:

$$\lim_{\delta \to 0} \frac{\Pr[x < X \leq x + \delta]}{\delta} = \lim_{\delta \to 0} \frac{\Pr[X \leq x + \delta] - \Pr[X \leq x]}{\delta} = \lim_{\delta \to 0} \frac{F_X(x + \delta) - F_X(x)}{\delta} = \frac{d F_X(x)}{dx}.$$

Density

Definition (Density): A probability density function for a random variable $X$ with cdf $F_X(x) = \Pr[X \leq x]$ is the function $f_X(x)$ where

$$f_X(x) = F_X'(x).$$

Thus,

$$F_X(x) = \int_{-\infty}^{x} f_X(u)\,du, \qquad \Pr[X \in (x, x + \delta]] = F_X(x + \delta) - F_X(x) \approx f_X(x)\,\delta \ \text{ for small } \delta.$$

Examples: Density.

Example: uniform over interval $[0, 1000]$:

$$f_X(x) = F_X'(x) = \begin{cases} 0 & \text{for } x < 0 \\ \dfrac{1}{1000} & \text{for } 0 \leq x \leq 1000 \\ 0 & \text{for } x > 1000 \end{cases}$$

Example: uniform over interval $[0, \ell]$:

$$f_X(x) = F_X'(x) = \begin{cases} 0 & \text{for } x < 0 \\ \dfrac{1}{\ell} & \text{for } 0 \leq x \leq \ell \\ 0 & \text{for } x > \ell \end{cases}$$

Example: "Dart" board. Recall that $F_Y(y) = 0$ for $y < 0$, $y^2$ for $0 \leq y \leq 1$, and $1$ for $y > 1$. Thus,

$$f_Y(y) = F_Y'(y) = \begin{cases} 0 & \text{for } y < 0 \\ 2y & \text{for } 0 \leq y \leq 1 \\ 0 & \text{for } y > 1 \end{cases}$$

The cumulative distribution function (cdf) and probability density function (pdf) give full information. Use whichever is convenient.
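The dart-board CDF $F_Y(y) = y^2$ can be cross-checked by simulation. This sketch samples points uniformly over the board's area. It does so by rejection sampling from the enclosing square, an implementation choice of this sketch, and keeps each point's distance from the center. Drawing the distance itself uniformly on $[0, 1]$ would be a different, wrong model. The names `dart_distances` and `empirical_cdf` are hypothetical.

```python
import math
import random


def dart_distances(n, seed=3):
    """Distances from the center for n points uniform on the unit disk (rejection sampling)."""
    rng = random.Random(seed)
    out = []
    while len(out) < n:
        x, y = rng.uniform(-1, 1), rng.uniform(-1, 1)
        r = math.hypot(x, y)
        if r <= 1:  # keep only points that land on the board
            out.append(r)
    return out


def empirical_cdf(samples, y):
    """Fraction of samples <= y, an estimate of F_Y(y)."""
    return sum(1 for s in samples if s <= y) / len(samples)
```

With `ys = dart_distances(100_000)`, `empirical_cdf(ys, 0.6) - empirical_cdf(ys, 0.5)` should be near $.11$, and `empirical_cdf(ys, 0.5)` near $.25$.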

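The limit that defines the density can be approximated with a small $\delta$. This sketch applies the difference quotient $\big(F(x + \delta) - F(x)\big)/\delta$ to the dart-board CDF. The step size `delta = 1e-6` is an arbitrary choice, and the names `dart_cdf` and `density_estimate` are hypothetical.

```python
def dart_cdf(y):
    """F_Y(y) for the dart board: 0 for y < 0, y^2 on [0, 1], 1 for y > 1."""
    if y < 0:
        return 0.0
    if y <= 1:
        return y * y
    return 1.0


def density_estimate(cdf, x, delta=1e-6):
    """Pr[x < X <= x + delta] / delta, which tends to f_X(x) = F'(x) as delta -> 0."""
    return (cdf(x + delta) - cdf(x)) / delta
```

The estimates are about $1.0$ at $0.5$ and about $0.2$ at $0.1$, matching $f_Y(y) = 2y$. This answers the earlier question: the dart is about five times as likely to land near $.5$ as near $.1$.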
