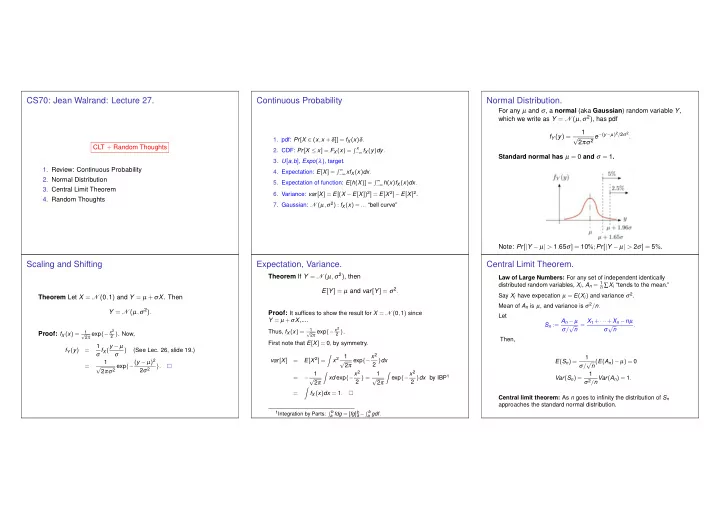

CS70: Jean Walrand: Lecture 27.

CLT + Random Thoughts

1. Review: Continuous Probability
2. Normal Distribution
3. Central Limit Theorem
4. Random Thoughts

Review: Continuous Probability

1. pdf: $\Pr[X \in (x, x+\delta]] \approx f_X(x)\,\delta$ for small $\delta > 0$.
2. CDF: $\Pr[X \le x] = F_X(x) = \int_{-\infty}^{x} f_X(y)\,dy$.
3. $U[a,b]$, $\mathrm{Expo}(\lambda)$, target.
4. Expectation: $E[X] = \int_{-\infty}^{\infty} x\,f_X(x)\,dx$.
5. Expectation of function: $E[h(X)] = \int_{-\infty}^{\infty} h(x)\,f_X(x)\,dx$.
6. Variance: $\mathrm{var}[X] = E[(X - E[X])^2] = E[X^2] - E[X]^2$.
7. Gaussian: $N(\mu, \sigma^2)$: $f_X(x) = \ldots$ "bell curve"

Normal Distribution

For any $\mu$ and $\sigma > 0$, a normal (aka Gaussian) random variable $Y$, which we write as $Y = N(\mu, \sigma^2)$, has pdf

$$f_Y(y) = \frac{1}{\sqrt{2\pi\sigma^2}}\, e^{-(y-\mu)^2/(2\sigma^2)}.$$

Standard normal has $\mu = 0$ and $\sigma = 1$.

Note: $\Pr[|Y - \mu| > 1.65\sigma] \approx 10\%$; $\Pr[|Y - \mu| > 2\sigma] \approx 5\%$.

Scaling and Shifting

Theorem: Let $X = N(0, 1)$ and $Y = \mu + \sigma X$. Then $Y = N(\mu, \sigma^2)$.

Proof: $f_X(x) = \frac{1}{\sqrt{2\pi}} \exp\{-\frac{x^2}{2}\}$. Now,

$$f_Y(y) = \frac{1}{\sigma} f_X\!\left(\frac{y - \mu}{\sigma}\right) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left\{-\frac{(y - \mu)^2}{2\sigma^2}\right\}.$$

(See Lec. 26, slide 19.)

Expectation, Variance

Theorem: If $Y = N(\mu, \sigma^2)$, then $E[Y] = \mu$ and $\mathrm{var}[Y] = \sigma^2$.

Proof: It suffices to show the result for $X = N(0, 1)$ since $Y = \mu + \sigma X$, .... Thus, $f_X(x) = \frac{1}{\sqrt{2\pi}} \exp\{-\frac{x^2}{2}\}$.

First note that $E[X] = 0$, by symmetry. Then,

$$\mathrm{var}[X] = E[X^2] = \int x^2 \frac{1}{\sqrt{2\pi}} \exp\left\{-\frac{x^2}{2}\right\} dx = -\frac{1}{\sqrt{2\pi}} \int x \; d\exp\left\{-\frac{x^2}{2}\right\} \overset{\text{IBP}}{=} \frac{1}{\sqrt{2\pi}} \int \exp\left\{-\frac{x^2}{2}\right\} dx = \int f_X(x)\,dx = 1.$$

Integration by Parts: $\int_a^b f\,dg = [fg]_a^b - \int_a^b g\,df$.

Central Limit Theorem

Law of Large Numbers: For any set of independent identically distributed random variables $X_i$, $A_n = \frac{1}{n}\sum X_i$ "tends to the mean."

Say $X_i$ have expectation $\mu = E(X_i)$ and variance $\sigma^2$. The mean of $A_n$ is $\mu$, and its variance is $\sigma^2/n$.

Let

$$S_n := \frac{A_n - \mu}{\sigma/\sqrt{n}} = \frac{X_1 + \cdots + X_n - n\mu}{\sigma\sqrt{n}}.$$

Then,

$$E(S_n) = \frac{\sqrt{n}}{\sigma}\,\big(E(A_n) - \mu\big) = 0, \qquad \mathrm{Var}(S_n) = \frac{n}{\sigma^2}\,\mathrm{Var}(A_n) = 1.$$

Central limit theorem: As $n$ goes to infinity, the distribution of $S_n$ approaches the standard normal distribution.
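As a numerical sanity check of these claims (not part of the lecture), here is a small Python sketch using only the standard library. It assumes $\Pr[|Y - \mu| > z\sigma] = \mathrm{erfc}(z/\sqrt{2})$ for a normal $Y$, samples $\mu + \sigma X$ to compare its mean and variance with $\mu$ and $\sigma^2$, and estimates $\Pr[S_n \le \alpha]$ for i.i.d. $\mathrm{Expo}(1)$ variables (an arbitrary, deliberately skewed choice) so the estimate can be compared with the standard normal CDF $\Phi(\alpha)$. All function names are hypothetical.

```python
import math
import random
import statistics


def normal_cdf(alpha: float) -> float:
    """Phi(alpha) = Pr[N(0, 1) <= alpha], written with the error function."""
    return 0.5 * (1.0 + math.erf(alpha / math.sqrt(2.0)))


def two_sided_tail(z: float) -> float:
    """Pr[|Y - mu| > z * sigma] for Y = N(mu, sigma^2); it does not depend on mu or sigma."""
    return math.erfc(z / math.sqrt(2.0))


def check_scaling(mu: float, sigma: float, trials: int, seed: int = 1) -> tuple[float, float]:
    """Sample Y = mu + sigma * X with X = N(0, 1); return the sample mean and variance of Y."""
    rng = random.Random(seed)
    ys = [mu + sigma * rng.gauss(0.0, 1.0) for _ in range(trials)]
    return statistics.fmean(ys), statistics.pvariance(ys)


def standardized_sum(n: int, rng: random.Random, lam: float = 1.0) -> float:
    """One sample of S_n = (X_1 + ... + X_n - n*mu) / (sigma*sqrt(n)), X_i i.i.d. Expo(lam)."""
    mu = 1.0 / lam     # E[X_i] for Expo(lam)
    sigma = 1.0 / lam  # standard deviation of Expo(lam)
    total = sum(rng.expovariate(lam) for _ in range(n))
    return (total - n * mu) / (sigma * math.sqrt(n))


def empirical_cdf_of_sn(alpha: float, n: int, trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of Pr[S_n <= alpha]."""
    rng = random.Random(seed)
    hits = sum(standardized_sum(n, rng) <= alpha for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    print(f"Pr[|Y - mu| > 1.65 sigma] = {two_sided_tail(1.65):.4f}")  # close to 10%
    print(f"Pr[|Y - mu| > 2 sigma]    = {two_sided_tail(2.0):.4f}")   # close to 5% (about 4.6%)
    print("sample mean, variance of 3 + 2X:", check_scaling(3.0, 2.0, 20_000))
    for alpha in (-1.0, 0.0, 1.0):
        est = empirical_cdf_of_sn(alpha, n=100, trials=5_000)
        print(f"alpha={alpha:+.1f}: Pr[S_n <= alpha] ~ {est:.3f}, Phi(alpha) = {normal_cdf(alpha):.3f}")
```

With $n = 100$ the estimates typically land within a few hundredths of $\Phi(\alpha)$; the remaining gap comes from sampling noise and from the skew of the exponential distribution, whose effect shrinks as $n$ grows.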

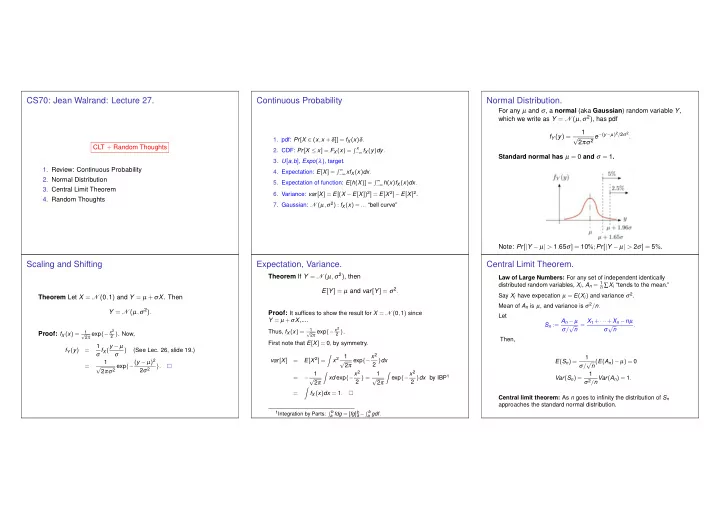

Central Limit Theorem

Let $X_1, X_2, \ldots$ be i.i.d. with $E[X_1] = \mu$ and $\mathrm{var}(X_1) = \sigma^2$, and let $A_n = (X_1 + \cdots + X_n)/n$. Define

$$S_n := \frac{A_n - \mu}{\sigma/\sqrt{n}} = \frac{X_1 + \cdots + X_n - n\mu}{\sigma\sqrt{n}}.$$

Then, $S_n \to N(0, 1)$ as $n \to \infty$. That is,

$$\Pr[S_n \le \alpha] \to \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\alpha} e^{-x^2/2}\,dx.$$

Proof: See EE126.

CI for Mean

Let $X_1, X_2, \ldots$ be i.i.d. with mean $\mu$ and variance $\sigma^2$. Let

$$A_n = \frac{X_1 + \cdots + X_n}{n}.$$

The CLT states that

$$\frac{A_n - \mu}{\sigma/\sqrt{n}} = \frac{X_1 + \cdots + X_n - n\mu}{\sigma\sqrt{n}} \to N(0, 1) \text{ as } n \to \infty.$$

Thus, for $n \gg 1$, one has

$$\Pr\left[-2 \le \frac{A_n - \mu}{\sigma/\sqrt{n}} \le 2\right] \approx 95\%.$$

Equivalently,

$$\Pr\left[\mu \in \left[A_n - \frac{2\sigma}{\sqrt{n}},\; A_n + \frac{2\sigma}{\sqrt{n}}\right]\right] \approx 95\%.$$

That is, $[A_n - 2\sigma/\sqrt{n},\, A_n + 2\sigma/\sqrt{n}]$ is a 95%-CI for $\mu$.

Recall: Using Chebyshev, we found that (see Lec. 22, slide 6) $[A_n - 4.5\sigma/\sqrt{n},\, A_n + 4.5\sigma/\sqrt{n}]$ is a 95%-CI for $\mu$. Thus, the CLT provides a smaller confidence interval.

Coins and normal.

Let $X_1, X_2, \ldots$ be i.i.d. $B(p)$. Thus, $X_1 + \cdots + X_n = B(n, p)$. Here, $\mu = p$ and $\sigma = \sqrt{p(1-p)}$. The CLT states that

$$\frac{X_1 + \cdots + X_n - np}{\sqrt{p(1-p)n}} \to N(0, 1)$$

and that $[A_n - 2\sigma/\sqrt{n},\, A_n + 2\sigma/\sqrt{n}]$ is a 95%-CI for $\mu$, with $A_n = (X_1 + \cdots + X_n)/n$. Hence, $[A_n - 2\sigma/\sqrt{n},\, A_n + 2\sigma/\sqrt{n}]$ is a 95%-CI for $p$.

Since $p(1-p) \le 1/4$, we have $\sigma \le 0.5$, so $[A_n - 2 \cdot 0.5/\sqrt{n},\, A_n + 2 \cdot 0.5/\sqrt{n}]$ is a (conservative) 95%-CI for $p$. Thus, $[A_n - 1/\sqrt{n},\, A_n + 1/\sqrt{n}]$ is a 95%-CI for $p$.

Summary: Gaussian and CLT

1. Gaussian: $N(\mu, \sigma^2)$: $f_X(x) = \ldots$ "bell curve"
2. CLT: $X_n$ i.i.d. $\Rightarrow \dfrac{A_n - \mu}{\sigma/\sqrt{n}} \to N(0, 1)$
3. CI: $[A_n - 2\sigma/\sqrt{n},\, A_n + 2\sigma/\sqrt{n}]$ = 95%-CI for $\mu$.
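To see the coin interval and the Chebyshev comparison in numbers, here is a small Python simulation; it is a sketch, and the helper names are hypothetical. `coverage` flips a $B(p)$ coin $n$ times, forms $[A_n - 1/\sqrt{n}, A_n + 1/\sqrt{n}]$, and counts how often that interval contains $p$. `half_widths` compares the CLT half-width $2\sigma/\sqrt{n}$ with a Chebyshev half-width, which we assume is obtained by setting the bound $\Pr[|A_n - \mu| \ge a] \le \sigma^2/(na^2)$ equal to $0.05$; that gives $a = \sigma/\sqrt{0.05n} \approx 4.47\sigma/\sqrt{n}$, consistent with the $4.5$ quoted above.

```python
import math
import random


def coin_ci(heads: int, n: int) -> tuple[float, float]:
    """Conservative 95%-CI for p: [A_n - 1/sqrt(n), A_n + 1/sqrt(n)]."""
    a_n = heads / n            # A_n = (X_1 + ... + X_n) / n
    half = 1.0 / math.sqrt(n)  # 2 * 0.5 / sqrt(n), using sigma <= 0.5
    return a_n - half, a_n + half


def coverage(p: float, n: int, trials: int, seed: int = 0) -> float:
    """Fraction of simulated experiments whose interval contains the true p."""
    rng = random.Random(seed)
    covered = 0
    for _ in range(trials):
        heads = sum(rng.random() < p for _ in range(n))  # one B(n, p) sample
        lo, hi = coin_ci(heads, n)
        covered += lo <= p <= hi
    return covered / trials


def half_widths(sigma: float, n: int) -> dict[str, float]:
    """Half-widths of the 95% intervals for mu from the CLT and from Chebyshev."""
    return {
        "clt": 2.0 * sigma / math.sqrt(n),
        # Chebyshev: Pr[|A_n - mu| >= a] <= sigma^2 / (n a^2); set the bound to 0.05.
        "chebyshev": sigma / math.sqrt(0.05 * n),
    }


if __name__ == "__main__":
    n = 100
    for p in (0.5, 0.2):
        print(f"p={p}: coverage of [A_n - 1/sqrt(n), A_n + 1/sqrt(n)] = {coverage(p, n, 5_000):.3f}")
    print(half_widths(sigma=0.5, n=n))
```

For $p = 0.5$ the bound $\sigma \le 0.5$ is tight, so the simulated coverage should sit near 95% (slightly above, since $2$ exceeds the exact normal quantile $1.96$); for $p$ far from $1/2$ the interval is wider than necessary and its coverage rises further above 95%.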

Confusing Statistics: Simpson's Paradox

The numbers are applications and admissions of males and females to the two colleges of a university.

Overall, the admission rate of male students is 80%, whereas it is only 51% for female students.

A closer look shows that the admission rate is larger for female students in both colleges....

Female students happen to apply more to the college that admits fewer students.

More on Confusing Statistics

Statistics are often confusing:
◮ The average household annual income in the US is \$72k. Yes, but the median is \$52k.
◮ The false alarm rate for prostate cancer is only 1%. Great, but only 1 person in 8,000 has that cancer. So, there are 80 false alarms for each actual case.
◮ The Texas sharpshooter fallacy. Look at people living close to power lines. You find clusters of cancers. You will also find such clusters when looking at people eating kale.
◮ False causation. Vaccines cause autism. Both vaccination and autism rates increased....
◮ Beware of statistics reported in the media!

Choosing at Random: Bertrand's Paradox

The figures correspond to three ways of choosing a chord "at random." The probability that the chord is longer than the side $|AB|$ of an inscribed equilateral triangle is
◮ $1/3$ if you choose a point $A$, then another point $X$ uniformly at random on the circumference (left).
◮ $1/4$ if you choose a point $X$ uniformly at random in the circle and draw the chord perpendicular to the radius that goes through $X$ (center).
◮ $1/2$ if you choose a point $X$ uniformly at random on a given radius and draw the chord perpendicular to the radius that goes through $X$ (right).

Confirmation Bias

Confirmation bias is the tendency to search for, interpret, and recall information in a way that confirms one's beliefs or hypotheses, while giving disproportionately less consideration to alternative possibilities.

Confirmation biases contribute to overconfidence in personal beliefs and can maintain or strengthen beliefs in the face of contrary evidence.

Three aspects:
◮ Biased search for information. E.g., ignoring articles that dispute your beliefs.
◮ Biased interpretation. E.g., putting more weight on confirmation than on contrary evidence.
◮ Biased memory. E.g., remembering facts that confirm your beliefs and forgetting others.

Confirmation Bias: An experiment

There are two bags: one with 60% red balls and 40% blue balls; the other with the opposite fractions.

One selects one of the two bags.

As one draws balls one at a time, one asks people to declare whether they think one draws from the first or the second bag.

Surprisingly, people tend to be reinforced in their original belief, even when the evidence accumulates against it.

Being Rational: 'Thinking, Fast and Slow'

In this book, Daniel Kahneman discusses examples of our irrationality. Here are a few examples:
◮ A judge rolls a die in the morning. In the afternoon, he has to sentence a criminal. Statistically, the sentence tends to be heavier if the outcome of the morning roll was high.
◮ People tend to be more convinced by articles printed in Times Roman instead of Computer Modern Sans Serif.
◮ Perception illusions: Which horizontal line is longer?

It is difficult to think clearly!
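Bertrand's paradox is easy to reproduce by simulation. The Python sketch below uses hypothetical helper names and assumes a unit circle, so the inscribed equilateral triangle has side $\sqrt{3}$. It implements the three sampling rules and estimates the probability that the chord is longer than $|AB|$, using two facts: a chord whose endpoints subtend central angle $\theta$ has length $2\sin(\theta/2)$, and a chord at distance $d$ from the center has length $2\sqrt{1 - d^2}$. The uniform point in the disk is drawn by rejection sampling from the enclosing square, which is one standard choice among several.

```python
import math
import random

# Side length of an equilateral triangle inscribed in the unit circle.
SIDE = math.sqrt(3.0)


def chord_two_points(rng: random.Random) -> float:
    """Left: fix A (by rotational symmetry), pick X uniformly on the circumference."""
    theta = rng.uniform(0.0, 2.0 * math.pi)  # central angle between A and X
    return 2.0 * math.sin(theta / 2.0)


def chord_random_midpoint(rng: random.Random) -> float:
    """Center: X uniform in the disk; chord perpendicular to the radius through X."""
    while True:  # rejection sampling from the square [-1, 1]^2
        x, y = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
        d2 = x * x + y * y  # squared distance of X from the center
        if d2 <= 1.0:
            return 2.0 * math.sqrt(1.0 - d2)


def chord_random_radius(rng: random.Random) -> float:
    """Right: X uniform on a fixed radius; chord perpendicular to it through X."""
    d = rng.uniform(0.0, 1.0)  # distance of X from the center
    return 2.0 * math.sqrt(1.0 - d * d)


def prob_longer(method, trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of Pr[chord length > side of the inscribed triangle]."""
    rng = random.Random(seed)
    return sum(method(rng) > SIDE for _ in range(trials)) / trials


if __name__ == "__main__":
    for method in (chord_two_points, chord_random_midpoint, chord_random_radius):
        print(f"{method.__name__}: {prob_longer(method, 100_000):.3f}")
```

The three estimates should come out close to $1/3$, $1/4$ and $1/2$. They differ because "uniformly at random" induces a different distribution over chords in each construction, not because of simulation error.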
