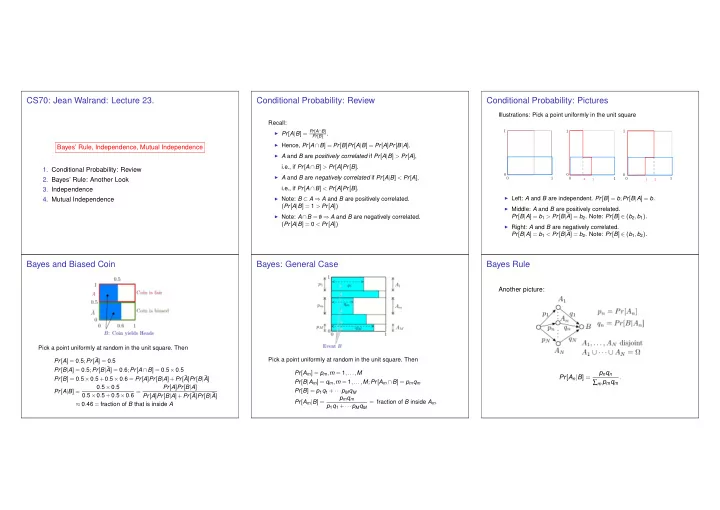

CS70: Jean Walrand: Lecture 23.

Bayes' Rule, Independence, Mutual Independence

1. Conditional Probability: Review
2. Bayes' Rule: Another Look
3. Independence
4. Mutual Independence

Conditional Probability: Review

Recall:

- $\Pr[A \mid B] = \dfrac{\Pr[A \cap B]}{\Pr[B]}$.
- Hence, $\Pr[A \cap B] = \Pr[B]\Pr[A \mid B] = \Pr[A]\Pr[B \mid A]$.
- $A$ and $B$ are positively correlated if $\Pr[A \mid B] > \Pr[A]$, i.e., if $\Pr[A \cap B] > \Pr[A]\Pr[B]$.
- $A$ and $B$ are negatively correlated if $\Pr[A \mid B] < \Pr[A]$, i.e., if $\Pr[A \cap B] < \Pr[A]\Pr[B]$.
- Note: $B \subset A \Rightarrow$ $A$ and $B$ are positively correlated, because $\Pr[A \mid B] = 1 > \Pr[A]$ (assuming $\Pr[A] < 1$).
- Note: $A \cap B = \emptyset \Rightarrow$ $A$ and $B$ are negatively correlated, because $\Pr[A \mid B] = 0 < \Pr[A]$ (assuming $\Pr[A] > 0$).

Conditional Probability: Pictures

Illustrations: pick a point uniformly in the unit square.

- Left: $A$ and $B$ are independent. $\Pr[B] = b$; $\Pr[B \mid A] = b$.
- Middle: $A$ and $B$ are positively correlated. $\Pr[B \mid A] = b_1 > \Pr[B \mid \bar{A}] = b_2$. Note: $\Pr[B] \in (b_2, b_1)$.
- Right: $A$ and $B$ are negatively correlated. $\Pr[B \mid A] = b_1 < \Pr[B \mid \bar{A}] = b_2$. Note: $\Pr[B] \in (b_1, b_2)$.

Bayes and Biased Coin

Pick a point uniformly at random in the unit square. Then

$$\Pr[A] = 0.5;\quad \Pr[\bar{A}] = 0.5;\quad \Pr[B \mid A] = 0.5;\quad \Pr[B \mid \bar{A}] = 0.6;\quad \Pr[A \cap B] = 0.5 \times 0.5,$$

$$\Pr[B] = 0.5 \times 0.5 + 0.5 \times 0.6 = \Pr[A]\Pr[B \mid A] + \Pr[\bar{A}]\Pr[B \mid \bar{A}],$$

$$\Pr[A \mid B] = \frac{0.5 \times 0.5}{0.5 \times 0.5 + 0.5 \times 0.6} = \frac{\Pr[A]\Pr[B \mid A]}{\Pr[A]\Pr[B \mid A] + \Pr[\bar{A}]\Pr[B \mid \bar{A}]} = \frac{5}{11} \approx 0.45,$$

which is the fraction of $B$ that is inside $A$.

Bayes: General Case

Pick a point uniformly at random in the unit square, where $A_1, \ldots, A_M$ partition the square. Then

$$\Pr[A_m] = p_m, \quad \Pr[B \mid A_m] = q_m, \quad \Pr[A_m \cap B] = p_m q_m, \qquad m = 1, \ldots, M;$$

$$\Pr[B] = p_1 q_1 + \cdots + p_M q_M;$$

$$\Pr[A_m \mid B] = \frac{p_m q_m}{p_1 q_1 + \cdots + p_M q_M} = \text{fraction of } B \text{ inside } A_m.$$

Bayes' Rule

Another picture:

$$\Pr[A_n \mid B] = \frac{p_n q_n}{\sum_m p_m q_m}.$$
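The general-case formula translates directly into a few lines of Python. This is a minimal sketch, not code from the lecture: the function name `posterior` is hypothetical, and it assumes the $A_m$ partition the sample space and that $\Pr[B] > 0$. Exact `Fraction` arithmetic makes the biased-coin answer come out as $5/11$ instead of a rounded float.

```python
from fractions import Fraction


def posterior(priors, likelihoods):
    """Bayes' rule: return [Pr[A_1 | B], ..., Pr[A_M | B]].

    priors[m]      = p_m = Pr[A_m]   (assumes the A_m partition the sample space)
    likelihoods[m] = q_m = Pr[B | A_m]
    """
    if len(priors) != len(likelihoods):
        raise ValueError("need exactly one likelihood per prior")
    joint = [p * q for p, q in zip(priors, likelihoods)]  # Pr[A_m ∩ B] = p_m q_m
    pr_b = sum(joint)                                     # Pr[B] = p_1 q_1 + ... + p_M q_M
    if pr_b == 0:
        raise ValueError("Pr[B] = 0, so Pr[A_m | B] is undefined")
    return [j / pr_b for j in joint]                      # fraction of B inside each A_m


# Biased-coin slide: A_1 = A, A_2 = complement of A.
half = Fraction(1, 2)
post = posterior([half, half], [half, Fraction(3, 5)])
print(post[0])  # 5/11
```

The posteriors always sum to 1, because every point of $B$ lies in exactly one $A_m$.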

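The "pick a point uniformly at random in the unit square" picture can also be simulated. This sketch assumes one particular layout, chosen here only to match the biased-coin numbers (it is hypothetical, not taken from the slide figure): $A$ is the left half of the square, and $B$ covers the bottom 0.5 of the left half and the bottom 0.6 of the right half. The estimated fraction of $B$-points that land inside $A$ should approach $5/11$.

```python
import random


def estimate_pr_a_given_b(n_points, seed=0):
    """Estimate Pr[A | B] by dropping uniform random points in the unit square.

    Hypothetical layout matching the biased-coin numbers:
      A = left half {x < 0.5};
      B = {y < 0.5} on the left half and {y < 0.6} on the right half,
    so Pr[A] = 0.5, Pr[B | A] = 0.5, Pr[B | not A] = 0.6.
    """
    rng = random.Random(seed)            # seeded so runs are reproducible
    in_b = in_a_and_b = 0
    for _ in range(n_points):
        x, y = rng.random(), rng.random()
        in_a = x < 0.5
        if y < (0.5 if in_a else 0.6):   # the point lands in B
            in_b += 1
            if in_a:
                in_a_and_b += 1
    return in_a_and_b / in_b             # fraction of B that lies inside A


print(estimate_pr_a_given_b(200_000))    # close to 5/11 ≈ 0.4545
```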
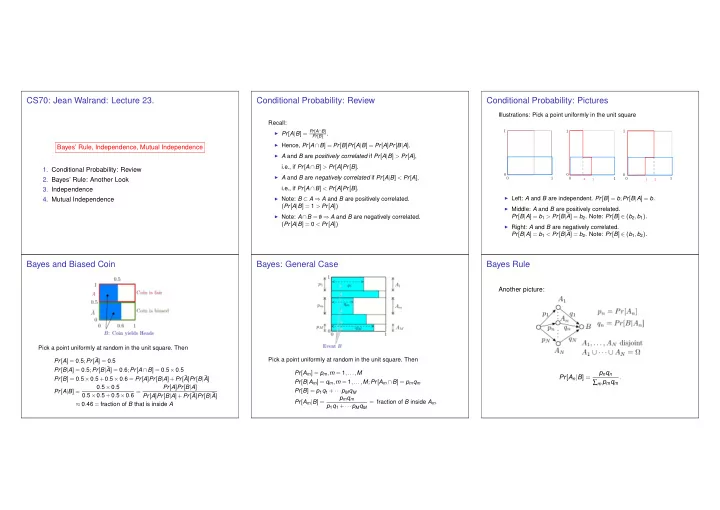

Why do you have a fever?

Our "Bayes' Square" picture: the unit square is split into Flu (prior 0.15), Ebola (prior ≈ 0) and Other (prior 0.85); the green region (Fever) covers a fraction 0.80 of Flu, all of Ebola, and 0.10 of Other. Hence about 59% of Fever is Flu, ≈ 0% is Ebola, and 41% is Other.

Using Bayes' rule, we find

$$\Pr[\text{Flu} \mid \text{High Fever}] = \frac{0.15 \times 0.80}{0.15 \times 0.80 + 10^{-8} \times 1 + 0.85 \times 0.1} \approx 0.59,$$

$$\Pr[\text{Ebola} \mid \text{High Fever}] = \frac{10^{-8} \times 1}{0.15 \times 0.80 + 10^{-8} \times 1 + 0.85 \times 0.1} \approx 5 \times 10^{-8},$$

$$\Pr[\text{Other} \mid \text{High Fever}] = \frac{0.85 \times 0.1}{0.15 \times 0.80 + 10^{-8} \times 1 + 0.85 \times 0.1} \approx 0.41.$$

The values $0.59$, $5 \times 10^{-8}$ and $0.41$ are the posterior probabilities.

One says that "Flu" is the Maximum A Posteriori (MAP) cause of the high fever. "Ebola" is the Maximum Likelihood Estimate (MLE) of the cause: it causes the fever with the largest probability. Recall that

$$p_m = \Pr[A_m], \quad q_m = \Pr[B \mid A_m], \quad \Pr[A_m \mid B] = \frac{p_m q_m}{p_1 q_1 + \cdots + p_M q_M}.$$

Thus,

- MAP = value of $m$ that maximizes $p_m q_m$ (equivalently $\Pr[A_m \mid B]$, since the denominator does not depend on $m$).
- MLE = value of $m$ that maximizes $q_m$.

Note that even though $\Pr[\text{Fever} \mid \text{Ebola}] = 1$, one has $\Pr[\text{Ebola} \mid \text{Fever}] \approx 0$. This example shows the importance of the prior probabilities.

Independence

Definition: Two events $A$ and $B$ are independent if

$$\Pr[A \cap B] = \Pr[A]\Pr[B].$$

Examples:

- When rolling two dice, $A$ = "sum is 7" and $B$ = "red die is 1" are independent;
- When rolling two dice, $A$ = "sum is 3" and $B$ = "red die is 1" are not independent;
- When flipping coins, $A$ = "coin 1 yields heads" and $B$ = "coin 2 yields tails" are independent;
- When throwing 3 balls into 3 bins, $A$ = "bin 1 is empty" and $B$ = "bin 2 is empty" are not independent.

Independence and conditional probability

Fact: Two events $A$ and $B$ (with $\Pr[B] > 0$) are independent if and only if $\Pr[A \mid B] = \Pr[A]$.

Indeed, $\Pr[A \mid B] = \dfrac{\Pr[A \cap B]}{\Pr[B]}$, so that

$$\Pr[A \mid B] = \Pr[A] \iff \frac{\Pr[A \cap B]}{\Pr[B]} = \Pr[A] \iff \Pr[A \cap B] = \Pr[A]\Pr[B].$$

Independence

Recall:

$$A \text{ and } B \text{ are independent} \iff \Pr[A \cap B] = \Pr[A]\Pr[B] \iff \Pr[A \mid B] = \Pr[A].$$

Consider the example below:

|       | $B$  | $\bar{B}$ |
|-------|------|-----------|
| $A_1$ | 0.10 | 0.15      |
| $A_2$ | 0.25 | 0.25      |
| $A_3$ | 0.15 | 0.10      |

- $(A_2, B)$ are independent: $\Pr[A_2 \mid B] = 0.5 = \Pr[A_2]$.
- $(A_2, \bar{B})$ are independent: $\Pr[A_2 \mid \bar{B}] = 0.5 = \Pr[A_2]$.
- $(A_1, B)$ are not independent: $\Pr[A_1 \mid B] = \dfrac{0.1}{0.5} = 0.2 \neq \Pr[A_1] = 0.25$.
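For the fever example earlier in this section, the MAP and MLE rules differ only in whether the prior $p_m$ is included. A minimal sketch with a hypothetical helper `map_and_mle`, using the slide's priors and likelihoods as inputs:

```python
def map_and_mle(priors, likelihoods):
    """Return (MAP cause, MLE cause, posteriors).

    priors[m]      = p_m = Pr[A_m]
    likelihoods[m] = q_m = Pr[B | A_m]
    MAP maximizes p_m * q_m (equivalently Pr[A_m | B]); MLE maximizes q_m alone.
    """
    joint = {m: priors[m] * likelihoods[m] for m in priors}   # p_m q_m
    pr_b = sum(joint.values())                                # Pr[B]
    posteriors = {m: joint[m] / pr_b for m in joint}
    map_cause = max(joint, key=joint.get)
    mle_cause = max(likelihoods, key=likelihoods.get)
    return map_cause, mle_cause, posteriors


# Numbers from the fever example (B = high fever).
priors = {"Flu": 0.15, "Ebola": 1e-8, "Other": 0.85}
likelihoods = {"Flu": 0.80, "Ebola": 1.0, "Other": 0.10}
map_cause, mle_cause, post = map_and_mle(priors, likelihoods)
print(map_cause, mle_cause)   # Flu Ebola
for cause, p in post.items():
    print(f"Pr[{cause} | High Fever] ≈ {p:.3g}")
```

Dropping the prior is exactly what makes the MLE pick Ebola: its likelihood of 1 beats 0.80, even though its prior of $10^{-8}$ makes it almost irrelevant a posteriori.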

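The independence examples above can be verified by exact enumeration over equally likely outcomes. This sketch assumes a uniform distribution on a finite sample space; `pr`, `independent` and `red_is_1` are hypothetical helper names. The last part reads the joint table row by row and prints $\Pr[A_i]$ next to $\Pr[A_i \mid B]$.

```python
from fractions import Fraction
from itertools import product


def pr(event, outcomes):
    """Pr[event] when every outcome in the finite list `outcomes` is equally likely."""
    return Fraction(sum(1 for w in outcomes if event(w)), len(outcomes))


def independent(a, b, outcomes):
    """A and B are independent iff Pr[A ∩ B] = Pr[A] Pr[B]."""
    both = pr(lambda w: a(w) and b(w), outcomes)
    return both == pr(a, outcomes) * pr(b, outcomes)


def red_is_1(w):
    return w[0] == 1


dice = list(product(range(1, 7), repeat=2))      # (red, other die): 36 outcomes
print(independent(lambda w: sum(w) == 7, red_is_1, dice))   # True
print(independent(lambda w: sum(w) == 3, red_is_1, dice))   # False

coins = list(product("HT", repeat=2))             # (coin 1, coin 2): 4 outcomes
print(independent(lambda w: w[0] == "H", lambda w: w[1] == "T", coins))  # True

balls = list(product(range(1, 4), repeat=3))      # bin of each of 3 balls: 27 outcomes
print(independent(lambda w: 1 not in w, lambda w: 2 not in w, balls))    # False

# Joint table from the slide: row -> (Pr[A_i ∩ B], Pr[A_i ∩ not B]).
table = {
    "A1": (Fraction(10, 100), Fraction(15, 100)),
    "A2": (Fraction(25, 100), Fraction(25, 100)),
    "A3": (Fraction(15, 100), Fraction(10, 100)),
}
pr_b = sum(with_b for with_b, _ in table.values())          # Pr[B] = 1/2
for name, (with_b, without_b) in table.items():
    pr_a = with_b + without_b                              # Pr[A_i]
    print(name, pr_a, with_b / pr_b)                       # Pr[A_i] vs Pr[A_i | B]
```

Only the $A_2$ row prints two equal numbers, matching the slide's conclusion that $A_2$ is the row independent of $B$.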
Pairwise Independence

Flip two fair coins. Let

- $A$ = "first coin is H" = $\{HT, HH\}$;
- $B$ = "second coin is H" = $\{TH, HH\}$;
- $C$ = "the two coins are different" = $\{TH, HT\}$.

$A, C$ are independent; $B, C$ are independent; but $A \cap B, C$ are not independent: $\Pr[A \cap B \cap C] = 0 \neq \Pr[A \cap B]\Pr[C] = 1/8$.

One might expect that if $A$ says nothing about $C$ and $B$ says nothing about $C$, then $A \cap B$ says nothing about $C$. This example shows that this intuition is wrong.

Example 2

Flip a fair coin 5 times. Let $A_n$ = "coin $n$ is H", for $n = 1, \ldots, 5$. Then $A_m, A_n$ are independent for all $m \neq n$.

Also, $A_1$ and $A_3 \cap A_5$ are independent. Indeed,

$$\Pr[A_1 \cap (A_3 \cap A_5)] = \frac{1}{8} = \Pr[A_1]\Pr[A_3 \cap A_5].$$

Similarly, $A_1 \cap A_2$ and $A_3 \cap A_4 \cap A_5$ are independent. This leads to a definition ....

Mutual Independence

Definition (Mutual Independence).

(a) The events $A_1, \ldots, A_5$ are mutually independent if

$$\Pr\Big[\bigcap_{k \in K} A_k\Big] = \prod_{k \in K} \Pr[A_k], \quad \text{for all } K \subseteq \{1, \ldots, 5\}.$$

(b) More generally, the events $\{A_j, j \in J\}$ are mutually independent if

$$\Pr\Big[\bigcap_{k \in K} A_k\Big] = \prod_{k \in K} \Pr[A_k], \quad \text{for all finite } K \subseteq J.$$

Thus, $\Pr[A_1 \cap A_2] = \Pr[A_1]\Pr[A_2]$, $\Pr[A_1 \cap A_3 \cap A_4] = \Pr[A_1]\Pr[A_3]\Pr[A_4]$, ....

Example: Flip a fair coin forever. Let $A_n$ = "coin $n$ is H." Then the events $A_n$ are mutually independent.

Mutual Independence

Theorem

(a) If the events $\{A_j, j \in J\}$ are mutually independent and if $K_1$ and $K_2$ are disjoint finite subsets of $J$, then any event $V_1$ defined by $\{A_j, j \in K_1\}$ is independent of any event $V_2$ defined by $\{A_j, j \in K_2\}$.

(b) More generally, if the $K_n$ are pairwise disjoint finite subsets of $J$, then events $V_n$ defined by $\{A_j, j \in K_n\}$ are mutually independent.

Proof: See Lecture Note 25, Example 2.7.

For instance, the fact that there are more heads than tails in the first five flips of a coin is independent of the fact that there are fewer heads than tails in flips $6, \ldots, 13$.

Mutual Independence: Complements

Here is one step in the proof of the previous theorem.

Fact: Assume $A, B, C, \ldots, G, H$ are mutually independent. Then $A, B^c, C, \ldots, G^c, H$ are mutually independent.

Proof: We show that

$$\Pr[A \cap B^c \cap C \cap \cdots \cap G^c \cap H] = \Pr[A]\Pr[B^c]\cdots\Pr[G^c]\Pr[H].$$

(The same argument applies to every subcollection of these events.) Assume that this is true when there are at most $n$ complements.

Base case: $n = 0$ is true by definition of mutual independence.

Induction step: Assume true for $n$. Check for $n + 1$:

$$A \cap B^c \cap C \cap \cdots \cap G^c \cap H = (A \cap B^c \cap C \cap \cdots \cap F \cap H) \setminus (A \cap B^c \cap C \cap \cdots \cap G \cap H).$$

Both events on the right involve at most $n$ complements, so the induction hypothesis applies to each. Hence,

$$\begin{aligned}
\Pr[A \cap B^c \cap C \cap \cdots \cap G^c \cap H]
&= \Pr[A \cap B^c \cap C \cap \cdots \cap F \cap H] - \Pr[A \cap B^c \cap C \cap \cdots \cap G \cap H] \\
&= \Pr[A]\Pr[B^c]\cdots\Pr[F]\Pr[H] - \Pr[A]\Pr[B^c]\cdots\Pr[F]\Pr[G]\Pr[H] \\
&= \Pr[A]\Pr[B^c]\cdots\Pr[F]\Pr[H]\,(1 - \Pr[G]) \\
&= \Pr[A]\Pr[B^c]\cdots\Pr[F]\Pr[G^c]\Pr[H].
\end{aligned}$$

Summary: Bayes' Rule, Independence, Mutual Independence

Main results:

- Bayes' Rule: $\Pr[A_m \mid B] = p_m q_m / (p_1 q_1 + \cdots + p_M q_M)$.
- Mutual Independence: Events defined by disjoint collections of mutually independent events are mutually independent.
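The two-coin pairwise-independence example can be checked by brute force: every pair among $A$, $B$, $C$ passes the product test, but the triple fails it. This sketch uses hypothetical helper names and enumerates the four equally likely outcomes as strings.

```python
from fractions import Fraction
from itertools import combinations, product

omega = {"".join(w) for w in product("HT", repeat=2)}   # {HH, HT, TH, TT}, equally likely


def pr(event):
    """Pr[event] for a set of outcomes, uniform on omega."""
    return Fraction(len(event), len(omega))


def mutually_independent(events):
    """True iff Pr[∩_{k∈K} A_k] = Π_{k∈K} Pr[A_k] for every K with |K| >= 2."""
    for r in range(2, len(events) + 1):
        for subset in combinations(events, r):
            inter = set(omega)
            product_of_prs = Fraction(1)
            for e in subset:
                inter &= e
                product_of_prs *= pr(e)
            if pr(inter) != product_of_prs:
                return False
    return True


A = {w for w in omega if w[0] == "H"}   # first coin is H
B = {w for w in omega if w[1] == "H"}   # second coin is H
C = {w for w in omega if w[0] != w[1]}  # the two coins are different

for x, y in [(A, B), (A, C), (B, C)]:
    print(mutually_independent([x, y]))     # True for every pair
print(mutually_independent([A, B, C]))      # False
print(pr(A & B & C), pr(A & B) * pr(C))     # 0 1/8
```

For two events, `mutually_independent` reduces to the ordinary product test, which is why the same function covers both the pairwise and the mutual checks.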

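A similar brute-force sketch illustrates the complements fact and the theorem on disjoint collections for fair coin flips. It enumerates 13 flips exactly ($2^{13} = 8192$ outcomes); the helpers `coin`, `all_of` and `mutually_independent` are hypothetical names. Enumeration only confirms these particular instances; it is not a proof.

```python
from fractions import Fraction
from itertools import combinations, product

N_FLIPS = 13
omega = list(product((0, 1), repeat=N_FLIPS))   # 1 = heads; 2**13 equally likely outcomes


def pr(event):
    """Pr[event] for a predicate on outcomes, uniform on omega."""
    return Fraction(sum(1 for w in omega if event(w)), len(omega))


def coin(n, heads=True):
    """A_n = 'coin n is H' (1-based n), or its complement when heads=False."""
    target = 1 if heads else 0
    return lambda w: w[n - 1] == target


def all_of(events):
    """Intersection of events given as predicates."""
    return lambda w: all(e(w) for e in events)


def mutually_independent(events):
    """Check Pr[∩_{k∈K} A_k] = Π_{k∈K} Pr[A_k] for every K with |K| >= 2."""
    for r in range(2, len(events) + 1):
        for subset in combinations(events, r):
            product_of_prs = Fraction(1)
            for e in subset:
                product_of_prs *= pr(e)
            if pr(all_of(subset)) != product_of_prs:
                return False
    return True


# Complements fact: A_1, A_2^c, A_3, A_4^c, A_5 are still mutually independent.
mixed = [coin(1), coin(2, heads=False), coin(3), coin(4, heads=False), coin(5)]
print(mutually_independent(mixed))   # True

# A_1 and A_3 ∩ A_5 are independent (both sides equal 1/8).
print(mutually_independent([coin(1), all_of([coin(3), coin(5)])]))   # True


def more_heads_first_five(w):
    heads = sum(w[0:5])
    return heads > 5 - heads


def fewer_heads_flips_6_to_13(w):
    heads = sum(w[5:13])
    return heads < 8 - heads


v1, v2 = more_heads_first_five, fewer_heads_flips_6_to_13
print(pr(all_of([v1, v2])) == pr(v1) * pr(v2))   # True
```

Because `Fraction` arithmetic is exact, each `True` is an exact equality for this finite model rather than a floating-point coincidence.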