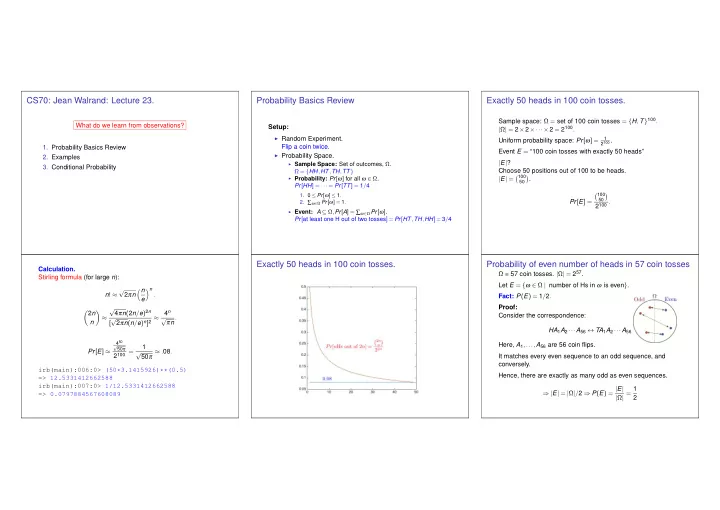

CS70: Jean Walrand: Lecture 23.

What do we learn from observations?

1. Probability Basics Review
2. Examples
3. Conditional Probability

Probability Basics Review

Setup:
◮ Random Experiment. Flip a coin twice.
◮ Probability Space.
◮ Sample Space: Set of outcomes, $\Omega$. $\Omega = \{HH, HT, TH, TT\}$
◮ Probability: $\Pr[\omega]$ for all $\omega \in \Omega$. $\Pr[HH] = \cdots = \Pr[TT] = 1/4$
1. $0 \le \Pr[\omega] \le 1$.
2. $\sum_{\omega \in \Omega} \Pr[\omega] = 1$.
◮ Event: $A \subseteq \Omega$, $\Pr[A] = \sum_{\omega \in A} \Pr[\omega]$. $\Pr[\text{at least one H out of two tosses}] = \Pr[\{HT, TH, HH\}] = 3/4$

Exactly 50 heads in 100 coin tosses.

Sample space: $\Omega$ = set of 100 coin tosses $= \{H, T\}^{100}$.
$|\Omega| = 2 \times 2 \times \cdots \times 2 = 2^{100}$.
Uniform probability space: $\Pr[\omega] = \frac{1}{2^{100}}$.
Event $E$ = "100 coin tosses with exactly 50 heads".
$|E|$? Choose 50 positions out of 100 to be heads.
$|E| = \binom{100}{50}$.
$\Pr[E] = \frac{\binom{100}{50}}{2^{100}}$.

Exactly 50 heads in 100 coin tosses. Calculation.

Stirling formula (for large $n$):
$$n! \approx \sqrt{2\pi n}\,\left(\frac{n}{e}\right)^n.$$
$$\binom{2n}{n} \approx \frac{\sqrt{4\pi n}\,(2n/e)^{2n}}{\left[\sqrt{2\pi n}\,(n/e)^n\right]^2} \approx \frac{4^n}{\sqrt{\pi n}}.$$
$$\Pr[E] = \frac{\binom{100}{50}}{2^{100}} \approx \frac{4^{50}}{\sqrt{50\pi}\; 2^{100}} = \frac{1}{\sqrt{50\pi}} \approx 0.08.$$

    irb(main):006:0> (50*3.1415926)**(0.5)
    => 12.5331412662588
    irb(main):007:0> 1/12.5331412662588
    => 0.0797884567608089

Probability of even number of heads in 57 coin tosses

$\Omega$ = 57 coin tosses. $|\Omega| = 2^{57}$.
Let $E = \{\omega \in \Omega \mid \text{number of Hs in } \omega \text{ is even}\}$.
Fact: $\Pr[E] = 1/2$.
Proof: Consider the correspondence
$$H A_1 A_2 \cdots A_{56} \leftrightarrow T A_1 A_2 \cdots A_{56}.$$
Here, $A_1, \ldots, A_{56}$ are 56 coin flips.
It matches every even sequence to an odd sequence, and conversely.
Hence, there are exactly as many odd as even sequences.
$\Rightarrow |E| = |\Omega|/2 \Rightarrow \Pr[E] = \frac{|E|}{|\Omega|} = \frac{1}{2}$.
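The counting results above can be checked numerically. The following is a minimal Ruby sketch, not part of the lecture: the helper names `choose`, `pr_heads`, `stirling_estimate`, and `even_head_sequences` are illustrative assumptions. It compares the exact value of $\binom{100}{50}/2^{100}$ with the Stirling estimate $1/\sqrt{50\pi}$ and confirms that exactly half of the $2^{57}$ sequences have an even number of heads.

```ruby
# Binomial coefficient C(n, k) with exact integer arithmetic.
# Each step turns C(n-k+i-1, i-1) into C(n-k+i, i), so the division is exact.
def choose(n, k)
  (1..k).reduce(1) { |acc, i| acc * (n - k + i) / i }
end

# Exact Pr[exactly k heads in n fair tosses] = C(n, k) / 2^n.
def pr_heads(n, k)
  Rational(choose(n, k), 2**n)
end

# Stirling-based estimate 1 / sqrt(pi * n) for Pr[n heads in 2n tosses].
def stirling_estimate(n)
  1.0 / Math.sqrt(Math::PI * n)
end

# Number of length-n sequences with an even number of heads.
def even_head_sequences(n)
  (0..n).step(2).sum { |k| choose(n, k) }
end

puts pr_heads(100, 50).to_f            # exact value, close to 0.0796
puts stirling_estimate(50)             # Stirling estimate, close to 0.0798
puts even_head_sequences(57) == 2**56  # true: half of the 2^57 sequences
```

The exact probability and the estimate agree to about three decimal places, which is consistent with the "≈ .08" on the slide.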

Probability more heads than tails in 100 coin tosses.

$\Omega$ = 100 coin tosses. $|\Omega| = 2^{100}$.
Recall event $E$ = 'equal heads and tails'.
Event $F$ = 'more heads than tails'.
Event $G$ = 'more tails than heads'.
A 1-to-1 correspondence between outcomes in $F$ and $G$! $|F| = |G|$.
$E$, $F$ and $G$ are disjoint.
$\Pr[E] \approx 8\%$.
$|\Omega| = |E| + |F| + |G|$.
$\Rightarrow 1 = \Pr[\Omega] = \Pr[E] + 2\Pr[F] \approx 8\% + 2\Pr[F]$.
Solve for $\Pr[F]$: $\Pr[F] \approx 46\%$.

Probability of n heads in 100 coin tosses.

$\Omega$ = 100 coin tosses. $|\Omega| = 2^{100}$.
Event $E_n$ = '$n$ heads'; $|E_n| = \binom{100}{n}$.
$$p_n = \Pr[E_n] = \frac{|E_n|}{|\Omega|} = \frac{\binom{100}{n}}{2^{100}}$$
[Figure: plot of $p_n$ against $n$.]

Roll a red and a blue die.

$\Omega = \{(a, b) : 1 \le a, b \le 6\} = \{1, 2, \ldots, 6\}^2$.
Uniform: $\Pr[\omega] = \frac{1}{|\Omega|} = \frac{1}{36}$ for all $\omega$.

What is the probability of
1. the red die showing 6?
$E_1 = \{(6, b) : 1 \le b \le 6\}$, $|E_1| = 6$, $\Pr[E_1] = |E_1|/|\Omega| = 1/6$.
2. at least one die showing 6?
$E_1 = \{(6, b) : 1 \le b \le 6\}$ = red die shows 6.
$E_2 = \{(a, 6) : 1 \le a \le 6\}$ = blue die shows 6.
$E = E_1 \cup E_2$ = red or blue die (or both) show 6.
$|E| = |E_1| + |E_2| - |E_1 \cap E_2|$ [Inclusion/Exclusion]
$E_1 \cap E_2 = \{(6, 6)\}$, so $|E_1 \cap E_2| = 1$.
$|E| = 6 + 6 - 1 = 11$.
$\Pr[E] = 11/36$.

What is the probability of
1. the dice sum to 7?
$E = \{(1, 6), (2, 5), (3, 4), (4, 3), (5, 2), (6, 1)\}$; $|E| = 6$.
Counting argument: for each choice $a$ of the value of the red die, there is exactly one choice $b = 7 - a$ for the blue die, so there are 6 total choices.
$\Pr[E] = |E|/|\Omega| = 6/36 = 1/6$.
2. the dice sum to 10?
$E = \{(4, 6), (5, 5), (6, 4)\}$.
$\Pr[E] = |E|/|\Omega| = 3/36 = 1/12$.

Inclusion/Exclusion

Roll a red and a blue die.
$E_1$ = 'Red die shows 6'; $E_2$ = 'Blue die shows 6'.
$E_1 \cup E_2$ = 'At least one die shows 6'.
$\Pr[E_1] = \frac{6}{36}$, $\Pr[E_2] = \frac{6}{36}$, $\Pr[E_1 \cup E_2] = \frac{11}{36}$.
Note that
$$\Pr[A \cup B] = \Pr[A] + \Pr[B] - \Pr[A \cap B]$$
whether or not the sample space has uniform distribution.
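The dice probabilities above can be reproduced by listing all 36 outcomes and counting. This is a small Ruby sketch under the uniform model from the slides; the method names (`omega`, `pr`, `red_six`, `blue_six`, `sum_to`) are illustrative choices, not from the lecture.

```ruby
# Sample space for a red and a blue die: all 36 ordered pairs [red, blue].
def omega
  faces = (1..6).to_a
  faces.product(faces)
end

# Uniform probability of an event, given as an array of outcomes.
def pr(event)
  Rational(event.size, omega.size)
end

# Event: the red die shows 6.
def red_six
  omega.select { |red, _blue| red == 6 }
end

# Event: the blue die shows 6.
def blue_six
  omega.select { |_red, blue| blue == 6 }
end

# Event: the two dice sum to the given total.
def sum_to(total)
  omega.select { |red, blue| red + blue == total }
end

puts pr(red_six | blue_six)                               # 11/36, direct count
puts pr(red_six) + pr(blue_six) - pr(red_six & blue_six)  # 11/36, inclusion/exclusion
puts pr(sum_to(7))                                        # 1/6
puts pr(sum_to(10))                                       # 1/12
```

Using `Rational` keeps every probability exact, so the direct count and the inclusion/exclusion formula can be compared with `==` rather than with a floating-point tolerance.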

Roll two blue dice.

The key idea is that we do not distinguish the dice. Roll die 1, then die 2. Then forget the order. For instance, we consider that $(2, 5)$ and $(5, 2)$ are the same outcome. We designate this outcome by $(2, 5)$. Thus,
$$\Omega' = \{(a, b) \mid 1 \le a \le b \le 6\}.$$
We see that $\Pr[(1, 3)] = \frac{2}{36}$ and $\Pr[(2, 2)] = \frac{1}{36}$.

Two different models of the same random experiment. In $\Omega'$, $\Pr[(1, 3)] = \frac{2}{36}$ and $\Pr[(2, 2)] = \frac{1}{36}$.

What is the probability of the dice sum to 7?
In $\Omega$, $\Pr[A] = \frac{6}{36}$; in $\Omega'$, $\Pr[B] = 3 \times \frac{2}{36}$.
Of course, this is the same as for distinguishable dice! The event does not depend on the dice being distinguishable.

Now what is the probability of at least one die showing 6?
In $\Omega$, $\Pr[A] = \frac{11}{36}$; in $\Omega'$, $\Pr[B] = 5 \times \frac{2}{36} + 1 \times \frac{1}{36}$.
Of course, this is the same as for distinguishable dice! The event does not depend on the dice being distinguishable.

Really not uniform! and not finite!

◮ Experiment: Toss a coin with $\Pr[H] = 2/3$ three times.
◮ $\Omega = \{HHH, HHT, HTH, HTT, THH, THT, TTH, TTT\}$.
◮ $\Pr[HHH] = \left(\frac{2}{3}\right)^3$; $\Pr[HHT] = \left(\frac{2}{3}\right)^2 \left(\frac{1}{3}\right)$; ...
◮ Toss a fair coin until you get heads.
◮ $\Omega = \{H, TH, TTH, TTTH, \ldots\}$
◮ $\Pr[H] = \frac{1}{2}$, $\Pr[TH] = \frac{1}{4}$, $\Pr[TTH] = \frac{1}{8}$
◮ Still sums to 1. Indeed $\frac{1}{2} + \frac{1}{4} + \frac{1}{8} + \frac{1}{16} + \cdots = 1$.
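The non-uniform models above can be built mechanically from the uniform one. The Ruby sketch below is an illustration only, with hypothetical helper names: it collapses ordered pairs into unordered ones to obtain the probabilities on $\Omega'$, then checks the biased-coin and toss-until-heads probabilities.

```ruby
# Ordered model: all 36 equally likely pairs [die 1, die 2].
def ordered_outcomes
  faces = (1..6).to_a
  faces.product(faces)
end

# Unordered model: sorting maps [5, 2] and [2, 5] to the same key [2, 5];
# each ordered pair contributes its probability 1/36 to that key.
def unordered_model
  ordered_outcomes.each_with_object(Hash.new(0)) do |pair, table|
    table[pair.sort] += Rational(1, 36)
  end
end

# Probability, in the unordered model, of the outcomes [a, b] with a <= b
# for which the given block returns true.
def pr_unordered
  unordered_model.sum { |(a, b), prob| yield(a, b) ? prob : 0 }
end

# Probability of one outcome string such as "HHT" when Pr[H] = 2/3.
def pr_biased(outcome)
  outcome.each_char.map { |c| c == "H" ? Rational(2, 3) : Rational(1, 3) }.reduce(:*)
end

# Partial sum 1/2 + 1/4 + ... + 1/2^k for "toss until heads".
def geometric_partial_sum(k)
  (1..k).sum { |i| Rational(1, 2**i) }
end

puts unordered_model[[1, 3]]                  # 1/18, that is 2/36
puts unordered_model[[2, 2]]                  # 1/36
puts pr_unordered { |_a, b| b == 6 }          # 11/36, at least one 6
puts pr_unordered { |a, b| a + b == 7 }       # 1/6, sum to 7
puts pr_biased("HHT")                         # 4/27
puts geometric_partial_sum(10)                # 1023/1024, approaching 1
```

Because the pairs are sorted, "at least one die shows 6" reduces to checking the larger entry, which is why the block only tests `b == 6`.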

Set notation review

[Figures: Venn diagrams in $\Omega$ of the following.]
◮ Two events: $A$, $B$
◮ Union (or): $A \cup B$
◮ Difference ($A$, not $B$): $A \setminus B$
◮ Complement (not): $\bar{A}$
◮ Intersection (and): $A \cap B$
◮ Symmetric difference (only one): $A \,\Delta\, B$

Conditional probability: example.

Two coin flips. First flip is heads. Probability of two heads?
$\Omega = \{HH, HT, TH, TT\}$; uniform probability space.
Event $A$ = first flip is heads: $A = \{HH, HT\}$.
New sample space: $A$; uniform still.
Event $B$ = two heads.
The probability of two heads if the first flip is heads.
The probability of $B$ given $A$ is $1/2$.

A similar example.

Two coin flips. One of the flips is heads. Probability of two heads?
$\Omega = \{HH, HT, TH, TT\}$; uniform.
Event $A$ = one flip is heads. $A = \{HH, HT, TH\}$.
New sample space: $A$; uniform still.
Event $B$ = two heads.
The probability of two heads if at least one flip is heads.
The probability of $B$ given $A$ is $1/3$.

Conditional Probability: A non-uniform example

Consider $\Omega = \{1, 2, \ldots, N\}$ with $\Pr[n] = p_n$.
$$\Pr[3 \mid B] = \frac{p_3}{p_1 + p_2 + p_3} = \frac{p_3}{\Pr[B]}.$$
$\omega \notin B \Rightarrow \Pr[\omega \mid B] = 0$.

Another non-uniform example

Consider $\Omega = \{1, 2, \ldots, N\}$ with $\Pr[n] = p_n$.
$$\Pr[A \mid B] = \frac{p_3}{p_1 + p_2 + p_3} = \frac{\Pr[A \cap B]}{\Pr[B]}.$$

Yet another non-uniform example

Consider $\Omega = \{1, 2, \ldots, N\}$ with $\Pr[n] = p_n$.
$$\Pr[A \mid B] = \frac{p_2 + p_3}{p_1 + p_2 + p_3} = \frac{\Pr[A \cap B]}{\Pr[B]}.$$
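The two coin-flip examples above follow the same recipe: restrict the uniform sample space to $A$, then count the outcomes that are also in $B$. A minimal Ruby sketch of that recipe follows; the names `flips` and `conditional_uniform` are assumptions made for illustration.

```ruby
# Uniform sample space for two coin flips.
def flips
  %w[HH HT TH TT]
end

# Pr[B | A] on a uniform space: A becomes the new sample space,
# and we count the outcomes of A that also lie in B.
def conditional_uniform(b, a)
  Rational((a & b).size, a.size)
end

two_heads   = flips.select { |w| w == "HH" }
first_heads = flips.select { |w| w.start_with?("H") }
some_heads  = flips.select { |w| w.include?("H") }

puts conditional_uniform(two_heads, first_heads)  # 1/2
puts conditional_uniform(two_heads, some_heads)   # 1/3
```

The only difference between the two answers is the size of the conditioning event: 2 outcomes when the first flip is known to be heads, 3 when only "at least one heads" is known.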

Conditional Probability.

$\Pr[\omega \mid A]$: If in $A$, what is the probability of outcome $\omega$?
If $\omega \notin A$, probability is 0.
Otherwise: Ratio of probability of $\omega$ to total probability of $A$:
$$\Pr[\omega \mid A] = \frac{\Pr[\omega]}{\Pr[A]}.$$
Uniform Probability Space: Ratio of $1/|\Omega|$ to $|A|/|\Omega|$ $\Longrightarrow$ $1/|A|$. (Makes sense!)

Definition: The conditional probability of $B$ given $A$ is
$$\Pr[B \mid A] = \sum_{\omega \in B} \Pr[\omega \mid A] = \sum_{\omega \in A \cap B} \frac{\Pr[\omega]}{\Pr[A]} = \frac{\Pr[A \cap B]}{\Pr[A]}.$$

[Figure: Venn diagram of events $A$ and $B$ with the intersection $A \cap B$.]
In $A$! In $B$? Must be in $A \cap B$.
$$\Pr[B \mid A] = \frac{\Pr[A \cap B]}{\Pr[A]}.$$

What do we learn from observations?

You observe that the event $B$ occurs. That changes your information about the probability of every event $A$.
The conditional probability of $A$ given $B$ is
$$\Pr[A \mid B] = \frac{\Pr[A \cap B]}{\Pr[B]}.$$
Note: $\Pr[A \cap B] = \Pr[B] \times \Pr[A \mid B] = \Pr[A] \times \Pr[B \mid A]$.
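The definition above also works on a non-uniform space. The Ruby sketch below is only an illustration: the distribution `prob` on $\{1, 2, 3, 4\}$ and the events `a` and `b` are hypothetical values chosen for the example, since the slides leave $p_n$, $A$ and $B$ unspecified. It evaluates $\Pr[A \mid B]$ from the definition and checks the product identity $\Pr[A \cap B] = \Pr[B] \times \Pr[A \mid B] = \Pr[A] \times \Pr[B \mid A]$.

```ruby
# Hypothetical distribution p_n on {1, 2, 3, 4} (an assumption for this example).
def prob
  { 1 => Rational(1, 10), 2 => Rational(2, 10), 3 => Rational(3, 10), 4 => Rational(4, 10) }
end

# Pr[event] = sum of Pr[w] over the outcomes w in the event.
def pr(event)
  event.sum(Rational(0)) { |w| prob.fetch(w) }
end

# Pr[a | b] = Pr[a ∩ b] / Pr[b]; assumes Pr[b] > 0.
def conditional(a, b)
  pr(a & b) / pr(b)
end

a = [3, 4]      # hypothetical event A
b = [1, 2, 3]   # hypothetical event B

puts conditional(a, b)              # 1/2, that is p3 / (p1 + p2 + p3)
puts conditional(b, a)              # 3/7, that is p3 / (p3 + p4)
puts pr(b) * conditional(a, b)      # 3/10
puts pr(a) * conditional(b, a)      # 3/10, the same value Pr[A ∩ B]
puts conditional([4], b)            # 0/1, since outcome 4 is not in B
```

Both products equal $\Pr[A \cap B] = p_3$, which is the identity stated in the note on the last slide.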
