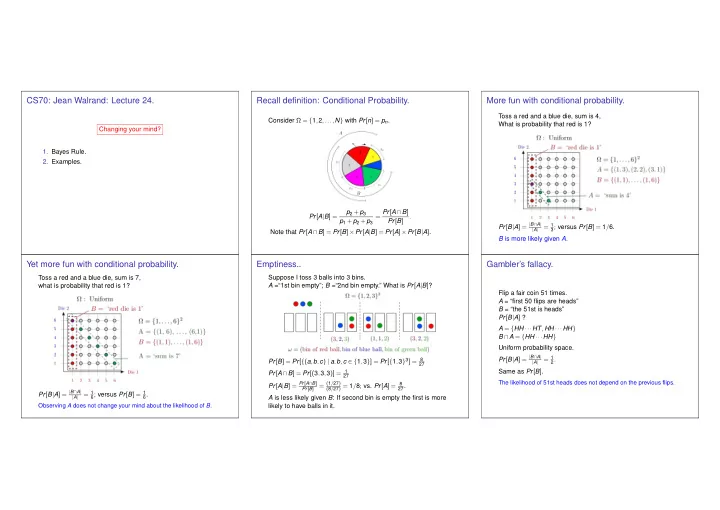

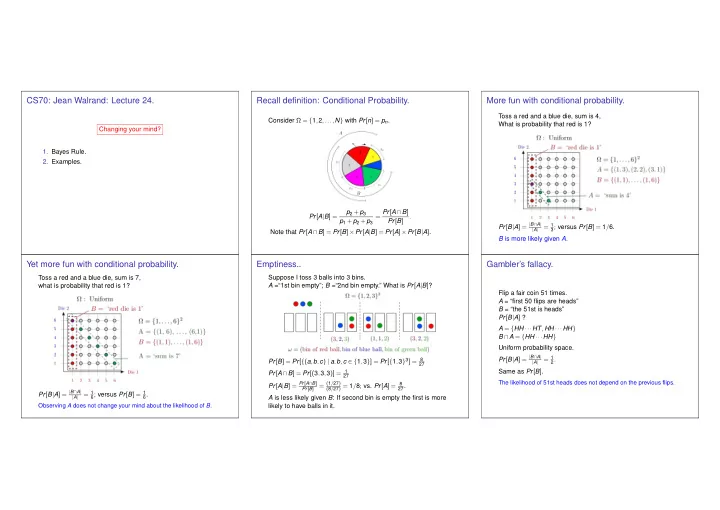

CS70: Jean Walrand: Lecture 24.

Changing your mind?

1. Bayes Rule.
2. Examples.

Recall definition: Conditional Probability.

Consider $\Omega = \{1, 2, \ldots, N\}$ with $\Pr[n] = p_n$. Take events $A$ and $B$ with $A \cap B = \{2, 3\}$ and $B = \{1, 2, 3\}$. Then

$$\Pr[A \mid B] = \frac{p_2 + p_3}{p_1 + p_2 + p_3} = \frac{\Pr[A \cap B]}{\Pr[B]}.$$

Note that $\Pr[A \cap B] = \Pr[B] \times \Pr[A \mid B] = \Pr[A] \times \Pr[B \mid A]$.

More fun with conditional probability.

Toss a red and a blue die; the sum is 4. What is the probability that red is 1? With $A$ = "sum is 4" and $B$ = "red is 1",

$$\Pr[B \mid A] = \frac{|B \cap A|}{|A|} = \frac{1}{3}; \quad \text{versus } \Pr[B] = \frac{1}{6}.$$

$B$ is more likely given $A$.

Yet more fun with conditional probability.

Toss a red and a blue die; the sum is 7. What is the probability that red is 1? With $A$ = "sum is 7" and $B$ = "red is 1",

$$\Pr[B \mid A] = \frac{|B \cap A|}{|A|} = \frac{1}{6}; \quad \text{versus } \Pr[B] = \frac{1}{6}.$$

Observing $A$ does not change your mind about the likelihood of $B$.

Emptiness.

Suppose I toss 3 balls into 3 bins. $A$ = "1st bin empty"; $B$ = "2nd bin empty." What is $\Pr[A \mid B]$?

$$\Pr[B] = \Pr[\{(a, b, c) \mid a, b, c \in \{1, 3\}\}] = \Pr[\{1, 3\}^3] = \frac{8}{27}$$

$$\Pr[A \cap B] = \Pr[(3, 3, 3)] = \frac{1}{27}$$

$$\Pr[A \mid B] = \frac{\Pr[A \cap B]}{\Pr[B]} = \frac{1/27}{8/27} = \frac{1}{8}; \quad \text{vs. } \Pr[A] = \frac{8}{27}.$$

$A$ is less likely given $B$: if the second bin is empty, the first is more likely to have balls in it.

Gambler's fallacy.

Flip a fair coin 51 times. $A$ = "first 50 flips are heads"; $B$ = "the 51st is heads". $\Pr[B \mid A]$?

$A = \{HH \cdots HT, HH \cdots HH\}$ and $B \cap A = \{HH \cdots HH\}$. Uniform probability space.

$$\Pr[B \mid A] = \frac{|B \cap A|}{|A|} = \frac{1}{2}.$$

Same as $\Pr[B]$. The likelihood of the 51st heads does not depend on the previous flips.
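The dice and bin examples above all use a uniform space, so each conditional probability is a ratio of counts. The following is a minimal sketch that checks them by brute-force enumeration; the helper name `cond_prob` and the tuple encodings of outcomes are illustrative assumptions, not part of the lecture.

```python
from fractions import Fraction
from itertools import product


def cond_prob(omega, b, a):
    """Pr[b | a] on a uniform space: |b ∩ a| / |a| (hypothetical helper)."""
    a_outcomes = [w for w in omega if a(w)]
    both = [w for w in a_outcomes if b(w)]
    return Fraction(len(both), len(a_outcomes))


# Two dice, encoded as (red, blue).
dice = list(product(range(1, 7), repeat=2))
red_is_1 = lambda w: w[0] == 1
p_red1_given_sum4 = cond_prob(dice, red_is_1, lambda w: sum(w) == 4)
p_red1_given_sum7 = cond_prob(dice, red_is_1, lambda w: sum(w) == 7)

# Three balls into three bins: each coordinate is the bin chosen by one ball.
bins = list(product((1, 2, 3), repeat=3))
bin1_empty = lambda w: 1 not in w
bin2_empty = lambda w: 2 not in w
p_bin1_given_bin2 = cond_prob(bins, bin1_empty, bin2_empty)

print(p_red1_given_sum4, p_red1_given_sum7, p_bin1_given_bin2)
```

Exact fractions are used so the results can be compared directly with the values 1/3, 1/6, and 1/8 derived above.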

Monty Hall Game.

Monty Hall is the host of a game show. His assistant is Carol.

- Three doors; one prize, two goats.
- Choose one door, say door 1.
- Carol opens another door with a goat, say door 3.
- Monty offers you a chance to switch doors, i.e., choose door 2.
- What do you do?

Monty Hall Game.

Recall: You picked door 1 and Carol opened door 3. Should you switch to door 2?

First intuition: Doors 1 and 2 are equally likely to hide the prize: no need to switch. Opening door 3 did not tell us anything about doors 1 and 2. Wrong!

Better observation: If you switch, you get the prize, except if it is behind door 1. Thus, by switching, you get the prize with probability $2/3$. If you do not switch, you get it with probability $1/3$.

Monty Hall Game Analysis.

$\Omega = \{1, 2, 3\}^2$; $\omega = (a, b) = (\text{prize}, \text{your initial choice})$; uniform.

If you do not switch, you win if $a = b$. If you switch, you win if $a \neq b$. E.g., $(a, b) = (1, 2)$ → shows 3 → switch to 1.

$$\Pr[\{(a, b) \mid a = b\}] = \frac{3}{9} = \frac{1}{3}$$

$$\Pr[\{(a, b) \mid a \neq b\}] = 1 - \Pr[\{(a, b) \mid a = b\}] = \frac{2}{3}.$$

Independence.

Definition: Two events $A$ and $B$ are independent if

$$\Pr[A \cap B] = \Pr[A] \Pr[B].$$

Examples:

- When rolling two dice, $A$ = sum is 7 and $B$ = red die is 1 are independent;
- When rolling two dice, $A$ = sum is 3 and $B$ = red die is 1 are not independent;
- When flipping coins, $A$ = coin 1 yields heads and $B$ = coin 2 yields tails are independent;
- When throwing 3 balls into 3 bins, $A$ = bin 1 is empty and $B$ = bin 2 is empty are not independent.

Independence and conditional probability.

Fact: Two events $A$ and $B$ are independent if and only if

$$\Pr[A \mid B] = \Pr[A].$$

Indeed: $\Pr[A \mid B] = \frac{\Pr[A \cap B]}{\Pr[B]}$, so that

$$\Pr[A \mid B] = \Pr[A] \Leftrightarrow \frac{\Pr[A \cap B]}{\Pr[B]} = \Pr[A] \Leftrightarrow \Pr[A \cap B] = \Pr[A] \Pr[B].$$
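Both the Monty Hall analysis and the independence examples above can be checked by enumerating a small uniform space. This is a sketch under that assumption; the helpers `pr` and `independent` and the tuple encodings are hypothetical names introduced only for illustration.

```python
from fractions import Fraction
from itertools import product


def pr(omega, event):
    """Probability of an event (a predicate) on a uniform space omega."""
    return Fraction(sum(1 for w in omega if event(w)), len(omega))


def independent(omega, a, b):
    """Check the definition Pr[A ∩ B] = Pr[A] Pr[B] exactly."""
    return pr(omega, lambda w: a(w) and b(w)) == pr(omega, a) * pr(omega, b)


# Monty Hall: outcomes are (prize door, initial choice), 9 equally likely pairs.
monty = list(product((1, 2, 3), repeat=2))
p_stay = pr(monty, lambda w: w[0] == w[1])    # you win without switching
p_switch = pr(monty, lambda w: w[0] != w[1])  # you win by switching

# Two dice, encoded as (red, blue).
dice = list(product(range(1, 7), repeat=2))
red_is_1 = lambda w: w[0] == 1
sum7_indep = independent(dice, lambda w: sum(w) == 7, red_is_1)
sum3_indep = independent(dice, lambda w: sum(w) == 3, red_is_1)

print(p_stay, p_switch, sum7_indep, sum3_indep)
```

Comparing exact fractions avoids floating-point rounding when testing the product rule for equality.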

Total probability.

Here is a simple useful fact:

$$\Pr[B] = \Pr[A \cap B] + \Pr[\bar{A} \cap B].$$

Indeed, $B$ is the union of two disjoint sets $A \cap B$ and $\bar{A} \cap B$. Thus,

$$\Pr[B] = \Pr[A] \Pr[B \mid A] + \Pr[\bar{A}] \Pr[B \mid \bar{A}].$$

Total probability.

Assume that $\Omega$ is the union of the disjoint sets $A_1, \ldots, A_N$. Then,

$$\Pr[B] = \Pr[A_1 \cap B] + \cdots + \Pr[A_N \cap B].$$

Indeed, $B$ is the union of the disjoint sets $A_n \cap B$ for $n = 1, \ldots, N$. Thus,

$$\Pr[B] = \Pr[A_1] \Pr[B \mid A_1] + \cdots + \Pr[A_N] \Pr[B \mid A_N].$$

Is your coin loaded?

Your coin is fair w.p. $1/2$ or such that $\Pr[H] = 0.6$, otherwise. You flip your coin and it yields heads. What is the probability that it is fair?

Analysis: $A$ = 'coin is fair', $B$ = 'outcome is heads'. We want to calculate $\Pr[A \mid B]$. We know $\Pr[B \mid A] = 1/2$, $\Pr[B \mid \bar{A}] = 0.6$, $\Pr[A] = 1/2 = \Pr[\bar{A}]$.

Now,

$$\Pr[B] = \Pr[A \cap B] + \Pr[\bar{A} \cap B] = \Pr[A] \Pr[B \mid A] + \Pr[\bar{A}] \Pr[B \mid \bar{A}] = (1/2)(1/2) + (1/2)(0.6) = 0.55.$$

Thus,

$$\Pr[A \mid B] = \frac{\Pr[A] \Pr[B \mid A]}{\Pr[B]} = \frac{(1/2)(1/2)}{(1/2)(1/2) + (1/2)(0.6)} \approx 0.45.$$

Is your coin loaded?

A picture: Imagine 100 situations, among which $m := 100(1/2)(1/2)$ are such that $A$ and $B$ occur and $n := 100(1/2)(0.6)$ are such that $\bar{A}$ and $B$ occur. Thus, among the $m + n$ situations where $B$ occurred, there are $m$ where $A$ occurred. Hence,

$$\Pr[A \mid B] = \frac{m}{m + n} = \frac{(1/2)(1/2)}{(1/2)(1/2) + (1/2)(0.6)}.$$

Bayes Rule.

Another picture: We imagine that there are $N$ possible causes $A_1, \ldots, A_N$, with $p_n = \Pr[A_n]$ and $q_n = \Pr[B \mid A_n]$. Imagine 100 situations, among which $100 p_n q_n$ are such that $A_n$ and $B$ occur, for $n = 1, \ldots, N$. Thus, among the $100 \sum_m p_m q_m$ situations where $B$ occurred, there are $100 p_n q_n$ where $A_n$ occurred. Hence,

$$\Pr[A_n \mid B] = \frac{p_n q_n}{\sum_m p_m q_m}.$$
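The loaded-coin calculation is the two-cause case of the formula $p_n q_n / \sum_m p_m q_m$. A minimal sketch, assuming the causes are given as parallel lists of priors and likelihoods (the function name `bayes` is illustrative):

```python
from fractions import Fraction


def bayes(priors, likelihoods):
    """Return (Pr[B], [Pr[A_n | B] for each n]).

    priors[n] plays the role of p_n = Pr[A_n];
    likelihoods[n] plays the role of q_n = Pr[B | A_n].
    """
    joint = [p * q for p, q in zip(priors, likelihoods)]  # Pr[A_n ∩ B]
    total = sum(joint)                                    # total probability Pr[B]
    return total, [j / total for j in joint]


# Causes: coin is fair, coin is loaded with Pr[H] = 0.6. Observation B: heads.
pr_heads, post = bayes(
    [Fraction(1, 2), Fraction(1, 2)],
    [Fraction(1, 2), Fraction(3, 5)],
)
pr_fair_given_heads = post[0]

print(pr_heads, pr_fair_given_heads, float(pr_fair_given_heads))
```

With exact fractions, $\Pr[B]$ comes out as 11/20 and the posterior for the fair coin as 5/11, which is the 0.45 quoted above after rounding.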

Why do you have a fever?

Here the priors are $\Pr[\text{Flu}] = 0.15$, $\Pr[\text{Ebola}] = 10^{-8}$, $\Pr[\text{Other}] = 0.85$, and the probabilities of a high fever given each cause are $0.80$, $1$, and $0.1$, respectively. Using Bayes' rule, we find

$$\Pr[\text{Flu} \mid \text{High Fever}] = \frac{0.15 \times 0.80}{0.15 \times 0.80 + 10^{-8} \times 1 + 0.85 \times 0.1} \approx 0.59$$

$$\Pr[\text{Ebola} \mid \text{High Fever}] = \frac{10^{-8} \times 1}{0.15 \times 0.80 + 10^{-8} \times 1 + 0.85 \times 0.1} \approx 5 \times 10^{-8}$$

$$\Pr[\text{Other} \mid \text{High Fever}] = \frac{0.85 \times 0.1}{0.15 \times 0.80 + 10^{-8} \times 1 + 0.85 \times 0.1} \approx 0.41$$

These are the posterior probabilities. One says that 'Flu' is the Most Likely a Posteriori (MAP) cause of the high fever.

Bayes' Rule Operations.

Bayes' Rule is the canonical example of how information changes our opinions.

Thomas Bayes.

Source: Wikipedia.

Thomas Bayes.

A Bayesian picture of Thomas Bayes.

Testing for disease.

Let's watch TV!!

Random Experiment: Pick a random male. Outcomes: (test, disease). $A$ - prostate cancer. $B$ - positive PSA test.

- $\Pr[A] = 0.0016$ (0.16% of the male population is affected.)
- $\Pr[B \mid A] = 0.80$ (80% chance of positive test with disease.)
- $\Pr[B \mid \bar{A}] = 0.10$ (10% chance of positive test without disease.)

From http://www.cpcn.org/01 psa tests.htm and http://seer.cancer.gov/statfacts/html/prost.html (10/12/2011.)

Positive PSA test ($B$). Do I have disease? $\Pr[A \mid B]$???

Bayes Rule.

Using Bayes' rule, we find

$$\Pr[A \mid B] = \frac{0.0016 \times 0.80}{0.0016 \times 0.80 + 0.9984 \times 0.10} \approx 0.013.$$

A 1.3% chance of prostate cancer with a positive PSA test.

Surgery anyone? Impotence... Incontinence... Death.
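The fever and PSA numbers above can be reproduced with a few lines of arithmetic. The sketch below assumes causes are stored in dictionaries keyed by name; the function name `posteriors` and the key strings are illustrative choices, and the numeric inputs are the ones quoted in the slides.

```python
def posteriors(priors, likelihoods):
    """Map each cause c to Pr[c | observation] via Bayes' rule."""
    joint = {c: priors[c] * likelihoods[c] for c in priors}  # Pr[c ∩ observation]
    total = sum(joint.values())                              # Pr[observation]
    return {c: j / total for c, j in joint.items()}


# High fever: priors and Pr[High Fever | cause] as read off the formulas above.
fever = posteriors(
    {"Flu": 0.15, "Ebola": 1e-8, "Other": 0.85},
    {"Flu": 0.80, "Ebola": 1.0, "Other": 0.1},
)
map_cause = max(fever, key=fever.get)  # Most Likely a Posteriori cause

# PSA test: Pr[cancer] = 0.0016, Pr[+ | cancer] = 0.80, Pr[+ | no cancer] = 0.10.
psa = posteriors(
    {"cancer": 0.0016, "no cancer": 0.9984},
    {"cancer": 0.80, "no cancer": 0.10},
)

print(fever, map_cause, psa["cancer"])
```

Picking the key with the largest posterior is exactly the MAP rule described above; the small positive-test posterior reflects how rare the disease is relative to the false-positive rate.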

Summary.

Change your mind?

Key Ideas:

- Conditional Probability:

  $$\Pr[A \mid B] = \frac{\Pr[A \cap B]}{\Pr[B]}$$

- Bayes' Rule:

  $$\Pr[A_n \mid B] = \frac{\Pr[A_n] \Pr[B \mid A_n]}{\sum_m \Pr[A_m] \Pr[B \mid A_m]}.$$

  $\Pr[A_n \mid B]$ = posterior probability; $\Pr[A_n]$ = prior probability.

- All these are possible: $\Pr[A \mid B] < \Pr[A]$; $\Pr[A \mid B] > \Pr[A]$; $\Pr[A \mid B] = \Pr[A]$.
