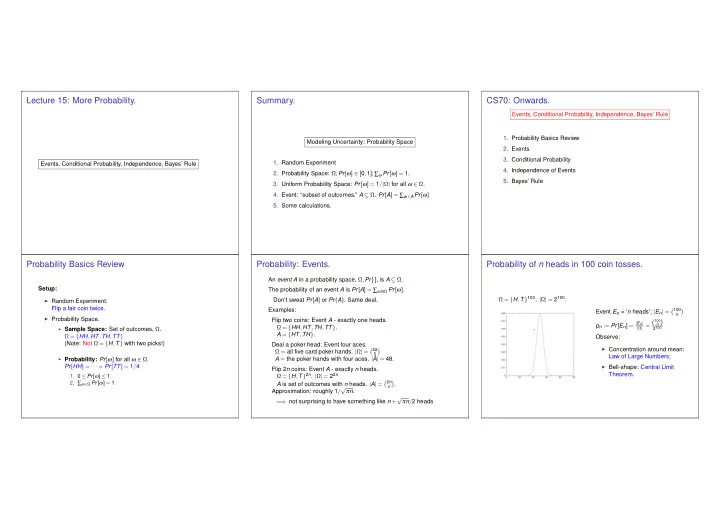

CS70: Onwards. Lecture 15: More Probability.
Events, Conditional Probability, Independence, Bayes' Rule

1. Probability Basics Review
2. Events
3. Conditional Probability
4. Independence of Events
5. Bayes' Rule

Modeling Uncertainty: Probability Space

1. Random Experiment.
2. Probability Space: $\Omega$; $\Pr[\omega] \in [0,1]$; $\sum_{\omega} \Pr[\omega] = 1$.
3. Uniform Probability Space: $\Pr[\omega] = 1/|\Omega|$ for all $\omega \in \Omega$.
4. Event: "subset of outcomes." $A \subseteq \Omega$. $\Pr[A] = \sum_{\omega \in A} \Pr[\omega]$.
5. Some calculations.

Probability Basics Review

Setup:
◮ Random Experiment. Flip a fair coin twice.
◮ Probability Space.
  ◮ Sample Space: set of outcomes, $\Omega = \{HH, HT, TH, TT\}$. (Note: not $\Omega = \{H, T\}$ with two picks!)
  ◮ Probability: $\Pr[\omega]$ for all $\omega \in \Omega$; here $\Pr[HH] = \cdots = \Pr[TT] = 1/4$. In general:
    1. $0 \le \Pr[\omega] \le 1$.
    2. $\sum_{\omega \in \Omega} \Pr[\omega] = 1$.

Probability: Events.

An event $A$ in a probability space $(\Omega, \Pr[\cdot])$ is a subset $A \subseteq \Omega$. The probability of an event $A$ is $\Pr[A] = \sum_{\omega \in A} \Pr[\omega]$. Don't sweat $\Pr[A]$ versus $\Pr(A)$: same deal.

Examples:
◮ Flip two coins. Event $A$: exactly one heads. $\Omega = \{HH, HT, TH, TT\}$; $A = \{HT, TH\}$.
◮ Deal a poker hand. Event $A$: four aces. $\Omega$ = all five-card poker hands, $|\Omega| = \binom{52}{5}$. $A$ = the poker hands with four aces, $|A| = 48$ (the fifth card is any of the 48 non-aces).
◮ Flip $2n$ coins. Event $A$: exactly $n$ heads. $\Omega = \{H, T\}^{2n}$, $|\Omega| = 2^{2n}$. $A$ is the set of outcomes with $n$ heads, $|A| = \binom{2n}{n}$, so $\Pr[A] = \binom{2n}{n}/2^{2n}$. Approximation: roughly $1/\sqrt{\pi n}$. $\Rightarrow$ it is not surprising to have something like $n + \sqrt{\pi n}/2$ heads.

Probability of n heads in 100 coin tosses.

$\Omega = \{H, T\}^{100}$; $|\Omega| = 2^{100}$. Event $E_n$ = "$n$ heads"; $|E_n| = \binom{100}{n}$.
$$p_n := \Pr[E_n] = \frac{|E_n|}{|\Omega|} = \frac{\binom{100}{n}}{2^{100}}.$$
[Figure: $p_n$ plotted against $n$.]
Observe:
◮ Concentration around the mean: Law of Large Numbers.
◮ Bell shape: Central Limit Theorem.
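The counting formulas above can be checked numerically. The sketch below is a minimal Python illustration, assuming exact rational arithmetic via the standard `fractions.Fraction` type. The helper names `prob_exactly_k_heads` and `prob_four_aces` are hypothetical and do not come from the lecture. The code computes $\Pr[A] = |A|/|\Omega|$ in the uniform spaces for coin flips and poker hands. It also compares $\binom{2n}{n}/2^{2n}$ with the approximation $1/\sqrt{\pi n}$.

```python
from fractions import Fraction
from math import comb, pi, sqrt


def prob_exactly_k_heads(num_flips: int, k: int) -> Fraction:
    """Pr[exactly k heads] in the uniform space {H, T}^num_flips.

    |E_k| = C(num_flips, k) outcomes out of |Omega| = 2^num_flips.
    """
    return Fraction(comb(num_flips, k), 2 ** num_flips)


def prob_four_aces() -> Fraction:
    """Pr[four aces] for a uniformly random five-card hand.

    |A| = 48 (the fifth card is any non-ace), |Omega| = C(52, 5).
    """
    return Fraction(48, comb(52, 5))


if __name__ == "__main__":
    print("two flips, exactly one head:", prob_exactly_k_heads(2, 1))
    print("four aces:", prob_four_aces())
    # Compare the exact Pr[n heads in 2n flips] with 1/sqrt(pi * n).
    for n in (10, 100, 1000):
        exact = float(prob_exactly_k_heads(2 * n, n))
        print(n, exact, 1 / sqrt(pi * n))
```

Here `prob_four_aces()` evaluates to $48/2598960 = 1/54145$. Exact fractions avoid rounding when checking identities such as $\sum_n p_n = 1$. Floats are used only for the comparison with $1/\sqrt{\pi n}$.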

Probability is Additive

Theorem
(a) If events $A$ and $B$ are disjoint, i.e., $A \cap B = \emptyset$, then $\Pr[A \cup B] = \Pr[A] + \Pr[B]$.
(b) If events $A_1, \ldots, A_n$ are pairwise disjoint, i.e., $A_k \cap A_m = \emptyset$ for all $k \ne m$, then $\Pr[A_1 \cup \cdots \cup A_n] = \Pr[A_1] + \cdots + \Pr[A_n]$.
Proof: Obvious. Each outcome in the union lies in exactly one of the sets, so its probability is counted exactly once on each side.

Consequences of Additivity

Theorem
(a) $\Pr[A \cup B] = \Pr[A] + \Pr[B] - \Pr[A \cap B]$; (inclusion-exclusion property)
(b) $\Pr[A_1 \cup \cdots \cup A_n] \le \Pr[A_1] + \cdots + \Pr[A_n]$; (union bound)
(c) If $A_1, \ldots, A_N$ are a partition of $\Omega$, i.e., pairwise disjoint and $\bigcup_{m=1}^{N} A_m = \Omega$, then $\Pr[B] = \Pr[B \cap A_1] + \cdots + \Pr[B \cap A_N]$. (law of total probability)
Proof: (b) is obvious. The probability of each outcome in the union is added once on the LHS and at least once on the RHS. Proofs for (a) and (c)? Next...

Inclusion/Exclusion

$\Pr[A \cup B] = \Pr[A] + \Pr[B] - \Pr[A \cap B]$.
Proof: Straightforward. Use the definition of the probability of events.
Another view: any $\omega \in A \cup B$ is in exactly one of $A \cap \bar{B}$, $A \cap B$, or $\bar{A} \cap B$. So, add it up. $\Pr[A] + \Pr[B]$ counts each outcome of $A \cap B$ twice, and subtracting $\Pr[A \cap B]$ corrects for that.

Roll a Red and a Blue Die.

$E_1$ = "Red die shows 6"; $E_2$ = "Blue die shows 6"; $E_1 \cup E_2$ = "At least one die shows 6".
$\Pr[E_1] = \frac{6}{36}$, $\Pr[E_2] = \frac{6}{36}$, $\Pr[E_1 \cup E_2] = \frac{11}{36}$.
Indeed, $E_1 \cap E_2 = \{(6,6)\}$, so $\frac{6}{36} + \frac{6}{36} - \frac{1}{36} = \frac{11}{36}$.

Total probability

Assume that $\Omega$ is the union of the disjoint sets $A_1, \ldots, A_N$. Then
$$\Pr[B] = \Pr[A_1 \cap B] + \cdots + \Pr[A_N \cap B].$$
Indeed, $B$ is the union of the disjoint sets $A_n \cap B$ for $n = 1, \ldots, N$.
In "math": each $\omega \in B$ is in exactly one of the $A_i \cap B$. When we add up their probabilities, $\Pr[\omega]$ appears exactly once in the sum.
..Did I say... Add it up.

Conditional probability: example.

Two coin flips. First flip is heads. Probability of two heads?
$\Omega = \{HH, HT, TH, TT\}$; uniform probability space.
Event $A$ = first flip is heads: $A = \{HH, HT\}$.
New sample space: $A$; uniform still.
Event $B$ = two heads.
The probability of two heads if the first flip is heads: the probability of $B$ given $A$ is $1/2$.
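The dice example and the consequences of additivity can be verified by enumerating all 36 outcomes. The sketch below is a minimal Python check. It assumes events are represented as `frozenset`s of `(red, blue)` pairs. The partition "red die shows $r$" used for total probability is an illustrative choice and does not appear on the slides.

```python
from fractions import Fraction
from itertools import product

# Uniform space for a red and a blue die: outcomes are (red, blue) pairs.
OMEGA = frozenset(product(range(1, 7), repeat=2))


def pr(event: frozenset) -> Fraction:
    """Pr[A] = |A| / |Omega| in a uniform probability space."""
    return Fraction(len(event), len(OMEGA))


E1 = frozenset(w for w in OMEGA if w[0] == 6)  # red die shows 6
E2 = frozenset(w for w in OMEGA if w[1] == 6)  # blue die shows 6

# Inclusion/exclusion: Pr[E1 ∪ E2] = Pr[E1] + Pr[E2] - Pr[E1 ∩ E2].
assert pr(E1 | E2) == pr(E1) + pr(E2) - pr(E1 & E2) == Fraction(11, 36)

# Union bound: Pr[E1 ∪ E2] <= Pr[E1] + Pr[E2].
assert pr(E1 | E2) <= pr(E1) + pr(E2)

# Total probability, partitioning Omega by the red die's value r.
partition = [frozenset(w for w in OMEGA if w[0] == r) for r in range(1, 7)]
assert sum(pr(part & (E1 | E2)) for part in partition) == pr(E1 | E2)
```

Set operators `|` and `&` mirror $\cup$ and $\cap$ directly. Each identity on the slides then becomes a one-line assertion.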

A similar example.

Two coin flips. At least one of the flips is heads. $\rightarrow$ Probability of two heads?
$\Omega = \{HH, HT, TH, TT\}$; uniform.
Event $A$ = at least one flip is heads: $A = \{HH, HT, TH\}$.
New sample space: $A$; uniform still.
Event $B$ = two heads.
The probability of two heads if at least one flip is heads: the probability of $B$ given $A$ is $1/3$.

Conditional Probability: A non-uniform example

Physical experiment and probability model: $\Omega = \{\text{Red}, \text{Green}, \text{Yellow}, \text{Blue}\}$ with $\Pr[\text{Red}] = 3/10$, $\Pr[\text{Green}] = 4/10$, $\Pr[\text{Yellow}] = 2/10$, $\Pr[\text{Blue}] = 1/10$.
$$\Pr[\text{Red} \mid \text{Red or Green}] = \frac{3}{7} = \frac{\Pr[\text{Red} \cap (\text{Red or Green})]}{\Pr[\text{Red or Green}]}.$$

Another non-uniform example

Consider $\Omega = \{1, 2, \ldots, N\}$ with $\Pr[n] = p_n$. Let $A = \{3, 4\}$, $B = \{1, 2, 3\}$. Then
$$\Pr[A \mid B] = \frac{p_3}{p_1 + p_2 + p_3} = \frac{\Pr[A \cap B]}{\Pr[B]}.$$

Yet another non-uniform example

Consider $\Omega = \{1, 2, \ldots, N\}$ with $\Pr[n] = p_n$. Let $A = \{2, 3, 4\}$, $B = \{1, 2, 3\}$. Then
$$\Pr[A \mid B] = \frac{p_2 + p_3}{p_1 + p_2 + p_3} = \frac{\Pr[A \cap B]}{\Pr[B]}.$$

Conditional Probability.

Definition: The conditional probability of $B$ given $A$ (for $\Pr[A] > 0$) is
$$\Pr[B \mid A] = \frac{\Pr[A \cap B]}{\Pr[A]}.$$
Intuition: the outcome is in $A$! Is it in $B$? It must be in $A \cap B$.

More fun with conditional probability.

Toss a red and a blue die, and the sum is 4. What is the probability that red is 1?
Let $A$ = "sum is 4" $= \{(1,3), (2,2), (3,1)\}$ and $B$ = "red is 1"; then $B \cap A = \{(1,3)\}$.
$$\Pr[B \mid A] = \frac{|B \cap A|}{|A|} = \frac{1}{3}; \quad \text{versus } \Pr[B] = \frac{1}{6}.$$
$B$ is more likely given $A$.
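The definition $\Pr[B \mid A] = \Pr[A \cap B]/\Pr[A]$ applies unchanged to uniform and non-uniform spaces. The sketch below is a minimal Python rendering, assuming a probability space is given as a hypothetical `dict` that maps each outcome to its probability. The function name `conditional` is illustrative. The code reproduces the coin, colour, and dice examples above.

```python
from fractions import Fraction
from itertools import product


def conditional(pr: dict, b: set, given: set) -> Fraction:
    """Pr[B | A] = Pr[A ∩ B] / Pr[A], where pr maps each outcome to its probability.

    Requires Pr[A] > 0; otherwise Fraction division raises ZeroDivisionError.
    """
    pr_a = sum((pr[w] for w in given), Fraction(0))
    pr_ab = sum((pr[w] for w in given & b), Fraction(0))
    return pr_ab / pr_a


# Two fair coin flips (uniform space).
coins = {w: Fraction(1, 4) for w in ("HH", "HT", "TH", "TT")}
first_heads = {"HH", "HT"}
at_least_one = {"HH", "HT", "TH"}
two_heads = {"HH"}

# Non-uniform space of colours.
colors = {"Red": Fraction(3, 10), "Green": Fraction(4, 10),
          "Yellow": Fraction(2, 10), "Blue": Fraction(1, 10)}

# Red and blue dice (uniform space of (red, blue) pairs).
dice = {w: Fraction(1, 36) for w in product(range(1, 7), repeat=2)}
sum_is_4 = {w for w in dice if sum(w) == 4}
red_is_1 = {w for w in dice if w[0] == 1}

print(conditional(coins, two_heads, first_heads))   # 1/2
print(conditional(coins, two_heads, at_least_one))  # 1/3
print(conditional(colors, {"Red"}, {"Red", "Green"}))  # 3/7
print(conditional(dice, red_is_1, sum_is_4))  # 1/3
```

Summing the outcome probabilities over $A$ and over $A \cap B$ is the same "add it up" rule used for $\Pr[A]$ itself. The uniform case reduces to $|A \cap B|/|A|$.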

Yet more fun with conditional probability.

Toss a red and a blue die, and the sum is 7. What is the probability that red is 1?
Here $A$ = "sum is 7" has six outcomes $(1,6), (2,5), \ldots, (6,1)$, exactly one of which has red equal to 1.
$$\Pr[B \mid A] = \frac{|B \cap A|}{|A|} = \frac{1}{6}; \quad \text{versus } \Pr[B] = \frac{1}{6}.$$
Observing $A$ does not change your mind about the likelihood of $B$.

Emptiness..

Suppose I toss 3 balls into 3 bins (uniform space $\{1,2,3\}^3$, 27 outcomes). $A$ = "1st bin empty"; $B$ = "2nd bin empty." What is $\Pr[A \mid B]$?
$\Pr[B] = \Pr[\{(a,b,c) \mid a,b,c \in \{1,3\}\}] = \Pr[\{1,3\}^3] = \frac{8}{27}$.
$\Pr[A \cap B] = \Pr[(3,3,3)] = \frac{1}{27}$.
$$\Pr[A \mid B] = \frac{\Pr[A \cap B]}{\Pr[B]} = \frac{1/27}{8/27} = \frac{1}{8}; \quad \text{vs. } \Pr[A] = \frac{8}{27}.$$
$A$ is less likely given $B$: second bin is empty $\Rightarrow$ first bin is more likely to contain ball(s).

Gambler's fallacy.

Flip a fair coin 51 times. $A$ = "first 50 flips are heads"; $B$ = "the 51st is heads". $\Pr[B \mid A]$?
$A = \{HH \cdots HT, HH \cdots HH\}$; $B \cap A = \{HH \cdots HH\}$. Uniform probability space.
$$\Pr[B \mid A] = \frac{|B \cap A|}{|A|} = \frac{1}{2}.$$
Same as $\Pr[B]$. The likelihood of 51st heads does not depend on the previous flips.

Product Rule

Recall the definition of conditional probability:
$$\Pr[B \mid A] = \frac{\Pr[A \cap B]}{\Pr[A]}.$$
Hence, $\Pr[A \cap B] = \Pr[A] \Pr[B \mid A]$.
Consequently,
$$\begin{aligned}
\Pr[A \cap B \cap C] &= \Pr[(A \cap B) \cap C] \\
&= \Pr[A \cap B] \Pr[C \mid A \cap B] \\
&= \Pr[A] \Pr[B \mid A] \Pr[C \mid A \cap B].
\end{aligned}$$

Product Rule

Theorem (Product Rule). Let $A_1, A_2, \ldots, A_n$ be events. Then
$$\Pr[A_1 \cap \cdots \cap A_n] = \Pr[A_1] \Pr[A_2 \mid A_1] \cdots \Pr[A_n \mid A_1 \cap \cdots \cap A_{n-1}].$$
Proof: By induction. Assume the result is true for $n$. (It holds for $n = 2$.) Then,
$$\begin{aligned}
\Pr[A_1 \cap \cdots \cap A_n \cap A_{n+1}] &= \Pr[A_1 \cap \cdots \cap A_n] \Pr[A_{n+1} \mid A_1 \cap \cdots \cap A_n] \\
&= \Pr[A_1] \Pr[A_2 \mid A_1] \cdots \Pr[A_n \mid A_1 \cap \cdots \cap A_{n-1}] \Pr[A_{n+1} \mid A_1 \cap \cdots \cap A_n].
\end{aligned}$$
The first step applies $\Pr[A \cap B] = \Pr[A] \Pr[B \mid A]$ with $A = A_1 \cap \cdots \cap A_n$ and $B = A_{n+1}$. The second step uses the induction hypothesis. Thus, the result holds for $n + 1$.

Correlation

An example. Random experiment: pick a person at random.
Event $A$: the person has lung cancer.
Event $B$: the person is a heavy smoker.
Fact: $\Pr[A \mid B] = 1.17 \times \Pr[A]$.
Conclusion:
◮ Smoking increases the probability of lung cancer by 17%.
◮ Smoking causes lung cancer.
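The Emptiness example and the Product Rule above can both be checked by brute force over the 27 outcomes of 3 balls in 3 bins. The sketch below is a minimal Python illustration, assuming events are `frozenset`s of bin assignments. The helper names and the extra event $C$ ("ball 1 lands in bin 3") are hypothetical additions used only to give the chain rule three nontrivial factors.

```python
from fractions import Fraction
from itertools import product

# Uniform space for 3 balls in 3 bins: w[i] is the bin of ball i.
OMEGA = frozenset(product((1, 2, 3), repeat=3))


def pr(event: frozenset) -> Fraction:
    """Pr[A] = |A| / |Omega| (uniform space, 27 outcomes)."""
    return Fraction(len(event), len(OMEGA))


def given(b: frozenset, a: frozenset) -> Fraction:
    """Pr[B | A] = Pr[A ∩ B] / Pr[A]."""
    return pr(a & b) / pr(a)


def bin_empty(k: int) -> frozenset:
    """Event: no ball lands in bin k."""
    return frozenset(w for w in OMEGA if k not in w)


def chain_rule(events: list) -> Fraction:
    """Pr[A_1] Pr[A_2 | A_1] ... Pr[A_n | A_1 ∩ ... ∩ A_{n-1}]."""
    result = Fraction(1)
    prior = OMEGA  # A_1 ∩ ... ∩ A_{i-1}; the empty intersection is Omega
    for event in events:
        result *= given(event, prior)
        prior = prior & event
    return result


A = bin_empty(1)  # 1st bin empty
B = bin_empty(2)  # 2nd bin empty
print(pr(A), given(A, B))  # 8/27 1/8

# Hypothetical third event for the product rule: ball 1 lands in bin 3.
C = frozenset(w for w in OMEGA if w[0] == 3)
print(chain_rule([A, C, B]), pr(A & C & B))  # 1/27 1/27
```

The chain evaluates as $\frac{8}{27} \cdot \frac{1}{2} \cdot \frac{1}{4} = \frac{1}{27}$, which matches the direct count of the single outcome $(3,3,3)$.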
