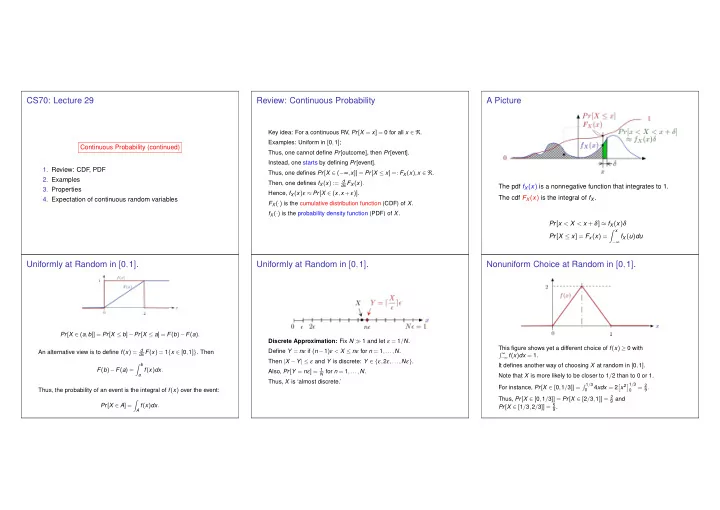

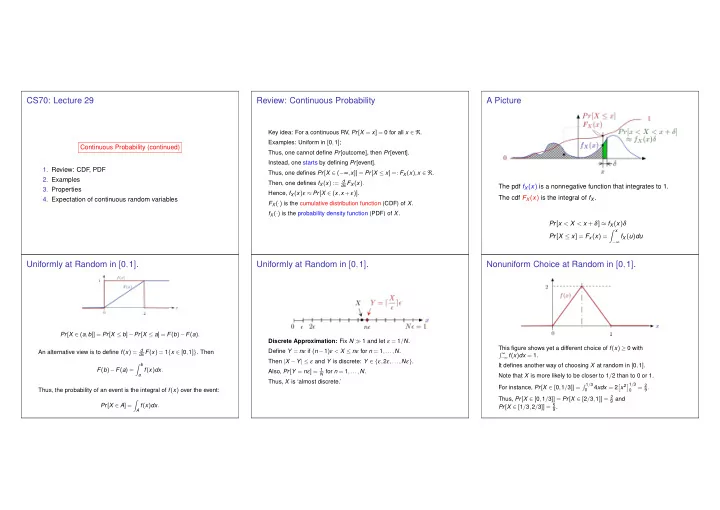

CS70: Lecture 29

Continuous Probability (continued)

1. Review: CDF, PDF
2. Examples
3. Properties
4. Expectation of continuous random variables

Review: Continuous Probability

Key idea: For a continuous RV, $\Pr[X = x] = 0$ for all $x \in \Re$.

Examples: Uniform in $[0, 1]$;

Thus, one cannot define $\Pr[\text{outcome}]$, then $\Pr[\text{event}]$. Instead, one starts by defining $\Pr[\text{event}]$.

Thus, one defines $\Pr[X \in (-\infty, x]] = \Pr[X \le x] =: F_X(x)$, $x \in \Re$.

Then, one defines $f_X(x) := \frac{d}{dx} F_X(x)$.

Hence, $f_X(x)\,\varepsilon \approx \Pr[X \in (x, x + \varepsilon)]$.

$F_X(\cdot)$ is the cumulative distribution function (CDF) of $X$. $f_X(\cdot)$ is the probability density function (PDF) of $X$.

A Picture

The pdf $f_X(x)$ is a nonnegative function that integrates to 1. The cdf $F_X(x)$ is the integral of $f_X$.

$$\Pr[x < X < x + \delta] \approx f_X(x)\,\delta$$

$$\Pr[X \le x] = F_X(x) = \int_{-\infty}^{x} f_X(u)\,du$$

Uniformly at Random in $[0, 1]$.

$$\Pr[X \in (a, b]] = \Pr[X \le b] - \Pr[X \le a] = F(b) - F(a).$$

An alternative view is to define $f(x) = \frac{d}{dx} F(x) = 1\{x \in [0, 1]\}$. Then

$$F(b) - F(a) = \int_a^b f(x)\,dx.$$

Thus, the probability of an event is the integral of $f(x)$ over the event:

$$\Pr[X \in A] = \int_A f(x)\,dx.$$

Uniformly at Random in $[0, 1]$.

Discrete Approximation: Fix $N \gg 1$ and let $\varepsilon = 1/N$.

Define $Y = n\varepsilon$ if $(n - 1)\varepsilon < X \le n\varepsilon$ for $n = 1, \ldots, N$.

Then $|X - Y| \le \varepsilon$ and $Y$ is discrete: $Y \in \{\varepsilon, 2\varepsilon, \ldots, N\varepsilon\}$.

Also, $\Pr[Y = n\varepsilon] = \frac{1}{N}$ for $n = 1, \ldots, N$.

Thus, $X$ is 'almost discrete.'

Nonuniform Choice at Random in $[0, 1]$.

This figure shows yet a different choice of $f(x) \ge 0$ with $\int_{-\infty}^{\infty} f(x)\,dx = 1$.

It defines another way of choosing $X$ at random in $[0, 1]$.

Note that $X$ is more likely to be closer to $1/2$ than to $0$ or $1$.

For instance,

$$\Pr[X \in [0, 1/3]] = \int_0^{1/3} 4x\,dx = 2\left[x^2\right]_0^{1/3} = \frac{2}{9}.$$

Thus, $\Pr[X \in [0, 1/3]] = \Pr[X \in [2/3, 1]] = \frac{2}{9}$ and $\Pr[X \in [1/3, 2/3]] = \frac{5}{9}$.
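The nonuniform example can be checked numerically by integrating a density over each interval. The sketch below assumes the density in the figure is the triangle $f(x) = 4x$ on $[0, 1/2]$ and $f(x) = 4(1 - x)$ on $[1/2, 1]$; this matches the integrand $4x$ and the symmetry of the stated probabilities, but the exact shape is an assumption, and the names `triangle_pdf` and `prob_interval` are hypothetical.

```python
def triangle_pdf(x: float) -> float:
    """Assumed density: 4x on [0, 1/2], 4(1 - x) on (1/2, 1], 0 elsewhere."""
    if 0.0 <= x <= 0.5:
        return 4.0 * x
    if 0.5 < x <= 1.0:
        return 4.0 * (1.0 - x)
    return 0.0


def prob_interval(pdf, a: float, b: float, n: int = 100_000) -> float:
    """Midpoint Riemann sum approximating the integral of pdf over [a, b]."""
    eps = (b - a) / n
    return sum(pdf(a + (k + 0.5) * eps) for k in range(n)) * eps


left = prob_interval(triangle_pdf, 0.0, 1 / 3)      # compare with 2/9
middle = prob_interval(triangle_pdf, 1 / 3, 2 / 3)  # compare with 5/9
right = prob_interval(triangle_pdf, 2 / 3, 1.0)     # compare with 2/9
print(left, middle, right)
```

Each term `pdf(x) * eps` plays the role of $f(x)\,\varepsilon \approx \Pr[X \in (x, x + \varepsilon)]$, so the sum is the discrete approximation of the integral over the event.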

General Random Choice in $\Re$

Let $F(x)$ be a nondecreasing function with $F(-\infty) = 0$ and $F(+\infty) = 1$.

Define $X$ by $\Pr[X \in (a, b]] = F(b) - F(a)$ for $a < b$. Also, for $a_1 < b_1 < a_2 < b_2 < \cdots < b_n$,

$$\begin{aligned}
\Pr[X \in (a_1, b_1] \cup (a_2, b_2] \cup \cdots \cup (a_n, b_n]]
&= \Pr[X \in (a_1, b_1]] + \cdots + \Pr[X \in (a_n, b_n]] \\
&= F(b_1) - F(a_1) + \cdots + F(b_n) - F(a_n).
\end{aligned}$$

Let $f(x) = \frac{d}{dx} F(x)$. Then,

$$\Pr[X \in (x, x + \varepsilon]] = F(x + \varepsilon) - F(x) \approx f(x)\,\varepsilon.$$

Here, $F(x)$ is called the cumulative distribution function (cdf) of $X$ and $f(x)$ is the probability density function (pdf) of $X$.

To indicate that $F$ and $f$ correspond to the RV $X$, we will write them $F_X(x)$ and $f_X(x)$.

$\Pr[X \in (x, x + \varepsilon)]$

An illustration of $\Pr[X \in (x, x + \varepsilon)] \approx f_X(x)\,\varepsilon$: [figure]

Thus, the pdf is the 'local probability by unit length.' It is the 'probability density.'

Discrete Approximation

Fix $\varepsilon \ll 1$ and let $Y = n\varepsilon$ if $X \in (n\varepsilon, (n + 1)\varepsilon]$.

Thus, $\Pr[Y = n\varepsilon] = F_X((n + 1)\varepsilon) - F_X(n\varepsilon)$.

Note that $|X - Y| \le \varepsilon$ and $Y$ is a discrete random variable.

Also, if $f_X(x) = \frac{d}{dx} F_X(x)$, then $F_X(x + \varepsilon) - F_X(x) \approx f_X(x)\,\varepsilon$.

Hence, $\Pr[Y = n\varepsilon] \approx f_X(n\varepsilon)\,\varepsilon$.

Thus, we can think of $X$ as being almost discrete with $\Pr[X = n\varepsilon] \approx f_X(n\varepsilon)\,\varepsilon$.

Example: CDF

Example: hitting random location on gas tank. Random location on circle.

Example: "Dart" board.

Random Variable: $Y$, the distance from the center.

Probability within $y$ of center:

$$\Pr[Y \le y] = \frac{\text{area of small circle}}{\text{area of dartboard}} = \frac{\pi y^2}{\pi} = y^2.$$

Hence,

$$F_Y(y) = \Pr[Y \le y] = \begin{cases} 0 & \text{for } y < 0 \\ y^2 & \text{for } 0 \le y \le 1 \\ 1 & \text{for } y > 1 \end{cases}$$

Calculation of event with dartboard.

Probability between $.5$ and $.6$ of center? Recall the CDF $F_Y$ above.

$$\begin{aligned}
\Pr[0.5 < Y \le 0.6] &= \Pr[Y \le 0.6] - \Pr[Y \le 0.5] \\
&= F_Y(0.6) - F_Y(0.5) \\
&= .36 - .25 \\
&= .11
\end{aligned}$$

PDF.

Example: "Dart" board. Recall the CDF $F_Y$ above. Then

$$f_Y(y) = F_Y'(y) = \begin{cases} 0 & \text{for } y < 0 \\ 2y & \text{for } 0 \le y \le 1 \\ 0 & \text{for } y > 1 \end{cases}$$

The cumulative distribution function (cdf) and probability density function (pdf) give full information. Use whichever is convenient.
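The dartboard calculation can be cross-checked by simulation. The sketch below is illustrative only: it assumes a unit-radius board and draws uniform points by rejection sampling from the enclosing square (a choice made here, not something the slides specify), and the names `cdf_dart` and `sample_distance` are hypothetical.

```python
import random


def cdf_dart(y: float) -> float:
    """CDF of the distance Y from the center: 0 below 0, y^2 on [0, 1], 1 above 1."""
    if y < 0.0:
        return 0.0
    if y <= 1.0:
        return y * y
    return 1.0


def sample_distance(rng: random.Random) -> float:
    """Distance from the center of a point drawn uniformly in the unit disk."""
    while True:
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        d2 = x * x + y * y
        if d2 <= 1.0:  # keep only points that land on the board
            return d2 ** 0.5


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 200_000
hits = sum(1 for _ in range(n) if 0.5 < sample_distance(rng) <= 0.6)
estimate = hits / n                      # empirical Pr[0.5 < Y <= 0.6]
exact = cdf_dart(0.6) - cdf_dart(0.5)    # F_Y(0.6) - F_Y(0.5)
print(estimate, exact)
```

The empirical frequency should land close to the CDF difference, with sampling error shrinking roughly like $1/\sqrt{n}$.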

[Figures: Target; $U[a, b]$]

$Expo(\lambda)$

The exponential distribution with parameter $\lambda > 0$ is defined by

$$f_X(x) = \lambda e^{-\lambda x}\, 1\{x \ge 0\}$$

$$F_X(x) = \begin{cases} 0, & \text{if } x < 0 \\ 1 - e^{-\lambda x}, & \text{if } x \ge 0. \end{cases}$$

Note that $\Pr[X > t] = e^{-\lambda t}$ for $t > 0$.

Some Properties

1. Expo is memoryless. Let $X = Expo(\lambda)$. Then, for $s, t > 0$,

$$\begin{aligned}
\Pr[X > t + s \mid X > s] &= \frac{\Pr[X > t + s]}{\Pr[X > s]} \\
&= \frac{e^{-\lambda (t + s)}}{e^{-\lambda s}} = e^{-\lambda t} \\
&= \Pr[X > t].
\end{aligned}$$

'Used is as good as new.'

2. Scaling Expo. Let $X = Expo(\lambda)$ and $Y = aX$ for some $a > 0$. Then

$$\begin{aligned}
\Pr[Y > t] &= \Pr[aX > t] = \Pr[X > t/a] \\
&= e^{-\lambda (t/a)} = e^{-(\lambda/a) t} = \Pr[Z > t] \text{ for } Z = Expo(\lambda/a).
\end{aligned}$$

Thus, $a \times Expo(\lambda) = Expo(\lambda/a)$.

Also, $Expo(\lambda) = \frac{1}{\lambda} Expo(1)$.

Expectation

Definition: The expectation of a random variable $X$ with pdf $f_X(x)$ is defined as

$$E[X] = \int_{-\infty}^{\infty} x f_X(x)\,dx.$$

Justification: Say $X = n\delta$ w.p. $f_X(n\delta)\,\delta$ for $n \in \mathbb{Z}$. Then,

$$E[X] = \sum_n (n\delta) \Pr[X = n\delta] = \sum_n (n\delta) f_X(n\delta)\,\delta \approx \int_{-\infty}^{\infty} x f_X(x)\,dx.$$

Indeed, for any $g$, one has $\int g(x)\,dx \approx \sum_n g(n\delta)\,\delta$. Choose $g(x) = x f_X(x)$.

Examples of Expectation

1. $X = U[0, 1]$. Then, $f_X(x) = 1\{0 \le x \le 1\}$. Thus,

$$E[X] = \int_{-\infty}^{\infty} x f_X(x)\,dx = \int_0^1 x \cdot 1\,dx = \left[\frac{x^2}{2}\right]_0^1 = \frac{1}{2}.$$

2. $X$ = distance to 0 of dart shot uniformly in unit circle. Then $f_X(x) = 2x\, 1\{0 \le x \le 1\}$. Thus,

$$E[X] = \int_{-\infty}^{\infty} x f_X(x)\,dx = \int_0^1 x \cdot 2x\,dx = \left[\frac{2x^3}{3}\right]_0^1 = \frac{2}{3}.$$
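The two Expo properties can be sanity-checked numerically. The sketch below uses the closed-form tail $\Pr[X > t] = e^{-\lambda t}$ for the memoryless identity and a simulation for the scaling property; the parameter values and the helper name `tail` are arbitrary choices made for illustration. It relies on Python's standard `random.expovariate(lambd)`, which draws from an exponential distribution with rate `lambd`.

```python
import math
import random


def tail(lam: float, t: float) -> float:
    """Pr[X > t] for X = Expo(lam): e^(-lam * t) for t > 0, and 1 otherwise."""
    return math.exp(-lam * t) if t > 0 else 1.0


lam, s, t, a = 2.0, 0.7, 0.3, 3.0  # illustrative values only

# Memoryless: Pr[X > t + s | X > s] = Pr[X > t + s] / Pr[X > s] = Pr[X > t].
conditional = tail(lam, t + s) / tail(lam, s)

# Scaling: Y = a * X should behave like Expo(lam / a).
rng = random.Random(1)  # fixed seed so the run is repeatable
n = 200_000
samples_y = [a * rng.expovariate(lam) for _ in range(n)]
empirical_tail = sum(1 for y in samples_y if y > t) / n  # estimate of Pr[Y > t]
predicted_tail = tail(lam / a, t)                        # e^(-(lam / a) * t)

print(conditional, tail(lam, t))
print(empirical_tail, predicted_tail)
```

The first pair of numbers agrees up to floating-point rounding because the identity is exact; the second pair agrees only up to sampling error.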

Examples of Expectation

3. $X = Expo(\lambda)$. Then, $f_X(x) = \lambda e^{-\lambda x}\, 1\{x \ge 0\}$. Thus,

$$E[X] = \int_0^{\infty} x \lambda e^{-\lambda x}\,dx = -\int_0^{\infty} x\,de^{-\lambda x}.$$

Recall the integration by parts formula:

$$\begin{aligned}
\int_a^b u(x)\,dv(x) &= \left[u(x) v(x)\right]_a^b - \int_a^b v(x)\,du(x) \\
&= u(b) v(b) - u(a) v(a) - \int_a^b v(x)\,du(x).
\end{aligned}$$

Thus,

$$\begin{aligned}
\int_0^{\infty} x\,de^{-\lambda x} &= \left[x e^{-\lambda x}\right]_0^{\infty} - \int_0^{\infty} e^{-\lambda x}\,dx \\
&= 0 - 0 + \frac{1}{\lambda} \int_0^{\infty} de^{-\lambda x} = -\frac{1}{\lambda}.
\end{aligned}$$

Hence, $E[X] = \frac{1}{\lambda}$.

Linearity of Expectation

Theorem: Expectation is linear.

Proof: 'As in the discrete case.'

Example 1: $X = U[a, b]$. Then

(a) $f_X(x) = \frac{1}{b - a}\, 1\{a \le x \le b\}$. Thus,

$$E[X] = \int_a^b x \frac{1}{b - a}\,dx = \frac{1}{b - a} \left[\frac{x^2}{2}\right]_a^b = \frac{a + b}{2}.$$

(b) $X = a + (b - a) Y$, $Y = U[0, 1]$. Hence,

$$E[X] = a + (b - a) E[Y] = a + \frac{b - a}{2} = \frac{a + b}{2}.$$

Example 2: $X, Y$ are $U[0, 1]$. Then

$$E[3X - 2Y + 5] = 3E[X] - 2E[Y] + 5 = 3 \cdot \frac{1}{2} - 2 \cdot \frac{1}{2} + 5 = 5.5.$$

Summary

Continuous Probability

1. pdf: $\Pr[X \in (x, x + \delta]] \approx f_X(x)\,\delta$.
2. CDF: $\Pr[X \le x] = F_X(x) = \int_{-\infty}^{x} f_X(y)\,dy$.
3. $U[a, b]$, $Expo(\lambda)$, target.
4. Expectation: $E[X] = \int_{-\infty}^{\infty} x f_X(x)\,dx$.
5. Expectation is linear.
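The expectation formula can be approximated with the same discretization used to justify it: treat $X$ as taking the value $n\delta$ with probability $f_X(n\delta)\,\delta$ and sum. The sketch below is a rough numerical check, not a general-purpose integrator; the helper `expectation`, the grid width, the truncation of the Expo range at 20, and $\lambda = 2$ are all assumptions made for illustration.

```python
import math


def expectation(pdf, lo: float, hi: float, delta: float = 1e-4) -> float:
    """Approximate E[X] by summing (n*delta) * pdf(n*delta) * delta over the grid in [lo, hi]."""
    n_lo = math.ceil(lo / delta)
    n_hi = math.floor(hi / delta)
    return sum((n * delta) * pdf(n * delta) * delta for n in range(n_lo, n_hi + 1))


def uniform_pdf(x: float) -> float:
    """pdf of U[0, 1]."""
    return 1.0 if 0.0 <= x <= 1.0 else 0.0


def dart_pdf(x: float) -> float:
    """pdf of the distance to the center for a dart uniform in the unit circle."""
    return 2.0 * x if 0.0 <= x <= 1.0 else 0.0


lam = 2.0  # illustrative rate


def expo_pdf(x: float) -> float:
    """pdf of Expo(lam)."""
    return lam * math.exp(-lam * x) if x >= 0.0 else 0.0


e_uniform = expectation(uniform_pdf, 0.0, 1.0)  # compare with 1/2
e_dart = expectation(dart_pdf, 0.0, 1.0)        # compare with 2/3
e_expo = expectation(expo_pdf, 0.0, 20.0)       # compare with 1/lam; tail beyond 20 is negligible

# Linearity, as in Example 2 with X, Y both U[0, 1]: E[3X - 2Y + 5] = 3E[X] - 2E[Y] + 5.
e_linear = 3 * e_uniform - 2 * e_uniform + 5    # compare with 5.5
print(e_uniform, e_dart, e_expo, e_linear)
```

Shrinking `delta` tightens the approximation, mirroring the limit in which the sum becomes the integral.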
