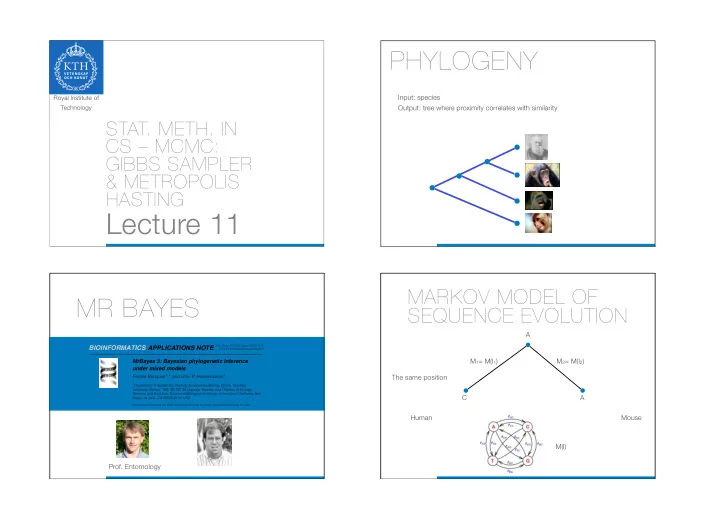

PHYLOGENY LOREM I P S U M Input: species Royal Institute of Technology Output: tree where proximity correlates with similarity STAT. METH. IN CS – MCMC: GIBBS SAMPLER & METROPOLIS HASTING Lecture 11 MARKOV MODEL OF MR BAYES SEQUENCE EVOLUTION A Vol. 19 no. 12 2003, pages 1572–1574 BIOINFORMATICS APPLICATIONS NOTE DOI: 10.1093/bioinformatics/btg180 M 1 = M(l 1 ) M 2 = M(l 2 ) MrBayes 3: Bayesian phylogenetic inference under mixed models Fredrik Ronquist 1, ∗ and John P. Huelsenbeck 2 The same position 1 Department of Systematic Zoology, Evolutionary Biology Centre, Uppsala University, Norbyv. 18D, SE-752 36 Uppsala, Sweden and 2 Section of Ecology, Behavior and Evolution, Division of Biological Sciences, University of California, San C A Diego, La Jolla, CA 92093-0116, USA Received on December 20, 2002; revised on February 14, 2003; accepted on February 19, 2003 Human Mouse M(l) Prof. Entomology

MARKOV MODEL OF MARKOV MODEL OF SEQUENCE EVOLUTION SEQUENCE EVOLUTION A C A C G T A C T G C G Human A C A C G T A G T G C C M 1 = M(l 1 ) M 2 = M(l 2 ) Mouse A A A C G T A C T G C A Same gene, same positions A C A C G T A G T G C C A A A C G T A C T G C A Uniform In general, Human Mouse M(l) A A C …………T A MARKOV CHAINS MARKOV CHAINS (DISCRETE) (DISCRETE) ★ Directed graph with Probabilities on outgoing edges sum to one transition probabilities p 2 ★ We observe the sequence of visited vertices p 2 ∑ i ∈ [d] p i =1 p 1 p 1 p d q

gcd - greatest common divisor MC- EXISTENCE MCMC The period of a state i OF STATIONARY gcd{ t : p( i → i in t steps) > 0 } Period 4 Theorem: Every irreducible, In order to sample p, set up a MC M ★ aperiodic, finite state MC has a select transition probabilities so that p = stationary distribution • stationary distribution sample from M • Theorem: Every irreducible, aperiodic, and recurrent MC has a stationary distribution M is aperiodic if each states has period 1 We want to satisfy these M is irreducible if ∀ states i,j: p(i → j) > 0 conditions! p q M is recurrent if ∀ states i: p(i → i) = 1 gcd - greatest common divisor MC- EXISTENCE METROPOLIS The period of a state i OF STATIONARY HASTINGS (MH) gcd{ t : p( i → i in t steps) > 0 } Period 1 Theorem: Every irreducible, We want to compute p*(x) (typically aperiodic, finite state MC has a p(x|D)) How? stationary distribution Implicitly construct Markov Chain M Theorem: Every irreducible, with stationary distribution p*(x) aperiodic, and recurrent MC has a Traverse it and sample every k:th visit stationary distribution M is aperiodic if each states has period 1 Use good or random starting point p*(x) ≈ [ ∑ i I(x=x i ) ]/S We want to satisfy these M is irreducible if ∀ states i,j: p(i → j) > 0 conditions! Discard the first l:th samples M is recurrent if ∀ states i: p(i → i) = 1 The remaining samples x 1 ,…,x S is an approximation of p*(x)

IMPLICIT MC WITH STATIONARY CONSTRUCTION OF DISTRIBUTION α = p � ( x � ) q ( x | x � ) MC WITH STATIONARY P*(X)=P(X|D) p � ( x ) q ( x � | x ) DISTRIBUTION P*(X) Transition when in state x: Transition when in state x: α = p � ( x � ) /q ( x � | x ) Propose state according to Propose state according to p � ( x ) /q ( x | x � ) p � ( x � ) p � ( x ) = p ( x � |D ) proposal distribution q(x ´ |x) = p � ( x � ) q ( x | x � ) proposal distribution q(x ´ |x) p ( x |D ) (conditions later) p � ( x ) q ( x � | x ) (conditions later) p ( D| x � ) p ( x � ) p ( D ) Accept x´with probability = p ( D| x ) p ( x ) Accept x´with probability min(1, α ) p ( D ) min(1, α ) = p ( D| x � ) p ( x � ) We want and don’t have p*, but p ( D| x ) p ( x ) it is a ratio that is required! MC WITH STATIONARY MH DISTRIBUTION P*(X)=P(X|D) α = p � ( x � ) q ( x | x � ) p � ( x ) q ( x � | x ) MH typically work when p � ( x � ) p � ( x ) = p ( x � |D ) we cannot compute p*(x), p ( x |D ) but p*(x´)/p*(x) α = p � ( x � ) q ( x | x � ) p ( D| x � ) p ( x � ) p ( D ) p � ( x ) q ( x � | x ) = p ( D| x ) p ( x ) p ( D ) = p ( D| x � ) p ( x � ) p ( D| x ) p ( x ) prior likelihood

DETAILED BALANCE WHY MH EQUATIONS WORKS A transition matrix, i.e., A ij = p(i → j in 1 step) ★ p*(x) the distribution we want to We want p*(x)p(x ′ |x) = p*(x ′ )p(x|x ′ ) A regular if ∀ k,l ∃ n s/t (A k,l ) n >0 estimate ★ p*(x)p(x ′ |x) = p*(x) q(x ′ |x)r(x ′ |x) α (x´|x)=(p*(x´)q(x|x´))/(p*(x)q(x´|x)) Detailed balance equations ∀ k,l π k A kl = π l A lk = p*(x)q(x ′ |x) (p*(x´)q(x|x´))/(p*(x)q(x´|x)) ★ = p*(x´)q(x|x´) Let r(x´|x) = min(1, α (x´|x)) Theorem: If MC M with regular transition matrix A that = p*(x´)q(x|x´)r(x|x´) ★ = p*(x´)p(x|x´) The transition probability for x´ ≠ x (x satisfies detailed balance wrt π , then π the stationary ´= x easy) p(x´|x) = q(x´|x) r(x´|x) distribution of M. Assume p*(x)q(x´|x) ≥ p*(x´)q(x|x´), Proof: Note that so r(x´|x)= α (x´|x) and r(x|x´)=1 ★ π lt+1 = ∑ k π kt A kl = ∑ k π lt A lk = π lt ∑ k A lk = π lt Efficiency of proposal distribution THE CRAFTSMANSHIP • OF PROPOSAL DESIGN Burn-in • MH with N(0,1.000 2 ) proposal MH with N(0,500.000 2 ) proposal MH with N(0,8.000 2 ) proposal 0.2 0.06 0.03 0.04 0.02 0.1 0.02 0.01 0 0 0 0 0 0 200 200 200 Convergence • 400 400 400 100 100 100 600 50 600 50 600 50 0 0 0 800 800 800 − 50 − 50 − 50 Iterations 1000 Iterations 1000 Iterations 1000 − 100 Samples − 100 Samples − 100 Samples PRACTICAL Conservative proposal - hard to move away from local optimum • CONSIDERATIONS Wild proposal - few accepted proposals • 25-40% acceptance rate considered good (use pilot runs) •

� � � � � � � � ��������������������� � ����� �� � � � � � ��� �� � ������������������ � � ����� � ����� ������������ � � � � � � � � � � � � � � � ���������� � ����� � � � � � � � � � ���������� � �� �� ������������������ � � � � � � ��������������������� ��� � � � � � � � � � � � � � ����������������� ����� ������������ � ����� � �� � � ����������������� MR BAYES PROPOSAL MR BAYES PROPOSAL NODE (VERTEX) SLIDER LOCAL TREE OPERATION Two adjacent branches a and b are chosen at random The length of a + b is changed using a multiplier with tuning Three internal branches - a, b, and c - are chosen at random. paremeter � Their total length is changed using a multiplier with tuning The node x is randomly inserted on a + b according to a paremeter �� One of the subtrees A or B is picked at random. uniform distribution It is randomly reinserted on a + b + c according to a uni- Bolder proposals: increase � form distribution More modest proposals: decrease � Bolder proposals: increase � The boldness of the proposal depends heavily on the uniform More modest proposals: decrease � reinsertion of x , so changing � may have limited effect Changing � has little effect on the boldness of the proposal Initial Condition X 0 = 10 Initial Condition X 0 = 17 MH N(0,1.000 2 ), Rhat = 1.493 MH N(0,8.000 2 ), Rhat = 1.039 50 60 p (0) (x) p (0) (x) 40 40 0 5 10 15 20 0 5 10 15 20 30 20 p (1) (x) p (1) (x) 20 0 0 5 10 15 20 0 5 10 15 20 10 − 20 p (2) (x) p (2) (x) 0 − 40 0 5 10 15 20 0 5 10 15 20 p (3) (x) p (3) (x) − 10 − 60 0 200 400 600 800 1000 0 200 400 600 800 1000 0 5 10 15 20 0 5 10 15 20 MH N(0,500.000 2 ), Rhat = 1.005 gibbs, Rhat = 1.007 p (10) (x) p (10) (x) 50 60 40 40 0 5 10 15 20 0 5 10 15 20 30 p (100) (x) p (100) (x) 20 20 10 0 5 10 15 20 0 5 10 15 20 0 0 p (200) (x) p (200) (x) − 10 − 20 − 20 0 5 10 15 20 0 5 10 15 20 − 30 − 40 p (400) (x) p (400) (x) − 40 − 50 − 60 0 200 400 600 800 1000 0 200 400 600 800 1000 0 5 10 15 20 0 5 10 15 20 BURN-IN CONVERGENCE DIAGNOSTICS - MULTIPLE CHAINS

Recommend

More recommend