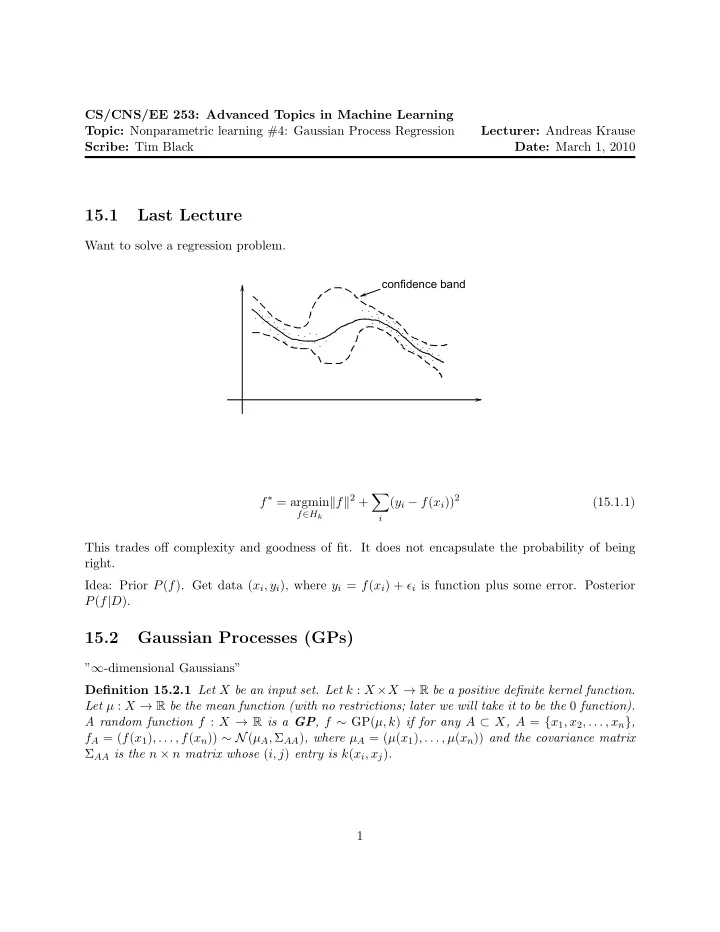

CS/CNS/EE 253: Advanced Topics in Machine Learning Topic: Nonparametric learning #4: Gaussian Process Regression Lecturer: Andreas Krause Scribe: Tim Black Date: March 1, 2010 15.1 Last Lecture Want to solve a regression problem. confidence band f ∗ = argmin � f � 2 + � ( y i − f ( x i )) 2 (15.1.1) f ∈ H k i This trades off complexity and goodness of fit. It does not encapsulate the probability of being right. Idea: Prior P ( f ). Get data ( x i , y i ), where y i = f ( x i ) + ǫ i is function plus some error. Posterior P ( f | D ). 15.2 Gaussian Processes (GPs) ” ∞ -dimensional Gaussians” Definition 15.2.1 Let X be an input set. Let k : X × X → R be a positive definite kernel function. Let µ : X → R be the mean function (with no restrictions; later we will take it to be the 0 function). A random function f : X → R is a GP , f ∼ GP( µ, k ) if for any A ⊂ X , A = { x 1 , x 2 , . . . , x n } , f A = ( f ( x 1 ) , . . . , f ( x n )) ∼ N ( µ A , Σ AA ) , where µ A = ( µ ( x 1 ) , . . . , µ ( x n )) and the covariance matrix Σ AA is the n × n matrix whose ( i, j ) entry is k ( x i , x j ) . 1

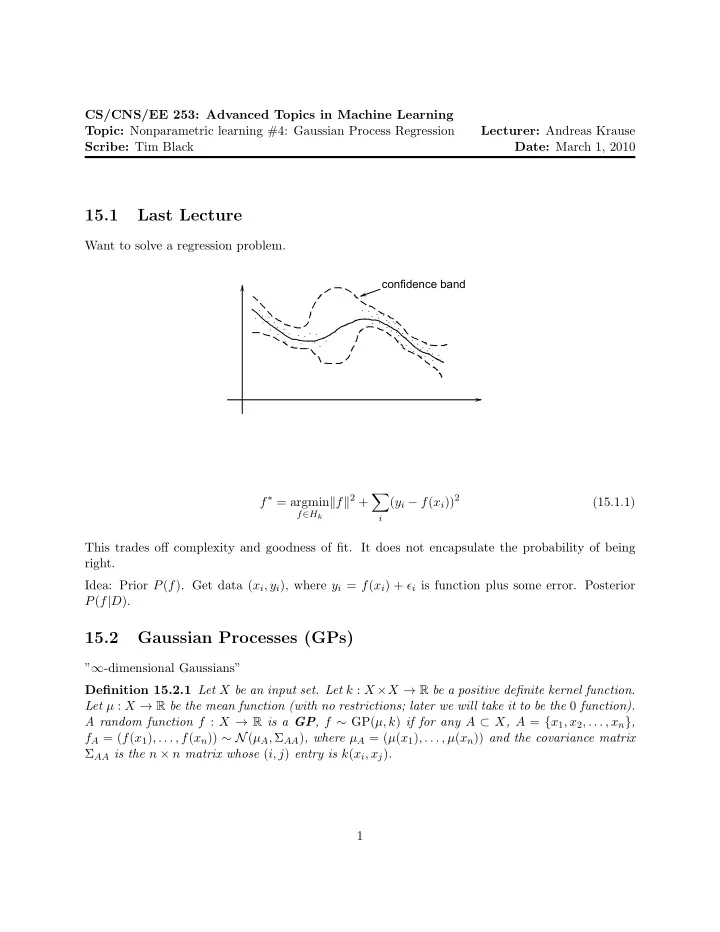

f(x) f(x) (for set x) covariance mean function x x probability of f(x) 15.2.1 Prediction in GPs Suppose we get to see f A = f ′ (that is, we get to see the value of f at a set of points without noise). What is P ( f ( x ) | f A = f ′ )? Conditionals on a Gaussian give a Gaussian: P ( f ( x ) | f A = AA ( f ′ − µ A ), σ 2 x | A ), where µ x | A = µ x + Σ xA Σ − 1 x − Σ xA Σ − 1 f ′ ) = N ( f ( x ); µ x | A , σ 2 x | A = σ 2 AA Σ Ax , and Σ x A = ( k ( x, x 1 ) , k ( x, x 2 ) , . . . , k ( x, x n )). What if we have noise? y i = f ( x i ) + ǫ i , ǫ i ∼ N (0 , Θ 2 ) y A = f A + ǫ A , ǫ A ∼ N (0 , σ 2 I ) P ( y A ) = N ( y A ; µ A , Σ AA + σ 2 x | A ) P ( f ( x ) | y A ) = N ( f ( x ); µ x | A , σ 2 x | A ) µ x | A = µ x + Σ xA (Σ AA + σ 2 I ) − 1 ( y A − µ A ) σ 2 x | A = σ 2 x + Σ xA (Σ AA + σ 2 I ) − 1 Σ Ax NEVER EVER CALCULATE (Σ AA + σ 2 I ) − 1 ) AS inv( Σ AA + σ 2 I ) . Instead, solve the linear 2

system α = ˜ Σ AA � ( y A − µ A ) (Matlab notation), where ˜ Σ AA = Σ AA + σ 2 I , and α = ˜ Σ − 1 AA ( y A − µ A ). 15.2.2 Connection to RKHS Assume µ = 0. µ x | A = µ x + Σ xA (Σ AA + σ 2 I ) − 1 y A = � n i =1 α i k ( x i , x ). � f � 2 + 1 i ( y i − f ( x i )) 2 . argmax P ( f | y A ) = argmin � σ 2 f ∈ H k f ∈ H k 15.2.3 Parameter Estimation Most kernel functions have parameters. k SE ( x, x ′ ) = c 2 exp ( − ( x − x ′ ) 2 ). Parameters are Θ = ( c, h ). c is the “magnitude” of the functions, h and h is the “length scale” of f . small h k(x, x') x' x Let N u = Number of upcrossings at level in [0 , 1] (“How wiggly is this function”). Assume k is isotropic , k ( x, x ′ ) = k ( � x − x ′ � ), and assume µ = 0. 3

Theorem 15.2.2 (Adler). � − u 2 k ′′ (0) E [ N u ] = 1 � � (15.2.2) k (0) exp 2 π 2 k (0) For SE kernel: k (0) = c 2 , k ′′ (0) = − 2 c 2 h 2 � � � � � 1 2 − u 2 1 1 − u 2 E [ N u ] = = . h 2 exp √ h exp 2 π 2 c 2 2 c 2 π 2 How do we choose the parameters? Learn from data! Pick parameters to maximize likelihood. Θ ∗ = argmax P ( y A | Θ) = argmax � P ( y A , f | Θ) d � P ( y A | f, Θ) P ( f | Θ) d Θ = argmax � P ( y A | f ) P ( f | Θ) d Θ f = argmax Θ Θ Θ Θ P(y |f) low high high A low high high P(f|O) P ( y A | Θ) = N (0 , Σ AA (Θ) + σ 2 I ) = (2 π (Σ AA (Θ))) − n/ 2 exp ( − 1 2 y T A (Σ AA (Θ) + σ 2 I ) − 1 y A ). Solve the optimization problem by gradient descent. Θ ∗ = argmax P ( y A | Θ) Θ How do we solve it? Calculate gradient of log ( P ( y A | Θ)). Run conjugate gradient descent . 15.2.4 Incorporating prior knowlege f = g + h ∼ GP(0 , k lin + k SE ), where g is parametric and h is nonparametric. i w i φ i ( x ). h ∼ GP(0 , k SE ). w ∼ N (0 , I ). g ∼ GP(0 , k lin ). k lin ( x, x ′ ) = � i φ i ( x ) φ i ( x ′ ) = g ( x ) = � φ ( x ) T φ ( x ′ ). 4

Recommend

More recommend