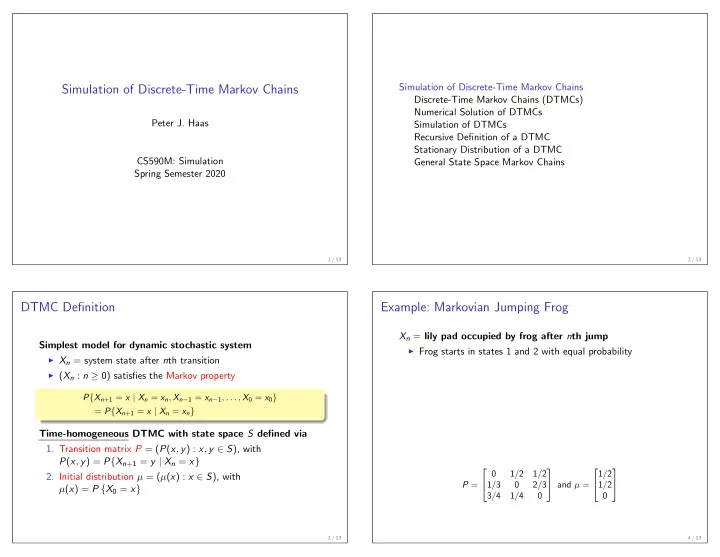

Simulation of Discrete-Time Markov Chains

Peter J. Haas
CS590M: Simulation
Spring Semester 2020

◮ Discrete-Time Markov Chains (DTMCs)
◮ Numerical Solution of DTMCs
◮ Simulation of DTMCs
◮ Recursive Definition of a DTMC
◮ Stationary Distribution of a DTMC
◮ General State Space Markov Chains

DTMC Definition

Simplest model for dynamic stochastic system
◮ $X_n$ = system state after $n$th transition
◮ $(X_n : n \ge 0)$ satisfies the Markov property

$$P\{X_{n+1} = x \mid X_n = x_n, X_{n-1} = x_{n-1}, \ldots, X_0 = x_0\} = P\{X_{n+1} = x \mid X_n = x_n\}$$

Time-homogeneous DTMC with state space $S$ defined via
1. Transition matrix $P = (P(x, y) : x, y \in S)$, with $P(x, y) = P\{X_{n+1} = y \mid X_n = x\}$
2. Initial distribution $\mu = (\mu(x) : x \in S)$, with $\mu(x) = P\{X_0 = x\}$

Example: Markovian Jumping Frog

$X_n$ = lily pad occupied by frog after $n$th jump
◮ Frog starts in states 1 and 2 with equal probability

$$\mu = \begin{pmatrix} 1/2 \\ 1/2 \\ 0 \end{pmatrix} \quad \text{and} \quad P = \begin{pmatrix} 0 & 1/2 & 1/2 \\ 1/3 & 0 & 2/3 \\ 3/4 & 1/4 & 0 \end{pmatrix}$$
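As a minimal sketch of the two ingredients of the definition, the jumping-frog chain can be stored as a transition matrix and an initial distribution and checked for validity. The helper names `is_distribution` and `is_stochastic` are illustrative and do not come from the slides; pads 1, 2, 3 are mapped to indices 0, 1, 2, and exact fractions are used so that the row sums can be compared with 1 without round-off.

```python
from fractions import Fraction as F

# Jumping-frog chain as read from the example; index 0, 1, 2 stands for pad 1, 2, 3.
P = [
    [F(0), F(1, 2), F(1, 2)],
    [F(1, 3), F(0), F(2, 3)],
    [F(3, 4), F(1, 4), F(0)],
]
mu = [F(1, 2), F(1, 2), F(0)]

def is_distribution(p):
    """True if p is a probability vector: nonnegative entries that sum to 1."""
    return all(x >= 0 for x in p) and sum(p) == 1

def is_stochastic(matrix):
    """True if every row of the matrix is a probability vector."""
    return all(is_distribution(row) for row in matrix)

# Both checks should hold for a well-formed time-homogeneous DTMC.
valid = is_distribution(mu) and is_stochastic(P)
```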

Computing Probabilities and Expectations

Example: $\theta = P\{\text{frog on pad 2 after } k\text{th jump}\}$
◮ Write as $\theta = E[f(X_k)]$, where $f(x) = 1$ if $x = 2$ and $f(x) = 0$ otherwise
◮ Sometimes write indicator function $f(x)$ as $I(x = 2)$
◮ Q: Why is this correct?

Numerical solution for arbitrary function $f$
◮ Q: What is probability distribution after first jump?
◮ Let $v_n(i) = P\{\text{frog on pad } i \text{ after } n\text{th jump}\}$ and $v_n = (v_n(1), v_n(2), v_n(3))^\top$
◮ Set $v_0 = \mu$ and $v_{m+1}^\top = v_m^\top P$ for $m \ge 0$. I.e., $v_{m+1}(j) = \sum_{i=1}^{3} v_m(i) P(i, j)$
◮ Then $E[f(X_k)] = v_k^\top f$, where $f = [f(1), f(2), f(3)]^\top$
◮ Ex: For $\theta$ as above, take $f = (0, 1, 0)$ so that $v_k^\top f = v_k(2)$

Simulation of DTMCs

Why simulate?

Naive method for generating a discrete random variable
◮ Goal: Generate $Y$ having pmf $p_i = P\{Y = y_i\}$ and cdf $c_i = P\{Y \le y_i\}$ for $1 \le i \le m$
◮ Example:

| $i$ | $p_i$ | $c_i$ |
|-----|-------|-------|
| 1 | 3/12 | 3/12 |
| 2 | 8/12 | 11/12 |
| 3 | 1/12 | 12/12 |

[Figure: unit interval with tick marks at 3/12, 11/12, and 1 and segments labeled $y_1$, $y_2$, $y_3$]

Ex: If $U = 0.27$, then return $Y = y_2$
◮ Q: How can we speed up this algorithm?

Simulation of DTMCs, Continued

Generating a sample path $X_0, X_1, \ldots$
1. Generate $X_0$ from $\mu$ and set $m = 0$
2. Generate $Y$ according to $P(X_m, \cdot)$ and set $X_{m+1} = Y$
3. Set $m \leftarrow m + 1$ and go to 2.

Estimating $\theta = E[f(X_k)]$
1. Generate $X_0, X_1, \ldots, X_k$ and set $Z = f(X_k)$
2. Repeat $n$ times to generate $Z_1, Z_2, \ldots, Z_n$ (i.i.d.)
3. Compute point estimate $\theta_n = (1/n) \sum_{i=1}^{n} Z_i$

Can generalize to estimate $\theta = E[f(W)]$, where $W = f(X_0, X_1, \ldots, X_k)$

DTMCs: Recursive Definition

Proposition
◮ Let $U_1, U_2, \ldots$ be a sequence of i.i.d. random variables and $X_0$ a given random variable
◮ $(X_n : n \ge 0)$ is a time homogeneous DTMC $\Leftrightarrow$ $X_{n+1} = f(X_n, U_{n+1})$ for $n \ge 0$ and some function $f$

In $\Rightarrow$ direction, $U_1, U_2, \ldots$ can be taken as uniform

Can use to prove that a given process $(X_n : n \ge 0)$ is a DTMC

Q: What if $U_0, U_1, \ldots$ are independent but not identical?

Q: Practical advantages of recursive definition?
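The numerical recursion and the naive generation method can be sketched in a few lines of Python. This is an illustrative sketch, not code from the course: the function names are hypothetical, the generation routine assumes the usual inversion rule (scan the cdf and return the first $y_i$ with $U < c_i$) that the $U = 0.27$ example suggests, and pads 1, 2, 3 are stored at indices 0, 1, 2.

```python
# Frog chain from the example; index 0, 1, 2 stands for pad 1, 2, 3.
P = [[0.0, 1/2, 1/2],
     [1/3, 0.0, 2/3],
     [3/4, 1/4, 0.0]]
MU = [1/2, 1/2, 0.0]

def distribution_after(k, mu=MU, P=P):
    """Numerical solution: start at v_0 = mu and apply v_{m+1}^T = v_m^T P k times."""
    v = list(mu)
    size = len(v)
    for _ in range(k):
        v = [sum(v[i] * P[i][j] for i in range(size)) for j in range(size)]
    return v

def expectation(k, f):
    """E[f(X_k)] = v_k^T f, with f given as a vector over the states."""
    v = distribution_after(k)
    return sum(vi * fi for vi, fi in zip(v, f))

def generate_discrete(values, pmf, u):
    """Naive generation: scan the cdf and return the first y_i with u < c_i."""
    c = 0.0
    for y, p in zip(values, pmf):
        c += p
        if u < c:
            return y
    return values[-1]  # only reached through floating-point round-off
```

For instance, `expectation(k, [0, 1, 0])` evaluates $\theta$ for the pad-2 example, and `generate_discrete(["y1", "y2", "y3"], [3/12, 8/12, 1/12], 0.27)` returns `"y2"`, in agreement with the slide. The linear scan costs up to $m$ comparisons per draw, which is what the speed-up question is about.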
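The sample-path algorithm and the point estimate $\theta_n$ fit naturally with the recursive definition $X_{n+1} = f(X_n, U_{n+1})$. The sketch below is one possible realization under stated assumptions: the $U_n$ are uniform draws from Python's `random` module, the update function inverts the cdf of the current row of $P$, and the names `invert`, `step`, `sample_x_k`, and `estimate_theta` are hypothetical. States are 0-based, so pad 2 is index 1.

```python
import random

P = [[0.0, 1/2, 1/2],
     [1/3, 0.0, 2/3],
     [3/4, 1/4, 0.0]]
MU = [1/2, 1/2, 0.0]

def invert(pmf, u):
    """Return the first index whose cumulative probability exceeds u."""
    c = 0.0
    for i, p in enumerate(pmf):
        c += p
        if u < c:
            return i
    # Round-off guard: fall back to the last index with positive probability.
    return max(i for i, p in enumerate(pmf) if p > 0)

def step(x, u):
    """Recursive form X_{n+1} = f(X_n, U_{n+1}): invert row x of P at u."""
    return invert(P[x], u)

def sample_x_k(k, rng):
    """Generate X_0 from mu, apply the recursion k times, and return X_k."""
    x = invert(MU, rng.random())
    for _ in range(k):
        x = step(x, rng.random())
    return x

def estimate_theta(k, n, f, rng):
    """Point estimate (1/n) * sum of Z_i, where Z_i = f(X_k) on replication i."""
    return sum(f(sample_x_k(k, rng)) for _ in range(n)) / n

# Estimate P{frog on pad 2 after 3rd jump} from 10,000 independent replications.
rng = random.Random(2020)
theta_hat = estimate_theta(3, 10_000, lambda x: 1.0 if x == 1 else 0.0, rng)
```

Passing the generator `rng` explicitly keeps the replications reproducible and makes it clear that the chain's only source of randomness is the uniform sequence.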

Example: $(s, S)$ Inventory System

The model
◮ $X_n$ = inventory level at the end of period $n$
◮ $D_n$ = demand in period $n$
◮ If $(s, S)$ policy is followed then

$$X_{n+1} = \begin{cases} X_n - D_{n+1} & \text{if } X_n - D_{n+1} \ge s; \\ S & \text{if } X_n - D_{n+1} < s \end{cases}$$

Claim: If $(D_n : n \ge 1)$ is i.i.d. then $(X_n : n \ge 0)$ is a DTMC with state space $\{s, s + 1, \ldots, S\}$

Q: Critique of model: what might be missing?

Digression: Stationary Distribution of a DTMC

Definition
◮ Informal: $\pi$ is a stationary distribution of the DTMC if $X_n \overset{D}{\sim} \pi$ implies $X_{n+1} \overset{D}{\sim} \pi$
◮ Formal:

$$\pi(j) = P(X_{n+1} = j) = \sum_i P(X_{n+1} = j \mid X_n = i)\, P(X_n = i) = \sum_i P(i, j)\, \pi(i)$$

or $\pi^\top = \pi^\top P$
◮ So if $X_0 \overset{D}{\sim} \pi$, then $X_n \overset{D}{\sim} \pi$ for $n \ge 1$

Under appropriate conditions, $\lim_{n \to \infty} P(X_n = i) = \pi(i)$
◮ Also written as $X_n \Rightarrow X$, where $X \overset{D}{\sim} \pi$

How to estimate $\theta = \sum_i f(i) \pi(i) = E[f(X)]$ (where $X \overset{D}{\sim} \pi$)?

General State Space Markov Chains: GSSMCs

Problem: With continuous state space, $P\{X_{n+1} = x' \mid X_n = x\} = 0$!
◮ Solution: Use transition kernel $P(x, A) = P\{X_{n+1} \in A \mid X_n = x\}$
◮ In practice: Use recursive definition

Example: Continuous $(s, S)$ inventory system

Example: Random walk on the real line
◮ Let $Y_1, Y_2, \ldots$ be an i.i.d. sequence of continuous, real-valued random variables
◮ Set $X_0 = 0$ and $X_{n+1} = X_n + Y_{n+1}$ for $n \ge 0$
◮ Then $(X_n : n \ge 0)$ is a GSSMC with state space $\Re$

GSSMC Example: Waiting times in GI/G/1 Queue

The GI/G/1 Queue
◮ Service center: single server, infinite-capacity waiting room
◮ Jobs arrive one at a time
◮ First-come, first served (FCFS) service discipline
◮ Successive interarrival times are i.i.d.
◮ Successive service times are i.i.d.

[Figure: a queue feeding a single server]
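The $(s, S)$ inventory recursion is a direct instance of the recursive definition, with the demand $D_{n+1}$ playing the role of the driving random variable, so a sample path takes only a few lines. In this sketch the policy parameters ($s = 2$, $S = 10$) and the demand distribution (uniform on $\{0, \ldots, 4\}$) are made-up values for illustration; the slides do not specify them, and the function names are hypothetical.

```python
import random

def next_inventory(x, demand, s, S):
    """One step of the (s, S) recursion: keep x - demand if it is at least s, else reset to S."""
    level = x - demand
    return level if level >= s else S

def simulate_inventory(x0, s, S, n_periods, draw_demand):
    """Return the path X_0, ..., X_n, with one i.i.d. demand drawn per period."""
    path = [x0]
    for _ in range(n_periods):
        path.append(next_inventory(path[-1], draw_demand(), s, S))
    return path

# Hypothetical parameters: s = 2, S = 10, demand uniform on {0, 1, 2, 3, 4}.
rng = random.Random(0)
path = simulate_inventory(x0=10, s=2, S=10, n_periods=20,
                          draw_demand=lambda: rng.randint(0, 4))
```

With nonnegative demands and a starting level in $\{s, \ldots, S\}$, every value in `path` stays in that set, which matches the state space in the claim.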

GI/G/1 Waiting Times, Continued

Notation
◮ $W_n$ = the waiting time of the $n$th customer (excl. of service)
◮ $A_n$ / $D_n$ = arrival/departure time of the $n$th customer
◮ $V_n$ = processing time of the $n$th customer

Recursion (Lindley Equation)
◮ $D_n = A_n + W_n + V_n$
◮ Thus

$$W_{n+1} = [D_n - A_{n+1}]^+ = [A_n + W_n + V_n - A_{n+1}]^+ = [W_n + V_n - I_{n+1}]^+$$

where $I_{n+1} = A_{n+1} - A_n$ is the $(n + 1)$st interarrival time and $[x]^+ = \max(x, 0)$
◮ Thus $(W_n : n \ge 0)$ is a GSSMC
◮ To simulate: generate the $V_n$'s and $I_n$'s and apply recursion
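The Lindley recursion translates almost verbatim into code. The sketch below assumes that customer 0 finds the system empty ($W_0 = 0$) and, purely for illustration, draws exponential service times with rate 1.25 and exponential interarrival times with rate 1.0; none of these choices come from the slides, and `lindley_waits` is a hypothetical name.

```python
import random

def lindley_waits(service_times, interarrival_times, w0=0.0):
    """Waiting times W_0, W_1, ... from W_{n+1} = max(W_n + V_n - I_{n+1}, 0).

    service_times[n] is V_n and interarrival_times[n] is I_{n+1}.
    """
    waits = [w0]
    for v, gap in zip(service_times, interarrival_times):
        waits.append(max(waits[-1] + v - gap, 0.0))
    return waits

# Hypothetical GI/G/1 instance: exponential service (rate 1.25) and
# exponential interarrival times (rate 1.0), both i.i.d.
rng = random.Random(590)
n = 1000
V = [rng.expovariate(1.25) for _ in range(n)]
I = [rng.expovariate(1.0) for _ in range(n)]
W = lindley_waits(V, I)
avg_wait = sum(W) / len(W)
```

Only the two i.i.d. input sequences are needed; arrival and departure times never have to be stored, which is the practical payoff of the recursion.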
