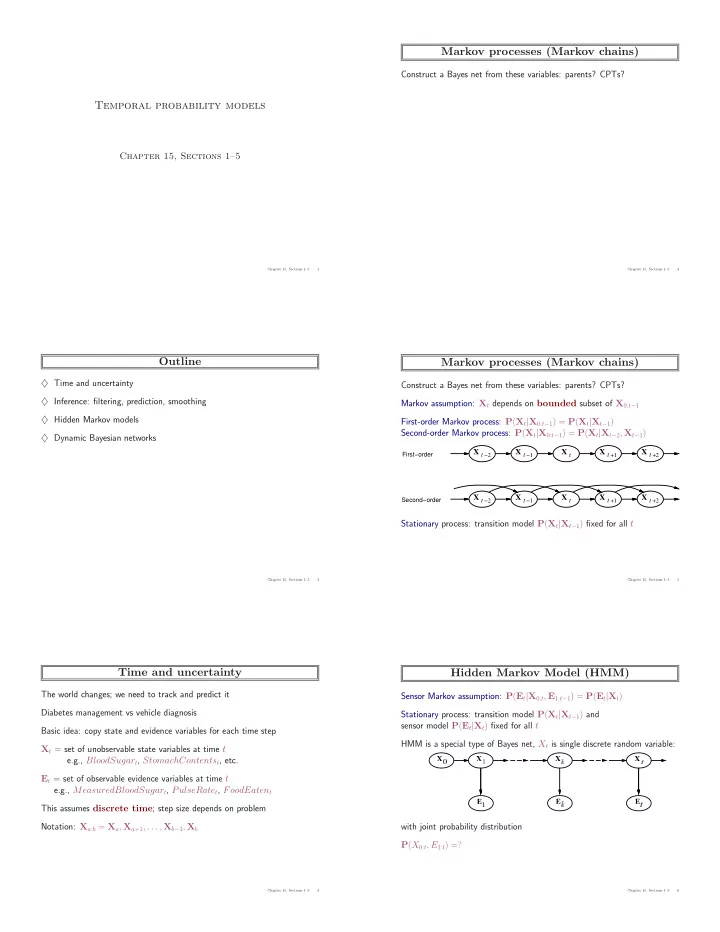

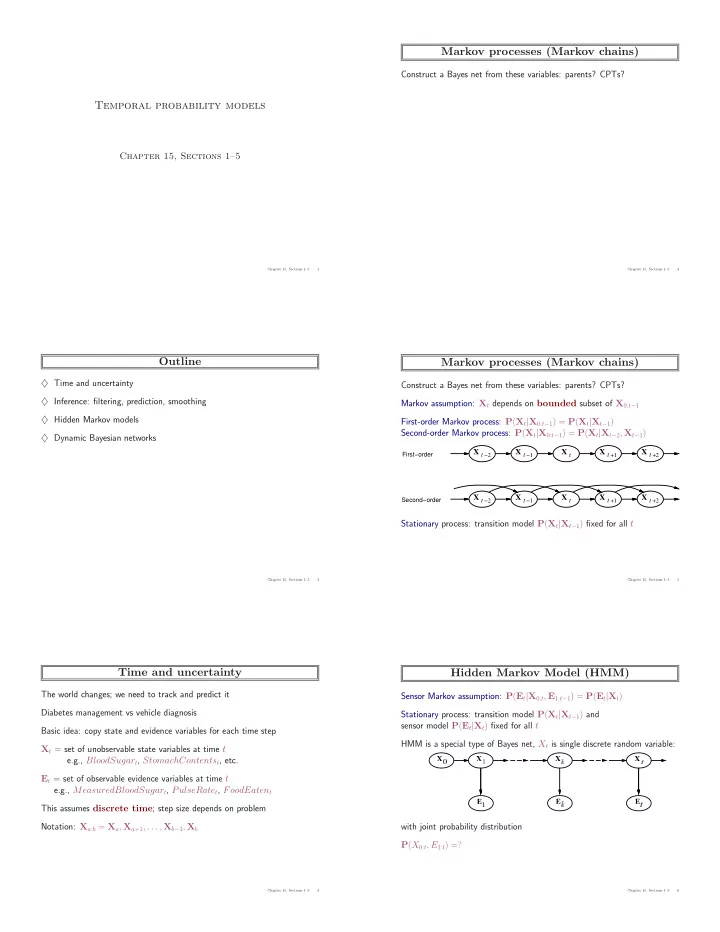

Temporal probability models

Chapter 15, Sections 1–5

Outline

♦ Time and uncertainty
♦ Inference: filtering, prediction, smoothing
♦ Hidden Markov models
♦ Dynamic Bayesian networks

Time and uncertainty

The world changes; we need to track and predict it.

Diabetes management vs vehicle diagnosis

Basic idea: copy state and evidence variables for each time step.

$X_t$ = set of unobservable state variables at time $t$, e.g., $BloodSugar_t$, $StomachContents_t$, etc.

$E_t$ = set of observable evidence variables at time $t$, e.g., $MeasuredBloodSugar_t$, $PulseRate_t$, $FoodEaten_t$

This assumes discrete time; step size depends on problem.

Notation: $X_{a:b} = X_a, X_{a+1}, \ldots, X_{b-1}, X_b$

Markov processes (Markov chains)

Construct a Bayes net from these variables: parents? CPTs?

Markov assumption: $X_t$ depends on bounded subset of $X_{0:t-1}$

First-order Markov process: $P(X_t \mid X_{0:t-1}) = P(X_t \mid X_{t-1})$

Second-order Markov process: $P(X_t \mid X_{0:t-1}) = P(X_t \mid X_{t-2}, X_{t-1})$

[Figure: first-order and second-order Markov chains over $X_{t-2}, X_{t-1}, X_t, X_{t+1}, X_{t+2}$]

Stationary process: transition model $P(X_t \mid X_{t-1})$ fixed for all $t$

Hidden Markov Model (HMM)

Sensor Markov assumption: $P(E_t \mid X_{0:t}, E_{1:t-1}) = P(E_t \mid X_t)$

Stationary process: transition model $P(X_t \mid X_{t-1})$ and sensor model $P(E_t \mid X_t)$ fixed for all $t$

An HMM is a special type of Bayes net in which $X_t$ is a single discrete random variable, with joint probability distribution

$$P(X_{0:t}, E_{1:t}) = \;?$$
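The stationarity assumption means a single transition table is reused at every time step. As a minimal sketch of that idea (not code from the course), the Python below pushes a state distribution forward through a first-order chain. The two-state table and the names `TRANSITION`, `step` and `propagate` are illustrative assumptions; the numbers happen to match the rain model used in the umbrella example.

```python
# Transition model P(X_t | X_{t-1}) for a two-state chain.
# ASSUMPTION: illustrative numbers, TRANSITION[previous][next].
TRANSITION = {
    True: {True: 0.7, False: 0.3},
    False: {True: 0.3, False: 0.7},
}


def step(dist, transition=TRANSITION):
    """One time step: P(X_t) = sum over x_{t-1} of P(X_t | x_{t-1}) P(x_{t-1})."""
    return {
        nxt: sum(transition[prev][nxt] * p for prev, p in dist.items())
        for nxt in transition
    }


def propagate(dist, k, transition=TRANSITION):
    """Apply the same (stationary) transition model k times."""
    for _ in range(k):
        dist = step(dist, transition)
    return dist


start = {True: 1.0, False: 0.0}  # state known with certainty at time 0
two_steps = propagate(start, 2)
many_steps = propagate(start, 50)
```

Because the table never changes with $t$, `propagate` needs no per-step parameters; with this symmetric table the distribution drifts toward uniform as `k` grows.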

Hidden Markov Model (HMM): joint distribution

With the sensor Markov assumption $P(E_t \mid X_{0:t}, E_{1:t-1}) = P(E_t \mid X_t)$ and stationary transition and sensor models, the joint probability distribution of an HMM is

$$P(X_{0:t}, E_{1:t}) = P(X_0) \prod_{i=1}^{t} P(X_i \mid X_{i-1})\, P(E_i \mid X_i)$$

Example

[Figure: umbrella network, $Rain_{t-1} \to Rain_t \to Rain_{t+1}$ with $Rain_t \to Umbrella_t$ at each step]

| $R_{t-1}$ | $P(R_t)$ |
|---|---|
| t | 0.7 |
| f | 0.3 |

| $R_t$ | $P(U_t)$ |
|---|---|
| t | 0.9 |
| f | 0.2 |

First-order Markov assumption not exactly true in real world!

Possible fixes:
1. Increase order of Markov process
2. Augment state, e.g., add $Temp_t$, $Pressure_t$

Example: robot motion. Augment position and velocity with $Battery_t$

Inference tasks

Filtering: $P(X_t \mid e_{1:t})$
belief state: input to the decision process of a rational agent

Prediction: $P(X_{t+k} \mid e_{1:t})$ for $k > 0$
evaluation of possible action sequences; like filtering without the evidence

Smoothing: $P(X_k \mid e_{1:t})$ for $0 \le k < t$
better estimate of past states, essential for learning

Most likely explanation: $\arg\max_{x_{1:t}} P(x_{1:t} \mid e_{1:t})$
speech recognition, decoding with a noisy channel

Filtering

Aim: devise a recursive state estimation algorithm:

$$P(X_{t+1} \mid e_{1:t+1}) = f(e_{t+1}, P(X_t \mid e_{1:t}))$$

$$\begin{aligned}
P(X_{t+1} \mid e_{1:t+1}) &= P(X_{t+1} \mid e_{1:t}, e_{t+1}) \\
&= \alpha\, P(e_{t+1} \mid X_{t+1}, e_{1:t})\, P(X_{t+1} \mid e_{1:t}) \\
&= \alpha\, P(e_{t+1} \mid X_{t+1})\, P(X_{t+1} \mid e_{1:t})
\end{aligned}$$

I.e., prediction + estimation. Prediction by summing out $X_t$:

$$\begin{aligned}
P(X_{t+1} \mid e_{1:t+1}) &= \alpha\, P(e_{t+1} \mid X_{t+1}) \sum_{x_t} P(X_{t+1}, x_t \mid e_{1:t}) \\
&= \alpha\, P(e_{t+1} \mid X_{t+1}) \sum_{x_t} P(X_{t+1} \mid x_t, e_{1:t})\, P(x_t \mid e_{1:t}) \\
&= \alpha\, P(e_{t+1} \mid X_{t+1}) \sum_{x_t} P(X_{t+1} \mid x_t)\, P(x_t \mid e_{1:t})
\end{aligned}$$
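The recursive update derived above can be sketched in a few lines of Python. This is a minimal illustration rather than the course's implementation: the names `PRIOR`, `TRANSITION`, `SENSOR`, `forward` and `filter_sequence` are hypothetical, the tables are the umbrella example's CPTs with their complements filled in, and the uniform prior on day 0 is an assumption.

```python
# ASSUMPTION: uniform prior over Rain_0.
PRIOR = {True: 0.5, False: 0.5}
# TRANSITION[r_prev][r_next] = P(R_t = r_next | R_{t-1} = r_prev)
TRANSITION = {True: {True: 0.7, False: 0.3}, False: {True: 0.3, False: 0.7}}
# SENSOR[r][u] = P(U_t = u | R_t = r)
SENSOR = {True: {True: 0.9, False: 0.1}, False: {True: 0.2, False: 0.8}}


def normalize(dist):
    """Scale the values so they sum to 1 (the alpha in the update)."""
    total = sum(dist.values())
    return {x: p / total for x, p in dist.items()}


def forward(f, evidence):
    """One filtering step: prediction (sum out x_t), then weighting by the evidence."""
    predicted = {
        x1: sum(TRANSITION[x0][x1] * f[x0] for x0 in f)
        for x1 in f
    }
    return normalize({x1: SENSOR[x1][evidence] * predicted[x1] for x1 in f})


def filter_sequence(prior, evidence_seq):
    """Return the filtered distributions P(X_t | e_1:t) for t = 1..len(evidence_seq)."""
    beliefs = []
    f = prior
    for e in evidence_seq:
        f = forward(f, e)
        beliefs.append(f)
    return beliefs


beliefs = filter_sequence(PRIOR, [True, True])  # umbrella seen on days 1 and 2
```

Only the previous belief `f` is carried between steps, so each update costs the same regardless of how long the evidence sequence already is.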

Filtering: the forward message

The filtering update

$$P(X_{t+1} \mid e_{1:t+1}) = \alpha\, P(e_{t+1} \mid X_{t+1}) \sum_{x_t} P(X_{t+1} \mid x_t)\, P(x_t \mid e_{1:t})$$

can be written as

$$f_{1:t+1} = \text{Forward}(f_{1:t}, e_{t+1}) \quad \text{where } f_{1:t} = P(X_t \mid e_{1:t})$$

Time and space per update are constant (independent of $t$).

Filtering example

[Figure: $Rain_0 \to Rain_1 \to Rain_2$ with evidence $Umbrella_1$, $Umbrella_2$; CPTs as in the umbrella example: $P(R_t = true \mid R_{t-1})$ is 0.7 / 0.3 and $P(U_t = true \mid R_t)$ is 0.9 / 0.2 for $t$ / $f$]

| | $Rain_0$ | $Rain_1$ | $Rain_2$ |
|---|---|---|---|
| Predicted, true | | 0.500 | 0.627 |
| Predicted, false | | 0.500 | 0.373 |
| Filtered, true | 0.500 | 0.818 | 0.883 |
| Filtered, false | 0.500 | 0.182 | 0.117 |

(These values correspond to the umbrella being observed on both days.)

Most likely explanation

Most likely sequence $\neq$ sequence of most likely states!

Most likely path to each $x_{t+1}$ = most likely path to some $x_t$ plus one more step:

$$\max_{x_1 \ldots x_t} P(x_1, \ldots, x_t, X_{t+1} \mid e_{1:t+1}) = P(e_{t+1} \mid X_{t+1}) \max_{x_t} \Big( P(X_{t+1} \mid x_t) \max_{x_1 \ldots x_{t-1}} P(x_1, \ldots, x_{t-1}, x_t \mid e_{1:t}) \Big)$$

(The equality holds up to a normalizing constant, which does not change the arg max.)

Identical to filtering, except $f_{1:t}$ is replaced by

$$m_{1:t} = \max_{x_1 \ldots x_{t-1}} P(x_1, \ldots, x_{t-1}, X_t \mid e_{1:t}),$$

i.e., $m_{1:t}(i)$ gives the probability of the most likely path to state $i$.

Update has sum replaced by max, giving the Viterbi algorithm:

$$m_{1:t+1} = P(e_{t+1} \mid X_{t+1}) \max_{x_t} \big( P(X_{t+1} \mid x_t)\, m_{1:t} \big)$$

Viterbi example

[Figure: state space paths through $Rain_1, \ldots, Rain_5$ (true/false at each step), with the most likely path into each state highlighted]

| | $t=1$ | $t=2$ | $t=3$ | $t=4$ | $t=5$ |
|---|---|---|---|---|---|
| umbrella | true | true | false | true | true |
| $m_{1:t}$, $Rain_t = true$ | .8182 | .5155 | .0361 | .0334 | .0210 |
| $m_{1:t}$, $Rain_t = false$ | .1818 | .0491 | .1237 | .0173 | .0024 |

Implementation Issues

Viterbi message:

$$m_{1:t+1} = P(e_{t+1} \mid X_{t+1}) \max_{x_t} \big( P(X_{t+1} \mid x_t)\, m_{1:t} \big)$$

or filtering update:

$$P(X_{t+1} \mid e_{1:t+1}) = \alpha\, P(e_{t+1} \mid X_{t+1}) \sum_{x_t} P(X_{t+1} \mid x_t)\, P(x_t \mid e_{1:t})$$

What is $10^{-6} \cdot 10^{-6} \cdot 10^{-6}$?
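The Viterbi message update can be sketched as follows. This is a hedged illustration, not the course's code: the names `viterbi`, `PRIOR`, `TRANSITION` and `SENSOR` are hypothetical, the uniform day-0 prior is an assumption, and $m_{1:1}$ is taken to be the normalized filtered distribution for day 1 while later messages are left unnormalized, which is what the example table's numbers suggest.

```python
# ASSUMPTION: uniform prior over Rain_0.
PRIOR = {True: 0.5, False: 0.5}
# TRANSITION[r_prev][r_next] = P(R_t = r_next | R_{t-1} = r_prev)
TRANSITION = {True: {True: 0.7, False: 0.3}, False: {True: 0.3, False: 0.7}}
# SENSOR[r][u] = P(U_t = u | R_t = r)
SENSOR = {True: {True: 0.9, False: 0.1}, False: {True: 0.2, False: 0.8}}


def viterbi(evidence_seq):
    """Return (most likely state sequence, list of messages m_1:1 .. m_1:t)."""
    states = list(PRIOR)

    # m_1:1 is the filtered (normalized) distribution after the first observation.
    predicted = {x1: sum(TRANSITION[x0][x1] * PRIOR[x0] for x0 in states)
                 for x1 in states}
    unnorm = {x1: SENSOR[x1][evidence_seq[0]] * predicted[x1] for x1 in states}
    total = sum(unnorm.values())
    m = {x: v / total for x, v in unnorm.items()}

    messages = [m]
    backpointers = []
    for e in evidence_seq[1:]:
        new_m, back = {}, {}
        for x1 in states:
            # Best predecessor of x1: the max replaces the sum used in filtering.
            best_prev = max(states, key=lambda x0: TRANSITION[x0][x1] * m[x0])
            new_m[x1] = SENSOR[x1][e] * TRANSITION[best_prev][x1] * m[best_prev]
            back[x1] = best_prev
        messages.append(new_m)
        backpointers.append(back)
        m = new_m

    # Follow the back pointers from the best final state.
    path = [max(states, key=lambda x: m[x])]
    for back in reversed(backpointers):
        path.append(back[path[-1]])
    path.reverse()
    return path, messages


path, messages = viterbi([True, True, False, True, True])
```

The back pointers are what turn the messages into an actual sequence: each state remembers which predecessor achieved its maximum, and the path is read off backwards from the best final state.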

Implementation Issues (continued)

What is floating point arithmetic precision?

In exact arithmetic $10^{-6} \cdot 10^{-6} \cdot 10^{-6} = 10^{-18}$, but the Viterbi message and the unnormalized filtering update keep multiplying small probabilities at every time step. After enough steps the product underflows to 0 in floating point (double precision cannot represent positive values below roughly $5 \times 10^{-324}$).

Answer? Use either:
– Rescaling: multiply values by a (large) constant
– logsum trick (Assignment 5)

log is monotone increasing, so:

$$\arg\max f(x) = \arg\max \log f(x)$$

Also,

$$\log(a \cdot b) = \log a + \log b$$

Therefore, work with sums of logarithms of probabilities, rather than products of probabilities:

$$m_{1:t+1} = P(e_{t+1} \mid X_{t+1}) \max_{x_t} \big( P(X_{t+1} \mid x_t)\, m_{1:t} \big)$$

$$\rightarrow \quad \log m_{1:t+1} = \log P(e_{t+1} \mid X_{t+1}) + \max_{x_t} \big( \log P(X_{t+1} \mid x_t) + \log m_{1:t} \big)$$

Hidden Markov models

$X_t$ is a single, discrete variable (usually $E_t$ is too)

Domain of $X_t$ is $\{1, \ldots, S\}$

Transition matrix $T_{ij} = P(X_t = j \mid X_{t-1} = i)$, e.g.,

$$T = \begin{pmatrix} 0.7 & 0.3 \\ 0.3 & 0.7 \end{pmatrix}$$

Sensor matrix $O_t$ for each time step, diagonal elements $P(e_t \mid X_t = i)$, e.g., with $U_1 = true$,

$$O_1 = \begin{pmatrix} 0.9 & 0 \\ 0 & 0.2 \end{pmatrix}$$

Forward messages as column vectors:

$$f_{1:t+1} = \alpha\, O_{t+1} T^{\top} f_{1:t}$$

Dynamic Bayesian networks

$X_t$, $E_t$ contain arbitrarily many variables in a replicated Bayes net

[Figure: two example DBNs. Left: the umbrella network ($Rain_0$, $Rain_1$, $Umbrella_1$) with its CPTs. Right: a robot network with $X_0$, $X_1$, $Battery_0$, $Battery_1$, $BMeter_1$ and $Z_1$]

Summary

Temporal models use state and sensor variables replicated over time

Markov assumptions and stationarity assumption, so we need
– transition model $P(X_t \mid X_{t-1})$
– sensor model $P(E_t \mid X_t)$

Tasks are filtering, prediction, smoothing, most likely sequence; all done recursively with constant cost per time step

Hidden Markov models have a single discrete state variable; used for speech recognition

Dynamic Bayes nets subsume HMMs; exact update intractable

Example Umbrella Problems

Filtering:

$$f_{1:t+1} := P(X_{t+1} \mid e_{1:t+1}) = \alpha\, P(e_{t+1} \mid X_{t+1}) \sum_{x_t} P(X_{t+1} \mid x_t)\, P(x_t \mid e_{1:t})$$

Viterbi:

$$m_{1:t+1} = P(e_{t+1} \mid X_{t+1}) \max_{x_t} \big( P(X_{t+1} \mid x_t)\, m_{1:t} \big)$$

| $R_{t-1}$ | $P(R_t = t)$ | $P(R_t = f)$ |
|---|---|---|
| t | 0.7 | 0.3 |
| f | 0.3 | 0.7 |

| $R_t$ | $P(U_t = t)$ | $P(U_t = f)$ |
|---|---|---|
| t | 0.9 | 0.1 |
| f | 0.2 | 0.8 |

$$P(R_3 \mid \neg u_1, u_2, \neg u_3) = \;?$$

$$\arg\max_{R_{1:3}} P(R_{1:3} \mid \neg u_1, u_2, \neg u_3) = \;?$$
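For a problem this small, both questions can be cross-checked by brute force: enumerate all eight rain sequences and score each with the HMM joint distribution, instead of running the filtering or Viterbi recursions. The sketch below is only a checking aid under stated assumptions: the names `joint`, `scores`, `p_rain3` and `best_sequence` are hypothetical, and the uniform prior over $Rain_0$ is assumed because the slide does not state one.

```python
from itertools import product

# ASSUMPTION: uniform prior over Rain_0 (not given on the slide).
PRIOR = {True: 0.5, False: 0.5}
# TRANSITION[r_prev][r_next] = P(R_t = r_next | R_{t-1} = r_prev)
TRANSITION = {True: {True: 0.7, False: 0.3}, False: {True: 0.3, False: 0.7}}
# SENSOR[r][u] = P(U_t = u | R_t = r)
SENSOR = {True: {True: 0.9, False: 0.1}, False: {True: 0.2, False: 0.8}}


def joint(rains, umbrellas):
    """P(r_1..r_t, u_1..u_t): product of transition and sensor terms, Rain_0 summed out."""
    total = 0.0
    for r0 in PRIOR:
        p = PRIOR[r0]
        prev = r0
        for r, u in zip(rains, umbrellas):
            p *= TRANSITION[prev][r] * SENSOR[r][u]
            prev = r
        total += p
    return total


evidence = (False, True, False)  # not u_1, u_2, not u_3

# Score every possible assignment to (R_1, R_2, R_3).
scores = {seq: joint(seq, evidence) for seq in product((True, False), repeat=3)}
z = sum(scores.values())  # P(evidence)

# Filtering answer: marginal of R_3 given all three observations.
p_rain3 = sum(s for seq, s in scores.items() if seq[2]) / z

# Most likely explanation: the single highest-scoring sequence.
best_sequence = max(scores, key=scores.get)
```

Under these assumptions the enumeration gives $P(R_3 = true \mid \neg u_1, u_2, \neg u_3) \approx 0.148$ and the most likely sequence is no rain on all three days. The smoothed marginal for day 2 nevertheless favors rain, which illustrates that the most likely sequence need not be the sequence of most likely states. Enumeration is exponential in the sequence length, so it is useful only for checking the recursive algorithms on tiny cases.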
