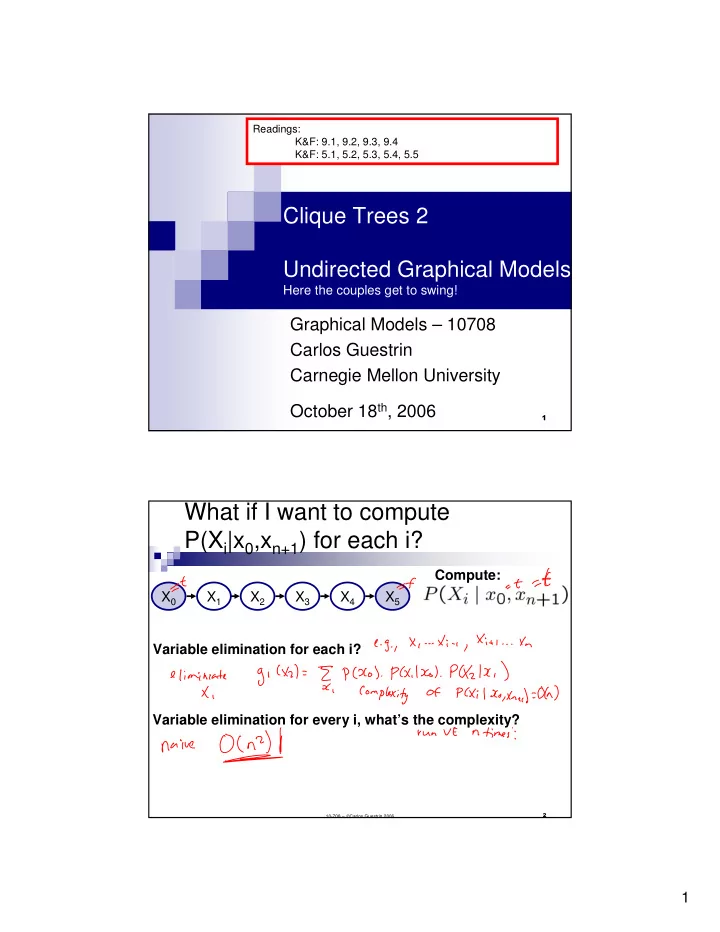

Clique Trees 2
Undirected Graphical Models: Here the couples get to swing!
Graphical Models – 10708
Carlos Guestrin, Carnegie Mellon University
October 18th, 2006
Readings: K&F 9.1, 9.2, 9.3, 9.4; K&F 5.1, 5.2, 5.3, 5.4, 5.5

- What if I want to compute $P(X_i \mid x_0, x_{n+1})$ for each $i$ on the chain $X_0, X_1, X_2, X_3, X_4, X_5$?
- Run variable elimination separately for each $i$?
- Variable elimination for every $i$: what's the complexity?

10-708 – Carlos Guestrin 2006
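One way to see what per-$i$ elimination wastes is to compare it with a scheme that shares work. This is a minimal sketch, assuming (hypothetically) a chain of binary variables with made-up pairwise potentials `psi[t][a][b]` linking $X_t$ and $X_{t+1}$. For a chain BN these can be the CPTs, because the prior on the observed $X_0$ only rescales. One forward sweep and one backward sweep produce every $P(X_i \mid x_0, x_{n+1})$, and each result is checked against enumerating the interior variables separately for each $i$. The forward/backward scheme is our illustrative choice. It previews the message reuse that clique trees formalize later in the lecture.

```python
from itertools import product

def chain_posteriors(psi, x_first, x_last, k=2):
    """P(X_t | X_0 = x_first, X_m = x_last) for every interior t of a chain.

    psi[t][a][b] is a hypothetical non-negative potential linking X_t = a and
    X_{t+1} = b; the chain has variables X_0 .. X_m with m = len(psi).
    """
    m = len(psi)
    # fwd[t][a]: sum over X_0..X_{t-1} of (evidence on X_0) x potentials, with X_t = a.
    fwd = [[float(a == x_first) for a in range(k)]]
    for t in range(m):
        fwd.append([sum(fwd[t][a] * psi[t][a][b] for a in range(k)) for b in range(k)])
    # bwd[t][a]: sum over X_{t+1}..X_m of potentials x (evidence on X_m), with X_t = a.
    bwd = [None] * (m + 1)
    bwd[m] = [float(b == x_last) for b in range(k)]
    for t in range(m - 1, -1, -1):
        bwd[t] = [sum(psi[t][a][b] * bwd[t + 1][b] for b in range(k)) for a in range(k)]
    posteriors = {}
    for t in range(1, m):
        w = [fwd[t][a] * bwd[t][a] for a in range(k)]  # unnormalized P(X_t, evidence)
        z = sum(w)
        posteriors[t] = [v / z for v in w]
    return posteriors

def brute_force_posterior(psi, x_first, x_last, i, k=2):
    """The same query for a single X_i, enumerating every interior assignment."""
    m = len(psi)
    w = [0.0] * k
    for mid in product(range(k), repeat=m - 1):
        xs = (x_first,) + mid + (x_last,)
        p = 1.0
        for t in range(m):
            p *= psi[t][xs[t]][xs[t + 1]]
        w[xs[i]] += p
    z = sum(w)
    return [v / z for v in w]

# Chain X_0 .. X_5 (five links) with made-up potentials.
PSI = [[[1.0 + t, 2.0], [0.5, 1.5 + 0.1 * t]] for t in range(5)]
POST = chain_posteriors(PSI, x_first=1, x_last=0)
```

The brute-force version enumerates $k^{m-1}$ assignments for every query. The sweep version touches each link twice in total, however many $X_i$ are asked for.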

Cluster graph
- Cluster graph: for a set of factors $F$
  - Undirected graph
  - Each node $i$ is associated with a cluster $C_i$
  - Family preserving: for each factor $f_j \in F$, there exists a node $i$ such that $\mathrm{scope}[f_j] \subseteq C_i$
  - Each edge $i - j$ is associated with a separator $S_{ij} = C_i \cap C_j$

[Figure: cluster graph with clusters CD, DIG, GSI, GJSL, JSL, HGJ]

Factors generated by VE

[Figure: student network over Coherence, Difficulty, Intelligence, Grade, SAT, Letter, Job, Happy]

- Elimination order: {C, D, I, S, L, H, J, G}
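Both conditions above can be checked mechanically. This is a small sketch that assumes the five-clique tree this deck uses for the student network (C1: CD, C2: DIG, C3: GSI, C4: GJSL, C5: HGJ). We assume these cliques are connected as the chain C1 - C2 - C3 - C4 - C5. We also assume the CPT scopes of the extended student network: P(C), P(D|C), P(I), P(G|D,I), P(S|I), P(L|G), P(J|L,S), P(H|G,J). The helper names `separators` and `family_preserving` are hypothetical.

```python
# Assumed student-network clique tree (edges are an assumption for illustration).
CLUSTERS = {1: {"C", "D"}, 2: {"D", "I", "G"}, 3: {"G", "S", "I"},
            4: {"G", "J", "S", "L"}, 5: {"H", "G", "J"}}
EDGES = [(1, 2), (2, 3), (3, 4), (4, 5)]

# Assumed CPT scopes of the extended student network.
CPT_SCOPES = [{"C"}, {"C", "D"}, {"I"}, {"G", "D", "I"}, {"S", "I"},
              {"L", "G"}, {"J", "L", "S"}, {"H", "G", "J"}]

def separators(clusters, edges):
    """S_ij = C_i & C_j for every edge i - j of the cluster graph."""
    return {(i, j): clusters[i] & clusters[j] for i, j in edges}

def family_preserving(clusters, scopes):
    """True if every factor scope fits entirely inside at least one cluster."""
    return all(any(scope <= c for c in clusters.values()) for scope in scopes)

SEPS = separators(CLUSTERS, EDGES)
```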

Cluster graph for VE
- VE generates a cluster tree!
  - One clique for each factor used/generated
  - Edge $i - j$ if $f_i$ is used to generate $f_j$
  - "Message" from $i$ to $j$ generated when marginalizing a variable from $f_i$
  - Tree because each factor is used only once
- Proposition:
  - "Message" $\delta_{ij}$ from $i$ to $j$
  - $\mathrm{Scope}[\delta_{ij}] \subseteq S_{ij}$

[Figure: cluster tree with clusters CD, DIG, GSI, GJSL, JSL, HGJ]

Running intersection property
- Running intersection property (RIP)
  - A cluster tree satisfies RIP if, whenever $X \in C_i$ and $X \in C_j$, $X$ is in every cluster on the (unique) path from $C_i$ to $C_j$
- Theorem:
  - The cluster tree generated by VE satisfies RIP
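RIP is a condition on pairs of clusters, so a direct check is short to write, even though it is quadratic and unoptimized. This sketch assumes clusters are Python sets keyed by id and the tree is given as an edge list. `satisfies_rip` and `tree_path` are illustrative names. The block tests the assumed student clique tree and a three-cluster counterexample.

```python
from collections import deque

def tree_path(nbrs, src, dst):
    """The unique path between src and dst in a tree, listed from dst back to src."""
    parent = {src: None}
    queue = deque([src])
    while queue:  # breadth-first search recording each node's parent
        u = queue.popleft()
        for w in nbrs[u]:
            if w not in parent:
                parent[w] = u
                queue.append(w)
    path = [dst]
    while path[-1] != src:
        path.append(parent[path[-1]])
    return path

def satisfies_rip(clusters, edges):
    """A variable shared by C_i and C_j must be in every cluster on the path between them."""
    nbrs = {i: set() for i in clusters}
    for i, j in edges:
        nbrs[i].add(j)
        nbrs[j].add(i)
    ids = sorted(clusters)
    for a, i in enumerate(ids):
        for j in ids[a + 1:]:
            shared = clusters[i] & clusters[j]
            if shared and not all(shared <= clusters[k] for k in tree_path(nbrs, i, j)):
                return False
    return True

# Assumed student-network clique tree (chain C1 - C2 - C3 - C4 - C5).
STUDENT = {1: {"C", "D"}, 2: {"D", "I", "G"}, 3: {"G", "S", "I"},
           4: {"G", "J", "S", "L"}, 5: {"H", "G", "J"}}
STUDENT_EDGES = [(1, 2), (2, 3), (3, 4), (4, 5)]
# Counterexample: A is in clusters 1 and 3 but not in cluster 2 between them.
BROKEN = {1: {"A", "B"}, 2: {"B", "C"}, 3: {"C", "A"}}
BROKEN_EDGES = [(1, 2), (2, 3)]
```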

Constructing a clique tree from VE
- Select an elimination order $\prec$
- Connect the factors that would be generated if you ran VE with order $\prec$
- Simplify!
  - Eliminate any factor whose scope is a subset of a neighbor's

Find clique tree from chordal graph
- Triangulate the moralized graph to obtain a chordal graph
- Find maximal cliques
  - NP-complete in general
  - Easy for chordal graphs: max-cardinality search
- Maximum spanning tree finds a clique tree satisfying RIP!!!
  - Generate a weighted graph over cliques
  - Edge weight $(i,j)$ is the separator size $|C_i \cap C_j|$

[Figure: student network over Coherence, Difficulty, Intelligence, Grade, SAT, Letter, Job, Happy]
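The maximum-spanning-tree step above can be sketched with Kruskal's algorithm. That choice is ours; Prim's algorithm would do as well. The sketch assumes the maximal cliques have already been found, so the max-cardinality search step is not shown, and takes them as a list of Python sets. The cliques below are the five student-network cliques used elsewhere in this deck.

```python
from itertools import combinations

def max_spanning_clique_tree(cliques):
    """Kruskal's algorithm on the complete graph over cliques, weight |C_i & C_j|."""
    parent = list(range(len(cliques)))

    def find(u):
        while parent[u] != u:
            parent[u] = parent[parent[u]]  # path halving
            u = parent[u]
        return u

    candidates = sorted(
        ((len(cliques[i] & cliques[j]), i, j)
         for i, j in combinations(range(len(cliques)), 2)),
        reverse=True,  # heaviest separators first
    )
    tree = []
    for weight, i, j in candidates:
        ri, rj = find(i), find(j)
        if ri != rj:  # adding i - j does not close a cycle
            parent[ri] = rj
            tree.append((i, j, weight))
    return tree

CLIQUES = [{"C", "D"}, {"D", "I", "G"}, {"G", "S", "I"},
           {"G", "J", "S", "L"}, {"H", "G", "J"}]
TREE = max_spanning_clique_tree(CLIQUES)
```

On these cliques the weight-2 edges DIG-GSI, GSI-GJSL and GJSL-HGJ are taken first, then CD-DIG. The result is the chain CD - DIG - GSI - GJSL - HGJ, with total weight 7.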

Clique tree & independencies
- Clique tree (or junction tree)
  - A cluster tree that satisfies RIP
- Theorem:
  - Given some BN with structure $G$ and factors $F$
  - For a clique tree $T$ for $F$, consider $C_i - C_j$ with separator $S_{ij}$:
    - $\mathbf{X}$: any set of vars on the $C_i$ side of the tree (excluding $S_{ij}$)
    - $\mathbf{Y}$: any set of vars on the $C_j$ side of the tree (excluding $S_{ij}$)
    - Then $(\mathbf{X} \perp \mathbf{Y} \mid S_{ij})$ in the BN
  - Furthermore, $I(T) \subseteq I(G)$

Variable elimination in a clique tree 1

[Figure: clique tree C1: CD, C2: DIG, C3: GSI, C4: GJSL, C5: HGJ for the student network]

- Clique tree for a BN
  - Each CPT is assigned to a clique
  - The initial potential $\pi^0_i(C_i)$ is the product of the CPTs assigned to $C_i$
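Assigning CPTs to cliques only requires each CPT's scope to fit inside some clique (family preservation). This is a minimal sketch, assuming the same student-network cliques and CPT scopes as before. The rule "first clique by id that contains the scope" is an arbitrary choice; any containing clique gives a valid assignment. $\pi^0(C_i)$ is then the product of the CPTs listed for $C_i$.

```python
def assign_cpts(cliques, cpt_scopes):
    """Give each CPT to the first clique (by id) that contains its whole scope.

    Raises StopIteration if some scope fits in no clique, i.e. the tree is not
    family preserving for these CPTs.
    """
    assignment = {i: [] for i in cliques}
    for name, scope in cpt_scopes.items():
        home = next(i for i in sorted(cliques) if scope <= cliques[i])
        assignment[home].append(name)
    return assignment

# Assumed student-network cliques and CPT scopes.
CLIQUES = {1: {"C", "D"}, 2: {"D", "I", "G"}, 3: {"G", "S", "I"},
           4: {"G", "J", "S", "L"}, 5: {"H", "G", "J"}}
CPT_SCOPES = {"P(C)": {"C"}, "P(D|C)": {"D", "C"}, "P(I)": {"I"},
              "P(G|D,I)": {"G", "D", "I"}, "P(S|I)": {"S", "I"},
              "P(L|G)": {"L", "G"}, "P(J|L,S)": {"J", "L", "S"},
              "P(H|G,J)": {"H", "G", "J"}}
ASSIGNMENT = assign_cpts(CLIQUES, CPT_SCOPES)
```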

Variable elimination in a clique tree 2

[Figure: clique tree C1: CD, C2: DIG, C3: GSI, C4: GJSL, C5: HGJ]

- VE in a clique tree to compute $P(X_i)$
  - Pick a root (any node containing $X_i$)
  - Send messages recursively from leaves to root
    - Multiply incoming messages with the initial potential
    - Marginalize vars that are not in the separator
  - A clique is ready once it has received messages from all neighbors

Belief from messages
- Theorem: When clique $C_i$ is ready
  - (it has received messages from all neighbors)
  - Belief $\pi_i(C_i)$ is the product of the initial factor with the messages:
$$\pi_i(C_i) = \pi^0_i(C_i) \prod_{k \in N(i)} \delta_{k \to i}(S_{ki})$$
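The upward pass can be written as a short recursion over the tree. To keep the numbers small, this sketch switches to a hypothetical chain BN A → B → C → D with made-up CPTs and the clique tree AB - BC - CD. Factors are (variables, table) pairs over binary variables. `multiply`, `marginalize`, `message` and `belief` are illustrative helpers, not a library API. The root's belief is compared with brute-force marginalization of the joint.

```python
import math
from itertools import product

# A factor is (vars, table): a tuple of variable names and a dict mapping each
# tuple of 0/1 values (in the order of vars) to a non-negative number.

def multiply(f, g):
    """Pointwise product of two factors over binary variables."""
    fv, ft = f
    gv, gt = g
    vs = tuple(dict.fromkeys(fv + gv))  # union of the scopes, first-seen order
    table = {}
    for a in product((0, 1), repeat=len(vs)):
        x = dict(zip(vs, a))
        table[a] = ft[tuple(x[v] for v in fv)] * gt[tuple(x[v] for v in gv)]
    return vs, table

def marginalize(f, keep):
    """Sum out every variable of f that is not in the set `keep`."""
    fv, ft = f
    vs = tuple(v for v in fv if v in keep)
    table = {}
    for a, val in ft.items():
        key = tuple(x for v, x in zip(fv, a) if v in keep)
        table[key] = table.get(key, 0.0) + val
    return vs, table

def close(f, g):
    """True if two factors agree up to float error, whatever their variable order."""
    fv, ft = f
    gv, gt = g
    if set(fv) != set(gv):
        return False
    for a, val in ft.items():
        x = dict(zip(fv, a))
        if not math.isclose(val, gt[tuple(x[v] for v in gv)], rel_tol=1e-9, abs_tol=1e-12):
            return False
    return True

# Hypothetical BN A -> B -> C -> D with made-up CPTs.
P_A = (("A",), {(0,): 0.4, (1,): 0.6})
P_B_A = (("A", "B"), {(0, 0): 0.7, (0, 1): 0.3, (1, 0): 0.2, (1, 1): 0.8})
P_C_B = (("B", "C"), {(0, 0): 0.9, (0, 1): 0.1, (1, 0): 0.5, (1, 1): 0.5})
P_D_C = (("C", "D"), {(0, 0): 0.6, (0, 1): 0.4, (1, 0): 0.3, (1, 1): 0.7})
JOINT = multiply(multiply(multiply(P_A, P_B_A), P_C_B), P_D_C)  # for checking only

# Clique tree AB - BC - CD; each CPT is assigned to one clique.
CLIQUES = {1: {"A", "B"}, 2: {"B", "C"}, 3: {"C", "D"}}
EDGES = [(1, 2), (2, 3)]
POTS = {1: multiply(P_A, P_B_A), 2: P_C_B, 3: P_D_C}  # initial potentials pi^0
NBRS = {i: set() for i in CLIQUES}
for i, j in EDGES:
    NBRS[i].add(j)
    NBRS[j].add(i)

def message(i, j):
    """delta_{i->j}: pi^0_i times messages from i's other neighbors, summed down to S_ij."""
    f = POTS[i]
    for k in NBRS[i] - {j}:
        f = multiply(f, message(k, i))  # recursion bottoms out at leaves
    return marginalize(f, CLIQUES[i] & CLIQUES[j])

def belief(root):
    """Root belief: pi^0_root times the messages from all of its neighbors."""
    f = POTS[root]
    for k in NBRS[root]:
        f = multiply(f, message(k, root))
    return f

P_D = marginalize(belief(3), {"D"})  # query P(D) using root C3 = {C, D}
```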

Choice of root
- The message does not depend on the root!!!

[Figure: the same clique tree with root at node 5 and with root at node 3]

- "Cache" computation: obtain beliefs for all roots in linear time!!

Shafer-Shenoy algorithm (a.k.a. VE in a clique tree for all roots)
- Clique $C_i$ is ready to transmit to neighbor $C_j$ once it has received messages from all neighbors but $j$
  - Leaves are always ready to transmit
- While some $C_i$ is ready to transmit to $C_j$:
  - Send message $\delta_{i \to j}$
- Complexity: linear in # cliques
  - One message sent in each direction on each edge
- Corollary: At convergence
  - Every clique has the correct belief

[Figure: clique tree with cliques C1 to C7]
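The Shafer-Shenoy schedule can be sketched on the same hypothetical chain BN (A → B → C → D, clique tree AB - BC - CD). The factor helpers are repeated so the block runs on its own. `shafer_shenoy` is an illustrative name. It repeatedly picks any ready directed edge, sends that message, and stops after exactly one message per direction per edge.

```python
import math
from itertools import product

def multiply(f, g):
    """Pointwise product of two (vars, table) factors over binary variables."""
    fv, ft = f
    gv, gt = g
    vs = tuple(dict.fromkeys(fv + gv))
    table = {}
    for a in product((0, 1), repeat=len(vs)):
        x = dict(zip(vs, a))
        table[a] = ft[tuple(x[v] for v in fv)] * gt[tuple(x[v] for v in gv)]
    return vs, table

def marginalize(f, keep):
    """Sum out every variable of f that is not in `keep`."""
    fv, ft = f
    vs = tuple(v for v in fv if v in keep)
    table = {}
    for a, val in ft.items():
        key = tuple(x for v, x in zip(fv, a) if v in keep)
        table[key] = table.get(key, 0.0) + val
    return vs, table

def close(f, g):
    """Factor equality up to float error, independent of variable order."""
    fv, ft = f
    gv, gt = g
    return set(fv) == set(gv) and all(
        math.isclose(val, gt[tuple(dict(zip(fv, a))[v] for v in gv)], rel_tol=1e-9, abs_tol=1e-12)
        for a, val in ft.items())

# Hypothetical BN A -> B -> C -> D with made-up CPTs.
P_A = (("A",), {(0,): 0.4, (1,): 0.6})
P_B_A = (("A", "B"), {(0, 0): 0.7, (0, 1): 0.3, (1, 0): 0.2, (1, 1): 0.8})
P_C_B = (("B", "C"), {(0, 0): 0.9, (0, 1): 0.1, (1, 0): 0.5, (1, 1): 0.5})
P_D_C = (("C", "D"), {(0, 0): 0.6, (0, 1): 0.4, (1, 0): 0.3, (1, 1): 0.7})
JOINT = multiply(multiply(multiply(P_A, P_B_A), P_C_B), P_D_C)  # for checking only

CLIQUES = {1: {"A", "B"}, 2: {"B", "C"}, 3: {"C", "D"}}
EDGES = [(1, 2), (2, 3)]
POTS = {1: multiply(P_A, P_B_A), 2: P_C_B, 3: P_D_C}
NBRS = {i: set() for i in CLIQUES}
for i, j in EDGES:
    NBRS[i].add(j)
    NBRS[j].add(i)

def shafer_shenoy(cliques, nbrs, pots):
    """Send every message once in each direction, in any order that respects readiness."""
    msgs = {}
    pending = [(i, j) for i in cliques for j in nbrs[i]]

    def ready(edge):
        i, j = edge
        # C_i may send to C_j once it has heard from all neighbors except C_j.
        return all((k, i) in msgs for k in nbrs[i] - {j})

    while pending:
        i, j = next(e for e in pending if ready(e))  # a tree always has a ready edge
        pending.remove((i, j))
        f = pots[i]
        for k in nbrs[i] - {j}:
            f = multiply(f, msgs[(k, i)])
        msgs[(i, j)] = marginalize(f, cliques[i] & cliques[j])
    beliefs = {}
    for i in cliques:
        f = pots[i]
        for k in nbrs[i]:
            f = multiply(f, msgs[(k, i)])
        beliefs[i] = f
    return beliefs, msgs

BELIEFS, MSGS = shafer_shenoy(CLIQUES, NBRS, POTS)
```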

Calibrated clique tree
- Initially, neighboring nodes don't agree on the "distribution" over separators
- Calibrated clique tree:
  - At convergence, the tree is calibrated
  - Neighboring nodes agree on the distribution over each separator:
$$\sum_{C_i \setminus S_{ij}} \pi_i(C_i) = \sum_{C_j \setminus S_{ij}} \pi_j(C_j)$$

Answering queries with clique trees
- Query within a clique
- Incremental updates: observing evidence $Z = z$
  - Multiply some clique containing $Z$ by the indicator $\mathbf{1}(Z = z)$
- Query outside a clique
  - Use variable elimination!
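Calibration and evidence handling can both be checked on the hypothetical chain BN used above (A → B → C → D, clique tree AB - BC - CD). This sketch multiplies clique CD by the indicator $\mathbf{1}(D = 1)$, reruns the recursive message computation, and reads $P(A \mid D = 1)$ off clique AB after normalizing. It also tests that neighboring beliefs agree on their separators, while the initial potentials do not. The helper names are illustrative.

```python
import math
from itertools import product

def multiply(f, g):
    """Pointwise product of two (vars, table) factors over binary variables."""
    fv, ft = f
    gv, gt = g
    vs = tuple(dict.fromkeys(fv + gv))
    table = {}
    for a in product((0, 1), repeat=len(vs)):
        x = dict(zip(vs, a))
        table[a] = ft[tuple(x[v] for v in fv)] * gt[tuple(x[v] for v in gv)]
    return vs, table

def marginalize(f, keep):
    """Sum out every variable of f that is not in `keep`."""
    fv, ft = f
    vs = tuple(v for v in fv if v in keep)
    table = {}
    for a, val in ft.items():
        key = tuple(x for v, x in zip(fv, a) if v in keep)
        table[key] = table.get(key, 0.0) + val
    return vs, table

def close(f, g):
    """Factor equality up to float error, independent of variable order."""
    fv, ft = f
    gv, gt = g
    return set(fv) == set(gv) and all(
        math.isclose(val, gt[tuple(dict(zip(fv, a))[v] for v in gv)], rel_tol=1e-9, abs_tol=1e-12)
        for a, val in ft.items())

# Hypothetical BN A -> B -> C -> D with made-up CPTs.
P_A = (("A",), {(0,): 0.4, (1,): 0.6})
P_B_A = (("A", "B"), {(0, 0): 0.7, (0, 1): 0.3, (1, 0): 0.2, (1, 1): 0.8})
P_C_B = (("B", "C"), {(0, 0): 0.9, (0, 1): 0.1, (1, 0): 0.5, (1, 1): 0.5})
P_D_C = (("C", "D"), {(0, 0): 0.6, (0, 1): 0.4, (1, 0): 0.3, (1, 1): 0.7})
JOINT = multiply(multiply(multiply(P_A, P_B_A), P_C_B), P_D_C)  # for checking only

CLIQUES = {1: {"A", "B"}, 2: {"B", "C"}, 3: {"C", "D"}}
EDGES = [(1, 2), (2, 3)]
POTS = {1: multiply(P_A, P_B_A), 2: P_C_B, 3: P_D_C}
NBRS = {1: {2}, 2: {1, 3}, 3: {2}}

def message(pots, i, j):
    f = pots[i]
    for k in NBRS[i] - {j}:
        f = multiply(f, message(pots, k, i))
    return marginalize(f, CLIQUES[i] & CLIQUES[j])

def belief(pots, i):
    f = pots[i]
    for k in NBRS[i]:
        f = multiply(f, message(pots, k, i))
    return f

def indicator(var, value):
    """The factor 1(var = value)."""
    return (var,), {(0,): float(value == 0), (1,): float(value == 1)}

def normalize(f):
    vs, t = f
    z = sum(t.values())
    return vs, {a: v / z for a, v in t.items()}

def calibrated(pots):
    """Do neighboring beliefs agree on their separator marginals?"""
    for i, j in EDGES:
        s = CLIQUES[i] & CLIQUES[j]
        if not close(marginalize(belief(pots, i), s), marginalize(belief(pots, j), s)):
            return False
    return True

# Observe D = 1: multiply a clique that contains D (here C3 = {C, D}) by the indicator.
EV_POTS = dict(POTS)
EV_POTS[3] = multiply(POTS[3], indicator("D", 1))
P_A_GIVEN_D = normalize(marginalize(belief(EV_POTS, 1), {"A"}))
```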

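Message passing with division

[Figure: clique tree C1: CD, C2: DIG, C3: GSI, C4: GJSL, C5: HGJ]

- Computing messages by multiplication:
$$\delta_{i \to j}(S_{ij}) = \sum_{C_i \setminus S_{ij}} \pi^0_i(C_i) \prod_{k \in N(i) \setminus \{j\}} \delta_{k \to i}(S_{ki})$$
- Computing messages by division:
$$\delta_{i \to j}(S_{ij}) = \frac{\sum_{C_i \setminus S_{ij}} \pi_i(C_i)}{\delta_{j \to i}(S_{ij})}$$

Lauritzen-Spiegelhalter algorithm (a.k.a. belief propagation)
Simplified description; see the reading for details
- Initialize all separator potentials to 1: $\mu_{ij} \leftarrow 1$
- All messages are ready to transmit
- While some $\delta_{i \to j}$ is ready to transmit:
  - $\mu'_{ij} \leftarrow \sum_{C_i \setminus S_{ij}} \pi_i$
  - If $\mu'_{ij} \neq \mu_{ij}$:
    - $\delta_{i \to j} \leftarrow \mu'_{ij} / \mu_{ij}$
    - $\pi_j \leftarrow \pi_j \times \delta_{i \to j}$
    - $\mu_{ij} \leftarrow \mu'_{ij}$
    - For all neighbors $k$ of $j$ with $k \neq i$, $\delta_{j \to k}$ becomes ready to transmit
- Complexity: linear in # cliques
  - for the "right" schedule over edges (leaves to root, then root to leaves)
- Corollary: At convergence, every clique has the correct belief

[Figure: clique tree with cliques C1 to C7]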
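Here is a queue-driven sketch of the simplified Lauritzen-Spiegelhalter loop above, on the same hypothetical chain BN (A → B → C → D, clique tree AB - BC - CD). Separator potentials start at 1 and every directed edge starts ready. A clique re-triggers its other neighbors only when its separator marginal actually changed; comparing up to floating-point tolerance is our implementation choice. Division uses the 0/0 = 0 convention. `lauritzen_spiegelhalter` is an illustrative name.

```python
import math
from collections import deque
from itertools import product

def multiply(f, g):
    """Pointwise product of two (vars, table) factors over binary variables."""
    fv, ft = f
    gv, gt = g
    vs = tuple(dict.fromkeys(fv + gv))
    table = {}
    for a in product((0, 1), repeat=len(vs)):
        x = dict(zip(vs, a))
        table[a] = ft[tuple(x[v] for v in fv)] * gt[tuple(x[v] for v in gv)]
    return vs, table

def marginalize(f, keep):
    """Sum out every variable of f that is not in `keep`."""
    fv, ft = f
    vs = tuple(v for v in fv if v in keep)
    table = {}
    for a, val in ft.items():
        key = tuple(x for v, x in zip(fv, a) if v in keep)
        table[key] = table.get(key, 0.0) + val
    return vs, table

def close(f, g):
    """Factor equality up to float error, independent of variable order."""
    fv, ft = f
    gv, gt = g
    return set(fv) == set(gv) and all(
        math.isclose(val, gt[tuple(dict(zip(fv, a))[v] for v in gv)], rel_tol=1e-9, abs_tol=1e-12)
        for a, val in ft.items())

def invert(f):
    """Pointwise reciprocal, with the 0/0 = 0 convention."""
    vs, t = f
    return vs, {a: (1.0 / v if v != 0 else 0.0) for a, v in t.items()}

def ones(scope):
    vs = tuple(sorted(scope))
    return vs, {a: 1.0 for a in product((0, 1), repeat=len(vs))}

# Hypothetical BN A -> B -> C -> D with made-up CPTs.
P_A = (("A",), {(0,): 0.4, (1,): 0.6})
P_B_A = (("A", "B"), {(0, 0): 0.7, (0, 1): 0.3, (1, 0): 0.2, (1, 1): 0.8})
P_C_B = (("B", "C"), {(0, 0): 0.9, (0, 1): 0.1, (1, 0): 0.5, (1, 1): 0.5})
P_D_C = (("C", "D"), {(0, 0): 0.6, (0, 1): 0.4, (1, 0): 0.3, (1, 1): 0.7})
JOINT = multiply(multiply(multiply(P_A, P_B_A), P_C_B), P_D_C)  # for checking only

CLIQUES = {1: {"A", "B"}, 2: {"B", "C"}, 3: {"C", "D"}}
EDGES = [(1, 2), (2, 3)]
NBRS = {1: {2}, 2: {1, 3}, 3: {2}}
POTS = {1: multiply(P_A, P_B_A), 2: P_C_B, 3: P_D_C}

def lauritzen_spiegelhalter(cliques, edges, nbrs, pots):
    pi = dict(pots)
    mu = {frozenset(e): ones(cliques[e[0]] & cliques[e[1]]) for e in edges}  # mu_ij <- 1
    queue = deque((i, j) for i in cliques for j in nbrs[i])  # all messages start ready
    while queue:
        i, j = queue.popleft()
        key = frozenset((i, j))
        new_mu = marginalize(pi[i], cliques[i] & cliques[j])  # mu'_ij
        if not close(new_mu, mu[key]):
            delta = multiply(new_mu, invert(mu[key]))  # delta_{i->j} = mu'_ij / mu_ij
            pi[j] = multiply(pi[j], delta)
            mu[key] = new_mu
            queue.extend((j, k) for k in nbrs[j] - {i})
    return pi, mu

PI, MU = lauritzen_spiegelhalter(CLIQUES, EDGES, NBRS, POTS)
```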

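VE versus BP in clique trees
- VE messages (the ones that multiply):
$$\delta_{i \to j}(S_{ij}) = \sum_{C_i \setminus S_{ij}} \pi^0_i(C_i) \prod_{k \in N(i) \setminus \{j\}} \delta_{k \to i}(S_{ki})$$
- BP messages (the ones that divide):
$$\delta_{i \to j}(S_{ij}) = \frac{\sum_{C_i \setminus S_{ij}} \pi_i(C_i)}{\mu_{ij}(S_{ij})}$$

Clique tree invariant
- Clique tree potential: product of clique potentials divided by separator potentials:
$$\pi_T(\mathbf{X}) = \frac{\prod_i \pi_i(C_i)}{\prod_{(i,j) \in T} \mu_{ij}(S_{ij})}$$
- Clique tree invariant: $P(\mathbf{X}) = \pi_T(\mathbf{X})$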
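The invariant can be checked numerically on the hypothetical chain BN A → B → C → D with clique tree AB - BC - CD. The check runs twice: with the initial potentials and separators set to 1, and again with calibrated clique and separator marginals. In both cases $\pi_T(\mathbf{x})$ should equal $P(\mathbf{x})$ at every assignment. To keep the sketch short, it computes the calibrated marginals by brute force rather than by message passing. `tree_potential` is an illustrative name.

```python
import math
from itertools import product

def multiply(f, g):
    """Pointwise product of two (vars, table) factors over binary variables."""
    fv, ft = f
    gv, gt = g
    vs = tuple(dict.fromkeys(fv + gv))
    table = {}
    for a in product((0, 1), repeat=len(vs)):
        x = dict(zip(vs, a))
        table[a] = ft[tuple(x[v] for v in fv)] * gt[tuple(x[v] for v in gv)]
    return vs, table

def marginalize(f, keep):
    """Sum out every variable of f that is not in `keep`."""
    fv, ft = f
    vs = tuple(v for v in fv if v in keep)
    table = {}
    for a, val in ft.items():
        key = tuple(x for v, x in zip(fv, a) if v in keep)
        table[key] = table.get(key, 0.0) + val
    return vs, table

def value(f, x):
    """Evaluate factor f at a full assignment x (dict var -> 0/1)."""
    vs, t = f
    return t[tuple(x[v] for v in vs)]

def ones(scope):
    """All-ones factor over `scope` (the initial separator potential)."""
    vs = tuple(sorted(scope))
    return vs, {a: 1.0 for a in product((0, 1), repeat=len(vs))}

def tree_potential(x, clique_pots, sep_pots):
    """pi_T(x): product of clique potentials over product of separator potentials."""
    num = math.prod(value(f, x) for f in clique_pots.values())
    den = math.prod(value(f, x) for f in sep_pots.values())
    return num / den

# Hypothetical BN A -> B -> C -> D with made-up CPTs.
P_A = (("A",), {(0,): 0.4, (1,): 0.6})
P_B_A = (("A", "B"), {(0, 0): 0.7, (0, 1): 0.3, (1, 0): 0.2, (1, 1): 0.8})
P_C_B = (("B", "C"), {(0, 0): 0.9, (0, 1): 0.1, (1, 0): 0.5, (1, 1): 0.5})
P_D_C = (("C", "D"), {(0, 0): 0.6, (0, 1): 0.4, (1, 0): 0.3, (1, 1): 0.7})
JOINT = multiply(multiply(multiply(P_A, P_B_A), P_C_B), P_D_C)

CLIQUES = {1: {"A", "B"}, 2: {"B", "C"}, 3: {"C", "D"}}
EDGES = [(1, 2), (2, 3)]
POTS = {1: multiply(P_A, P_B_A), 2: P_C_B, 3: P_D_C}

ASSIGNMENTS = [dict(zip("ABCD", a)) for a in product((0, 1), repeat=4)]
INIT_SEPS = {e: ones(CLIQUES[e[0]] & CLIQUES[e[1]]) for e in EDGES}  # before BP
CAL_CLIQUES = {i: marginalize(JOINT, CLIQUES[i]) for i in CLIQUES}   # after calibration
CAL_SEPS = {e: marginalize(JOINT, CLIQUES[e[0]] & CLIQUES[e[1]]) for e in EDGES}
```

For this chain the calibrated form reads $P(A,B)\,P(B,C)\,P(C,D) / (P(B)\,P(C))$. That ratio equals $P(A,B)\,P(C \mid B)\,P(D \mid C)$, which is the joint.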

Belief propagation and the clique tree invariant
- Theorem: The invariant is maintained by the BP algorithm!
- BP reparameterizes clique potentials and separator potentials
  - At convergence, potentials and messages are marginal distributions

Subtree correctness
- Informed message from $i$ to $j$: all messages into $i$ (other than from $j$) are informed
  - Recursive definition (leaves always send informed messages)
- Informed subtree:
  - All incoming messages are informed
- Theorem:
  - The potential of a connected informed subtree $T'$ is the marginal over $\mathrm{scope}[T']$
- Corollary:
  - At convergence, the clique tree is calibrated
    - $\pi_i = P(\mathrm{scope}[\pi_i])$
    - $\mu_{ij} = P(\mathrm{scope}[\mu_{ij}])$

Clique trees versus VE
- Clique tree advantages
  - Multi-query settings
  - Incremental updates
  - Pre-computation makes complexity explicit
- Clique tree disadvantages
  - Space requirements: no factors are "deleted"
  - Slower for a single query
  - Local structure in factors may be lost when they are multiplied together into the initial clique potential

Clique tree summary
- Solve marginal queries for all variables at only twice the cost of a query for one variable
- Cliques correspond to maximal cliques in the induced graph
- Two message passing approaches
  - VE (the one that multiplies messages)
  - BP (the one that divides by the old message)
- Clique tree invariant
  - The clique tree potential is always the same
  - We are only reparameterizing clique potentials
- Constructing a clique tree for a BN
  - from an elimination order
  - from a triangulated (chordal) graph
- Running time (only) exponential in the size of the largest clique
  - Solve exactly problems with thousands (or millions, or more) of variables, and cliques with tens of nodes (or fewer)

Announcements
- Recitation tomorrow, don't miss it!!!
  - Ajit on Junction Trees

Swinging Couples revisited
- There is no perfect map in BNs
- But an undirected model will be a perfect map

Potentials (or factors) in Swinging Couples

Computing probabilities in Markov networks v. BNs
- In a BN, we can compute the probability of an instantiation by multiplying CPTs
- In a Markov network, we can only compute ratios of probabilities directly
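The ratio claim can be illustrated on the swinging-couples loop. The deck's actual potential values are not reproduced here, so the numbers below are illustrative. The structure is also an assumption: a loop A - B - C - D - A over binary variables. Every probability is the same unnormalized product divided by the same $Z$, so a ratio needs only the two products.

```python
import math

VARS = ("A", "B", "C", "D")
# Illustrative pairwise potentials on the assumed loop A - B - C - D - A;
# POTENTIALS[(u, v)][value of u][value of v].
POTENTIALS = {
    ("A", "B"): [[30, 5], [1, 10]],
    ("B", "C"): [[100, 1], [1, 100]],
    ("C", "D"): [[1, 100], [100, 1]],
    ("D", "A"): [[100, 1], [1, 100]],
}

def unnormalized(x):
    """Product of all potentials at assignment x (dict var -> 0/1)."""
    return math.prod(table[x[u]][x[v]] for (u, v), table in POTENTIALS.items())

def ratio(x, y):
    """P(x) / P(y): the partition function cancels, so no normalization is needed."""
    return unnormalized(x) / unnormalized(y)

X = {"A": 0, "B": 0, "C": 0, "D": 0}
Y = {"A": 1, "B": 0, "C": 0, "D": 0}
print(ratio(X, Y))  # 3000.0
```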

Normalization for computing probabilities
- To compute actual probabilities, we must compute the normalization constant (also called the partition function):
$$Z = \sum_{\mathbf{x}} \prod_{i=1}^{m} \pi_i(\mathbf{d}_i)$$
- Computing the partition function is hard! We must sum over all possible assignments

Factorization in Markov networks
- Given an undirected graph $H$ over variables $\mathbf{X} = \{X_1, \dots, X_n\}$
- A distribution $P$ factorizes over $H$ if there exist
  - subsets of variables $D_1 \subseteq \mathbf{X}, \dots, D_m \subseteq \mathbf{X}$, such that each $D_i$ is fully connected in $H$
  - non-negative potentials (or factors) $\pi_1(D_1), \dots, \pi_m(D_m)$, also known as clique potentials
  - such that
$$P(X_1, \dots, X_n) = \frac{1}{Z} \prod_{i=1}^{m} \pi_i(D_i)$$
- Also called a Markov random field over $H$, or a Gibbs distribution over $H$
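This sketch continues with illustrative swinging-couples potentials, which are hypothetical values and not the deck's. The brute-force partition function makes the cost explicit: it visits all $2^n$ assignments. That is fine for four variables and hopeless for large $n$. The block also checks that each potential's scope is fully connected in the assumed loop $H$, as the factorization definition requires.

```python
import math
from itertools import product

VARS = ("A", "B", "C", "D")
# Illustrative pairwise potentials on the assumed loop A - B - C - D - A.
POTENTIALS = {
    ("A", "B"): [[30, 5], [1, 10]],
    ("B", "C"): [[100, 1], [1, 100]],
    ("C", "D"): [[1, 100], [100, 1]],
    ("D", "A"): [[100, 1], [1, 100]],
}
# Edges of H; every potential's scope D_i must be fully connected (here, an edge).
H_EDGES = {frozenset(e) for e in [("A", "B"), ("B", "C"), ("C", "D"), ("D", "A")]}
assert all(frozenset(scope) in H_EDGES for scope in POTENTIALS)

def unnormalized(x):
    """Product of all potentials at assignment x (dict var -> 0/1)."""
    return math.prod(table[x[u]][x[v]] for (u, v), table in POTENTIALS.items())

def partition_function():
    """Z: sum over all 2^n joint assignments of the product of potentials."""
    return sum(unnormalized(dict(zip(VARS, a))) for a in product((0, 1), repeat=len(VARS)))

Z = partition_function()

def prob(x):
    return unnormalized(x) / Z

print(Z)  # 7201840
```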
