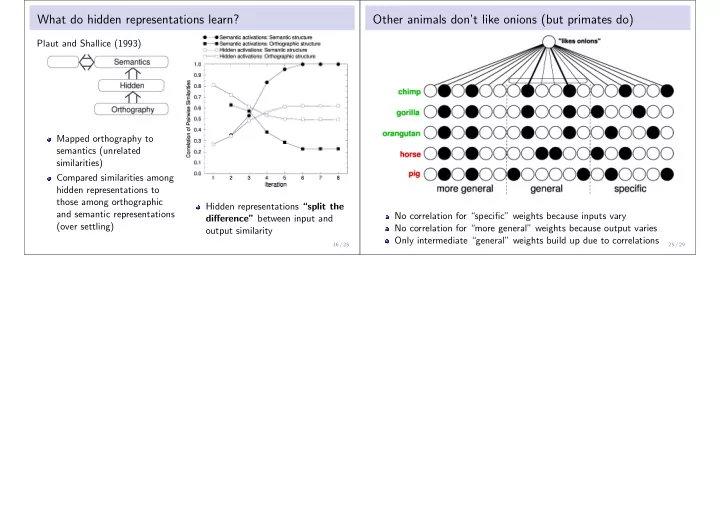

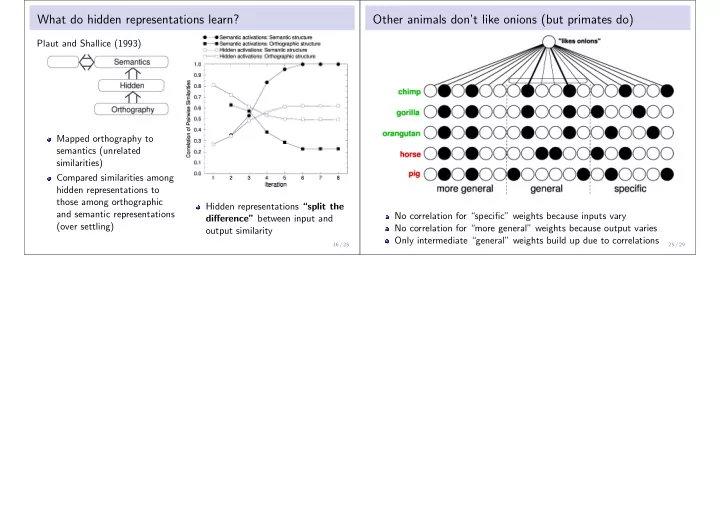

What do hidden representations learn?
• Plaut and Shallice (1993) mapped orthography to semantics (unrelated similarities)
• Compared similarities among hidden representations to those among orthographic and semantic representations (over settling)
• Hidden representations “split the difference” between input and output similarity

Other animals don’t like onions (but primates do)
• No correlation for “specific” weights because inputs vary
• No correlation for “more general” weights because output varies
• Only intermediate “general” weights build up due to correlations
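The similarity comparison described above can be sketched as a second-order (similarity-of-similarities) analysis: compute the correlation between every pair of items within each representation, then correlate those pairwise profiles across representations. This is a toy illustration under stated assumptions, not Plaut and Shallice's procedure: the orthographic and semantic patterns are random vectors (so their similarity structures are unrelated), the hypothetical `hidden` code is simply an equal blend of the two rather than a trained layer, and Pearson correlation is assumed as the similarity measure.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def similarity_profile(patterns):
    """Correlations between every pair of item patterns (upper triangle)."""
    return [
        pearson(patterns[i], patterns[j])
        for i in range(len(patterns))
        for j in range(i + 1, len(patterns))
    ]


def structure_match(a, b):
    """Correlate two similarity profiles: do a and b treat item pairs alike?"""
    return pearson(similarity_profile(a), similarity_profile(b))


rng = random.Random(0)
N_ITEMS, N_UNITS = 30, 50
# Hypothetical codes with unrelated similarity structure.
orth = [[rng.gauss(0, 1) for _ in range(N_UNITS)] for _ in range(N_ITEMS)]
sem = [[rng.gauss(0, 1) for _ in range(N_UNITS)] for _ in range(N_ITEMS)]
# Hypothetical "hidden" code: an equal blend of the input and output codes.
hidden = [[(o + s) / 2 for o, s in zip(ov, sv)] for ov, sv in zip(orth, sem)]

r_orth_sem = structure_match(orth, sem)
r_hidden_orth = structure_match(hidden, orth)
r_hidden_sem = structure_match(hidden, sem)
print(f"orth~sem={r_orth_sem:.2f} hidden~orth={r_hidden_orth:.2f} hidden~sem={r_hidden_sem:.2f}")
```

In this toy case the orthographic and semantic structures are roughly uncorrelated, while the blended code resembles both, which is the sense in which a representation can "split the difference".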

Semantic hierarchy (Rumelhart and Todd, 1993)
Progressive differentiation in development
Progressive deterioration in semantic dementia

Progressive differentiation of concepts
• “Basic” level
• Perceptual to conceptual shift
• Internal representations

Semantic memory
• General conceptual knowledge abstracted from a large number of individual episodes or experiences (Tulving, 1972).
• Mediates among multiple input modalities (vision, touch, hearing) and output modalities (action, speaking, writing).
• Can be selectively impaired by brain damage, usually to the anterior temporal lobes (Warrington, 1975).

Challenges
• Modality-specific effects in semantic priming (and other measures of comprehension)
• Category- and modality-specific semantic deficits
• Modality-specific naming disorders (e.g., optic aphasia)

Challenge to unitary semantics account
Unitary, amodal semantic system: Visual, Verbal, and Tactile Input ⇒ Semantics ⇒ Action (Gesturing) and Phonology (Naming).
• Post-semantic lesion: no basis for sensitivity to input modality.
• Semantic lesion: would impair visual gesturing and non-visual naming.
• Pre-semantic lesion: would impair visual gesturing.
  – But gesturing might be preserved relative to naming due to privileged access (Caramazza et al., 1990).

Optic aphasia
Selective impairment in visual object naming
• Brain damage to left medial occipital lobe (visual cortex and underlying white matter)
• Not visual agnosia: relatively preserved visual gesturing and other tests of visual comprehension
• Not general anomia: relatively preserved naming from other modalities (e.g., touch, spoken definitions)
• Relatively preserved naming of actions associated with visually presented objects (Manning & Campbell, 1996)

% Correct Performance

| Study | Visual Naming | Visual Gesturing | Tactile Naming | Action Naming |
|---|---|---|---|---|
| Lhermitte & Beauvois (1973) | 73 | 100 | 91 | – |
| Teixeira Ferreira et al. (1997) | 53 | 95 | 81 | 75 |
| Manning & Campbell (1996) | 27 | 75 | 90 | 67 |
| Coslett & Saffran (1989) | 0 | 50 | 92 | – |

Analogous selective naming deficits have been observed for tactile input (Beauvois et al., 1978) and for auditory input (Denes & Semenza, 1975).

Alternative view of semantic organization
Multiple modality-specific semantic systems (Beauvois, 1982; Lhermitte & Beauvois, 1973; Shallice, 1987; Warrington, 1975): Visual, Verbal, and Tactile Input ⇒ Visual, Verbal, and Tactile Semantics ⇒ Action (Gesturing) and Phonology (Naming).
• Optic aphasia: disconnection from visual to verbal semantics
Problems
• Unparsimonious and post-hoc
• Poor accounts of acquisition, cross-modal generalization and priming
• No account of relative sparing of visual action naming
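The optic aphasia pattern can be checked directly from the % correct scores reported for the four studies: each spared task should outperform visual object naming. This is only a worked re-expression of those published numbers; the function name `sparing` is hypothetical, and it assumes the two studies with only three scores did not report action naming (marked `None`).

```python
# % correct per study; None marks a value not reported.
# Columns: visual naming, visual gesturing, tactile naming, action naming.
STUDIES = {
    "Lhermitte & Beauvois (1973)": (73, 100, 91, None),
    "Teixeira Ferreira et al. (1997)": (53, 95, 81, 75),
    "Manning & Campbell (1996)": (27, 75, 90, 67),
    "Coslett & Saffran (1989)": (0, 50, 92, None),
}


def sparing(visual_naming, visual_gesturing, tactile_naming, action_naming):
    """Advantage of each task over visual object naming, in percentage points."""
    return {
        "visual gesturing": visual_gesturing - visual_naming,
        "tactile naming": tactile_naming - visual_naming,
        "action naming": None if action_naming is None else action_naming - visual_naming,
    }


for study, scores in STUDIES.items():
    print(study, sparing(*scores))
```

Every study shows a positive gesturing and tactile-naming advantage (e.g., Manning & Campbell: +48 and +63 points), and where action naming was reported it also exceeds visual object naming.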

Current approach
• The semantic system operates according to connectionist/parallel distributed processing (PDP) principles:
  – Processing: Responses are generated by the interactions of large numbers of simple, neuron-like processing units.
  – Representation: Within each modality, similar objects are represented by overlapping distributed patterns of activity.
  – Learning: Knowledge is encoded as weights on connections between units, adjusted gradually based on task performance.
• Semantic representations develop a graded degree of modality-specific specialization in learning to mediate between multiple input and output modalities.
• Graded specialization derives from two factors:
  1. Task systematicity: whether similar inputs map to similar outputs ⇒ naming is an unsystematic task
  2. Topographic bias: learning favors “short” connections (Jacobs & Jordan, 1992) ⇒ mappings rely most on regions of semantics “near” the relevant modalities

Tasks
• Naming objects from vision or touch
• Naming and gesturing the action associated with objects from vision or touch

| Stimulus (Vision or Touch) | Task | Phonology | Action |
|---|---|---|---|
| (bed) | object | “bed” | – |
| (bed) | action | “sleep” | (gesture) |

Network architecture
• Continuous recurrent attractor network
• Two input groups (Vision, Touch) equidistant from two output groups (Action (Gesturing), Phonology (Naming))
• Task units: object vs. action tasks

Training procedure
• Object (N = 100), modality of presentation (Vision vs. Touch), and task (object vs. action) chosen randomly during training
• Activations clamped on appropriate input modality; network settled for 5.0 units of time (τ = 0.2); error injected only over last time unit
• Error derivatives for each weight calculated by back-propagation-through-time adapted for continuous-time networks (Pearlmutter, 1989)
• Weight changes scaled by Gaussian function (SD = 10) of connection length (cf. Jacobs & Jordan, 1992)
• 110,000 total object presentations (≈ 275 per condition); all output activations on correct side of 0.5 for all objects and tasks
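The settling step of the training procedure can be sketched as a discretized continuous-time network: with τ = 0.2, 5.0 units of time correspond to 25 updates, and "error only over the last time unit" means the final 5 updates. The sketch assumes one common discretization in which each unit's net input moves a fraction τ toward its instantaneous value on every tick, with logistic units; the network size, random weights, and the name `settle` are hypothetical. Only the forward dynamics are shown; the back-propagation-through-time gradient is omitted.

```python
import math
import random

TAU = 0.2                          # integration constant (training procedure)
DURATION = 5.0                     # units of time the network settles for
N_TICKS = round(DURATION / TAU)    # 25 discrete updates
ERROR_TICKS = round(1.0 / TAU)     # error only over the last unit of time: 5 ticks

# 100 objects x 2 modalities x 2 tasks = 400 conditions
PER_CONDITION = 110_000 / (100 * 2 * 2)   # presentations per condition


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def settle(weights, bias, clamped):
    """Settle a continuous-time recurrent network (simple Euler-style updates).

    weights[i][j] is the weight from unit i to unit j; clamped maps the
    indices of input units to fixed activations. Returns one activation
    vector per tick.
    """
    n = len(bias)
    net = [0.0] * n
    act = [clamped.get(j, sigmoid(0.0)) for j in range(n)]
    history = []
    for _ in range(N_TICKS):
        # Each unit's net input moves a fraction TAU toward its instantaneous value.
        net = [
            TAU * (bias[j] + sum(act[i] * weights[i][j] for i in range(n)))
            + (1 - TAU) * net[j]
            for j in range(n)
        ]
        # Clamped (input) units keep their fixed activations.
        act = [clamped[j] if j in clamped else sigmoid(net[j]) for j in range(n)]
        history.append(act)
    return history


rng = random.Random(1)
N_UNITS = 6
weights = [[rng.uniform(-1, 1) for _ in range(N_UNITS)] for _ in range(N_UNITS)]
bias = [0.0] * N_UNITS
history = settle(weights, bias, clamped={0: 1.0, 1: 0.0})
error_window = history[-ERROR_TICKS:]   # ticks where error would be injected
```

Keeping the clamped units fixed on every tick mirrors "activations clamped on appropriate input modality", while the unclamped units evolve gradually toward an attractor.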

Semantic similarity
[Figure: semantic activity generated by each object in each input modality; size of white square = unit activity level]

Mean correlations among pairs of semantic representations generated by each object in each input modality (note: relatedness is relative to visual categories). Values are mean (SD).

| Item pairs | Same modality | Different modalities |
|---|---|---|
| Identical | – | .63 (.08) |
| Same category | .36 (.19) | .22 (.16) |
| Unrelated | .03 (.12) | .02 (.11) |

• An object is most similar to itself regardless of modality of presentation (but cross-modal representations are not identical)
• Accounts for reduction in semantic priming with cross-modal presentation

[Figure: weight-change factor (0.0 to 1.0) as a function of X position (1 to 15), for Vision inputs and Touch inputs]

Acquisition
[Figure: percent correct vs. object presentations (× 1000, 0 to 100) for visual gesturing, visual action naming, and visual object naming]

Magnitude of incoming weights to semantics
[Figure: incoming weight magnitude (0.0 to 1.4) as a function of X position (1 to 15), for visual input and tactile input]
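The weight-change factor plotted above comes from the topographic bias in training: each weight change is multiplied by a Gaussian function (SD = 10) of the connection's length. A minimal sketch, assuming an unnormalized Gaussian that equals 1 for a zero-length connection and Euclidean distance between unit positions; the 1-D layout (vision at one end of the semantic layer, touch at the other) and the function names are hypothetical.

```python
import math

SD = 10.0  # SD of the Gaussian over connection length (training procedure)


def weight_change_factor(src, dst, sd=SD):
    """Multiplier on a connection's weight change: 1 at zero length, decaying with length."""
    length = math.dist(src, dst)
    return math.exp(-(length ** 2) / (2 * sd ** 2))


def biased_update(raw_dw, src, dst, sd=SD):
    """Apply the topographic bias to a raw (e.g., back-propagated) weight change."""
    return weight_change_factor(src, dst, sd) * raw_dw


# Hypothetical layout: semantic units at x = 1..15, vision input just left
# of x = 1 and touch input just right of x = 15.
VISION = (0.0, 0.0)
TOUCH = (16.0, 0.0)
for x in range(1, 16, 2):
    unit = (float(x), 0.0)
    print(x, round(weight_change_factor(VISION, unit), 2),
          round(weight_change_factor(TOUCH, unit), 2))
```

Because short connections learn faster, semantic units near an input modality come to depend most on that modality, which is the source of the graded specialization.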

Topographic lesions
• Lesions applied to Vision ⇒ Semantics connections
• Probability of removing each connection is a Gaussian function of the distance of the unit from the lesion location; the SD of the Gaussian controls severity (1.5 below).

Effects of lesion location
[Figure: % correct visual gesturing − % correct visual naming after lesions to Vision ⇒ Semantics connections centered at each location (10 repetitions each; SD = 1.5); white: gesturing > naming; black: naming > gesturing]

Effects of lesion severity
[Figure: percent correct vs. Vision-to-Semantics lesion severity (SD, 0.0 to 4.0) for tactile naming, visual gesturing, visual action naming, and visual object naming; numbers 1 to 4 correspond to the studies in the table below]
• Highly selective impairment of visual object naming relative to visual gesturing and tactile naming
• Relative preservation of visual action naming

% Correct Performance

| Study | Visual Naming | Visual Gesturing | Tactile Naming | Action Naming |
|---|---|---|---|---|
| 1. Lhermitte & Beauvois (1973) | 73 | 100 | 91 | – |
| 2. Teixeira Ferreira et al. (1997) | 53 | 95 | 81 | 75 |
| 3. Manning & Campbell (1996) | 27 | 75 | 90 | 67 |
| 4. Coslett & Saffran (1989) | 0 | 50 | 92 | – |
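The lesioning procedure can be sketched as follows: each Vision ⇒ Semantics connection is removed with a probability that falls off as a Gaussian of the receiving semantic unit's distance from the lesion center, so a larger SD means a more severe lesion. Assumptions, not taken from the slides: the Gaussian is unnormalized (probability 1 at the center), distance is measured from the semantic (receiving) unit, removal sets the weight to 0, and the layer sizes, layout, and the name `topographic_lesion` are hypothetical.

```python
import math
import random


def topographic_lesion(weights, sem_positions, center, severity_sd, rng):
    """Remove Vision -> Semantics connections around a lesion center.

    weights[i][j] is the weight from vision unit i to semantic unit j.
    Each connection is removed (set to 0) with probability
    exp(-d^2 / (2 * severity_sd^2)), where d is the distance of the
    semantic unit from the lesion center. Returns a lesioned copy.
    """
    lesioned = []
    for row in weights:
        new_row = []
        for j, w in enumerate(row):
            d = math.dist(sem_positions[j], center)
            p_remove = math.exp(-(d ** 2) / (2 * severity_sd ** 2))
            new_row.append(0.0 if rng.random() < p_remove else w)
        lesioned.append(new_row)
    return lesioned


# Hypothetical layout: 15 semantic units along a line, 8 vision units.
sem_positions = [(float(x), 0.0) for x in range(1, 16)]
weights = [[1.0] * 15 for _ in range(8)]
rng = random.Random(2)
for sd in (0.5, 1.5, 3.0):
    lesioned = topographic_lesion(weights, sem_positions, (8.0, 0.0), sd, rng)
    removed = sum(w == 0.0 for row in lesioned for w in row)
    print(f"SD={sd}: removed {removed} of {8 * 15} connections")
```

Averaging task performance over several such random lesions at each center (the slides use 10 repetitions) would give the location map, and sweeping the SD gives the severity curves.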
