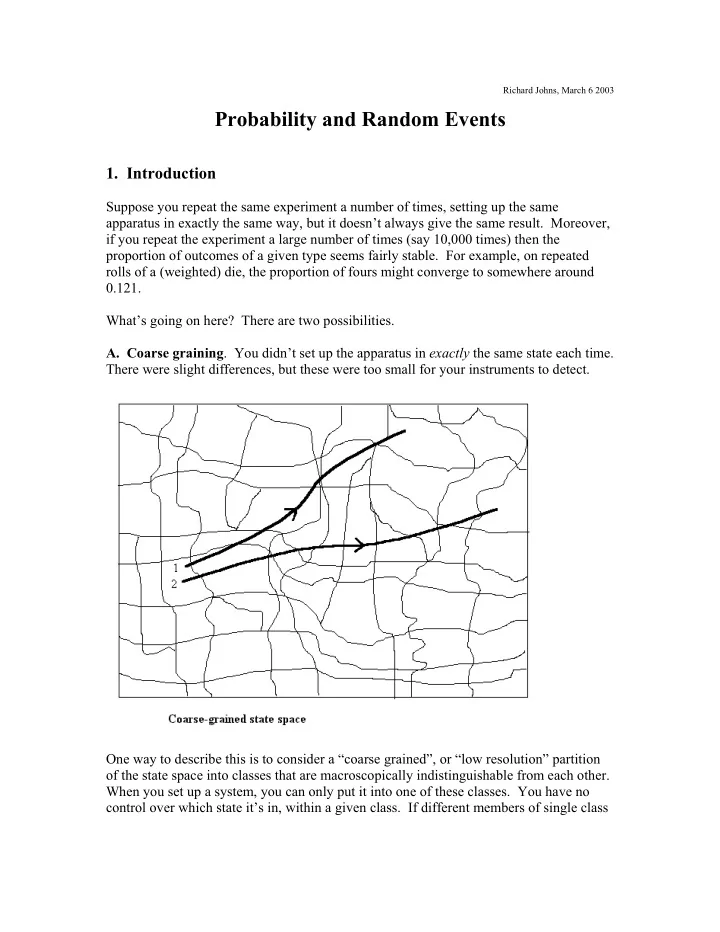

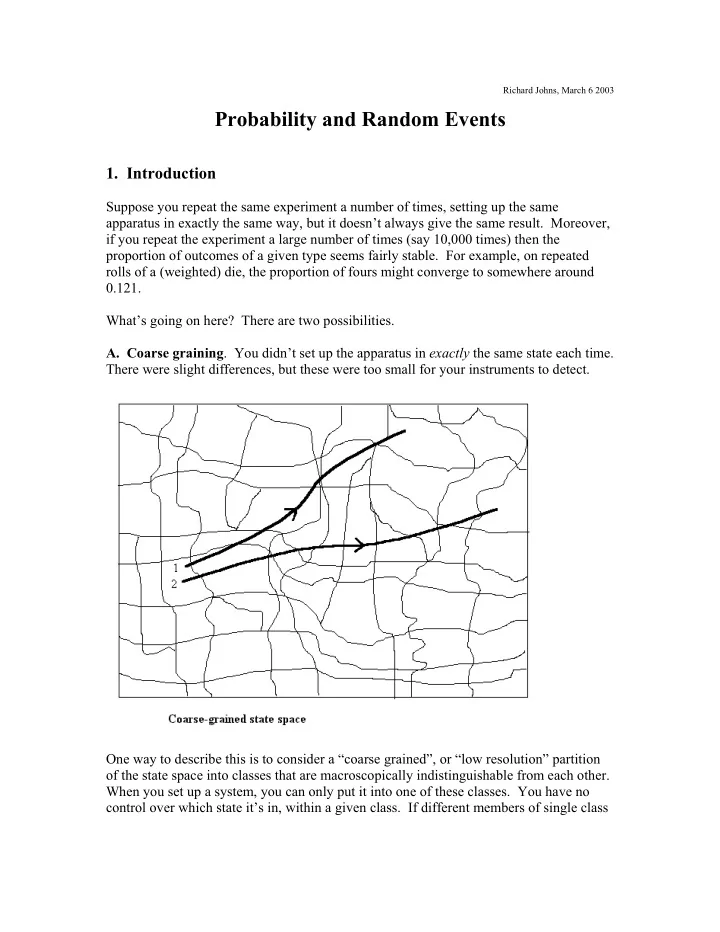

Richard Johns, March 6 2003

Probability and Random Events

1. Introduction

Suppose you repeat the same experiment a number of times, setting up the same apparatus in exactly the same way, but it doesn’t always give the same result. Moreover, if you repeat the experiment a large number of times (say 10,000 times) then the proportion of outcomes of a given type seems fairly stable. For example, on repeated rolls of a (weighted) die, the proportion of fours might converge to somewhere around 0.121. What’s going on here? There are two possibilities.

A. Coarse graining. You didn’t set up the apparatus in exactly the same state each time. There were slight differences, but these were too small for your instruments to detect. One way to describe this is to consider a “coarse grained”, or “low resolution”, partition of the state space into classes whose members are macroscopically indistinguishable from each other. When you set up a system, you can only put it into one of these classes. You have no control over which state it’s in, within a given class. If different members of a single class lead (deterministically) to macroscopically distinct histories, then the system will appear to be random. (Or maybe that’s just what a random system is?) We get probabilities here, but they’re epistemic (knowledge) probabilities. If we had sufficiently precise information, then the probabilities would all be either 0 or 1.

B. True randomness. If the system under consideration is truly random, then it may deliver different outcomes even when started up in exactly the same state each time. In other words, even the complete initial state does not determine the history. This is hard to understand, however. Is it possible? If it is, then how? Does it mean that the history appears from nowhere, without any cause?

2. Logical and Physical Relations

It’s important to realise that the relation of entailment (logical consequence) is quite different from production (cause and effect).

Entailment, or logical consequence, is a relation between statements or propositions. A entails B just in case it’s correct to infer B from knowledge that A. In general, whether or not entailment holds between a pair of statements depends on the background information. For example, if you know that all ravens are black, then “Fred is a raven” entails “Fred is black”. Note that words like “determined”, “necessary”, “inevitable”, and so on all express entailment, with the dynamical laws as background knowledge.

Production (cause and effect). A cause is the source of the effect. The cause brings the effect about, makes it happen.

To see that entailment and production are different, let’s consider some examples. First, we know that with a deterministic system the future determines (entails) the past. But the future does not produce the past; it’s the other way around.

Second, consider a room with two exit doors, A and B. A person inside the room wants to leave, so he goes to the nearest door, A, opens it and leaves. What he didn’t know was that the other door, B, had been secretly locked from the outside. The locking of B determines that he will leave through A, but it doesn’t cause him to leave through A. (In other cases, where the person tries B first, it would be a cause, but not in this case.)
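The first example above, that in a deterministic system the future entails the past without producing it, can be made concrete with a toy system. The sketch below is only an illustration under stated assumptions: the update rule, the constants `M`, `A`, `C`, and the function names are hypothetical, and the rule is chosen to be invertible so that each state has exactly one predecessor.

```python
# Hypothetical toy dynamics (not from the handout): x -> (A * x + C) mod M.
# M is prime, so A has a modular inverse and the rule is invertible.
M = 2**31 - 1
A = 48271
C = 12345
A_INV = pow(A, -1, M)  # modular inverse of A; needs Python 3.8 or later


def step_forward(x):
    """The dynamical law: the present state produces the next state."""
    return (A * x + C) % M


def step_backward(x):
    """An inference, not a process: compute the unique earlier state."""
    return (A_INV * (x - C)) % M


def evolve(x, n, step):
    """Apply `step` to the state x, n times in a row."""
    for _ in range(n):
        x = step(x)
    return x


initial = 20030306
final = evolve(initial, 1000, step_forward)
recovered = evolve(final, 1000, step_backward)
print(recovered == initial)
```

The system itself only ever runs `step_forward`. `step_backward` is something an observer calculates from the final state plus the law, which is the sense in which the later state entails the earlier one without producing it.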

We see that there’s a huge difference between one event producing another, and (a description of) one event entailing (a description of) another. Note that physicists often use the term causality to mean determinism. Since causation and determination are quite distinct, this usage can be confusing.

3. Random Processes

The following definition of a random event is fairly uncontroversial.

Definition. A random event is one that is not determined by any prior cause.

It’s often not noticed that this definition is compatible with two quite different theories of randomness.

Theory 1 (events from nowhere). A random event has no cause; that’s why it’s not determined by any prior cause. A random event “appears from nowhere”, in the sense that it is not produced by anything.

Theory 2 (caused, but not determined). A random event is produced by prior causes, just like a deterministic event. The difference is that a random event is not determined by its causes. Given the cause, the event might not have occurred.

(It’s clear that, if production and entailment are conflated, then Theory 1 is the only option. If determination and causation are the same relation, then an undetermined event must be uncaused.)

Are random events caused?

Argument 1. If a single random event is uncaused, then so is a large ensemble of random events, each occurring within the same apparatus set up the same way. (If each part of the ensemble is uncaused, then so is the whole.) But, across the whole ensemble, there may be a very reliable proportion of experiments that deliver a given outcome. For example, it may be that around 12% of experiments have the outcome B. No matter when or where the experiments are performed, the same proportion 12% is measured, give or take a little bit. Surely this value 12% is caused by the apparatus? If not, then where does it come from? But if the ensemble is caused, then so are the individual experiments. (Note that it’s quite consistent to say that individual events are undetermined, but that statistics on large ensembles of such events are more-or-less determined.)

A Variation on Theory 1? A variation of Theory 1 would be that a random event isn’t completely uncaused, but rather “only partially caused”. But what is this supposed to mean? It doesn’t mean that the event E partially occurs.

Argument 2. If Theory 2 is correct, then it’s easy to give a theory of chance, or physical probability. We say that the chance of a random event is the degree to which it is determined by its causes. Note that entailment comes in degrees, as belief is a matter of degree. For Theory 1, no theory of chance is forthcoming. Chance cannot be a degree of determination in that case, as there would be nothing to partially determine the event.

Partial Entailment

Consider the following argument.

    Feike is a Frisian
    70% of Frisians can swim
    --------------------------------
    ∴ Feike can swim

The argument isn’t valid, since the premisses don’t make the conclusion certain, but they do make it probable. The (epistemic) probability of the conclusion, given the premisses, is 0.7. Thus entailment is a matter of degree.

The “Principal Principle”

If you know that the physical chance of an event E is some number p, and you know nothing else relevant to E, then you should believe to the degree p that E occurs. We might say that chances are authoritative. This (apparently trivial) fact about chance is actually crucial. Without it we’d have no way to measure chances empirically. It’s this principle that tells us that improbable events aren’t likely to happen.
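Argument 1 and the Principal Principle both turn on the stability of proportions across large ensembles. A minimal sketch of that stability follows, assuming a chance of 0.12 for outcome B (the 12% figure used above). The function names and the seed are hypothetical. Python’s pseudo-random generator is itself deterministic, so strictly speaking it is an instance of possibility A from the Introduction; it serves here only as a stand-in for a chancy apparatus.

```python
import random

CHANCE_B = 0.12  # assumed chance of outcome B, from the 12% example


def run_ensemble(n_trials, rng):
    """Run n_trials experiments; return the proportion with outcome B."""
    hits = sum(1 for _ in range(n_trials) if rng.random() < CHANCE_B)
    return hits / n_trials


def run_many(n_ensembles, n_trials, seed=0):
    """Repeat the whole ensemble several times with one generator."""
    rng = random.Random(seed)  # hypothetical seed, fixed for repeatability
    return [run_ensemble(n_trials, rng) for _ in range(n_ensembles)]


proportions = run_many(n_ensembles=5, n_trials=10_000)
for p in proportions:
    print(f"{p:.4f}")
```

Each individual trial could go either way, yet every ensemble of 10,000 trials should report a proportion close to 0.12. Reading that proportion back as an estimate of the chance is the empirical use of the Principal Principle.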

The Axioms of Probability

Axiom 1. P(A) ≥ 0.

Axiom 2. If A is inevitable then P(A) = 1.

Axiom 3. If A and B are mutually exclusive then P(A or B) = P(A) + P(B).

Axiom 4. If P(B) ≠ 0, then P(A | B) = P(A & B) / P(B).

Axiom 4 is also known as the Principle of Conditioning. Sometimes it is taken to be the definition of conditional probability, but this is a mistake. It requires demonstration.

Axiom 3 is the most important for our discussion of quantum mechanics. Since probabilities are non-negative real numbers (Axiom 1), it entails that the probability of a disjunction (A or B) is always at least as great as the probability of each disjunct. To see this, note that (A or B) is equivalent to (A or (B & not-A)), whose disjuncts are mutually exclusive, so P(A or B) = P(A) + P(B & not-A) ≥ P(A).

EPR and Common Causes

In the EPR experiment it appears that we have two separate measurements, each with a random outcome. Neither outcome has any influence over the other. Yet the outcomes are correlated, so that if one is UP then the other is DOWN. To explain such a correlation we would like to appeal to a common cause, i.e. their common source. But we’ve seen that the outcomes are not determined by any common cause.

This is not a problem for the theory of random events given here. We can say that the events are produced by a common cause, but this cause doesn’t determine each outcome. It merely determines that the outcomes will be opposite.
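The axioms can be used to state this last point numerically. The sketch below assumes, purely for illustration, that the two anticorrelated cases are equally likely; that 50/50 split, the table `JOINT`, and the function names are not taken from the text above. It applies additivity (Axiom 3) to get the chance of each single outcome and conditioning (Axiom 4) to get the chance of one outcome given the other. It is bookkeeping over a joint distribution, not a model of how the source produces the outcomes.

```python
from fractions import Fraction

# Joint chances for the pair (left outcome, right outcome).
# Assumption: the two opposite pairs are equally likely.
JOINT = {
    ("UP", "DOWN"): Fraction(1, 2),
    ("DOWN", "UP"): Fraction(1, 2),
    ("UP", "UP"): Fraction(0),
    ("DOWN", "DOWN"): Fraction(0),
}


def prob(event):
    """P(event): add the chances of the exclusive pairs satisfying it."""
    return sum((p for pair, p in JOINT.items() if event(pair)), Fraction(0))


def cond_prob(event_a, event_b):
    """P(A | B) = P(A & B) / P(B), defined only when P(B) is not zero."""
    p_b = prob(event_b)
    if p_b == 0:
        raise ValueError("P(B) is zero, so P(A | B) is undefined")
    return prob(lambda pair: event_a(pair) and event_b(pair)) / p_b


def left_up(pair):
    return pair[0] == "UP"


def right_down(pair):
    return pair[1] == "DOWN"


def opposite(pair):
    return pair[0] != pair[1]


p_left_up = prob(left_up)
p_right_down = prob(right_down)
p_opposite = prob(opposite)
p_right_down_given_left_up = cond_prob(right_down, left_up)
p_either = prob(lambda pair: left_up(pair) or right_down(pair))

print(p_left_up, p_right_down, p_opposite, p_right_down_given_left_up)
```

Under these assumed numbers each single outcome has chance 1/2, so neither is determined, while the chance that the outcomes are opposite is 1, and so is the chance of DOWN on the right given UP on the left. The value `p_either` also illustrates that a disjunction is at least as probable as each of its disjuncts.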
