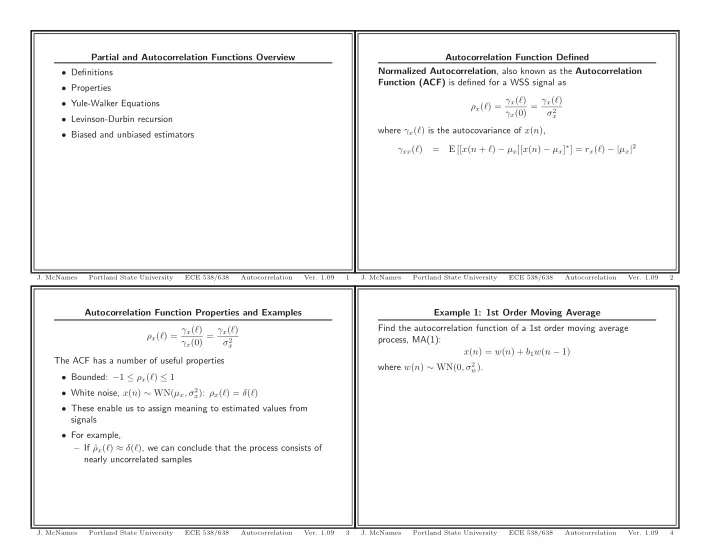

Partial and Autocorrelation Functions Overview

• Definitions
• Properties
• Yule-Walker Equations
• Levinson-Durbin recursion
• Biased and unbiased estimators

J. McNames, Portland State University, ECE 538/638, Autocorrelation, Ver. 1.09

Autocorrelation Function Defined

Normalized autocorrelation, also known as the Autocorrelation Function (ACF), is defined for a WSS signal as

$$\rho_x(\ell) = \frac{\gamma_x(\ell)}{\gamma_x(0)} = \frac{\gamma_x(\ell)}{\sigma_x^2}$$

where $\gamma_x(\ell)$ is the autocovariance of $x(n)$,

$$\gamma_x(\ell) = E\big[[x(n+\ell)-\mu_x][x(n)-\mu_x]^*\big] = r_x(\ell) - |\mu_x|^2$$

Autocorrelation Function Properties and Examples

$$\rho_x(\ell) = \frac{\gamma_x(\ell)}{\gamma_x(0)} = \frac{\gamma_x(\ell)}{\sigma_x^2}$$

The ACF has a number of useful properties

• Bounded: $-1 \le \rho_x(\ell) \le 1$
• White noise, $x(n) \sim \mathrm{WN}(\mu_x, \sigma_x^2)$: $\rho_x(\ell) = \delta(\ell)$
• These enable us to assign meaning to estimated values from signals
• For example,
  – If $\hat{\rho}_x(\ell) \approx \delta(\ell)$, we can conclude that the process consists of nearly uncorrelated samples

Example 1: 1st Order Moving Average

Find the autocorrelation function of a 1st order moving average process, MA(1):

$$x(n) = w(n) + b_1 w(n-1)$$

where $w(n) \sim \mathrm{WN}(0, \sigma_w^2)$.
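One way to check an answer to Example 1 numerically is sketched below. It assumes a real-valued $b_1$, for which the definitions above give $\gamma_x(0) = \sigma_w^2(1 + b_1^2)$ and $\gamma_x(\pm 1) = b_1\sigma_w^2$, so $\rho_x(\pm 1) = b_1/(1 + b_1^2)$ and $\rho_x(\ell) = 0$ for $|\ell| > 1$. The function names `ma1_acf` and `sample_acf`, the value $b_1 = 0.5$, and the use of Gaussian white noise are illustrative assumptions, not part of the slides.

```python
import numpy as np

def ma1_acf(b1, max_lag):
    """Theoretical ACF of x(n) = w(n) + b1*w(n-1), assuming real b1."""
    rho = np.zeros(max_lag + 1)
    rho[0] = 1.0
    if max_lag >= 1:
        rho[1] = b1 / (1.0 + b1**2)
    return rho

def sample_acf(x, max_lag):
    """Sample ACF: lag products summed, divided by N, normalized by lag 0."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    n = len(x)
    gamma = np.array([np.dot(x[lag:], x[:n - lag]) / n
                      for lag in range(max_lag + 1)])
    return gamma / gamma[0]

rng = np.random.default_rng(0)       # fixed seed so the run is repeatable
b1 = 0.5                             # hypothetical MA(1) coefficient
w = rng.standard_normal(100_000)     # white noise with unit variance
x = w[1:] + b1 * w[:-1]              # MA(1) realization

rho_theory = ma1_acf(b1, 4)
rho_hat = sample_acf(x, 4)
print(rho_theory)
print(rho_hat)
```

The estimated values at lags 2 and above should be close to zero, which is the finite-extent behavior expected of a moving average process.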

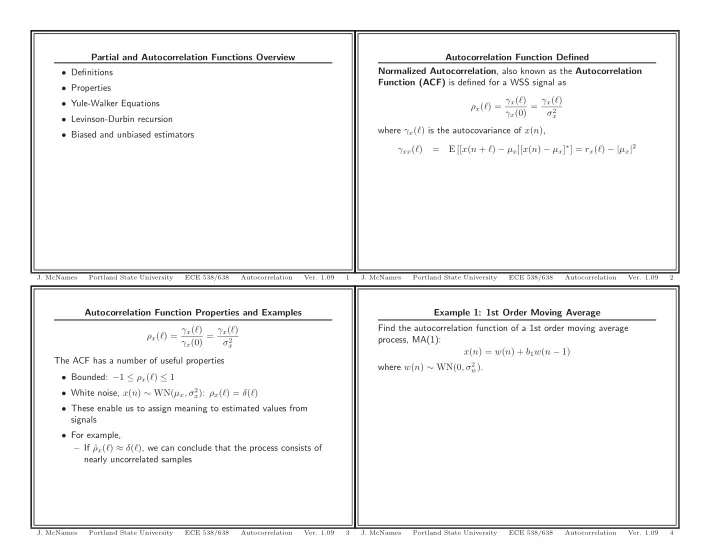

Example 2: 1st Order Autoregressive

Find the autocorrelation function of a 1st order autoregressive process, AR(1):

$$x(n) = -a_1 x(n-1) + w(n)$$

where $w(n) \sim \mathrm{WN}(0, \sigma_w^2)$. Hint:

$$-\alpha^n u(-n-1) \;\overset{Z}{\longleftrightarrow}\; \frac{1}{1 - \alpha z^{-1}}$$

for an ROC of $|z| < |\alpha|$.

Autocorrelation Function Properties

$$\rho_x(\ell) = \frac{\gamma_x(\ell)}{\gamma_x(0)} = \frac{\gamma_x(\ell)}{\sigma_x^2}$$

• In general, the ACF of an AR($P$) process decays as a sum of damped exponentials (infinite extent)
• If the AR($P$) coefficients are known, the ACF can be determined by solving a set of linear equations
• The ACF of an MA($Q$) process is finite: $\rho_x(\ell) = 0$ for $\ell > Q$
• Thus, if the estimated ACF is very small for large lags, an MA($Q$) model may be appropriate
• The ACF of an ARMA($P, Q$) process is also a sum of damped exponentials (infinite extent)
• It is difficult to solve for in general

All-Pole Models

Let us consider a causal AP($P$) model:

$$H(z) = \frac{b_0}{A(z)} = \frac{b_0}{1 + \sum_{k=1}^{P} a_k z^{-k}}$$

• All-pole models are especially important because they can be estimated by solving a set of linear equations
• Partial autocorrelation can also be best understood within the context of all-pole models (my motivation)
• Recall that an AZ($Q$) model can be expressed as an AP($\infty$) model if the AZ($Q$) model is minimum phase
• Since the coefficients at large lags tend to be small, this can often be well approximated by an AP($P$) model

AP Equations

$$H(z) + \sum_{k=1}^{P} a_k H(z) z^{-k} = b_0$$

$$h(n) + \sum_{k=1}^{P} a_k h(n-k) = b_0\,\delta(n)$$

$$\sum_{k=0}^{P} a_k h(n-k)\, h^*(n-\ell) = b_0\, h^*(n-\ell)\,\delta(n)$$

$$\sum_{n=-\infty}^{\infty} \sum_{k=0}^{P} a_k h(n-k)\, h^*(n-\ell) = \sum_{n=-\infty}^{\infty} b_0\, h^*(n-\ell)\,\delta(n)$$

$$\sum_{k=0}^{P} a_k r_h(\ell-k) = b_0\, h^*(-\ell)$$
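The final AP equation, $\sum_{k=0}^{P} a_k r_h(\ell-k) = b_0 h^*(-\ell)$, can be checked numerically. The sketch below assumes a real-valued model, takes $r_h(\ell) = \sum_n h(n)h(n-\ell)$ as the deterministic autocorrelation of the impulse response, and truncates $h(n)$ to 200 samples. The AP(2) coefficients (poles at 0.5 and 0.4) and all function names are hypothetical choices for illustration only.

```python
import numpy as np

def ap_impulse_response(b0, a, n_samples):
    """h(n), n = 0..n_samples-1, for H(z) = b0 / (1 + sum_k a[k-1] z^-k)."""
    h = np.zeros(n_samples)
    for n in range(n_samples):
        acc = b0 if n == 0 else 0.0          # b0 * delta(n)
        for k, ak in enumerate(a, start=1):
            if n - k >= 0:
                acc -= ak * h[n - k]         # causal: h(n) = 0 for n < 0
        h[n] = acc
    return h

def autocorr(h, lag):
    """r_h(lag) = sum_n h(n) h(n - lag) for a real, truncated h."""
    lag = abs(lag)                           # real h: r_h(-l) = r_h(l)
    return float(np.dot(h[lag:], h[:len(h) - lag]))

b0 = 2.0
a = [-0.9, 0.2]                              # hypothetical stable AP(2) model
h = ap_impulse_response(b0, a, 200)
a_full = [1.0] + a                           # a_0 = 1

def lhs(lag):
    """sum_{k=0}^{P} a_k r_h(lag - k)"""
    return sum(ak * autocorr(h, lag - k) for k, ak in enumerate(a_full))

def rhs(lag):
    """b0 * h(-lag); zero for lag > 0 because h is causal."""
    return b0 * (h[-lag] if lag <= 0 else 0.0)

for lag in range(-3, 4):
    print(lag, lhs(lag), rhs(lag))
```

For positive lags the right-hand side vanishes because the model is causal, and at lag 0 it reduces to $b_0^2$.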

AP Equations Continued

Since AP($P$) is causal, $h(0) = b_0$, $h^*(0) = b_0^*$, and

$$\sum_{k=0}^{P} a_k r_h(-k) = |b_0|^2 \qquad \ell = 0$$

$$\sum_{k=0}^{P} a_k r_h(\ell-k) = 0 \qquad \ell > 0$$

This has several important consequences. One is that the autocorrelation can be expressed as a recursive relation for $\ell > 0$, since $a_0 = 1$:

$$\sum_{k=0}^{P} a_k r_h(\ell-k) = 0$$

$$r_h(\ell) = -\sum_{k=1}^{P} a_k r_h(\ell-k) \qquad \ell > 0$$

AP Equations in Matrix Form

We can collect the first $P+1$ of these terms in a matrix

$$\begin{bmatrix} r_h(0) & r_h(-1) & \cdots & r_h(-P) \\ r_h(1) & r_h(0) & \cdots & r_h(-P+1) \\ \vdots & \vdots & \ddots & \vdots \\ r_h(P) & r_h(P-1) & \cdots & r_h(0) \end{bmatrix} \begin{bmatrix} 1 \\ a_1 \\ \vdots \\ a_P \end{bmatrix} = \begin{bmatrix} |b_0|^2 \\ 0 \\ \vdots \\ 0 \end{bmatrix}$$

$$\begin{bmatrix} r_h(0) & r_h^*(1) & \cdots & r_h^*(P) \\ r_h(1) & r_h(0) & \cdots & r_h^*(P-1) \\ \vdots & \vdots & \ddots & \vdots \\ r_h(P) & r_h(P-1) & \cdots & r_h(0) \end{bmatrix} \begin{bmatrix} 1 \\ a_1 \\ \vdots \\ a_P \end{bmatrix} = \begin{bmatrix} |b_0|^2 \\ 0 \\ \vdots \\ 0 \end{bmatrix}$$

• The autocorrelation matrix is Hermitian, Toeplitz, and positive definite.

Solving the AP Equations

If we know the autocorrelation, we can solve these equations for $a$ and $b_0$

$$\begin{bmatrix} r_h(0) & r_h^*(1) & \cdots & r_h^*(P) \\ r_h(1) & r_h(0) & \cdots & r_h^*(P-1) \\ \vdots & \vdots & \ddots & \vdots \\ r_h(P) & r_h(P-1) & \cdots & r_h(0) \end{bmatrix} \begin{bmatrix} 1 \\ a_1 \\ \vdots \\ a_P \end{bmatrix} = \begin{bmatrix} |b_0|^2 \\ 0 \\ \vdots \\ 0 \end{bmatrix}$$

These are called the Yule-Walker equations

Solving for a

$$\begin{bmatrix} r_h(1) & r_h(0) & \cdots & r_h^*(P-1) \\ \vdots & \vdots & \ddots & \vdots \\ r_h(P) & r_h(P-1) & \cdots & r_h(0) \end{bmatrix} \begin{bmatrix} 1 \\ a_1 \\ \vdots \\ a_P \end{bmatrix} = \begin{bmatrix} 0 \\ \vdots \\ 0 \end{bmatrix}$$

$$\begin{bmatrix} r_h(1) \\ \vdots \\ r_h(P) \end{bmatrix} + \begin{bmatrix} r_h(0) & \cdots & r_h^*(P-1) \\ \vdots & \ddots & \vdots \\ r_h(P-1) & \cdots & r_h(0) \end{bmatrix} \begin{bmatrix} a_1 \\ \vdots \\ a_P \end{bmatrix} = \begin{bmatrix} 0 \\ \vdots \\ 0 \end{bmatrix}$$

$$r_h + R_h a = 0$$

$$a = -R_h^{-1} r_h$$
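The solution $a = -R_h^{-1} r_h$ can be sketched numerically as follows. This is a minimal example under stated assumptions: the model is real-valued (so $R_h$ is symmetric Toeplitz), the autocorrelation values are computed from a truncated impulse response, and a general linear solver is used rather than a Toeplitz-specific method. The function name `yule_walker_a` and the AP(2) coefficients are hypothetical.

```python
import numpy as np

def yule_walker_a(r):
    """Solve R a = -r for a_1..a_P given real autocorrelation values r[0..P]."""
    r = np.asarray(r, dtype=float)
    P = len(r) - 1
    # R[i, j] = r(|i - j|): symmetric Toeplitz matrix for a real sequence
    lags = np.abs(np.subtract.outer(np.arange(P), np.arange(P)))
    R = r[lags]
    return np.linalg.solve(R, -r[1:])

# Hypothetical AP(2) model: H(z) = 1 / (1 - 0.9 z^-1 + 0.2 z^-2)
b0, a_true = 1.0, np.array([-0.9, 0.2])
N = 200                                   # truncation length for h(n)
h = np.zeros(N)
h[0] = b0
h[1] = -a_true[0] * h[0]
for n in range(2, N):
    h[n] = -a_true[0] * h[n - 1] - a_true[1] * h[n - 2]

# r_h(l) = sum_n h(n) h(n - l) for l = 0..3
r = np.array([np.dot(h[l:], h[:N - l]) for l in range(4)])

a_hat = yule_walker_a(r[:3])              # uses r_h(0), r_h(1), r_h(2)

# The recursion r_h(l) = -sum_k a_k r_h(l - k) predicts the next lag
r3_pred = -(a_hat[0] * r[2] + a_hat[1] * r[1])
print(a_hat, r3_pred, r[3])
```

Only lags 0 through $P$ enter the solve; the recursion then reproduces lag $P+1$ from the recovered coefficients.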

Solving for $b_0$

$$\begin{bmatrix} r_h(0) & r_h^*(1) & \cdots & r_h^*(P) \\ r_h(1) & r_h(0) & \cdots & r_h^*(P-1) \\ \vdots & \vdots & \ddots & \vdots \\ r_h(P) & r_h(P-1) & \cdots & r_h(0) \end{bmatrix} \begin{bmatrix} 1 \\ a_1 \\ \vdots \\ a_P \end{bmatrix} = \begin{bmatrix} |b_0|^2 \\ 0 \\ \vdots \\ 0 \end{bmatrix}$$

The first row gives

$$\begin{bmatrix} r_h(0) & r_h^*(1) & \cdots & r_h^*(P) \end{bmatrix} \begin{bmatrix} 1 \\ a_1 \\ \vdots \\ a_P \end{bmatrix} = |b_0|^2$$

For a real-valued model, $r_h^*(k) = r_h(k)$, so

$$b_0 = \pm\sqrt{\sum_{k=0}^{P} a_k r_h(k)} = \pm\sqrt{r_h(0) + a^T r_h}$$

Yule-Walker Equation Comments

$$a = -R_h^{-1} r_h \qquad b_0 = \pm\sqrt{r_h(0) + a^T r_h}$$

• The matrix inverse exists because $R_h$ is positive definite unless $h(n) = 0$
• Note that we cannot determine the sign of $b_0 = h(0)$ from $r_h(\ell)$
• Thus, the first $P+1$ terms of the autocorrelation, $r_h(0), \ldots, r_h(P)$, completely determine the model parameters
• A similar relation exists for the first $P+1$ elements of the autocorrelation sequence in terms of the model parameters by solving a set of linear equations (Problem 4.6)
• This is not true for AZ or PZ models

Yule-Walker Equation Comments Continued

$$a = -R_h^{-1} r_h \qquad b_0 = \pm\sqrt{r_h(0) + a^T r_h}$$

• Thus the two are equivalent, reversible, and unique characterizations of the model

$$\{r_h(0), \ldots, r_h(P)\} \leftrightarrow \{b_0, a_1, \ldots, a_P\}$$

• The rest of the sequence can then be determined by symmetry and the recursive relation given earlier

$$r_h(\ell) = -\sum_{k=1}^{P} a_k r_h(\ell-k) \qquad \ell > 0$$

$$r_h(-\ell) = r_h^*(\ell)$$

AR Processes versus AP Models

Concisely, we can write the Yule-Walker Equations as

$$R_h a = -r_h$$

If we have an AR($P$) process, then we know $r_x(\ell) = \sigma_w^2 r_h(\ell)$ and we can equivalently write

$$R_x a = -r_x$$

• Thus, the following two problems are equivalent
  – Find the parameters of an AR process, $\{a_1, \ldots, a_P, \sigma_w^2\}$, given $r_x(\ell)$
  – Find the parameters of an AP model, $\{a_1, \ldots, a_P, b_0\}$, given $r_h(\ell)$
• To accommodate both in a common notation, I will write the Yule-Walker equations as simply

$$Ra = -r$$
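The equivalence between the AP model problem and the AR process problem can be illustrated with a small sketch. It assumes real-valued quantities and uses a first-order case whose autocorrelation is known in closed form: for $h(n) = b_0(-a_1)^n u(n)$, $r_h(\ell) = b_0^2(-a_1)^{|\ell|}/(1 - a_1^2)$. It also assumes the AR process is driven through a unit-gain model ($b_0 = 1$), so that the same formula returns $\sigma_w$ in place of $|b_0|$. The function name `solve_ap_model` and all numeric values are hypothetical.

```python
import numpy as np

def solve_ap_model(r):
    """Return (a, |b0|) from real autocorrelation values r[0..P].

    a solves R a = -r, and |b0| = sqrt(r(0) + a^T r).
    The sign of b0 cannot be recovered from the autocorrelation.
    """
    r = np.asarray(r, dtype=float)
    P = len(r) - 1
    lags = np.abs(np.subtract.outer(np.arange(P), np.arange(P)))
    a = np.linalg.solve(r[lags], -r[1:])
    b0_mag = np.sqrt(r[0] + a @ r[1:])
    return a, b0_mag

a1 = -0.5                                  # hypothetical AP(1)/AR(1) coefficient

# AP model with b0 = 2: r_h(l) = b0^2 (-a1)^|l| / (1 - a1^2)
b0 = 2.0
r_h = b0**2 * (-a1) ** np.arange(2) / (1.0 - a1**2)
a_ap, b0_mag = solve_ap_model(r_h)

# AR process with sigma_w^2 = 3 (unit-gain model): r_x(l) = sigma_w^2 (-a1)^|l| / (1 - a1^2)
sigma_w2 = 3.0
r_x = sigma_w2 * (-a1) ** np.arange(2) / (1.0 - a1**2)
a_ar, sigma_w_hat = solve_ap_model(r_x)

print(a_ap, b0_mag)                        # coefficient and |b0| of the AP model
print(a_ar, sigma_w_hat**2)                # coefficient and noise variance of the AR process
```

Scaling the autocorrelation by a constant leaves $a$ unchanged and only rescales the gain term, which is why a single notation $Ra = -r$ covers both problems.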
