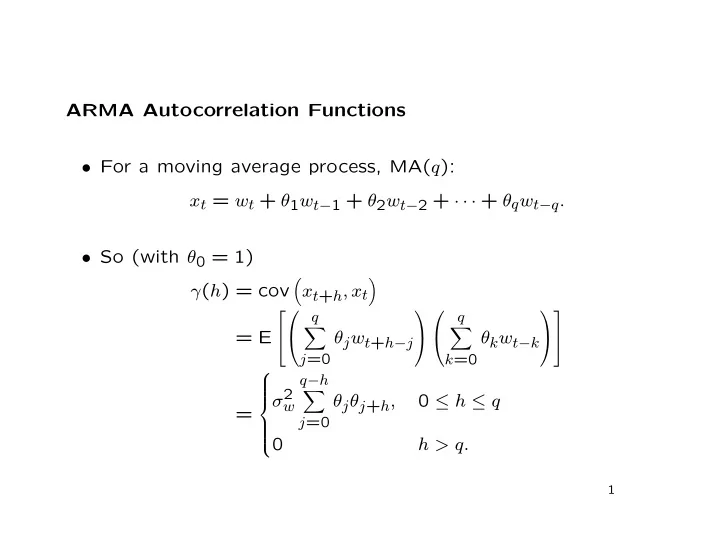

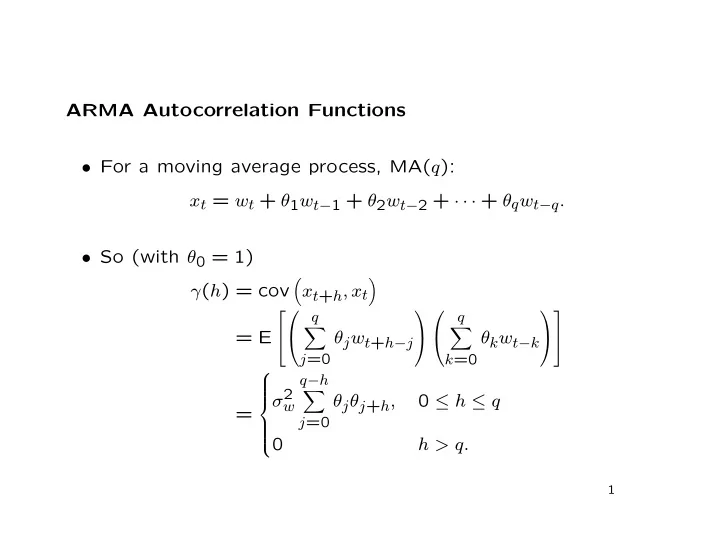

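ARMA Autocorrelation Functions

• For a moving average process, MA($q$):
$$x_t = w_t + \theta_1 w_{t-1} + \theta_2 w_{t-2} + \cdots + \theta_q w_{t-q}.$$
• So (with $\theta_0 = 1$)
$$\begin{aligned}
\gamma(h) &= \operatorname{cov}(x_{t+h}, x_t) = E\left[\sum_{j=0}^{q} \theta_j w_{t+h-j} \sum_{k=0}^{q} \theta_k w_{t-k}\right] \\
&= \begin{cases} \sigma_w^2 \sum_{j=0}^{q-h} \theta_j \theta_{j+h}, & 0 \le h \le q, \\ 0, & h > q. \end{cases}
\end{aligned}$$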

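• So the ACF is
$$\rho(h) = \begin{cases} \dfrac{\sum_{j=0}^{q-h} \theta_j \theta_{j+h}}{\sum_{j=0}^{q} \theta_j^2}, & 0 \le h \le q, \\ 0, & h > q. \end{cases}$$
• Notes:
  – In these expressions, $\theta_0 = 1$ for convenience.
  – $\gamma(q) = \sigma_w^2 \theta_q \ne 0$ (since $\theta_q \ne 0$), but $\gamma(h) = 0$ for $h > q$. This characterizes MA($q$).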
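The MA($q$) ACF formula can be evaluated directly. The following is a minimal NumPy sketch; the function name `ma_acf` is hypothetical, and the coefficients in the usage line are illustrative only.

```python
import numpy as np

def ma_acf(theta, max_lag):
    """Theoretical ACF rho(0..max_lag) of x_t = w_t + theta_1 w_{t-1} + ... + theta_q w_{t-q}."""
    # Prepend theta_0 = 1, as in the formulas above.
    th = np.concatenate(([1.0], np.asarray(theta, dtype=float)))
    q = len(th) - 1
    # gamma(h) / sigma_w^2 = sum_{j=0}^{q-h} theta_j theta_{j+h} for 0 <= h <= q, and 0 for h > q.
    gamma = np.zeros(max_lag + 1)
    for h in range(min(q, max_lag) + 1):
        gamma[h] = np.dot(th[: q - h + 1], th[h:])
    # sigma_w^2 cancels in the ratio gamma(h) / gamma(0).
    return gamma / gamma[0]

rho = ma_acf([0.4, 0.2], 5)   # illustrative MA(2): nonzero through lag 2, zero afterwards
```

The cut-off after lag $q$ comes from the loop bound: lags beyond $q$ are never filled in and stay zero.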

• For an autoregressive process, AR($p$):
$$x_t = \phi_1 x_{t-1} + \phi_2 x_{t-2} + \cdots + \phi_p x_{t-p} + w_t.$$
• So
$$\begin{aligned}
\gamma(h) &= \operatorname{cov}(x_{t+h}, x_t) = E\left[\left(\sum_{j=1}^{p} \phi_j x_{t+h-j} + w_{t+h}\right) x_t\right] \\
&= \sum_{j=1}^{p} \phi_j \gamma(h-j) + \operatorname{cov}(w_{t+h}, x_t).
\end{aligned}$$

• Because $x_t$ is causal, $x_t$ is $w_t$ plus a linear combination of $w_{t-1}, w_{t-2}, \ldots$.
• So
$$\operatorname{cov}(w_{t+h}, x_t) = \begin{cases} \sigma_w^2, & h = 0, \\ 0, & h > 0. \end{cases}$$
• Hence
$$\gamma(h) = \sum_{j=1}^{p} \phi_j \gamma(h-j), \quad h > 0,$$
and
$$\gamma(0) = \sum_{j=1}^{p} \phi_j \gamma(-j) + \sigma_w^2.$$

• If we know the parameters $\phi_1, \phi_2, \ldots, \phi_p$ and $\sigma_w^2$, these equations for $h = 0$ and $h = 1, 2, \ldots, p$ form $p + 1$ linear equations in the $p + 1$ unknowns $\gamma(0), \gamma(1), \ldots, \gamma(p)$ (using $\gamma(-j) = \gamma(j)$).
• The other autocovariances can then be found recursively from the equation for $h > p$.
• Alternatively, if we know (or have estimated) $\gamma(0), \gamma(1), \ldots, \gamma(p)$, they form $p + 1$ linear equations in the $p + 1$ parameters $\phi_1, \phi_2, \ldots, \phi_p$ and $\sigma_w^2$.
• These are the Yule-Walker equations.
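Both directions of the Yule-Walker equations are small linear solves. The sketch below assumes a causal, zero-mean AR($p$); the function names `ar_autocov` and `yule_walker` are hypothetical, and the parameter values are illustrative.

```python
import numpy as np

def ar_autocov(phi, sigma2, max_lag):
    """gamma(0..max_lag) of a causal AR(p): solve the h = 0..p equations, then recurse for h > p."""
    phi = np.asarray(phi, dtype=float)
    p = len(phi)
    # Unknowns gamma(0), ..., gamma(p); gamma(h - j) is stored at index |h - j|.
    A = np.zeros((p + 1, p + 1))
    b = np.zeros(p + 1)
    b[0] = sigma2  # only the h = 0 equation picks up sigma_w^2
    for h in range(p + 1):
        A[h, h] += 1.0
        for j in range(1, p + 1):
            A[h, abs(h - j)] -= phi[j - 1]
    gamma = list(np.linalg.solve(A, b))
    # For h > p: gamma(h) = sum_j phi_j gamma(h - j).
    for h in range(p + 1, max_lag + 1):
        gamma.append(sum(phi[j - 1] * gamma[h - j] for j in range(1, p + 1)))
    return np.array(gamma[: max_lag + 1])

def yule_walker(gamma, p):
    """Recover phi_1..phi_p and sigma_w^2 from gamma(0..p)."""
    gamma = np.asarray(gamma, dtype=float)
    # Equations h = 1..p: gamma(h) = sum_j phi_j gamma(|h - j|).
    Gamma = np.array([[gamma[abs(i - k)] for k in range(p)] for i in range(p)])
    phi = np.linalg.solve(Gamma, gamma[1 : p + 1])
    # Equation h = 0: sigma_w^2 = gamma(0) - sum_j phi_j gamma(j).
    sigma2 = gamma[0] - np.dot(phi, gamma[1 : p + 1])
    return phi, sigma2

gamma = ar_autocov([0.5, 0.3], 1.0, 10)       # parameters -> autocovariances
phi_hat, sigma2_hat = yule_walker(gamma, 2)   # autocovariances -> parameters (round trip)
```

Feeding sample autocovariances into `yule_walker` instead of exact ones gives the usual Yule-Walker estimates.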

• For the ARMA($p, q$) model with $p > 0$ and $q > 0$:
$$x_t = \phi_1 x_{t-1} + \phi_2 x_{t-2} + \cdots + \phi_p x_{t-p} + w_t + \theta_1 w_{t-1} + \theta_2 w_{t-2} + \cdots + \theta_q w_{t-q},$$
a generalized set of Yule-Walker equations must be used.
• The moving average models ARMA($0, q$) = MA($q$) are the only ones with a closed-form expression for $\gamma(h)$.
• For AR($p$) and ARMA($p, q$) with $p > 0$, the recursive equation means that for $h > \max(p, q + 1)$, $\gamma(h)$ is a sum of geometrically decaying terms, possibly damped oscillations.

• The recursive equation is
$$\gamma(h) = \sum_{j=1}^{p} \phi_j \gamma(h-j), \quad h > q.$$
• What kinds of sequences satisfy an equation like this?
  – Try $\gamma(h) = z^{-h}$ for some constant $z$.
  – The equation becomes
$$0 = z^{-h} - \sum_{j=1}^{p} \phi_j z^{-(h-j)} = z^{-h}\left(1 - \sum_{j=1}^{p} \phi_j z^{j}\right) = z^{-h} \phi(z).$$

• So if $\phi(z) = 0$, then $\gamma(h) = z^{-h}$ satisfies the equation.
• Since $\phi(z)$ is a polynomial of degree $p$, there are $p$ solutions, say $z_1, z_2, \ldots, z_p$.
• So a more general solution is
$$\gamma(h) = \sum_{l=1}^{p} c_l z_l^{-h},$$
for any constants $c_1, c_2, \ldots, c_p$.
• If $z_1, z_2, \ldots, z_p$ are distinct, this is the most general solution; if some roots are repeated, the general form is a little more complicated.
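A quick numerical check of this argument: find the zeros of $\phi(z) = 1 - \phi_1 z - \cdots - \phi_p z^p$ with `numpy.roots`, generate a sequence from the recursion, and confirm that $\sum_l c_l z_l^{-h}$ reproduces it once the $c_l$ are fitted to the first $p$ values. The helper names, the AR(2) coefficients, and the starting values are hypothetical; the sketch assumes distinct roots.

```python
import numpy as np

def ar_poly_roots(phi):
    """Zeros z_1, ..., z_p of phi(z) = 1 - phi_1 z - ... - phi_p z^p."""
    phi = np.asarray(phi, dtype=float)
    # np.roots expects coefficients from the highest power down: -phi_p, ..., -phi_1, 1.
    return np.roots(np.concatenate((-phi[::-1], [1.0])))

def extend_recursion(phi, start, n):
    """Extend g(0), ..., g(p-1) to length n using g(h) = sum_j phi_j g(h - j)."""
    g = list(start)
    p = len(phi)
    while len(g) < n:
        h = len(g)
        g.append(sum(phi[j - 1] * g[h - j] for j in range(1, p + 1)))
    return np.array(g)

phi = [0.5, 0.3]                             # illustrative AR(2) coefficients
z = ar_poly_roots(phi)                       # two distinct real roots, both with |z| > 1
g = extend_recursion(phi, [1.0, 0.7], 12)    # arbitrary starting values

# Choose c_1, ..., c_p so that sum_l c_l z_l^{-h} matches g(0), ..., g(p-1).
p = len(phi)
V = np.array([[zl ** (-h) for zl in z] for h in range(p)])
c = np.linalg.solve(V, g[:p])
general = np.array([np.sum(c * z ** (-h)) for h in range(len(g))])
print(np.allclose(general, g))
```

Once the $p$ constants match the first $p$ values, the recursion forces agreement at every later lag, which is why the fit on two points is enough here.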

• If all $z_1, z_2, \ldots, z_p$ are real, this is a sum of geometrically decaying terms (for a causal model every root satisfies $|z_l| > 1$, so each $z_l^{-h}$ shrinks geometrically).
• If any root is complex, its complex conjugate must also be a root (because $\phi(z)$ has real coefficients), and these two terms may be combined into geometrically decaying sine-cosine terms.
• The constants $c_1, c_2, \ldots, c_p$ are determined by initial conditions; in the ARMA case, these come from the generalized Yule-Walker equations.
• Note that the rates of decay are determined by the zeros of $\phi(z)$, the autoregressive operator, and do not depend on $\theta(z)$, the moving average operator.
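To see the complex-root case concretely, take a hypothetical AR(2) with $\phi_1 = 1$, $\phi_2 = -0.5$, so $\phi(z) = 1 - z + 0.5z^2$ has roots $1 \pm i$. The modulus $|z| = \sqrt{2}$ sets the decay rate and the argument $\pi/4$ sets the oscillation period (8 lags). The sketch computes the ACF from the recursion, starting at $\rho(1) = \phi_1/(1 - \phi_2)$, and checks that it equals one damped cosine built from the conjugate pair.

```python
import numpy as np

# Hypothetical AR(2) whose phi(z) = 1 - z + 0.5 z^2 has complex roots 1 +/- i.
phi = [1.0, -0.5]
coeffs = np.concatenate((-np.asarray(phi)[::-1], [1.0]))  # highest power first
z = np.roots(coeffs)

modulus = np.abs(z[0])             # |z| = sqrt(2) for both roots
decay = 1.0 / modulus              # amplitude shrinks by this factor per lag
omega = np.angle(z[0])             # angular frequency of the oscillation
period = 2 * np.pi / abs(omega)    # lags per cycle

# ACF from the recursion, started from rho(0) = 1 and rho(1) = phi_1 / (1 - phi_2).
rho = [1.0, phi[0] / (1 - phi[1])]
for h in range(2, 20):
    rho.append(phi[0] * rho[h - 1] + phi[1] * rho[h - 2])
rho = np.array(rho)

# Fit rho(h) = c z^{-h} + conj(c) conj(z)^{-h} from rho(0) and rho(1) ...
V = np.array([[zl ** (-h) for zl in z] for h in range(2)])
c = np.linalg.solve(V, rho[:2].astype(complex))

# ... and write the conjugate pair as a single damped cosine.
lags = np.arange(len(rho))
damped = 2 * np.abs(c[0]) * modulus ** (-lags) * np.cos(lags * omega - np.angle(c[0]))
print(np.allclose(damped, rho))
```

Only `phi` enters `modulus` and `omega`, which mirrors the point that the decay rates and frequencies come from $\phi(z)$ alone.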

• Example: ARMA($1, 1$)
$$x_t = \phi x_{t-1} + \theta w_{t-1} + w_t.$$
• The recursion is
$$\gamma(h) = \phi \gamma(h-1), \quad h = 2, 3, \ldots$$
• So $\rho(h) = c\phi^h$ for $h = 1, 2, \ldots$, but $c \ne 1$ (unless $\theta = 0$).
• Graphically, the ACF decays geometrically, but with a different value at $h = 0$.

[Figure: `ARMAacf(ar = 0.9, ma = -0.5, 24)`, the ACF of an ARMA(1, 1) with $\phi = 0.9$ and $\theta = -0.5$ at lags 0 to 24, plotted against Index.]
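For the ARMA(1, 1) example, the lag-1 value has a standard closed form (not given on these slides), $\rho(1) = (1 + \theta\phi)(\phi + \theta)/(1 + 2\theta\phi + \theta^2)$, and then $\rho(h) = \rho(1)\phi^{h-1}$. The sketch below evaluates it and cross-checks against truncated $\psi$-weights ($\psi_0 = 1$, $\psi_j = (\phi + \theta)\phi^{j-1}$); the function names and the truncation length are assumptions. With $\phi = 0.9$, $\theta = -0.5$ these values should correspond to the R call `ARMAacf(ar = 0.9, ma = -0.5, 24)` on the slide.

```python
import numpy as np

def arma11_acf(phi, theta, max_lag):
    """ACF of x_t = phi x_{t-1} + w_t + theta w_{t-1} (|phi| < 1), lags 0..max_lag."""
    rho = np.empty(max_lag + 1)
    rho[0] = 1.0
    # Closed form at lag 1; for h >= 2 the recursion rho(h) = phi rho(h-1) applies.
    rho1 = (1 + theta * phi) * (phi + theta) / (1 + 2 * theta * phi + theta ** 2)
    for h in range(1, max_lag + 1):
        rho[h] = rho1 * phi ** (h - 1)
    return rho

def arma11_acf_psi(phi, theta, max_lag, n_terms=2000):
    """Same ACF from truncated psi-weights: gamma(h) / sigma_w^2 = sum_j psi_j psi_{j+h}."""
    psi = np.empty(n_terms)
    psi[0] = 1.0
    psi[1:] = (phi + theta) * phi ** np.arange(n_terms - 1)
    gamma = np.array([np.dot(psi[: n_terms - h], psi[h:]) for h in range(max_lag + 1)])
    return gamma / gamma[0]

rho = arma11_acf(0.9, -0.5, 24)
c = rho[1] / 0.9   # the constant in rho(h) = c * phi^h for h >= 1; here c is about 0.70, not 1
```

The jump from $\rho(0) = 1$ down to $c\phi$ at lag 1, followed by a pure $\phi^h$ decay, is the "different value at $h = 0$" seen in the plot.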

The Partial Autocorrelation Function

• An MA($q$) can be identified from its ACF: non-zero up to lag $q$, and zero afterwards.
• We need a similar tool for AR($p$).
• The partial autocorrelation function (PACF) fills that role.

• Recall: for multivariate random variables $X$, $Y$, $Z$, the partial correlation of $X$ and $Y$ given $Z$ is the correlation of:
  – the residuals of $X$ from its regression on $Z$; and
  – the residuals of $Y$ from its regression on $Z$.
• Here “regression” means conditional expectation, or best linear prediction, based on population distributions, not a sample calculation.
• In a time series, the partial autocorrelations are defined as
$$\phi_{h,h} = \text{partial correlation of } x_{t+h} \text{ and } x_t \text{ given } x_{t+h-1}, x_{t+h-2}, \ldots, x_{t+1}.$$
For $h = 1$ there is nothing to condition on, so $\phi_{1,1} = \rho(1)$.
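The definition can be computed directly from a population covariance matrix: the residual covariance of $(X, Y)$ after best linear prediction from $Z$ is $\Sigma_{aa} - \Sigma_{aZ}\Sigma_{ZZ}^{-1}\Sigma_{Za}$, and the partial correlation is the correlation read off that $2 \times 2$ matrix. The function name `partial_corr` is hypothetical; the AR(1) covariance (up to a constant factor) is used purely as an illustration.

```python
import numpy as np

def partial_corr(S, i, j, given):
    """Partial correlation of variables i and j given the (non-empty) list `given`,
    from a population covariance matrix S, via the covariance of the residuals."""
    S = np.asarray(S, dtype=float)
    a = [i, j]
    S_aa = S[np.ix_(a, a)]
    S_ag = S[np.ix_(a, given)]
    S_gg = S[np.ix_(given, given)]
    # Covariance of the residuals from the best linear predictions given `given`.
    R = S_aa - S_ag @ np.linalg.solve(S_gg, S_ag.T)
    return R[0, 1] / np.sqrt(R[0, 0] * R[1, 1])

# Covariance of (x_t, x_{t+1}, ..., x_{t+h}) for an AR(1), up to the factor sigma_w^2 / (1 - phi^2).
phi, h = 0.6, 3
S = np.array([[phi ** abs(r - s) for s in range(h + 1)] for r in range(h + 1)])
pc = partial_corr(S, h, 0, list(range(1, h)))   # phi_{3,3}: zero for an AR(1)
```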

• For an autoregressive process, AR($p$):
$$x_t = \phi_1 x_{t-1} + \phi_2 x_{t-2} + \cdots + \phi_p x_{t-p} + w_t.$$
• If $h > p$, the regression of $x_{t+h}$ on $x_{t+h-1}, x_{t+h-2}, \ldots, x_{t+1}$ is
$$\phi_1 x_{t+h-1} + \phi_2 x_{t+h-2} + \cdots + \phi_p x_{t+h-p}.$$
• So the residual is just $w_{t+h}$, which is uncorrelated with $x_{t+h-1}, x_{t+h-2}, \ldots, x_{t+1}$ and $x_t$, and hence with the residual of $x_t$ from its regression on $x_{t+h-1}, \ldots, x_{t+1}$.

• So the partial autocorrelation is zero for $h > p$:
$$\phi_{h,h} = 0, \quad h > p.$$
• We can also show that $\phi_{p,p} = \phi_p$, which is non-zero by assumption.
• So $\phi_{p,p} \ne 0$ but $\phi_{h,h} = 0$ for $h > p$. This characterizes AR($p$).
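The argument shows that $\phi_{h,h}$ cuts off for an AR($p$) but does not say how to compute $\phi_{h,h}$ from $\rho(h)$. One standard method, not described on these slides, is the Durbin-Levinson recursion. The sketch below applies it to an illustrative AR(2) with $\phi_1 = 0.5$, $\phi_2 = 0.3$; the function name is hypothetical.

```python
import numpy as np

def pacf_from_acf(rho, max_lag):
    """phi_{h,h} for h = 1..max_lag from rho(0..max_lag), via the Durbin-Levinson recursion."""
    rho = np.asarray(rho, dtype=float)
    pacf = np.zeros(max_lag)
    prev = np.zeros(0)  # phi_{n-1,1}, ..., phi_{n-1,n-1}
    for n in range(1, max_lag + 1):
        if n == 1:
            phi_nn = rho[1]
        else:
            num = rho[n] - np.dot(prev, rho[n - 1 : 0 : -1])  # sum_k phi_{n-1,k} rho(n-k)
            den = 1.0 - np.dot(prev, rho[1:n])                 # sum_k phi_{n-1,k} rho(k)
            phi_nn = num / den
        # phi_{n,k} = phi_{n-1,k} - phi_{n,n} phi_{n-1,n-k}, k = 1..n-1; then append phi_{n,n}.
        prev = np.concatenate((prev - phi_nn * prev[::-1], [phi_nn]))
        pacf[n - 1] = phi_nn
    return pacf

# Illustrative AR(2): rho(1) = phi_1 / (1 - phi_2), then rho(h) = phi_1 rho(h-1) + phi_2 rho(h-2).
phi1, phi2 = 0.5, 0.3
rho = [1.0, phi1 / (1 - phi2)]
for h in range(2, 7):
    rho.append(phi1 * rho[h - 1] + phi2 * rho[h - 2])
pacf = pacf_from_acf(rho, 6)   # phi_{2,2} equals phi_2 = 0.3; later lags are zero
```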

The Inverse Autocorrelation Function

• SAS’s proc arima also shows the Inverse Autocorrelation Function (IACF).
• The IACF of the ARMA($p, q$) model $\phi(B) x_t = \theta(B) w_t$ is defined to be the ACF of the inverse (or dual) process
$$\theta(B) x_t^{(\text{inverse})} = \phi(B) w_t.$$
• The IACF has the same property as the PACF: AR($p$) is characterized by an IACF that is nonzero at lag $p$ but zero for larger lags.
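For a pure AR($p$), $\theta(B) = 1$, so the dual process is $x_t^{(\text{inverse})} = \phi(B) w_t = w_t - \phi_1 w_{t-1} - \cdots - \phi_p w_{t-p}$, an MA($p$) with coefficients $-\phi_j$. Its ACF, and hence the IACF, is zero beyond lag $p$. A minimal sketch restricted to that pure-AR case (general ARMA duals are not handled); the function name and coefficients are hypothetical.

```python
import numpy as np

def iacf_of_ar(phi, max_lag):
    """IACF of a pure AR(p), phi(B) x_t = w_t: the ACF of the dual MA(p) y_t = phi(B) w_t,
    whose MA coefficients are -phi_1, ..., -phi_p."""
    th = np.concatenate(([1.0], -np.asarray(phi, dtype=float)))
    p = len(th) - 1
    # MA autocovariance formula: sum_{j=0}^{p-h} th_j th_{j+h} for h <= p, else 0.
    gamma = np.zeros(max_lag + 1)
    for h in range(min(p, max_lag) + 1):
        gamma[h] = np.dot(th[: p - h + 1], th[h:])
    return gamma / gamma[0]

iacf = iacf_of_ar([0.5, 0.3], 5)   # nonzero at lag 2, zero for lags 3, 4, 5
```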

Summary: Identification of ARMA processes

• AR($p$) is characterized by a PACF or IACF that is:
  – nonzero at lag $p$;
  – zero for lags larger than $p$.
• MA($q$) is characterized by an ACF that is:
  – nonzero at lag $q$;
  – zero for lags larger than $q$.
• For anything else, try ARMA($p, q$) with $p > 0$ and $q > 0$.

For $p > 0$ and $q > 0$:

| Function | AR($p$) | MA($q$) | ARMA($p, q$) |
|---|---|---|---|
| ACF | Tails off | Cuts off after lag $q$ | Tails off |
| PACF | Cuts off after lag $p$ | Tails off | Tails off |
| IACF | Cuts off after lag $p$ | Tails off | Tails off |

• Note: these characteristics are used to guide the initial choice of a model; estimation and model-checking will often lead to a different model.

Other ARMA Identification Techniques

• SAS’s proc arima offers the MINIC option on the identify statement, which produces a table of SBC (Schwarz Bayesian criterion) values for various values of $p$ and $q$.
• The identify statement has two other options: ESACF and SCAN.
• Both produce tables in which the pattern of zero and non-zero values characterizes $p$ and $q$.
• See Section 3.4.10 in Brocklebank and Dickey.

```sas
options linesize = 80;
ods html file = 'varve3.html';

data varve;
  infile '../data/varve.dat';
  input varve;
  lv = log(varve);   /* log of the varve series */
run;

proc arima data = varve;
  title 'Use identify options to identify a good model';
  /* lv(1) is the first difference of lv; MINIC, ESACF and SCAN tables help choose p and q */
  identify var = lv(1) minic esacf scan;
  estimate q = 1 method = ml;
  estimate q = 2 method = ml;
  estimate p = 1 q = 1 method = ml;
run;
quit;

ods html close;
```

• proc arima output

