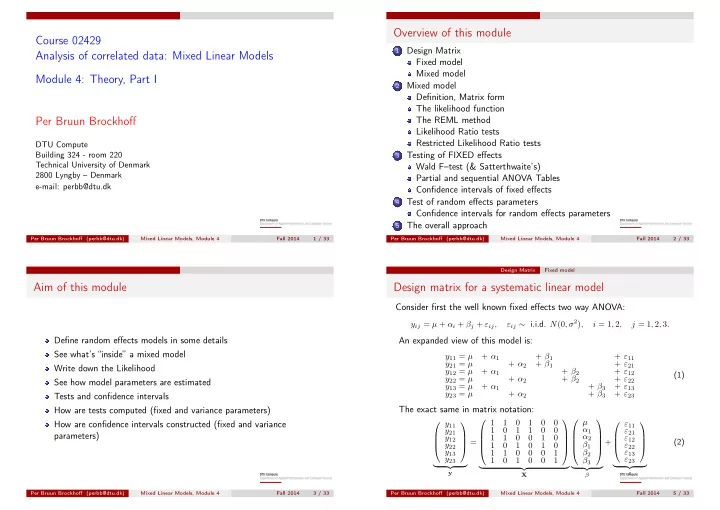

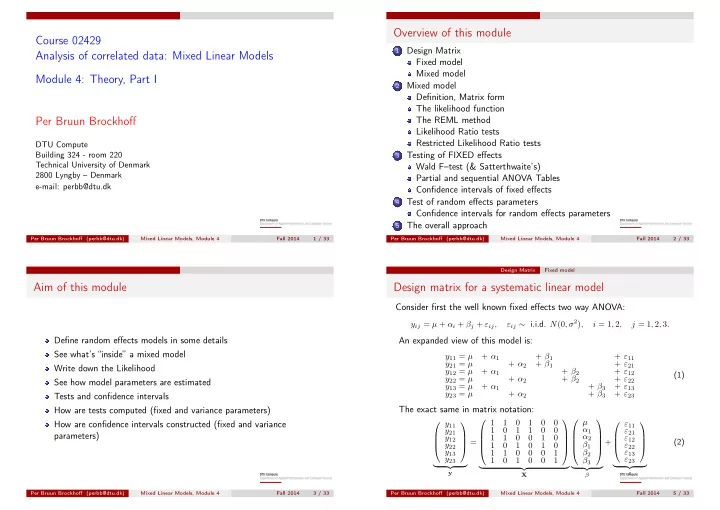

**Course 02429 Analysis of correlated data: Mixed Linear Models. Module 4: Theory, Part I**

Per Bruun Brockhoff, DTU Compute, Building 324 - room 220, Technical University of Denmark, 2800 Lyngby – Denmark, e-mail: perbb@dtu.dk

Per Bruun Brockhoff (perbb@dtu.dk), Mixed Linear Models, Module 4, Fall 2014

**Overview of this module**

1. Design Matrix
   - Fixed model
   - Mixed model
2. Mixed model
   - Definition, Matrix form
   - The likelihood function
   - The REML method
   - Likelihood Ratio tests
   - Restricted Likelihood Ratio tests
3. Testing of FIXED effects
   - Wald F-test (& Satterthwaite's)
   - Partial and sequential ANOVA Tables
   - Confidence intervals of fixed effects
4. Test of random effects parameters
   - Confidence intervals for random effects parameters
5. The overall approach

**Aim of this module**

- Define random effects models in some detail
- See what's "inside" a mixed model
- Write down the likelihood
- See how model parameters are estimated
- Tests and confidence intervals
  - How are tests computed (fixed and variance parameters)?
  - How are confidence intervals constructed (fixed and variance parameters)?

**Design matrix for a systematic linear model**

Consider first the well-known fixed effects two-way ANOVA:

$$y_{ij} = \mu + \alpha_i + \beta_j + \varepsilon_{ij}, \qquad \varepsilon_{ij} \sim \text{i.i.d. } N(0, \sigma^2), \qquad i = 1, 2, \; j = 1, 2, 3.$$

An expanded view of this model is:

$$\begin{aligned}
y_{11} &= \mu + \alpha_1 + \beta_1 + \varepsilon_{11}\\
y_{21} &= \mu + \alpha_2 + \beta_1 + \varepsilon_{21}\\
y_{12} &= \mu + \alpha_1 + \beta_2 + \varepsilon_{12}\\
y_{22} &= \mu + \alpha_2 + \beta_2 + \varepsilon_{22}\\
y_{13} &= \mu + \alpha_1 + \beta_3 + \varepsilon_{13}\\
y_{23} &= \mu + \alpha_2 + \beta_3 + \varepsilon_{23}
\end{aligned} \tag{1}$$

The exact same model in matrix notation:

$$\underbrace{\begin{pmatrix} y_{11}\\ y_{21}\\ y_{12}\\ y_{22}\\ y_{13}\\ y_{23} \end{pmatrix}}_{y}
= \underbrace{\begin{pmatrix}
1 & 1 & 0 & 1 & 0 & 0\\
1 & 0 & 1 & 1 & 0 & 0\\
1 & 1 & 0 & 0 & 1 & 0\\
1 & 0 & 1 & 0 & 1 & 0\\
1 & 1 & 0 & 0 & 0 & 1\\
1 & 0 & 1 & 0 & 0 & 1
\end{pmatrix}}_{X}
\underbrace{\begin{pmatrix} \mu\\ \alpha_1\\ \alpha_2\\ \beta_1\\ \beta_2\\ \beta_3 \end{pmatrix}}_{\beta}
+ \underbrace{\begin{pmatrix} \varepsilon_{11}\\ \varepsilon_{21}\\ \varepsilon_{12}\\ \varepsilon_{22}\\ \varepsilon_{13}\\ \varepsilon_{23} \end{pmatrix}}_{\varepsilon} \tag{2}$$
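As a quick check that the expanded equations and the matrix form say the same thing, the Python sketch below builds $X$ row by row in the slide's observation order $y_{11}, y_{21}, y_{12}, \ldots$ and multiplies it by a parameter vector. The parameter values are made up purely for illustration, and the helper names (`design_row`, `mean_vector`) are hypothetical, not taken from any library.

```python
import math

# Rows ordered as on the slide: y11, y21, y12, y22, y13, y23 (i runs fastest).
OBS_ORDER = [(i, j) for j in (1, 2, 3) for i in (1, 2)]


def design_row(i, j):
    """Row of X for observation y_ij; columns are mu, alpha1, alpha2, beta1, beta2, beta3."""
    return [1] + [int(i == a) for a in (1, 2)] + [int(j == b) for b in (1, 2, 3)]


def mean_vector(X, params):
    """Compute X @ params for nested lists (the systematic part of y = X beta + eps)."""
    return [sum(x * p for x, p in zip(row, params)) for row in X]


X = [design_row(i, j) for i, j in OBS_ORDER]

# Hypothetical parameter values, chosen only for illustration.
mu = 10.0
alpha = {1: 1.5, 2: -1.5}
beta = {1: -2.0, 2: 0.5, 3: 1.5}
params = [mu, alpha[1], alpha[2], beta[1], beta[2], beta[3]]

# Each entry of X @ params equals mu + alpha_i + beta_j from the expanded equations.
for (i, j), m in zip(OBS_ORDER, mean_vector(X, params)):
    assert math.isclose(m, mu + alpha[i] + beta[j])
    print(f"E(y_{i}{j}) = {m}")
```

Note that in this $X$ the $\mu$ column equals the sum of the two $\alpha$ columns (and also the sum of the three $\beta$ columns), so the columns are linearly dependent; such dependencies are what has to be removed when constructing a design matrix.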

In the matrix formulation $y = X\beta + \varepsilon$ of the two-way ANOVA:

- $y$ is the vector of all observations
- $X$ is known as the design matrix
- $\beta$ is the vector of fixed effect parameters
- $\varepsilon$ is a vector of independent $N(0, \sigma^2)$ "measurement errors"

The vector $\varepsilon$ is said to follow a multivariate normal distribution with mean vector $0$ and covariance matrix $\sigma^2 I$, written as $\varepsilon \sim N(0, \sigma^2 I)$.

$y = X\beta + \varepsilon$ specifies the model, and everything can be calculated from $y$ and $X$.

**Construction of the design matrix**

In a general systematic linear model (with both factors and covariates), it is surprisingly easy to construct the design matrix $X$. The overall mean (intercept) corresponds to a column of ones, and then:

- For each factor: add one column for each level, with ones in the rows where the corresponding observation is from that level, and zeros otherwise.
- For each covariate: add one column with the measurements of the covariate.
- Remove linear dependencies (if necessary).

Example: linear regression, $y_i = \alpha + \beta \cdot x_i + \varepsilon_i$. In matrix notation:

$$y = \begin{pmatrix} 1 & x_1\\ 1 & x_2\\ 1 & x_3\\ \vdots & \vdots\\ 1 & x_n \end{pmatrix} \begin{pmatrix} \alpha\\ \beta \end{pmatrix} + \varepsilon$$

**The mixed linear model**

Consider now the one-way ANOVA with random block effect:

$$y_{ij} = \mu + \alpha_i + b_j + \varepsilon_{ij}, \qquad b_j \sim N(0, \sigma_B^2), \qquad \varepsilon_{ij} \sim N(0, \sigma^2), \qquad i = 1, 2, \; j = 1, 2, 3.$$

The matrix notation is:

$$\underbrace{\begin{pmatrix} y_{11}\\ y_{21}\\ y_{12}\\ y_{22}\\ y_{13}\\ y_{23} \end{pmatrix}}_{y}
= \underbrace{\begin{pmatrix} 1 & 1 & 0\\ 1 & 0 & 1\\ 1 & 1 & 0\\ 1 & 0 & 1\\ 1 & 1 & 0\\ 1 & 0 & 1 \end{pmatrix}}_{X}
\underbrace{\begin{pmatrix} \mu\\ \alpha_1\\ \alpha_2 \end{pmatrix}}_{\beta}
+ \underbrace{\begin{pmatrix} 1 & 0 & 0\\ 1 & 0 & 0\\ 0 & 1 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1\\ 0 & 0 & 1 \end{pmatrix}}_{Z}
\underbrace{\begin{pmatrix} b_1\\ b_2\\ b_3 \end{pmatrix}}_{u}
+ \underbrace{\begin{pmatrix} \varepsilon_{11}\\ \varepsilon_{21}\\ \varepsilon_{12}\\ \varepsilon_{22}\\ \varepsilon_{13}\\ \varepsilon_{23} \end{pmatrix}}_{\varepsilon}$$

Notice how this matrix representation is constructed in exactly the same way as for the fixed effects model, but separately for fixed and random effects.

**A general linear mixed effects model**

A general linear mixed model can be presented in matrix notation by:

$$y = X\beta + Zu + \varepsilon, \qquad \text{where } u \sim N(0, G) \text{ and } \varepsilon \sim N(0, R).$$

- $y$ is the observation vector
- $X$ is the design matrix for the fixed effects
- $\beta$ is the vector containing the fixed effect parameters
- $Z$ is the design matrix for the random effects
- $u$ is the vector of random effects
  - It is assumed that $u \sim N(0, G)$
  - $\operatorname{cov}(u_i, u_j) = G_{i,j}$ (typically $G$ has a very simple structure, for instance diagonal)
- $\varepsilon$ is the vector of residual errors
  - It is assumed that $\varepsilon \sim N(0, R)$
  - $\operatorname{cov}(\varepsilon_i, \varepsilon_j) = R_{i,j}$ (typically $R$ is diagonal, but we shall later see some useful exceptions for repeated measurements)
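The construction rule for design matrices can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: factor levels are given as one plain Python value per observation, level columns are ordered by sorting the levels, an intercept column of ones is optionally prepended (as for $\mu$ in the ANOVA examples), and the final step of removing linear dependencies is not implemented. The function names are hypothetical. Applied to the one-way ANOVA with random block effect, the same function gives both $X$ (treatment factor, with intercept) and $Z$ (block factor, without intercept).

```python
def indicator_columns(values):
    """One column per level (levels in sorted order): 1 where the observation has that level, else 0."""
    levels = sorted(set(values))
    return [[int(v == level) for level in levels] for v in values]


def design_matrix(n, factors=(), covariates=(), intercept=True):
    """Build a design matrix as a list of n rows.

    factors:    sequences of factor levels, one entry per observation
    covariates: sequences of numeric measurements, one entry per observation
    Linear dependencies are NOT removed here.
    """
    rows = [[1] if intercept else [] for _ in range(n)]
    for factor in factors:
        for row, cols in zip(rows, indicator_columns(factor)):
            row.extend(cols)
    for covariate in covariates:
        for row, value in zip(rows, covariate):
            row.append(value)
    return rows


# One-way ANOVA with random block effect, observations ordered y11, y21, y12, y22, y13, y23
treatment = [1, 2, 1, 2, 1, 2]  # index i
block = [1, 1, 2, 2, 3, 3]      # index j

X = design_matrix(6, factors=[treatment])               # columns: mu, alpha1, alpha2
Z = design_matrix(6, factors=[block], intercept=False)  # columns: b1, b2, b3

# Linear regression y_i = alpha + beta * x_i + eps_i with hypothetical x values
x = [0.5, 1.0, 2.0, 3.5]
X_reg = design_matrix(len(x), covariates=[x])           # columns: 1, x_i

for row_x, row_z in zip(X, Z):
    print(row_x, row_z)
```

Passing both factors with an intercept, `design_matrix(6, factors=[treatment, block])`, reproduces the $6 \times 6$ design matrix of the fixed effects two-way ANOVA, including its linearly dependent columns.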

**The distribution of $y$**

From the model description

$$y = X\beta + Zu + \varepsilon, \qquad u \sim N(0, G), \qquad \varepsilon \sim N(0, R),$$

we can compute the mean vector $\mu = E(y)$ and the covariance matrix $V = \operatorname{var}(y)$:

$$\mu = E(X\beta + Zu + \varepsilon) = X\beta \qquad [\text{all other terms have mean zero}]$$

$$\begin{aligned}
V &= \operatorname{var}(X\beta + Zu + \varepsilon) && [\text{from model}]\\
&= \operatorname{var}(X\beta) + \operatorname{var}(Zu) + \operatorname{var}(\varepsilon) && [\text{all terms are independent}]\\
&= \operatorname{var}(Zu) + \operatorname{var}(\varepsilon) && [\text{variance of fixed effects is zero}]\\
&= Z \operatorname{var}(u) Z' + \operatorname{var}(\varepsilon) && [Z \text{ is constant}]\\
&= ZGZ' + R && [\text{from model}]
\end{aligned}$$

So $y$ follows a multivariate normal distribution: $y \sim N(X\beta, ZGZ' + R)$.

**One-way ANOVA with random block effect**

Consider again the model

$$y_{ij} = \mu + \alpha_i + b_j + \varepsilon_{ij}, \qquad b_j \sim N(0, \sigma_B^2), \qquad \varepsilon_{ij} \sim N(0, \sigma^2), \qquad i = 1, 2, \; j = 1, 2, 3.$$

Calculation of $\mu$ and $V$ gives:

$$\mu = \begin{pmatrix} \mu + \alpha_1\\ \mu + \alpha_2\\ \mu + \alpha_1\\ \mu + \alpha_2\\ \mu + \alpha_1\\ \mu + \alpha_2 \end{pmatrix}, \qquad
V = \begin{pmatrix}
\sigma_B^2 + \sigma^2 & \sigma_B^2 & 0 & 0 & 0 & 0\\
\sigma_B^2 & \sigma_B^2 + \sigma^2 & 0 & 0 & 0 & 0\\
0 & 0 & \sigma_B^2 + \sigma^2 & \sigma_B^2 & 0 & 0\\
0 & 0 & \sigma_B^2 & \sigma_B^2 + \sigma^2 & 0 & 0\\
0 & 0 & 0 & 0 & \sigma_B^2 + \sigma^2 & \sigma_B^2\\
0 & 0 & 0 & 0 & \sigma_B^2 & \sigma_B^2 + \sigma^2
\end{pmatrix}$$

Notice that two observations from the same block are correlated: their covariance is $\sigma_B^2$, i.e. their correlation is $\sigma_B^2 / (\sigma_B^2 + \sigma^2)$.

**The likelihood function**

- The likelihood $L$ is a function of model parameters and observations.
- For given parameter values, $L$ returns a measure of the probability of observing $y$.
- The negative log-likelihood $\ell$ for a mixed linear model is, up to an additive constant,

$$\ell(y, \beta, \gamma) = \tfrac{1}{2}\Big[\log|V(\gamma)| + (y - X\beta)'(V(\gamma))^{-1}(y - X\beta)\Big]$$

- Here $\gamma$ is the vector of variance parameters ($\sigma^2$ and $\sigma_B^2$ in our example).
- A natural estimate is to choose the parameters that make our observations most likely:

$$(\hat\beta, \hat\gamma) = \operatorname*{argmin}_{(\beta, \gamma)} \ell(y, \beta, \gamma)$$

This is the maximum likelihood (ML) method.

**The restricted/residual maximum likelihood method**

- The maximum likelihood method tends to give (slightly) too low estimates of the random effects parameters. We say it is biased downwards.
- The simplest example is $(x_1, \ldots, x_n) \sim \text{i.i.d. } N(\mu, \sigma^2)$:
  - $\hat\sigma^2 = \frac{1}{n}\sum_i (x_i - \bar x)^2$ is the maximum likelihood estimate, but
  - $\hat\sigma^2 = \frac{1}{n-1}\sum_i (x_i - \bar x)^2$ is generally preferred, because it is unbiased.
- The restricted/residual maximum likelihood (REML) method modifies the maximum likelihood method by minimizing (up to an additive constant)

$$\ell_{re}(y, \beta, \gamma) = \tfrac{1}{2}\Big[\log|V(\gamma)| + (y - X\beta)'(V(\gamma))^{-1}(y - X\beta) + \log|X'(V(\gamma))^{-1}X|\Big],$$

  which gives unbiased estimates (at least in balanced cases).
- The REML method is generally preferred in mixed models.
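To see the distribution of $y$ and the likelihood at work numerically, the Python sketch below assembles $V = ZGZ' + R$ for the one-way ANOVA with random block effect (assuming $G = \sigma_B^2 I$ and $R = \sigma^2 I$, as in the example) and evaluates the negative log-likelihood $\tfrac12[\log|V| + (y - X\beta)'V^{-1}(y - X\beta)]$, dropping the constant $\tfrac{n}{2}\log 2\pi$. It uses a hand-written Cholesky factorization $V = LL'$, so that $\log|V| = 2\sum_k \log L_{kk}$ and the quadratic form equals $\|L^{-1}(y - X\beta)\|^2$; this is one standard way to evaluate it, not necessarily what any particular mixed-model software does. The data $y$, the parameter values and all helper names are hypothetical. Minimizing this function over $(\beta, \gamma)$ with a numerical optimizer would give the ML estimates.

```python
import math


def matmul(A, B):
    """Matrix product of nested lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def scaled_identity(n, s):
    return [[s if i == j else 0.0 for j in range(n)] for i in range(n)]


def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def cholesky(V):
    """Lower-triangular L with V = L L' (V must be symmetric positive definite)."""
    n = len(V)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = V[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def forward_solve(L, b):
    """Solve L z = b for lower-triangular L."""
    z = []
    for i, b_i in enumerate(b):
        z.append((b_i - sum(L[i][k] * z[k] for k in range(i))) / L[i][i])
    return z


def neg_loglik(y, X, beta, V):
    """0.5 * (log|V| + r' V^{-1} r) with r = y - X beta; the constant 0.5 * n * log(2 pi) is dropped."""
    mean = [sum(x * b for x, b in zip(row, beta)) for row in X]
    r = [y_i - m_i for y_i, m_i in zip(y, mean)]
    L = cholesky(V)
    log_det = 2.0 * sum(math.log(L[k][k]) for k in range(len(L)))
    z = forward_solve(L, r)  # r' V^{-1} r = ||L^{-1} r||^2
    return 0.5 * (log_det + sum(z_i * z_i for z_i in z))


# One-way ANOVA with random block effect; rows ordered y11, y21, y12, y22, y13, y23
X = [[1, 1, 0], [1, 0, 1], [1, 1, 0], [1, 0, 1], [1, 1, 0], [1, 0, 1]]
Z = [[1, 0, 0], [1, 0, 0], [0, 1, 0], [0, 1, 0], [0, 0, 1], [0, 0, 1]]

sigma2_B, sigma2 = 2.0, 1.0       # hypothetical variance parameters gamma
G = scaled_identity(3, sigma2_B)  # var(u) for u = (b1, b2, b3)
R = scaled_identity(6, sigma2)    # var(eps)
V = add(matmul(matmul(Z, G), transpose(Z)), R)

for row in V:
    print(row)  # block-diagonal: 2x2 blocks [[sB2 + s2, sB2], [sB2, sB2 + s2]]

y = [12.0, 8.5, 10.0, 9.5, 11.5, 10.0]  # hypothetical observations
beta = [10.0, 1.0, -1.0]                # hypothetical (mu, alpha1, alpha2)
print(neg_loglik(y, X, beta, V))
```

The rank-deficient $X$ is harmless here because only $X\beta$ is needed; it would matter when estimating $\beta$.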
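The simplest example can be worked through directly to see where the divisor $n-1$ comes from. Take $X = \mathbf{1}_n$ (a single column of ones, so $\beta = \mu$) and $V(\gamma) = \sigma^2 I_n$, and plug in $\hat\mu = \bar x$, which minimizes the quadratic form for every $\sigma^2$ (the extra REML term does not involve $\beta$). With $SS = \sum_i (x_i - \bar x)^2$ and $X'V^{-1}X = n/\sigma^2$:

```latex
\begin{aligned}
\ell(\sigma^2) &= \tfrac{1}{2}\Big[\, n\log\sigma^2 + \frac{SS}{\sigma^2} \Big],
\qquad
\frac{d\ell}{d\sigma^2} = \frac{1}{2}\Big[\frac{n}{\sigma^2} - \frac{SS}{\sigma^4}\Big] = 0
\;\Rightarrow\; \hat\sigma^2_{ML} = \frac{SS}{n},\\[4pt]
\ell_{re}(\sigma^2) &= \tfrac{1}{2}\Big[\, n\log\sigma^2 + \frac{SS}{\sigma^2} + \log\frac{n}{\sigma^2} \Big]
 = \tfrac{1}{2}\Big[\,(n-1)\log\sigma^2 + \frac{SS}{\sigma^2} + \log n \Big],\\[4pt]
\frac{d\ell_{re}}{d\sigma^2} &= \frac{1}{2}\Big[\frac{n-1}{\sigma^2} - \frac{SS}{\sigma^4}\Big] = 0
\;\Rightarrow\; \hat\sigma^2_{REML} = \frac{SS}{n-1}.
\end{aligned}
```

The extra term $\log|X'V^{-1}X|$ contributes $-\log\sigma^2$, which removes one $\log\sigma^2$ from the criterion and so accounts for the one degree of freedom used to estimate $\mu$.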
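A small numerical check of the ML versus REML point for the i.i.d. normal example: with $X$ a single column of ones and $V = \sigma^2 I$ (so $\log|X'V^{-1}X| = \log(n/\sigma^2)$) and $\mu$ fixed at the sample mean, both criteria are minimized over $\sigma^2$. The golden-section search is our own choice of optimizer for this one-dimensional sketch, not something prescribed by the course, and the data are made up. The ML minimizer should agree with Python's `statistics.pvariance` (divisor $n$) and the REML minimizer with `statistics.variance` (divisor $n-1$).

```python
import math
import statistics


def ml_criterion(s2, ss, n):
    """ML negative log-likelihood (constants dropped), mu fixed at the sample mean."""
    return 0.5 * (n * math.log(s2) + ss / s2)


def reml_criterion(s2, ss, n):
    """REML criterion: adds log|X' V^{-1} X| = log(n / s2) for X = ones, V = s2 * I."""
    return 0.5 * (n * math.log(s2) + ss / s2 + math.log(n / s2))


def golden_section_min(f, lo, hi, tol=1e-9):
    """Minimize a unimodal function f on [lo, hi] by golden-section search."""
    g = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    while b - a > tol:
        c = b - g * (b - a)
        d = a + g * (b - a)
        if f(c) < f(d):
            b = d  # minimum lies in [a, d]
        else:
            a = c  # minimum lies in [c, b]
    return (a + b) / 2


x = [4.1, 5.3, 3.8, 6.0, 5.2]  # hypothetical sample
n = len(x)
x_bar = statistics.mean(x)
ss = sum((xi - x_bar) ** 2 for xi in x)

s2_ml = golden_section_min(lambda s2: ml_criterion(s2, ss, n), 1e-6, 10 * ss)
s2_reml = golden_section_min(lambda s2: reml_criterion(s2, ss, n), 1e-6, 10 * ss)

print(f"ML:   {s2_ml:.4f}  vs  SS/n     = {statistics.pvariance(x):.4f}")
print(f"REML: {s2_reml:.4f}  vs  SS/(n-1) = {statistics.variance(x):.4f}")
```

The ML estimate is always the REML estimate times $(n-1)/n$, which is the downward bias described above.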
