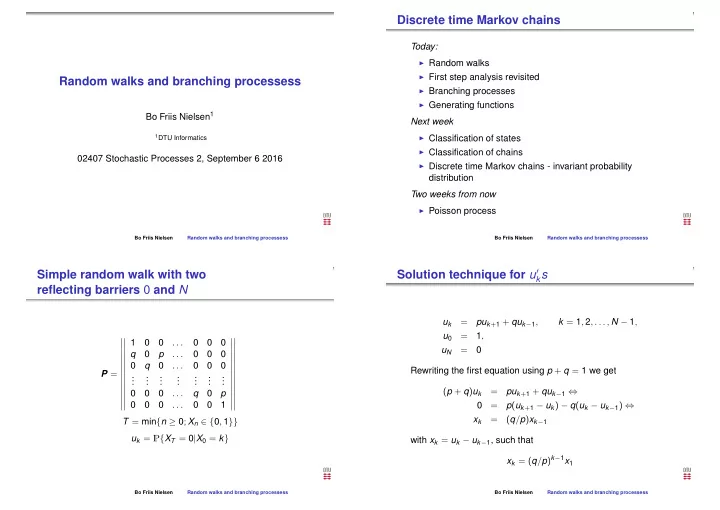

Random walks and branching processes

Bo Friis Nielsen

DTU Informatics

02407 Stochastic Processes 2, September 6 2016

Discrete time Markov chains

Today:

◮ Random walks
◮ First step analysis revisited
◮ Branching processes
◮ Generating functions

Next week:

◮ Classification of states
◮ Classification of chains
◮ Discrete time Markov chains - invariant probability distribution

Two weeks from now:

◮ Poisson process

Simple random walk with two absorbing barriers 0 and N

$$
P = \begin{pmatrix}
1 & 0 & 0 & \cdots & 0 & 0 & 0\\
q & 0 & p & \cdots & 0 & 0 & 0\\
0 & q & 0 & \cdots & 0 & 0 & 0\\
\vdots & \vdots & \vdots & & \vdots & \vdots & \vdots\\
0 & 0 & 0 & \cdots & q & 0 & p\\
0 & 0 & 0 & \cdots & 0 & 0 & 1
\end{pmatrix}
$$

$$T = \min\{n \ge 0 : X_n \in \{0, N\}\}, \qquad u_k = P\{X_T = 0 \mid X_0 = k\}$$

Solution technique for the $u_k$'s

$$u_k = p u_{k+1} + q u_{k-1}, \quad k = 1, 2, \ldots, N-1, \qquad u_0 = 1, \quad u_N = 0$$

Rewriting the first equation using $p + q = 1$ we get

$$
\begin{aligned}
(p+q)\, u_k &= p u_{k+1} + q u_{k-1} \quad\Leftrightarrow\\
0 &= p(u_{k+1} - u_k) - q(u_k - u_{k-1}) \quad\Leftrightarrow\\
x_{k+1} &= (q/p)\, x_k
\end{aligned}
$$

with $x_k = u_k - u_{k-1}$, such that $x_k = (q/p)^{k-1} x_1$.
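As a cross-check on the difference-equation technique, here is a minimal sketch that builds the matrix $P$ above with exact fractions. It then solves the first-step equations $u_k = p u_{k+1} + q u_{k-1}$, $u_0 = 1$, $u_N = 0$ directly as a linear system by plain Gauss-Jordan elimination. That solver is a swapped-in generic method, not the technique on the slide. The helper names `random_walk_matrix`, `solve_linear` and `solve_first_step` are hypothetical.

```python
from fractions import Fraction


def random_walk_matrix(N, p):
    """Transition matrix on states 0..N with absorbing barriers 0 and N."""
    q = 1 - p
    P = [[Fraction(0)] * (N + 1) for _ in range(N + 1)]
    P[0][0] = Fraction(1)  # state 0 is absorbing
    P[N][N] = Fraction(1)  # state N is absorbing
    for k in range(1, N):
        P[k][k - 1] = q  # one step down with probability q
        P[k][k + 1] = p  # one step up with probability p
    return P


def solve_linear(A, b):
    """Solve A u = b by Gauss-Jordan elimination (A assumed non-singular)."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def solve_first_step(N, p):
    """Absorption probabilities u_k = P{X_T = 0 | X_0 = k}, k = 0..N."""
    P = random_walk_matrix(N, p)
    A, b = [], []
    for k in range(N + 1):
        if k == 0 or k == N:
            # Boundary rows fix u_0 = 1 and u_N = 0.
            row = [Fraction(0)] * (N + 1)
            row[k] = Fraction(1)
            A.append(row)
            b.append(Fraction(1) if k == 0 else Fraction(0))
        else:
            # Interior rows encode u_k - sum_j P_kj u_j = 0.
            A.append([(1 if j == k else 0) - P[k][j] for j in range(N + 1)])
            b.append(Fraction(0))
    return solve_linear(A, b)


print(solve_first_step(4, Fraction(1, 2)))
```

For the symmetric walk with $N = 4$ this returns $1, 3/4, 1/2, 1/4, 0$, which agrees with $(N-k)/N$.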

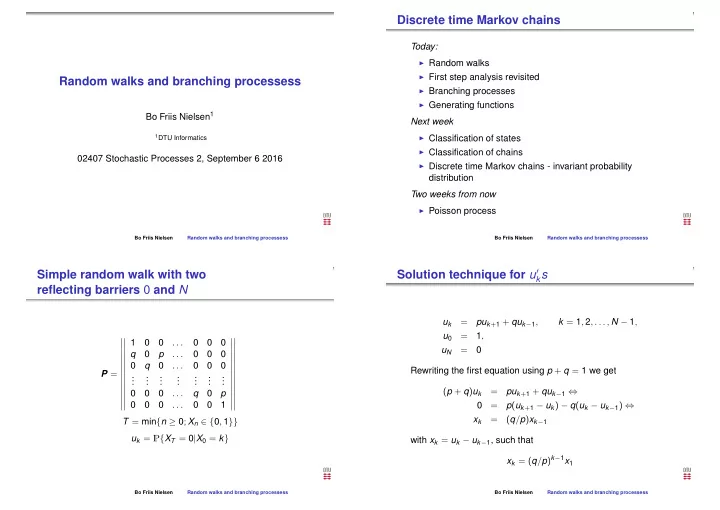

Recovering $u_k$

$$
\begin{aligned}
x_1 &= u_1 - u_0 = u_1 - 1\\
x_2 &= u_2 - u_1\\
&\;\;\vdots\\
x_k &= u_k - u_{k-1}
\end{aligned}
$$

such that

$$
\begin{aligned}
u_1 &= x_1 + 1\\
u_2 &= x_2 + x_1 + 1\\
&\;\;\vdots\\
u_k &= x_k + x_{k-1} + \cdots + 1 = 1 + x_1 \sum_{i=0}^{k-1} (q/p)^i
\end{aligned}
$$

Values of absorption probabilities $u_k$

From $u_N = 0$ we get

$$0 = 1 + x_1 \sum_{i=0}^{N-1} (q/p)^i \quad\Leftrightarrow\quad x_1 = -\frac{1}{\sum_{i=0}^{N-1} (q/p)^i}$$

Leading to

$$
u_k = \begin{cases}
1 - k/N = (N-k)/N & \text{when } p = q = \tfrac12,\\[4pt]
\dfrac{(q/p)^k - (q/p)^N}{1 - (q/p)^N} & \text{when } p \ne q.
\end{cases}
$$

Direct calculation as opposed to first step analysis

$$
P = \begin{pmatrix} Q & R\\ 0 & I \end{pmatrix}, \qquad
P^2 = \begin{pmatrix} Q & R\\ 0 & I \end{pmatrix}\begin{pmatrix} Q & R\\ 0 & I \end{pmatrix} = \begin{pmatrix} Q^2 & QR + R\\ 0 & I \end{pmatrix}
$$

$$
P^n = \begin{pmatrix} Q^n & Q^{n-1}R + Q^{n-2}R + \cdots + QR + R\\ 0 & I \end{pmatrix}
$$

$$
W_{ij}^{(n)} = E\left[\sum_{\ell=0}^{n} \mathbf{1}(X_\ell = j) \,\middle|\, X_0 = i\right], \qquad
\mathbf{1}(X_\ell = j) = \begin{cases} 1 & \text{if } X_\ell = j,\\ 0 & \text{if } X_\ell \ne j. \end{cases}
$$

Expected number of visits to states

$$W_{ij}^{(n)} = Q_{ij}^{(0)} + Q_{ij}^{(1)} + \cdots + Q_{ij}^{(n)}$$

In matrix notation we get

$$
\begin{aligned}
W^{(n)} &= I + Q + Q^2 + \cdots + Q^n\\
&= I + Q\left(I + Q + \cdots + Q^{n-1}\right) = I + Q W^{(n-1)}
\end{aligned}
$$

Elementwise we get the "first step analysis" equations

$$W_{ij}^{(n)} = \delta_{ij} + \sum_{k=0}^{r-1} P_{ik} W_{kj}^{(n-1)}$$
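The following sketch checks two results above with exact rational arithmetic. `u_closed_form` evaluates the formula for $u_k$. `u_from_x1` rebuilds $u_k = 1 + x_1 \sum_{i=0}^{k-1} (q/p)^i$ from $x_1$. `visits` iterates $W^{(n)} = I + Q W^{(n-1)}$ from $W^{(0)} = I$. All helper names are hypothetical. As an assumption for illustration, $Q$ is taken to be the transient block of the random walk, covering the interior states $1, \ldots, N-1$, which the code indexes as $0, \ldots, N-2$.

```python
from fractions import Fraction


def u_closed_form(k, N, p):
    """Closed-form probability of absorption in 0 for the walk started in k."""
    q = 1 - p
    if p == q:
        return Fraction(N - k, N)
    r = q / p
    return (r**k - r**N) / (1 - r**N)


def u_from_x1(k, N, p):
    """u_k = 1 + x_1 * sum_{i<k} (q/p)^i, with x_1 fixed by u_N = 0."""
    r = (1 - p) / p
    x1 = -1 / sum(r**i for i in range(N))
    return 1 + x1 * sum(r**i for i in range(k))


def transient_block(N, p):
    """Q restricted to the interior states 1..N-1 (indexed 0..N-2)."""
    q = 1 - p
    m = N - 1
    Q = [[Fraction(0)] * m for _ in range(m)]
    for i in range(m):
        if i > 0:
            Q[i][i - 1] = q
        if i < m - 1:
            Q[i][i + 1] = p
    return Q


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def identity(m):
    return [[Fraction(int(i == j)) for j in range(m)] for i in range(m)]


def visits(Q, n):
    """W^(n) via the recursion W^(n) = I + Q W^(n-1), starting from W^(0) = I."""
    m = len(Q)
    I = identity(m)
    W = I
    for _ in range(n):
        QW = matmul(Q, W)
        W = [[I[i][j] + QW[i][j] for j in range(m)] for i in range(m)]
    return W


print(u_closed_form(2, 5, Fraction(2, 3)), u_from_x1(2, 5, Fraction(2, 3)))  # 7/31 7/31
```

Because everything is a `Fraction`, the closed form and the summed differences can be compared for exact equality rather than up to rounding.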

Limiting equations as $n \to \infty$

$$W = I + Q + Q^2 + \cdots = \sum_{i=0}^{\infty} Q^i, \qquad W = I + QW$$

From the latter we get

$$(I - Q)W = I$$

When all states related to $Q$ are transient (we have assumed that) we have

$$W = \sum_{i=0}^{\infty} Q^i = (I - Q)^{-1}$$

With $T = \min\{n \ge 0 : r \le X_n \le N\}$ we have that

$$W_{ij} = E\left[\sum_{n=0}^{T-1} \mathbf{1}(X_n = j) \,\middle|\, X_0 = i\right]$$

Absorption time

$$\sum_{n=0}^{T-1} \sum_{j=0}^{r-1} \mathbf{1}(X_n = j) = \sum_{n=0}^{T-1} 1 = T$$

Thus

$$
\begin{aligned}
E(T \mid X_0 = i) &= E\left[\sum_{j=0}^{r-1} \sum_{n=0}^{T-1} \mathbf{1}(X_n = j) \,\middle|\, X_0 = i\right]\\
&= \sum_{j=0}^{r-1} E\left[\sum_{n=0}^{T-1} \mathbf{1}(X_n = j) \,\middle|\, X_0 = i\right] = \sum_{j=0}^{r-1} W_{ij}
\end{aligned}
$$

In matrix formulation $v = W\mathbf{1}$, where $v_i = E(T \mid X_0 = i)$ as last week, and $\mathbf{1}$ is a column vector of ones.

Absorption probabilities

$$U_{ij}^{(n)} = P\{T \le n, X_T = j \mid X_0 = i\}$$

$$
\begin{aligned}
U^{(1)} &= R = IR\\
U^{(2)} &= IR + QR\\
U^{(n)} &= \left(I + Q + \cdots + Q^{n-1}\right)R = W^{(n-1)}R\\
U &= WR
\end{aligned}
$$

Random sum (2.3)

$$X = \xi_1 + \cdots + \xi_N = \sum_{i=1}^{N} \xi_i$$

where $N$ is a random variable taking values among the non-negative integers, and the $\xi_i$ are independent and identically distributed and independent of $N$, with

$$E(N) = \nu, \quad \mathrm{Var}(N) = \tau^2, \quad E(\xi_i) = \mu, \quad \mathrm{Var}(\xi_i) = \sigma^2$$

Leading to

$$
\begin{aligned}
E(X) &= E(E(X \mid N)) = E(N\mu) = \nu\mu\\
\mathrm{Var}(X) &= E(\mathrm{Var}(X \mid N)) + \mathrm{Var}(E(X \mid N))\\
&= E(N\sigma^2) + \mathrm{Var}(N\mu) = \nu\sigma^2 + \tau^2\mu^2
\end{aligned}
$$
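A minimal sketch of $W = (I - Q)^{-1}$, $v = W\mathbf{1}$ and $U = WR$ for the symmetric random walk with $N = 4$ follows. For illustration, the interior states $1, 2, 3$ are treated as the transient states and $\{0, N\}$ as the absorbing ones. In the code they are re-indexed so that the block form of $P$ applies. The inverse is computed by exact Gauss-Jordan elimination. The helpers `inverse`, `matmul` and `walk_blocks` are hypothetical names.

```python
from fractions import Fraction


def inverse(A):
    """Exact inverse of a non-singular square matrix by Gauss-Jordan elimination."""
    n = len(A)
    M = [list(row) + [Fraction(int(i == j)) for j in range(n)] for i, row in enumerate(A)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        scale = M[col][col]
        M[col] = [x / scale for x in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    return [row[n:] for row in M]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def walk_blocks(N, p):
    """Q and R for the walk on 0..N: transient states 1..N-1, absorbing 0 and N.

    Row i corresponds to state i + 1; column 0 of R is absorption in 0,
    column 1 of R is absorption in N.
    """
    q = 1 - p
    m = N - 1
    Q = [[Fraction(0)] * m for _ in range(m)]
    R = [[Fraction(0)] * 2 for _ in range(m)]
    for i in range(m):
        if i > 0:
            Q[i][i - 1] = q
        else:
            R[i][0] = q  # from state 1 down into state 0
        if i < m - 1:
            Q[i][i + 1] = p
        else:
            R[i][1] = p  # from state N-1 up into state N
    return Q, R


N, p = 4, Fraction(1, 2)
Q, R = walk_blocks(N, p)
m = len(Q)
I_minus_Q = [[Fraction(int(i == j)) - Q[i][j] for j in range(m)] for i in range(m)]
W = inverse(I_minus_Q)      # W = (I - Q)^{-1}, expected visits
v = [sum(row) for row in W] # v = W 1, expected absorption times
U = matmul(W, R)            # U = W R, absorption probabilities
print(v)
print([row[0] for row in U])
```

The first column of $U$ reproduces $u_k = (N-k)/N$. Each row of $U$ sums to one because absorption is certain.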

Branching processes

$$X_{n+1} = \xi_1 + \xi_2 + \cdots + \xi_{X_n}$$

where the $\xi_i$ are independent random variables with common probability mass function

$$P\{\xi_i = k\} = p_k$$

with mean $\mu$ and variance $\sigma^2$. From a random sum interpretation (starting from $X_0 = 1$) we get

$$
\begin{aligned}
E(X_{n+1}) &= \mu E(X_n) = \mu^{n+1}\\
\mathrm{Var}(X_{n+1}) &= \sigma^2 E(X_n) + \mu^2 \mathrm{Var}(X_n)\\
&= \sigma^2\mu^n + \mu^2 \mathrm{Var}(X_n)\\
&= \sigma^2\mu^n + \mu^2\left(\sigma^2\mu^{n-1} + \mu^2 \mathrm{Var}(X_{n-1})\right)
\end{aligned}
$$

Extinction probabilities

Define $N$ to be the random time of extinction ($N$ can be defective, i.e. $P\{N = \infty\} > 0$).

$$u_n = P\{N \le n\} = P\{X_n = 0\}$$

And we get

$$u_n = \sum_{k=0}^{\infty} p_k u_{n-1}^k$$

The generating function - an important analytic tool

◮ Manipulations with probability distributions
◮ Determining the distribution of a sum of random variables
◮ Determining the distribution of a random sum of random variables
◮ Calculation of moments
◮ Unique characterisation of the distribution
◮ Same information as CDF

Generating functions

$$\phi(s) = E\left(s^{\xi}\right) = \sum_{k=0}^{\infty} p_k s^k, \qquad p_k = \frac{1}{k!} \left.\frac{d^k \phi(s)}{ds^k}\right|_{s=0}$$

Moments from generating functions

$$\left.\frac{d\phi(s)}{ds}\right|_{s=1} = \left.\sum_{k=1}^{\infty} p_k k s^{k-1}\right|_{s=1} = E(\xi)$$

Similarly

$$\left.\frac{d^2\phi(s)}{ds^2}\right|_{s=1} = \left.\sum_{k=2}^{\infty} p_k k(k-1) s^{k-2}\right|_{s=1} = E(\xi(\xi - 1)),$$

a factorial moment, so that

$$\mathrm{Var}(\xi) = \phi''(1) + \phi'(1) - (\phi'(1))^2$$
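The recursions above can be checked exactly on a small example. The sketch below assumes a hypothetical offspring distribution $p_0 = 1/4$, $p_1 = 1/4$, $p_2 = 1/2$, which gives $\mu = 5/4$ and $\sigma^2 = 11/16$, and starts from $X_0 = 1$. It first checks $\mathrm{Var}(\xi) = \phi''(1) + \phi'(1) - (\phi'(1))^2$. It then builds the full pmf of $X_n$ by repeated convolution, i.e. the random sum. Finally, it compares the mean and variance of that pmf with the recursions, and compares $P\{X_n = 0\}$ with $u_n = \phi(u_{n-1})$. The helper names are our own.

```python
from fractions import Fraction


def convolve(a, b):
    """pmf of the sum of two independent non-negative integer variables."""
    out = [Fraction(0)] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out


def next_generation(dist, offspring):
    """pmf of X_{n+1} = xi_1 + ... + xi_{X_n}, given the pmf of X_n."""
    result = [Fraction(0)]
    power = [Fraction(1)]  # pmf of a sum of zero offspring counts
    for j, prob in enumerate(dist):
        if j > 0:
            power = convolve(power, offspring)  # pmf of xi_1 + ... + xi_j
        result += [Fraction(0)] * (len(power) - len(result))
        for x, px in enumerate(power):
            result[x] += prob * px
    return result


def mean_var(dist):
    """Mean and variance of a pmf given as a list indexed by value."""
    m = sum(k * pk for k, pk in enumerate(dist))
    return m, sum(k * k * pk for k, pk in enumerate(dist)) - m * m


def phi(offspring, s):
    """Generating function phi(s) = sum_k p_k s^k."""
    return sum(pk * s**k for k, pk in enumerate(offspring))


# Hypothetical offspring distribution p_0, p_1, p_2 (not from the slides).
offspring = [Fraction(1, 4), Fraction(1, 4), Fraction(1, 2)]
mu, sigma2 = mean_var(offspring)

# Var(xi) from the generating function: phi''(1) + phi'(1) - phi'(1)^2.
phi1 = sum(k * pk for k, pk in enumerate(offspring))
phi2 = sum(k * (k - 1) * pk for k, pk in enumerate(offspring))
assert phi2 + phi1 - phi1**2 == sigma2

dist = [Fraction(0), Fraction(1)]  # X_0 = 1
mean, var = Fraction(1), Fraction(0)
u = Fraction(0)  # u_0 = P{X_0 = 0}
for n in range(4):
    dist = next_generation(dist, offspring)
    # Right-hand side uses the old mean: Var(X_{n+1}) = sigma^2 E(X_n) + mu^2 Var(X_n).
    mean, var = mu * mean, sigma2 * mean + mu**2 * var
    u = phi(offspring, u)  # u_{n+1} = phi(u_n)
    assert mean_var(dist) == (mean, var)
    assert dist[0] == u

# Longer floating-point iteration of u_n = phi(u_{n-1}).
u_float = 0.0
for _ in range(200):
    u_float = float(phi(offspring, u_float))
print(u_float)
```

The printed value approaches $1/2$. For this offspring distribution, $1/2$ is the smaller root of $s = \phi(s)$, i.e. of $2s^2 - 3s + 1 = 0$.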

The sum of iid random variables

Remember: iid = Independent Identically Distributed

$$S_n = X_1 + X_2 + \cdots + X_n = \sum_{i=1}^{n} X_i$$

With $p_x = P\{X_i = x\}$, $X_i \ge 0$, we find for $n = 2$, $S_2 = X_1 + X_2$. The event $\{S_2 = x\}$ can be decomposed into the set

$$\{(X_1 = 0, X_2 = x), (X_1 = 1, X_2 = x-1), \ldots, (X_1 = i, X_2 = x-i), \ldots, (X_1 = x, X_2 = 0)\}$$

The probability of the event $\{S_2 = x\}$ is the sum of the probabilities of the individual outcomes.

Sum of iid random variables - continued

The probability of outcome $(X_1 = i, X_2 = x - i)$ is

$$P\{X_1 = i, X_2 = x - i\} = P\{X_1 = i\}\,P\{X_2 = x - i\}$$

by independence, which again is $p_i p_{x-i}$. In total we get

$$P\{S_2 = x\} = \sum_{i=0}^{x} p_i p_{x-i}$$

Generating function - one example

Binomial distribution

$$p_k = \binom{n}{k} p^k (1-p)^{n-k}$$

$$
\phi_{bin}(s) = \sum_{k=0}^{n} s^k p_k = \sum_{k=0}^{n} s^k \binom{n}{k} p^k (1-p)^{n-k} = \sum_{k=0}^{n} \binom{n}{k} (sp)^k (1-p)^{n-k} = (1 - p + ps)^n
$$

Generating function - another example

Poisson distribution

$$p_k = \frac{\lambda^k}{k!} e^{-\lambda}$$

$$
\phi_{poi}(s) = \sum_{k=0}^{\infty} s^k p_k = \sum_{k=0}^{\infty} s^k \frac{\lambda^k}{k!} e^{-\lambda} = e^{-\lambda} \sum_{k=0}^{\infty} \frac{(s\lambda)^k}{k!} = e^{-\lambda} e^{s\lambda} = e^{-\lambda(1-s)}
$$
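The convolution formula and the two generating functions can be checked with a small Python sketch. The helper names are hypothetical. `pmf_of_sum` implements $P\{S_2 = x\} = \sum_{i=0}^{x} p_i p_{x-i}$. Exact `Fraction` arithmetic is used to confirm two facts: the sum of two independent Binomial$(n, p)$ variables has the Binomial$(2n, p)$ pmf, and its generating function is the product of the individual ones. A truncated series approximates $\phi_{poi}(s)$ in floating point; the cutoff of 60 terms is an arbitrary choice.

```python
from fractions import Fraction
from math import comb, exp, factorial


def binomial_pmf(n, p):
    """p_k = C(n, k) p^k (1 - p)^(n - k), k = 0..n."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]


def pmf_of_sum(a, b):
    """P{S_2 = x} = sum_{i=0}^{x} a_i b_{x-i} for independent X_1 ~ a, X_2 ~ b."""
    return [
        sum(a[i] * b[x - i] for i in range(x + 1) if i < len(a) and x - i < len(b))
        for x in range(len(a) + len(b) - 1)
    ]


def pgf(pmf, s):
    """phi(s) = sum_k s^k p_k for a pmf with finite support."""
    return sum(s**k * pk for k, pk in enumerate(pmf))


n, p = 3, Fraction(1, 3)
pk = binomial_pmf(n, p)
s2 = pmf_of_sum(pk, pk)  # iid case: sum_i p_i p_{x-i}
s = Fraction(1, 2)
print(s2 == binomial_pmf(2 * n, p))        # True: Bin(n, p) + Bin(n, p) is Bin(2n, p)
print(pgf(pk, s) == (1 - p + p * s) ** n)  # True: phi_bin(s) = (1 - p + ps)^n
print(pgf(s2, s) == pgf(pk, s) ** 2)       # True: generating function of S_2 is phi(s)^2

# Poisson: truncated series (60 terms, arbitrary cutoff) against e^{-lambda(1 - s)}.
lam, t = 2.0, 0.3
series = sum(t**k * lam**k / factorial(k) * exp(-lam) for k in range(60))
print(abs(series - exp(-lam * (1 - t))) < 1e-12)  # True
```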
