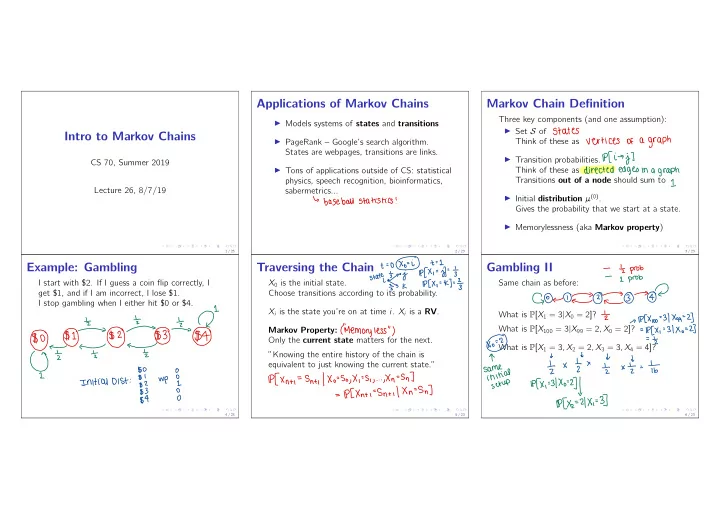

Intro to Markov Chains

CS 70, Summer 2019

Lecture 26, 8/7/19

Applications of Markov Chains

- Models systems of states and transitions (a graph).
- PageRank, Google's search algorithm: states are webpages, transitions are links.
- Tons of applications outside of CS: statistical physics, speech recognition, bioinformatics, sabermetrics (baseball statistics!)...

Markov Chain Definition

Three key components (and one assumption):

- A set $S$ of states. Think of these as vertices of a graph.
- Transition probabilities $P[i \to j]$. Think of these as directed edges in a graph. The transitions out of a node should sum to 1.
- An initial distribution $\mu(0)$, which gives the probability that we start at each state.
- Memorylessness (aka the Markov property).

Example: Gambling

I start with \$2. If I guess a coin flip correctly, I get \$1, and if I am incorrect, I lose \$1. I stop gambling when I either hit \$0 or \$4.

As a chain: the states are \$0, \$1, \$2, \$3, \$4. From \$1, \$2, or \$3, I move up or down by \$1 with probability 1/2 each; \$0 and \$4 transition to themselves with probability 1.

Traversing the Chain

- $X_0$ is the initial state.
- Choose each transition according to its probability.
- $X_i$ is the state you're on at time $i$. $X_i$ is a random variable.

Markov Property: memoryless. Only the current state matters for the next: "Knowing the entire history of the chain is equivalent to just knowing the current state."

$$P[X_{n+1} = s_{n+1} \mid X_n = s_n, X_{n-1} = s_{n-1}, \ldots, X_0 = s_0] = P[X_{n+1} = s_{n+1} \mid X_n = s_n]$$

Gambling II

Same chain as before.

- What is $P[X_1 = 3 \mid X_0 = 2]$? It is $1/2$.
- What is $P[X_{100} = 3 \mid X_{99} = 2, X_0 = 2]$? By the Markov property, this is $P[X_{100} = 3 \mid X_{99} = 2] = P[X_1 = 3 \mid X_0 = 2] = 1/2$.
- What is $P[X_1 = 3, X_2 = 2, X_3 = 3, X_4 = 4]$? Use the initial distribution, $P[X_0 = 2] = 1$, and multiply the transition probabilities along the path $2 \to 3 \to 2 \to 3 \to 4$: $1 \cdot (1/2)^4 = 1/16$.
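
The Gambling II answers can be checked mechanically. Below is a minimal Python sketch, assuming the states are indexed 0 through 4 by dollar amount and that the chain starts at \$2 with probability 1. The helpers `gambling_matrix` and `path_probability` are hypothetical names introduced only for illustration: a path's probability is its initial probability times the transition probabilities along it, which is what the Markov property allows.

```python
from fractions import Fraction

def gambling_matrix():
    """5x5 transition matrix P for the $0..$4 chain, with P[i][j] = P[X_1 = j | X_0 = i]."""
    half = Fraction(1, 2)
    P = [[Fraction(0)] * 5 for _ in range(5)]
    P[0][0] = P[4][4] = Fraction(1)        # stop gambling at $0 or $4 (absorbing)
    for i in range(1, 4):
        P[i][i - 1] = P[i][i + 1] = half   # lose or win $1 on a fair coin flip
    return P

def path_probability(P, mu0, path):
    """P[X_0 = path[0], ..., X_k = path[k]]: chain rule plus the Markov property."""
    prob = mu0[path[0]]
    for current, nxt in zip(path, path[1:]):
        prob *= P[current][nxt]            # only the current state matters for the next step
    return prob

P = gambling_matrix()
mu0 = [Fraction(v) for v in (0, 0, 1, 0, 0)]  # start with $2: P[X_0 = 2] = 1
print(path_probability(P, mu0, [2, 3, 2, 3, 4]))  # 1/16
```

Exact `Fraction` arithmetic is used so the result matches the hand computation exactly rather than up to rounding.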

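Traversing the chain can also be simulated directly. This sketch assumes a fair coin and uses the hypothetical helpers `step`, `sample_path`, and `estimate`. It draws each next state using only the current one and estimates probabilities by Monte Carlo with a fixed seed. The estimates should land near the exact values 1/2 and 1/16 from Gambling II, and near 3/8 for $P[X_4 = 4]$.

```python
import random

def step(state, rng):
    """One transition of the gambling chain."""
    if state in (0, 4):                       # absorbing: stop gambling at $0 or $4
        return state
    return state + 1 if rng.random() < 0.5 else state - 1

def sample_path(x0, n_steps, rng):
    """One random run [X_0, X_1, ..., X_n]; each X_i is a random variable."""
    path = [x0]
    for _ in range(n_steps):
        path.append(step(path[-1], rng))      # next state depends only on path[-1]
    return path

def estimate(event, x0=2, n_steps=4, trials=100_000, seed=0):
    """Fraction of simulated paths for which event(path) is true."""
    rng = random.Random(seed)
    hits = sum(event(sample_path(x0, n_steps, rng)) for _ in range(trials))
    return hits / trials

print(estimate(lambda path: path[1] == 3))              # close to 1/2
print(estimate(lambda path: path[1:] == [3, 2, 3, 4]))  # close to 1/16 = 0.0625
print(estimate(lambda path: path[4] == 4))              # close to 3/8 = 0.375
```

A seeded `random.Random` instance keeps runs reproducible without touching the global random state.
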
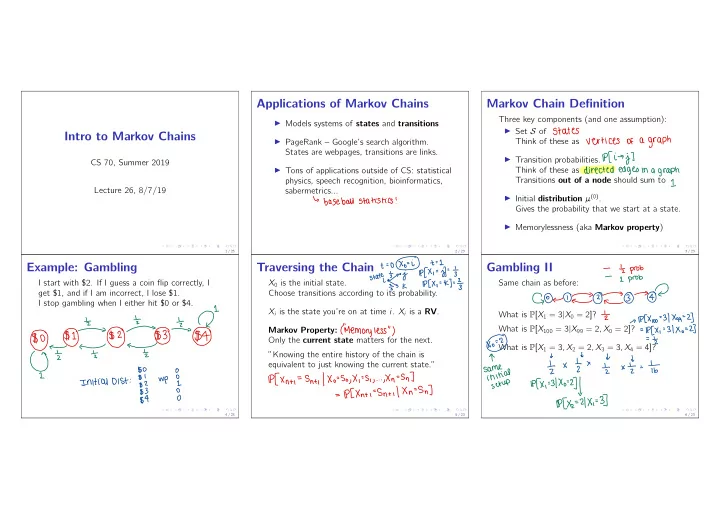

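Gambling II (continued)

Same setup, with $X_0 = 2$. What is $P[X_4 = 4]$?

Casework on the paths from \$2 that are at \$4 at time 4:

- Path 1: $2 \to 3 \to 4 \to 4 \to 4$, with probability $\frac12 \cdot \frac12 \cdot 1 \cdot 1 = \frac14$.
- Path 2: $2 \to 3 \to 2 \to 3 \to 4$, with probability $(\frac12)^4 = \frac1{16}$.
- Path 3: $2 \to 1 \to 2 \to 3 \to 4$, with probability $(\frac12)^4 = \frac1{16}$.

So $P[X_4 = 4] = P[\text{Path 1}] + P[\text{Path 2}] + P[\text{Path 3}] = \frac14 + \frac1{16} + \frac1{16} = \frac38$.

The Transition Matrix

Calculations are easier to do when we stick the transition probabilities in a matrix.

- Transition matrix $P$.
- The $(i, j)$ entry is $P[X_1 = j \mid X_0 = i]$, the probability of the transition from $i$ to $j$.
- The row index is the current state and the column index is the next state. Entries are non-negative and each row sums to 1; the columns have no such restriction.

For the gambling chain, with states \$0 through \$4 in order:

$$P = \begin{pmatrix} 1 & 0 & 0 & 0 & 0 \\ \frac12 & 0 & \frac12 & 0 & 0 \\ 0 & \frac12 & 0 & \frac12 & 0 \\ 0 & 0 & \frac12 & 0 & \frac12 \\ 0 & 0 & 0 & 0 & 1 \end{pmatrix}$$

The Distribution Vectors

So far we have seen the initial distribution $\mu(0)$. We can represent it as a row vector:

$$\mu(0) = \begin{bmatrix} P[X_0 = 1] & P[X_0 = 2] & \cdots \end{bmatrix}$$

We can also define a distribution at time $n$:

$$\mu(n) = \begin{bmatrix} P[X_n = 1] & P[X_n = 2] & \cdots \end{bmatrix}$$

Each entry is non-negative, and the entries sum to 1.

Distribution at Time 1

We'll prove that $\mu(0) P = \mu(1)$. By the law of total probability (casework on $X_0$), the $i$th entry of $\mu(1)$ is

$$P[X_1 = i] = \sum_j P[X_0 = j] \, P[X_1 = i \mid X_0 = j] = \sum_j \mu(0)_j \, P_{j,i},$$

which is $\mu(0)$ times the $i$th column of $P$.

If we know $\mu(1)$, how do we get $\mu(2)$? The same argument gives $\mu(2) = \mu(1) P = \mu(0) P^2$.

Distribution at Time n

In general: $\mu(n) = \mu(0) P^n$. (Proof optional: induction on $n$.)

Aside: $n \to \infty$

What happens to a two-state Markov chain as $n \to \infty$? Example: a two-state chain that switches state with probability $a \in (0, 1)$ and stays put with probability $1 - a$:

$$P = \begin{pmatrix} 1-a & a \\ a & 1-a \end{pmatrix}, \qquad P^n = \frac12 \begin{pmatrix} 1 + (1-2a)^n & 1 - (1-2a)^n \\ 1 - (1-2a)^n & 1 + (1-2a)^n \end{pmatrix} \to \begin{pmatrix} \frac12 & \frac12 \\ \frac12 & \frac12 \end{pmatrix},$$

since $|1 - 2a| < 1$. No matter what $\mu(0)$ is, $\mu(n) = \mu(0) P^n \to \begin{bmatrix} \frac12 & \frac12 \end{bmatrix}$.

Tomorrow: we'll study this in greater detail!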
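
A quick numeric check of the two-state aside follows. It assumes the symmetric chain that switches state with probability $a$ (here $a = 0.3$, an arbitrary choice) and uses the hypothetical helpers `two_state_matrix`, `mat_mul`, `mat_pow`, and `distribution`. These are written in plain Python so the sketch needs no libraries. It compares $P^n$ with the closed form and shows two different starting distributions converging to the same limit.

```python
def two_state_matrix(a):
    """Two-state chain that switches state with probability a and stays with probability 1 - a."""
    return [[1 - a, a], [a, 1 - a]]

def mat_mul(A, B):
    """Plain-Python matrix product A B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def mat_pow(P, n):
    """P^n by repeated multiplication (fine for small n and small matrices)."""
    result = [[1.0 if i == j else 0.0 for j in range(len(P))] for i in range(len(P))]
    for _ in range(n):
        result = mat_mul(result, P)
    return result

def distribution(mu0, Pn):
    """mu(n) = mu(0) P^n, with mu(0) treated as a row vector."""
    return [sum(mu0[j] * Pn[j][i] for j in range(len(mu0))) for i in range(len(Pn[0]))]

a, n = 0.3, 50
Pn = mat_pow(two_state_matrix(a), n)
r = (1 - 2 * a) ** n                  # closed form: P^n = (1/2) [[1 + r, 1 - r], [1 - r, 1 + r]]
print(Pn[0][0], 0.5 * (1 + r))        # both approximately 0.5
for mu0 in ([1.0, 0.0], [0.2, 0.8]):
    print(distribution(mu0, Pn))      # approximately [0.5, 0.5] for either starting distribution
```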

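The matrix form turns the path casework for $P[X_4 = 4]$ into four vector-matrix products. Here is a minimal sketch with exact fractions, assuming states 0 through 4 and $X_0 = 2$. The names `step_distribution` and `distribution_at` are hypothetical helpers that implement $\mu(n) = \mu(0) P^n$ one step at a time.

```python
from fractions import Fraction

def gambling_matrix():
    """Transition matrix for the $0..$4 gambling chain (rows: current state, columns: next)."""
    half = Fraction(1, 2)
    P = [[Fraction(0)] * 5 for _ in range(5)]
    P[0][0] = P[4][4] = Fraction(1)       # absorbing: stop at $0 or $4
    for i in range(1, 4):
        P[i][i - 1] = P[i][i + 1] = half  # fair coin: lose or win $1
    return P

def step_distribution(mu, P):
    """One step: (mu P)_i = sum_j mu_j P[j][i], i.e. mu times the ith column of P."""
    n = len(P)
    return [sum(mu[j] * P[j][i] for j in range(n)) for i in range(n)]

def distribution_at(mu0, P, n):
    """mu(n) = mu(0) P^n, applied one vector-matrix product at a time."""
    mu = list(mu0)
    for _ in range(n):
        mu = step_distribution(mu, P)
    return mu

P = gambling_matrix()
mu0 = [Fraction(v) for v in (0, 0, 1, 0, 0)]  # X_0 = 2 with probability 1
mu4 = distribution_at(mu0, P, 4)
print(mu4[4])      # 3/8
print(sum(mu4))    # 1
```

Repeated vector-matrix products avoid forming $P^n$ explicitly, which is cheaper when only one starting distribution is of interest.
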
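Break

What's the weirdest thing you've ever eaten?

First Step Analysis: Two Heads

I repeatedly flip a coin, and stop when I get two heads in a row. What is the expected number of flips I need before stopping?

First Step Analysis: Two Heads (continued)

For state $S$, let $\tau(S)$ be the expected time to two heads, starting from state $S$. The states are $0$ (no flips yet), $T$ (last flip was tails), $H$ (last flip was heads), and $E$ (end: just flipped HH), so the variables are $\tau(0)$, $\tau(T)$, $\tau(H)$, $\tau(E)$.

Analyze a single transition out of each state to get the first step equations:

$$\begin{aligned}
\tau(0) &= 1 + \tfrac12 \tau(H) + \tfrac12 \tau(T) \\
\tau(T) &= 1 + \tfrac12 \tau(H) + \tfrac12 \tau(T) \\
\tau(H) &= 1 + \tfrac12 \tau(E) + \tfrac12 \tau(T) \\
\tau(E) &= 0
\end{aligned}$$

Goal: $\tau(0)$. The first two equations give $\tau(0) = \tau(T)$ and $\tau(T) = 2 + \tau(H)$; substituting into the third gives $\tau(H) = 4$, so $\tau(0) = 6$ flips.

Max of Two Geometrics

Let $X, Y \sim \text{Geometric}(p)$, with $X$ and $Y$ independent.

- Say $X, Y$ model the time until a success.
- $\max(X, Y)$ is the first time that both $X$ and $Y$ have succeeded at least once.

What is $E[\max(X, Y)]$?

Max of Two Geometrics (continued)

Set up the first step equations, and solve. Use the number of successes so far as the state ($0$, $1$, or $2$), and let $\tau(i)$ be the expected time to reach state $2$ from state $i$. From state 0, both geometrics fail with probability $(1-p)^2$, exactly one succeeds with probability $2p(1-p)$, and both succeed with probability $p^2$:

$$\begin{aligned}
\tau(0) &= 1 + (1-p)^2 \tau(0) + 2p(1-p)\, \tau(1) + p^2 \tau(2) \\
\tau(1) &= 1 + (1-p)\, \tau(1) + p\, \tau(2) \\
\tau(2) &= 0
\end{aligned}$$

From state 1 we are waiting on a single Geometric($p$), so $\tau(1) = 1/p$. Exercise: finish solving for the goal, $\tau(0) = E[\max(X, Y)]$.

Coupon Collector: A Markov Chain?

Can we reformulate Coupon Collector (with $n$ distinct coupons) as a Markov chain? How do we recover the expected number of coupons needed to get all $n$ distinct ones?

States: the number of distinct coupons collected so far, $0, 1, \ldots, n$. From state $i$, the next coupon is new with probability $(n-i)/n$ (move to $i+1$) and a repeat with probability $i/n$ (stay at $i$). The expected number of coupons needed is the expected time to reach state $n$ starting from state $0$.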
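
For the coupon-collector chain, the first step equations are triangular, so they can be solved by back-substitution from state $n$. This is a minimal sketch assuming the "number of distinct coupons" state space; `coupon_collector_expected` and `harmonic` are hypothetical helper names. The result matches the familiar $n H_n$, where $H_n = 1 + \frac12 + \cdots + \frac1n$.

```python
from fractions import Fraction

def coupon_collector_expected(n):
    """tau(0) for the chain whose state is the number of distinct coupons collected.

    From state i the next coupon is new with probability (n - i)/n, so the first step
    equation tau(i) = 1 + (i/n) tau(i) + ((n - i)/n) tau(i + 1) rearranges to
    tau(i) = n/(n - i) + tau(i + 1), with tau(n) = 0.
    """
    tau = Fraction(0)                   # tau(n) = 0: all n coupons collected
    for i in range(n - 1, -1, -1):      # back-substitute from state n - 1 down to 0
        tau = Fraction(n, n - i) + tau  # tau now holds tau(i)
    return tau

def harmonic(n):
    """H_n = 1 + 1/2 + ... + 1/n."""
    return sum(Fraction(1, k) for k in range(1, n + 1))

print(coupon_collector_expected(3))  # 11/2
print(3 * harmonic(3))               # 11/2, i.e. n * H_n
```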

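First step equations for expected hitting times always take the form $\tau = \mathbf{1} + Q\tau$ over the non-target states, that is, $(I - Q)\tau = \mathbf{1}$. The sketch below solves that system exactly with Gauss-Jordan elimination. The helpers `solve`, `expected_hitting_times`, and `max_of_geometrics` are hypothetical, and the state orderings are choices made here. Applied to the two-heads chain it gives $\tau(0) = 6$. For two geometrics with $p = 1/2$ it gives $8/3$, which agrees with $(3 - 2p)/(p(2 - p))$.

```python
from fractions import Fraction

def solve(A, b):
    """Solve A x = b exactly with Gauss-Jordan elimination over Fractions."""
    n = len(A)
    M = [[Fraction(x) for x in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col] / M[col][col]
                M[r] = [x - factor * y for x, y in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]

def expected_hitting_times(Q):
    """First step equations tau(i) = 1 + sum_j Q[i][j] tau(j) over the non-target states.

    Q[i][j] is the one-step probability between non-target states; target states have
    tau = 0, so their terms vanish. Rearranged, this is the linear system (I - Q) tau = 1.
    """
    n = len(Q)
    A = [[(1 if i == j else 0) - Fraction(Q[i][j]) for j in range(n)] for i in range(n)]
    return solve(A, [1] * n)

half = Fraction(1, 2)
# Two heads: non-target states in order 0 (start), T, H; the target is E (HH).
two_heads = expected_hitting_times([
    [0, half, half],   # tau(0) = 1 + 1/2 tau(T) + 1/2 tau(H)
    [0, half, half],   # tau(T) = 1 + 1/2 tau(T) + 1/2 tau(H)
    [0, half, 0],      # tau(H) = 1 + 1/2 tau(T) + 1/2 tau(E), with tau(E) = 0
])
print(two_heads[0])    # 6

def max_of_geometrics(p):
    """E[max(X, Y)] for independent X, Y ~ Geometric(p); state = number of successes so far."""
    p = Fraction(p)
    q = 1 - p
    tau = expected_hitting_times([
        [q * q, 2 * p * q],  # from 0: both fail, or exactly one succeeds
        [0, q],              # from 1: the remaining geometric fails again
    ])
    return tau[0]

print(max_of_geometrics(half))  # 8/3
```

A general linear solve is more machinery than these small systems need, but the same function handles any chain once its non-target transition probabilities are written down.
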
Probability of A Before B

Let $A$ and $B$ be two disjoint subsets of the states $S$ of a Markov chain. Let $\alpha(i)$ be the probability that we enter $A$ before entering $B$, if we start at state $i$.

Probability of A Before B (continued)

We can also run first step analysis!

$$\alpha(i) = \begin{cases} 1 & \text{if } i \in A \text{ (already in } A\text{)} \\ 0 & \text{if } i \in B \text{ (impossible to get to } A \text{ before } B\text{)} \\ \sum_j P[i \to j] \, \alpha(j) & \text{otherwise (casework on taking 1 step from } i \text{ to its neighbors } j\text{)} \end{cases}$$

Gambling III

I start with \$100. In each round, I win \$100 with probability $p$ and lose \$100 with probability $1 - p$. I end when I either have \$0 or \$300. What is the probability I end the game with \$300?

Gambling III (continued)

Let $A = \{300\}$ and $B = \{0\}$, where $\alpha(i) = P[\text{reach } A \text{ before } B \mid X_0 = i]$. First step equations:

$$\begin{aligned}
\text{\$0:} \quad & \alpha(0) = 0 \\
\text{\$100:} \quad & \alpha(100) = (1-p)\,\alpha(0) + p\,\alpha(200) \\
\text{\$200:} \quad & \alpha(200) = (1-p)\,\alpha(100) + p\,\alpha(300) \\
\text{\$300:} \quad & \alpha(300) = 1
\end{aligned}$$

Substituting, $\alpha(100) = p\,\alpha(200) = p\big((1-p)\,\alpha(100) + p\big)$, so

$$\alpha(100) = \frac{p^2}{1 - p + p^2}.$$

Summary

- Markov chains let you model real-world problems with states and transition probabilities.
- The Markov property tells you that where you go next only depends on the current state, not on any previous history.
- First step analysis is a simple way of analyzing expected hitting times and probabilities of hitting certain states before others.
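
The "A before B" first step equations are also a linear system: $\alpha$ is pinned to 1 on $A$ and 0 on $B$, and every other state averages over its neighbors. Below is a minimal sketch that sets up and solves that system exactly, applied to Gambling III. The helpers `solve`, `prob_a_before_b`, and `gambling_iii`, and the dictionary encoding of transitions, are hypothetical choices for illustration. For $p = 1/2$ it gives $1/3$, matching $p^2/(1 - p + p^2)$.

```python
from fractions import Fraction

def solve(A, b):
    """Solve A x = b exactly with Gauss-Jordan elimination over Fractions."""
    n = len(A)
    M = [[Fraction(x) for x in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col] / M[col][col]
                M[r] = [x - factor * y for x, y in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]

def prob_a_before_b(P, states, A, B):
    """alpha(i) = P[enter A before B | X_0 = i] by first step analysis.

    P maps (i, j) to the transition probability from i to j; missing pairs mean 0.
    alpha = 1 on A, 0 on B, and alpha(i) = sum_j P(i, j) alpha(j) for every other i.
    """
    free = [s for s in states if s not in A and s not in B]
    idx = {s: k for k, s in enumerate(free)}
    M = [[Fraction(0)] * len(free) for _ in free]
    rhs = [Fraction(0)] * len(free)
    for s in free:
        M[idx[s]][idx[s]] += 1                # alpha(s) on the left-hand side
        for t in states:
            pst = Fraction(P.get((s, t), 0))
            if t in A:
                rhs[idx[s]] += pst            # stepping into A contributes pst * 1
            elif t not in B:                  # stepping into B contributes pst * 0
                M[idx[s]][idx[t]] -= pst      # move pst * alpha(t) to the left-hand side
    alpha = {s: Fraction(1) for s in A}
    alpha.update({s: Fraction(0) for s in B})
    alpha.update(zip(free, solve(M, rhs)))
    return alpha

def gambling_iii(p):
    """Gambling III: bet $100 per round, win with probability p, stop at $0 or $300."""
    p = Fraction(p)
    states = [0, 100, 200, 300]
    P = {(100, 200): p, (100, 0): 1 - p, (200, 300): p, (200, 100): 1 - p}
    return prob_a_before_b(P, states, A={300}, B={0})

p = Fraction(1, 2)
print(gambling_iii(p)[100])     # 1/3
print(p**2 / (1 - p + p**2))    # 1/3, matching the closed form
```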
