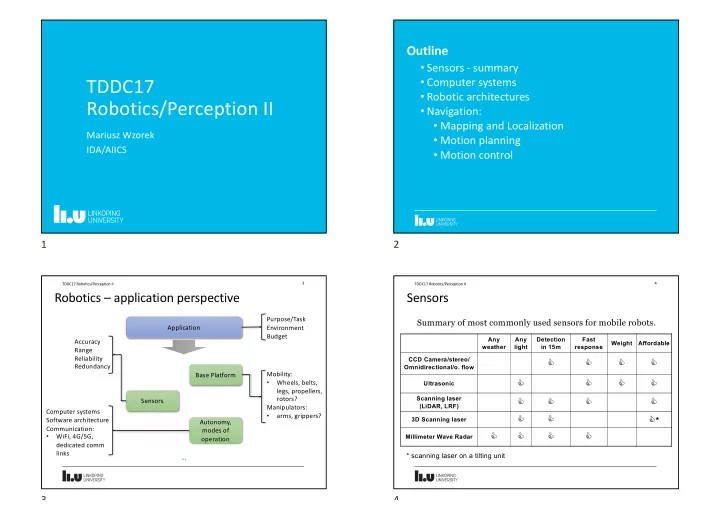

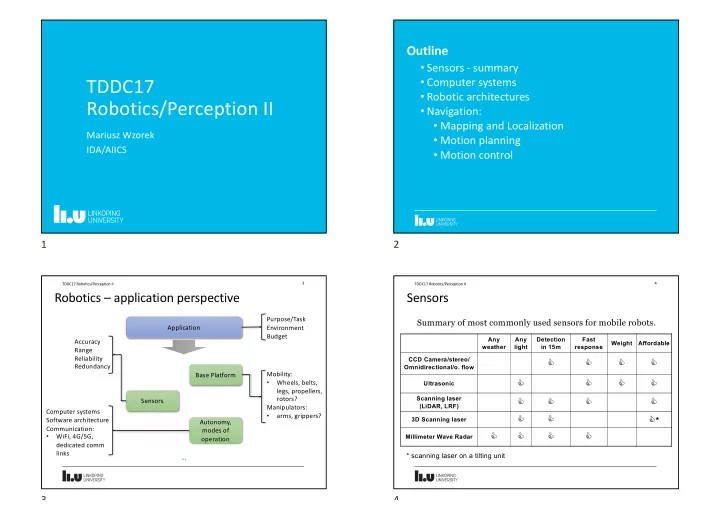

TDDC17 Robotics/Perception II
Mariusz Wzorek
IDA/AIICS

Outline
• Sensors - summary
• Computer systems
• Robotic architectures
• Navigation:
  • Mapping and Localization
  • Motion planning
  • Motion control

Robotics – application perspective
• Purpose/Task
• Application
• Environment
• Budget
• Redundancy
• Mobility: Base Platform
  • Wheels, belts, legs, propellers, rotors?
• Sensors
• Manipulators:
  • arms, grippers?
• Computer systems
• Software architecture
• Autonomy, modes of operation
• Communication:
  • WiFi, 4G/5G, dedicated comm links
• …

Sensors
Summary of the most commonly used sensors for mobile robots.
Comparison criteria: any weather, any light, detection in 15 m, fast response, accuracy, weight, affordable, range, reliability.
Sensors compared:
• CCD camera / stereo / omnidirectional / optical flow
• Ultrasonic
• Scanning laser (LiDAR, LRF)
• 3D scanning laser (*)
• Millimeter wave radar
* scanning laser on a tilting unit

Sensors cont'd
Image source: waymo.com

Sensors cont'd
Image source: tesla.com

Sensors - common interfaces
• analog - voltage level
• digital:
  • serial connections, e.g.:
    • RS232
    • I2C
    • SPI
    • USB
    • Ethernet
  • pulse width modulation - PWM
• …

Computer systems
Many challenges and trade-offs!
• power consumption
• size & weight
• computational power
• robustness
• different operational conditions: moisture, temperature, dirt, vibrations, etc.
• cloud computing?

Computer systems cont'd
PC104 - standardised form factor
• industrial grade
• relatively small size (~10x10 cm)
• highly configurable
• >200 vendors world-wide
• variety of components
• variety of processors

Computer systems cont'd
Custom-made embedded systems
• with integrated sensor suite
• micro scale, light weight
• fitted for platform design

Computer systems cont'd
Example system design (diagram), built around a Yamaha RMAX (YAS, YACS):
• DRC: 1.4 GHz Pentium-M, 1 GB RAM, 1 GB flash drive
• PFC: 700 MHz Pentium III, 256 MB RAM, 512 MB flash drive
• IPC: 700 MHz Pentium III, 256 MB RAM, 512 MB flash drive
Other components: power management unit, GPS receiver, barometric pressure altimeter, PFC sensor suite, Ethernet switch, Ethernet video server, 802.11b wireless bridge, GSM modems, communication module, stereo-vision module, CCD color camera, pan-tilt unit, thermal camera, laser range finder, MiniDV recorders, perception sensor suite.
Interfaces: Ethernet, RS232, analog, Firewire.

Ground Robot at AIICS LiU

Base platform
• Husky unmanned ground vehicle by Clearpath
• Run time: up to 3 h
• Max speed: 1 m/s
• Weight: 50 kg
• Max payload: 75 kg

Robot components:
• GPU – Jetson TK1
• Intel NUC i7 computer
• Network switch
• Stereo camera
• Gripper
• Battery pack for arm
• GPS antenna
• Inertial Measurement Unit
• LiDAR (Light Detection and Ranging) sensor - Velodyne
• WiFi router
• Robot arm power and control system

Robot Arm UR5 – Universal Robots
• Weight: 20.6 kg
• Max payload: 5 kg
• Working radius: 850 mm
• 6 DOF

Gripper – Weiss Robotics
• Weight: 1.2 kg
• Max payload: 8 kg

LiDAR sensor - Velodyne
• Range: 100 m
• Vertical FOV: 30°
• Horizontal FOV: 360°

Telesystems/Telemanipulators
(figure: Delay)

Telepresence
The experience of being fully present at a live event without actually being there:
• see the environment through the robot's cameras
• feel the surroundings through the robot's sensors
Realised, for example, by:
• stereo vision
• sound feedback
• cameras that follow the operator's head movements
• force feedback and tactile sensing
• VR system

Reactive systems
Sense → Act
The robot responds directly to sensor stimuli. Intelligence emerges from a combination of simple behaviours.
For example, the subsumption architecture (Rodney Brooks, MIT):
• concurrent behaviours
• higher levels subsume the behaviours of lower layers
• no internal representation
• finite state machines
https://www.youtube.com/watch?v=9u0CIQ8P_qk
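The layering idea can be sketched in a few lines of Python. This is a simplified, hypothetical illustration: Brooks' architecture runs augmented finite state machines concurrently and connects them with suppression and inhibition wires, whereas this sketch uses a fixed-priority loop in which the highest active layer suppresses the ones below it. The behaviour names, the sensor key `front_range`, and the thresholds are assumptions. Note that no behaviour stores a world model; each one reads only the current sensor values.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

@dataclass
class Command:
    linear: float   # forward speed [m/s]
    angular: float  # turn rate [rad/s]

# A behaviour reads the current sensor values (no stored world model) and
# either returns a motor command or None when it does not want control.
Behaviour = Callable[[Dict[str, float]], Optional[Command]]

def wander(sensors: Dict[str, float]) -> Optional[Command]:
    """Lowest layer (hypothetical): always active, just drive forward."""
    return Command(linear=0.5, angular=0.0)

def avoid(sensors: Dict[str, float]) -> Optional[Command]:
    """Higher layer (hypothetical): turn on the spot when something is close in front."""
    if sensors["front_range"] < 0.5:
        return Command(linear=0.0, angular=1.0)
    return None

def arbitrate(layers: List[Behaviour], sensors: Dict[str, float]) -> Command:
    """Layers are ordered highest priority first; the first layer that
    produces a command suppresses every layer below it."""
    for behaviour in layers:
        command = behaviour(sensors)
        if command is not None:
            return command
    return Command(linear=0.0, angular=0.0)  # no layer active: stop

layers = [avoid, wander]
print(arbitrate(layers, {"front_range": 2.0}))  # wander drives forward
print(arbitrate(layers, {"front_range": 0.3}))  # avoid subsumes wander
```

Adding a new competence means adding a new layer on top without changing the ones below, which is the engineering appeal of the approach.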

Limitations of Reactive Systems
• Agents without environment models must have sufficient information available from the local environment.
• If decisions are based on the local environment, how can the agent take non-local information into account? (i.e., it has a "short-term" view)
• It is difficult to make reactive agents that learn.
• Since behaviour emerges from component interactions plus the environment, it is hard to see how to engineer specific agents (no principled methodology exists).
• It is hard to engineer agents with large numbers of behaviours (the dynamics of the interactions become too complex to understand).

Hierarchical Systems
Sense → Plan → Act
Sensors → Extract features → Combine features into model → Plan task → Execute task → Control motors → Actuators

Hierarchical Systems (Shakey Example)
Shakey (1966 - 1972)
• Developed at the Stanford Research Institute
• Used the STRIPS planner (operators with pre- and postconditions)
• Navigated in an office environment, trying to satisfy a goal given to it on a teletype. Depending on the goal and circumstances, it would navigate around obstacles consisting of large painted blocks and wedges, push them out of the way, or push them to some desired location.
• Primary sensor: black-and-white television camera
• Symbolic logic model of the world stored in the form of first-order predicate calculus
• Very careful engineering of the environment
https://www.youtube.com/watch?v=GmU7SimFkpU

Hybrid systems (three-layer architecture)
(diagram: Plan, Sense, Act)
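The STRIPS operator idea (preconditions plus add and delete lists acting on a set of true facts) can be sketched in Python. The domain below, a robot pushing box B between rooms R1 and R2, is a hypothetical Shakey-like example, and the search is plain breadth-first forward search, not the search strategy the original STRIPS system used.

```python
from collections import deque
from dataclasses import dataclass
from typing import FrozenSet, List, Optional

State = FrozenSet[str]  # the set of ground atoms that are currently true

@dataclass(frozen=True)
class Operator:
    name: str
    pre: FrozenSet[str]     # preconditions: must all hold before applying
    add: FrozenSet[str]     # postconditions that become true
    delete: FrozenSet[str]  # postconditions that become false

    def applicable(self, state: State) -> bool:
        return self.pre <= state

    def apply(self, state: State) -> State:
        return (state - self.delete) | self.add

def goto(a: str, b: str) -> Operator:
    # Hypothetical operator: robot moves from room a to room b.
    return Operator(f"goto({a}, {b})",
                    pre=frozenset({f"at(robot, {a})", f"connected({a}, {b})"}),
                    add=frozenset({f"at(robot, {b})"}),
                    delete=frozenset({f"at(robot, {a})"}))

def push(box: str, a: str, b: str) -> Operator:
    # Hypothetical operator: robot pushes box from room a to room b.
    return Operator(f"push({box}, {a}, {b})",
                    pre=frozenset({f"at(robot, {a})", f"at({box}, {a})", f"connected({a}, {b})"}),
                    add=frozenset({f"at(robot, {b})", f"at({box}, {b})"}),
                    delete=frozenset({f"at(robot, {a})", f"at({box}, {a})"}))

def plan(start: State, goal: FrozenSet[str], operators: List[Operator]) -> Optional[List[str]]:
    """Breadth-first forward search: returns a shortest operator sequence
    whose resulting state contains every goal atom, or None if none exists."""
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:
            return steps
        for op in operators:
            if op.applicable(state):
                nxt = op.apply(state)
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [op.name]))
    return None

operators = [goto("R1", "R2"), goto("R2", "R1"), push("B", "R1", "R2"), push("B", "R2", "R1")]
start = frozenset({"at(robot, R2)", "at(B, R1)", "connected(R1, R2)", "connected(R2, R1)"})
print(plan(start, frozenset({"at(B, R2)"}), operators))  # ['goto(R2, R1)', 'push(B, R1, R2)']
```

The plan is only valid if the world model matches reality, which is one reason Shakey needed such a carefully engineered environment.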

Hybrid systems (HDRC3 – AIICS/IDA @ LiU Example)

Hybrid systems (Minerva Example)
Tour guide at the Smithsonian's National Museum of American History (1998)
http://www.cs.cmu.edu/~thrun/movies/minerva.mpg

Navigation
Mapping – Localization – SLAM – State Estimation – Control
Navigation is one of the basic functionalities required for any real-world deployment:
• we need to know where we are
• and what the environment looks like

• Localization ("Where am I?"): an application of state estimation to calculate the robot location (e.g. x, y, orientation: the pose).
• Mapping: create a model of the environment. Assumes that we know where the robot and its sensors are.
• SLAM: Simultaneous Localization and Mapping.
• State Estimation: estimate the position (pose) of a robot in the environment, or the poses of landmarks in the environment; sensor data can be noisy.
• Motion Planning: how do I safely get from point A to B?
• Control: how do I execute the planned motion? E.g. generate control signals to follow the planned trajectory (velocity, position commands, etc.).

LiDAR – recap
Active sensor based on the time-of-flight principle:
• emits light waves from a laser
• measures the time it takes for the signal to return to calculate the distance

LiDAR – recap cont'd
SICK LMS (single-line sensor):
• Range (d): 80 m
• Field of view - horizontal (α): 0°-180°
• Angular resolution - horizontal (β): 0.25°-1°

Velodyne Puck (multi-line sensor):
• Range (d): 100 m
• Field of view - horizontal (α): 360°
• Angular resolution - horizontal (β): 0.1°-0.4°
• Field of view - vertical (γ): ±15°
• Angular resolution - vertical (φ): 2°
(figures: view from top, view from side)

LiDAR – recap cont'd
• SICK LMS stationary: range data at a certain height
• SICK LMS with tilt mechanism
• Velodyne stationary
Velodyne: https://www.youtube.com/watch?v=WPtHRVdWXSI

Mapping
Assume we use a mobile robot platform equipped with a LiDAR sensor.
Simple idea: combine measurements of robot motion with LiDAR sensor readings.
Measurement of robot motion is state estimation, e.g. odometry, dead reckoning:
• Simple odometry - wheel encoders that count how many times the wheels turned
• Visual odometry etc. - e.g. an optical flow sensor, or sensor fusion to produce a more accurate estimate of motion
There will always be a measurement error when calculating the state; depending on the technique/sensor used, it can be smaller or larger.
(figure: scan at x0, y0; scan at x1, y1; scans added)
https://www.youtube.com/watch?v=KxWrWPpSE8I
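A minimal Python sketch of the "simple idea" above, assuming a differential-drive robot with wheel encoders and a single-line LiDAR mounted at the robot centre, facing along the robot heading. The function names, the midpoint odometry approximation, and the robot dimensions in the usage part are illustrative assumptions, not taken from the slides. It also includes the time-of-flight range formula d = c·t/2 (the pulse travels to the target and back).

```python
import math
from typing import List, Sequence, Tuple

Pose = Tuple[float, float, float]  # (x [m], y [m], theta [rad]) in the world frame
Point = Tuple[float, float]

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def tof_range(round_trip_time_s: float) -> float:
    """Time-of-flight range: the pulse travels to the target and back."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0

def odometry_update(pose: Pose, left_ticks: int, right_ticks: int,
                    ticks_per_rev: int, wheel_radius: float, wheel_base: float) -> Pose:
    """Dead-reckoning update for a differential-drive robot from encoder ticks."""
    metres_per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev
    d_left = left_ticks * metres_per_tick
    d_right = right_ticks * metres_per_tick
    d_centre = (d_left + d_right) / 2.0        # distance travelled by the robot centre
    d_theta = (d_right - d_left) / wheel_base  # change in heading
    x, y, theta = pose
    mid = theta + d_theta / 2.0                # midpoint heading approximation
    return (x + d_centre * math.cos(mid), y + d_centre * math.sin(mid), theta + d_theta)

def scan_to_world(pose: Pose, ranges: Sequence[float], angle_min: float,
                  angle_increment: float, max_range: float) -> List[Point]:
    """Project one single-line LiDAR scan into world coordinates using the robot pose."""
    x, y, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    points = []
    for i, r in enumerate(ranges):
        if not 0.0 < r <= max_range:
            continue                                # skip beams with no valid return
        a = angle_min + i * angle_increment         # beam angle in the sensor frame
        px, py = r * math.cos(a), r * math.sin(a)   # polar -> Cartesian (sensor frame)
        points.append((x + c * px - s * py, y + s * px + c * py))  # rotate, then translate
    return points

if __name__ == "__main__":
    # Hypothetical robot: 100 ticks/rev, 1 m wheel circumference, 0.5 m wheel base.
    params = dict(ticks_per_rev=100, wheel_radius=0.5 / math.pi, wheel_base=0.5)
    # Three beams at -90, 0 and +90 degrees.
    beams = dict(angle_min=-math.pi / 2, angle_increment=math.pi / 2, max_range=80.0)

    pose0 = (0.0, 0.0, 0.0)
    scan0 = scan_to_world(pose0, [1.0, 2.0, 1.0], **beams)  # wall 2 m ahead
    pose1 = odometry_update(pose0, 100, 100, **params)      # both wheels: one revolution
    scan1 = scan_to_world(pose1, [1.0, 1.0, 1.0], **beams)  # same wall, now 1 m ahead

    print("pose1:", pose1)
    for p in scan0 + scan1:  # "scans added" into one point map
        print(f"({p[0]:.2f}, {p[1]:.2f})")
    print(f"{tof_range(1e-6):.1f} m")  # 1 microsecond round trip
```

Here the two scans place the wall at the same x = 2 m only because the simulated odometry is exact; with real encoders the accumulated pose error shifts later scans relative to earlier ones, which is the measurement error the slide warns about.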
