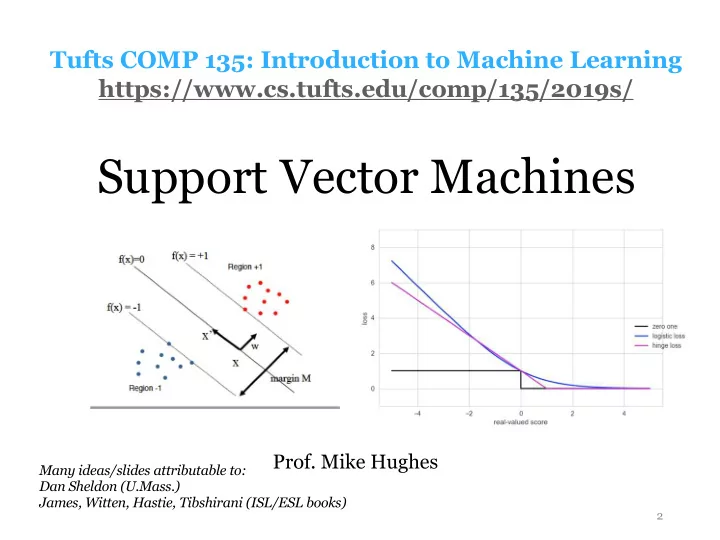

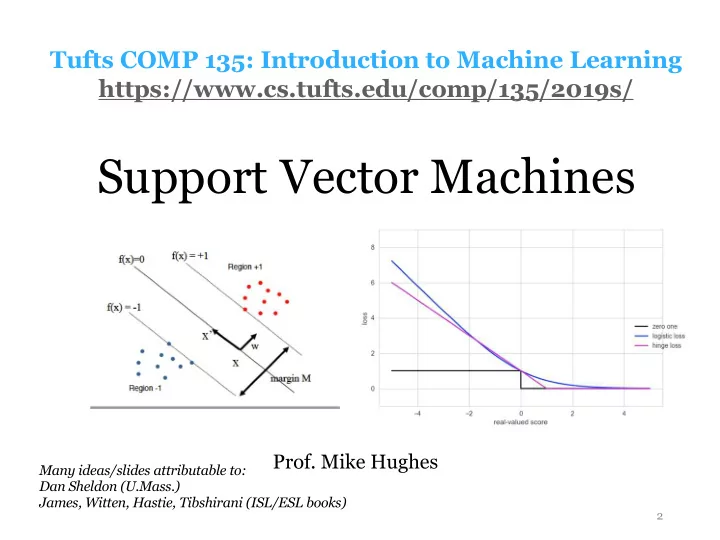

Tufts COMP 135: Introduction to Machine Learning https://www.cs.tufts.edu/comp/135/2019s/ Support Vector Machines Prof. Mike Hughes Many ideas/slides attributable to: Dan Sheldon (U.Mass.) James, Witten, Hastie, Tibshirani (ISL/ESL books) 2

SVM Unit Objectives Big ideas: Support Vector Machine • Why maximize margin? • What is a support vector? • What is hinge loss? • Advantages over logistic regression • Less sensitive to outliers • Advantages from sparsity in when using kernels • Disadvantages • Not probabilistic • Less elegant to do multi-class Mike Hughes - Tufts COMP 135 - Spring 2019 3

What will we learn? Evaluation Supervised Training Learning Data, Label Pairs Performance { x n , y n } N measure Task n =1 Unsupervised Learning data label x y Reinforcement Learning Prediction Mike Hughes - Tufts COMP 135 - Spring 2019 4

Recall: Kernelized Regression Mike Hughes - Tufts COMP 135 - Spring 2019 5

Linear Regression Mike Hughes - Tufts COMP 135 - Spring 2019 6

Kernel Function k ( x i , x j ) = φ ( x i ) T φ ( x j ) Interpret: similarity function for x_i and x_j Properties: Input: any two vectors Output: scalar real larger values mean more similar symmetric Mike Hughes - Tufts COMP 135 - Spring 2019 7

Common Kernels Mike Hughes - Tufts COMP 135 - Spring 2019 8

Kernel Matrices • K_train : N x N symmetric matrix k ( x 1 , x 1 ) k ( x 1 , x 2 ) . . . k ( x 1 , x N ) k ( x 2 , x 1 ) k ( x 2 , x 2 ) . . . k ( x 2 , x N ) K = . . . k ( x N , x 1 ) k ( x N , x 2 ) . . . k ( x N , x N ) • K_test : T x N matrix for test feature Mike Hughes - Tufts COMP 135 - Spring 2019 9

Linear Kernel Regression Mike Hughes - Tufts COMP 135 - Spring 2019 10

Radial basis kernel aka Gaussian aka Squared Exponential Mike Hughes - Tufts COMP 135 - Spring 2019 11

Compare: Linear Regression Prediction : Linear transform of G-dim features G y ( x i , θ ) = θ T φ ( x i ) = X ˆ θ g · φ ( x i ) g g =1 Training : Solve G-dim optimization problem N + L2 penalty y ( x n , θ )) 2 X min ( y n − ˆ (optional) θ n =1 Mike Hughes - Tufts COMP 135 - Spring 2019 12

Kernelized Linear Regression • Prediction : N X y ( x i , α , { x n } N ˆ n =1 ) = α n k ( x n , x i ) = X n =1 • Training: Solve N-dim optimization problem N X y ( x n , α , X )) 2 min ( y n − ˆ α n =1 Can do all needed operations with only access to kernel (no feature vectors are created in memory) Mike Hughes - Tufts COMP 135 - Spring 2019 13

Math on board • Goal: Kernelized linear prediction reweights each training example Mike Hughes - Tufts COMP 135 - Spring 2019 14

Can kernelize any linear model Regression: Prediction N X y ( x i , α , { x n } N ˆ n =1 ) = α n k ( x n , x i ) n =1 Logistic Regression: Prediction p ( Y i = 1 | x i ) = σ (ˆ y ( x i , α , X )) Mike Hughes - Tufts COMP 135 - Spring 2019 15

Training : Reg. Vs. Logistic Reg. N y ( x n , α , X )) 2 X min ( y n − ˆ α n =1 N X min log loss( y n , σ (ˆ y ( x n , α , X ))) α n =1 Mike Hughes - Tufts COMP 135 - Spring 2019 16

Downsides of LR Log loss means any example misclassified pays a steep price, pretty sensitive to outliers Mike Hughes - Tufts COMP 135 - Spring 2019 17

Stepping back Which do we prefer? Why? Mike Hughes - Tufts COMP 135 - Spring 2019 18

Idea: Define Regions Separated by Wide Margin Mike Hughes - Tufts COMP 135 - Spring 2019 19

w is perpendicular to boundary Mike Hughes - Tufts COMP 135 - Spring 2019 20

Examples that define the margin are called support vectors Nearest positive example Nearest negative example Mike Hughes - Tufts COMP 135 - Spring 2019 21

Observation: Non-support training examples do not influence margin at all Could perturb these examples without impacting boundary Mike Hughes - Tufts COMP 135 - Spring 2019 22

How wide is the margin? Mike Hughes - Tufts COMP 135 - Spring 2019 23

Small margin ! = #+1 &' w x + b ≥ 0 −1 &' w x + b < 0 y positive w x + b>0 y negative Margin: distance to the boundary w x + b<0 Mike Hughes - Tufts COMP 135 - Spring 2019 24

Large margin ! = #+1 &' w x + b ≥ 0 −1 &' w x + b < 0 y positive w x + b>0 y negative Margin: distance to the boundary w x + b<0 Mike Hughes - Tufts COMP 135 - Spring 2019 25

How wide is the margin? Distance from nearest positive example to nearest negative example along vector w: Mike Hughes - Tufts COMP 135 - Spring 2019 26

How wide is the margin? Distance from nearest positive example to nearest negative example along vector w: By construction, we assume Mike Hughes - Tufts COMP 135 - Spring 2019 27

SVM Training Problem Version 1: Hard margin For each n = 1, 2, …. N Requires all training examples to be correctly classified This is a constrained quadratic optimization problem. There are standard optimization methods as well methods specially designed for SVM. Mike Hughes - Tufts COMP 135 - Spring 2019 28

SVM Training Problem Version 1: Hard margin Minimizing this equivalent to maximizing the margin width in feature space For each n = 1, 2, …. N Requires all training examples to be correctly classified This is a constrained quadratic optimization problem. There are standard optimization methods as well methods specially designed for SVM. Mike Hughes - Tufts COMP 135 - Spring 2019 29

Soft margin: Allow some misclassifications Slack at example i Distance on wrong side of the margin Mike Hughes - Tufts COMP 135 - Spring 2019 30

Hard vs. soft constraints HARD: All positive examples to satisfy SOFT: Want each positive examples to satisfy with slack as small as possible (minimize absolute value ) Mike Hughes - Tufts COMP 135 - Spring 2019 31

Hinge loss for positive example Assumes current example has positive label y = +1 +1 0 Mike Hughes - Tufts COMP 135 - Spring 2019 32

SVM Training Problem Version 2: Soft margin Tradeoff parameter C controls model complexity Smaller C: Simpler model, encourage large margin even if we make lots of mistakes Bigger C: Avoid mistakes Mike Hughes - Tufts COMP 135 - Spring 2019 33

SVM vs LR: Compare training Both require tuning complexity hyperparameter C to avoid overfitting Mike Hughes - Tufts COMP 135 - Spring 2019 34

Loss functions: SVM vs Logistic Regr. Mike Hughes - Tufts COMP 135 - Spring 2019 35

SVMs: Prediction Make binary prediction via hard threshold Mike Hughes - Tufts COMP 135 - Spring 2019 36

SVMs and Kernels: Prediction Make binary prediction via hard threshold Efficient training algorithms using modern quadratic programming solve the dual optimization problem of SVM soft margin problem Mike Hughes - Tufts COMP 135 - Spring 2019 37

Support vectors are often small fraction of all examples Nearest positive example Nearest negative example Mike Hughes - Tufts COMP 135 - Spring 2019 38

Support vectors defined by non-zero alpha in kernel view Mike Hughes - Tufts COMP 135 - Spring 2019 39

SVM + Squared Exponential Kernel Mike Hughes - Tufts COMP 135 - Spring 2019 40

SVM Logistic Regr Loss hinge cross entropy (log loss) Sensitive to less more outliers Probabilistic? no yes Kernelizable? Yes, with speed Yes benefits from sparsity Multi-class? Only via a separate Easy , use softmax one-vs-all for each class Mike Hughes - Tufts COMP 135 - Spring 2019 41

Activity • KernelRegressionDemo.ipynb • Scroll down to SVM vs logistic regression • Can you visualize behavior with different C? • Try different kernels? • Examine alpha vector? Mike Hughes - Tufts COMP 135 - Spring 2019 42

Recommend

More recommend