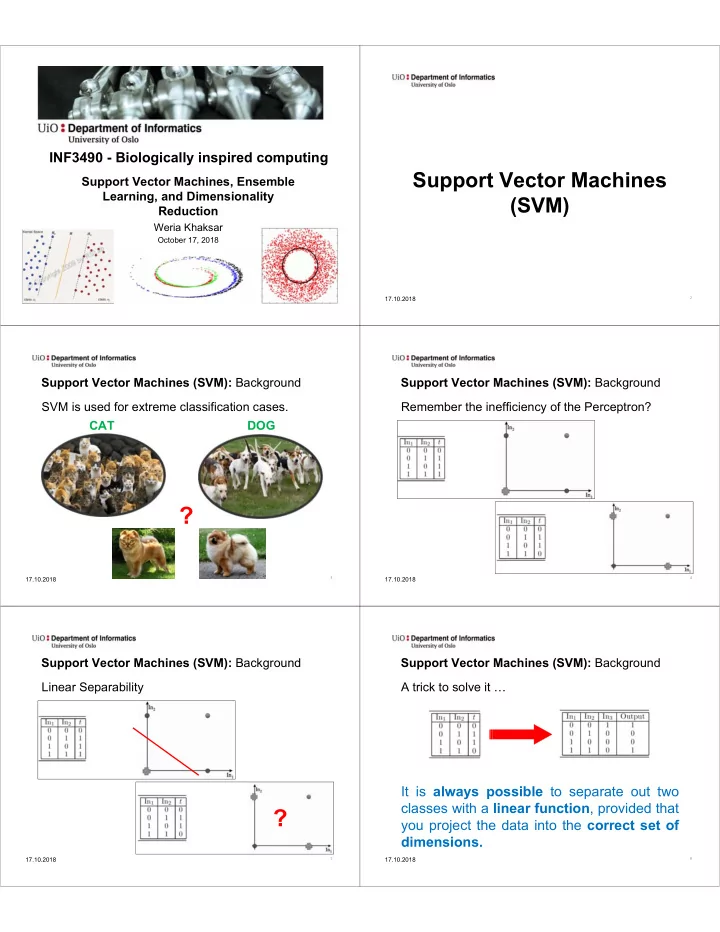

INF3490 - Biologically inspired computing Support Vector Machines Support Vector Machines, Ensemble Learning, and Dimensionality (SVM) Reduction Weria Khaksar October 17, 2018 17.10.2018 2 Support Vector Machines (SVM): Background Support Vector Machines (SVM): Background SVM is used for extreme classification cases. Remember the inefficiency of the Perceptron? CAT DOG ? 17.10.2018 3 17.10.2018 4 Support Vector Machines (SVM): Background Support Vector Machines (SVM): Background Linear Separability A trick to solve it … It is always possible to separate out two classes with a linear function , provided that ? you project the data into the correct set of dimensions. 17.10.2018 17.10.2018 5 6

Support Vector Machines (SVM): Background Support Vector Machines (SVM): The Margin A trick to solve it … Which line is the best separator? ? 17.10.2018 7 17.10.2018 8 Support Vector Machines (SVM): The Margin Support Vector Machines (SVM): The Margin Why do we need the best line? Which line is the best separator? The one with the highest margin 17.10.2018 9 17.10.2018 10 Support Vector Machines (SVM): Support Vectors Support Vector Machines (SVM): Support Vectors Which data points are important? Which data points are important? The data points in each class that lie Support closest to the Vectors classification line are called Support Vectors. 17.10.2018 17.10.2018 11 12

Support Vector Machines (SVM): Optimal Separation Support Vector Machines (SVM): The Math. 𝑁𝑏𝑦𝑗𝑛𝑗𝑨𝑓 |𝑁| The margin should be as large as possible. 𝑡. 𝑢. : the best classifier is the one that goes through the 𝑢 � 𝐱 � . 𝐲 � � 𝑐 � 1, 𝑗 � 1, … , 𝑜 middle of the marginal area. We can through away other data and just use support vectors for classification. 17.10.2018 13 17.10.2018 14 Support Vector Machines (SVM): Support Vector Machines (SVM): Slack Variables for Non-Linearly Separable Problems: Slack Variables for Non-Linearly Separable Problems: � � � � � � � � 17.10.2018 15 17.10.2018 16 Support Vector Machines (SVM): KERNELS Support Vector Machines (SVM): KERNELS The trick is to modify the input features in some way, to be The trick is to modify the input features in some way, to be able to linearly classify the data . able to linearly classify the data . The main idea is to replace the input feature, 𝐲 � , with some The main idea is to replace the input feature, 𝐲 � , with some function, 𝜚 𝐲 � . function, 𝜚 𝐲 � . The main challenge is to automate the algorithm to find the The main challenge is to automate the algorithm to find the proper function without a suitable knowledge domain. proper function without a suitable knowledge domain. 17.10.2018 17.10.2018 17 18

Support Vector Machines (SVM): KERNELS Support Vector Machines (SVM): SVM Algorithm: The trick is to modify the input features in some way, to be able to linearly classify the data . The main idea is to replace the input feature, 𝐲 � , with some function, 𝜚 𝐲 � . The main challenge is to automate the algorithm to find the proper function without a suitable knowledge domain. 17.10.2018 19 17.10.2018 20 Support Vector Machines (SVM): SVM Examples: Support Vector Machines (SVM): SVM Examples: Performing nonlinear classification via linear separation in higher dimensional space 17.10.2018 21 17.10.2018 22 Support Vector Machines (SVM): SVM Examples: Support Vector Machines (SVM): SVM Examples: The SVM learning about a linearly separable dataset ( top row ) and a dataset that needs two straight lines to separate in 2D ( bottom row ) with left the linear kernel, middle the polynomial kernel of degree 3, and right the RBF kernel. The effects of different kernels when learning a version of XOR 17.10.2018 17.10.2018 23 24

Ensemble Learning: Background Having lots of simple learners that each provide slightly different results, Ensembled Learning Putting them together in a proper way, The results are significantly better. 17.10.2018 25 17.10.2018 26 Ensemble Learning: Background Ensemble Learning: Important Considerations Which learners The Basic Idea: should we use? How should we ensure that they learn different things? How should we combine their results? 17.10.2018 27 17.10.2018 28 Ensemble Learning: Important Considerations Ensemble Learning: Background Which learners If we take a collection of very poor learners, should we use? each performing only just better than chance, then by putting them together it is possible to How should we make an ensemble learner that can perform ensure that they learn arbitrarily well. different things? We just need lots of low-quality learners, and How should we a way to put them together usefully, and we combine their can make a learner that will do very well. results? 17.10.2018 17.10.2018 29 30

Ensemble Learning: Background Ensemble Learning: How it works? 17.10.2018 31 17.10.2018 32 Ensemble Learning: BOOSTING Ensemble Learning: BOOSTING AdaBoost: As points are misclassified, their weights increase in boosting (shown by the data point getting larger), which makes the importance of those data points increase, making the classifiers pay more attention to them. 17.10.2018 33 17.10.2018 34 Ensemble Learning: BOOSTING Ensemble Learning: BOOSTING AdaBoost: How it works? 17.10.2018 17.10.2018 35 36

Ensemble Learning: BOOSTING Ensemble Learning: BAGGING AdaBoost: Bagging (Bootstrap Aggregating): AdaBoost in Action 17.10.2018 37 17.10.2018 38 Ensemble Learning: BAGGING Ensemble Learning: BAGGING Bagging (Bootstrap Aggregating): How it works? Bagging (Bootstrap Aggregating): Examples: 17.10.2018 39 17.10.2018 40 Ensemble Learning: Summary Dimensionality reduction 17.10.2018 17.10.2018 41 42

Dimensionality reduction: Why? Dimensionality reduction: How? When looking at data and plotting results, we can Feature Selection : Looking through the features never go beyond three dimensions. that are available and seeing whether or not The higher the number of dimensions we have, the they are actually useful. more training data we need. The dimensionality is an explicit factor for the Feature Derivation : Deriving new features from computational cost of many algorithms. the old ones, generally by applying transforms to Remove noise. the dataset. Significantly improve the results of the learning Clustering : Grouping together similar data algorithm. points, and see whether this allows fewer Make the dataset easier to work with. features to be used. Make the results easier to understand. 17.10.2018 43 17.10.2018 44 Dimensionality reduction: Example Dimensionality reduction: Principal Components Analysis (PCA) 17.10.2018 45 17.10.2018 46 Dimensionality reduction: Dimensionality reduction: Principal Components Analysis (PCA) Principal Components Analysis (PCA) The principal component is the direction in the data with the largest variance. 17.10.2018 17.10.2018 47 48

Dimensionality reduction: Dimensionality reduction: Principal Components Analysis (PCA) Principal Components Analysis (PCA) Example PCA is a linear transformation • Does not directly help with data that is not linearly separable. • However, may make learning easier because of reduced complexity. PCA removes some information from the data • Might just be noise. • Might provide helpful nuances that may be of help to some classifiers. how to project samples into the variable space 17.10.2018 49 17.10.2018 50 17.10.2018 51

Recommend

More recommend