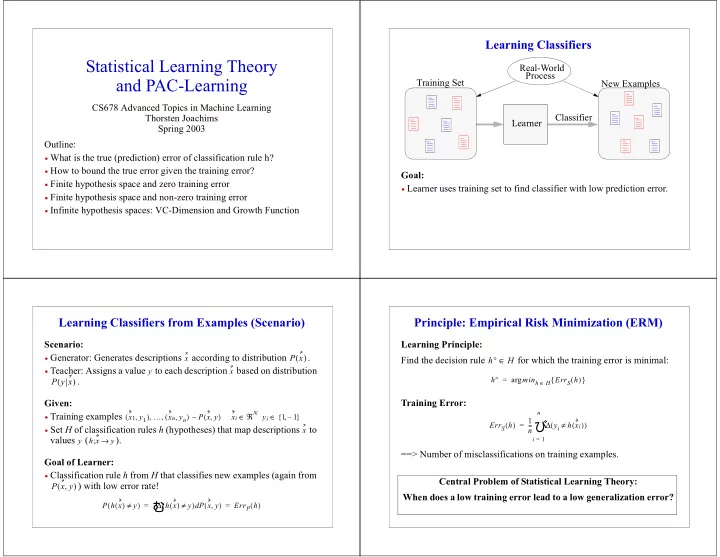

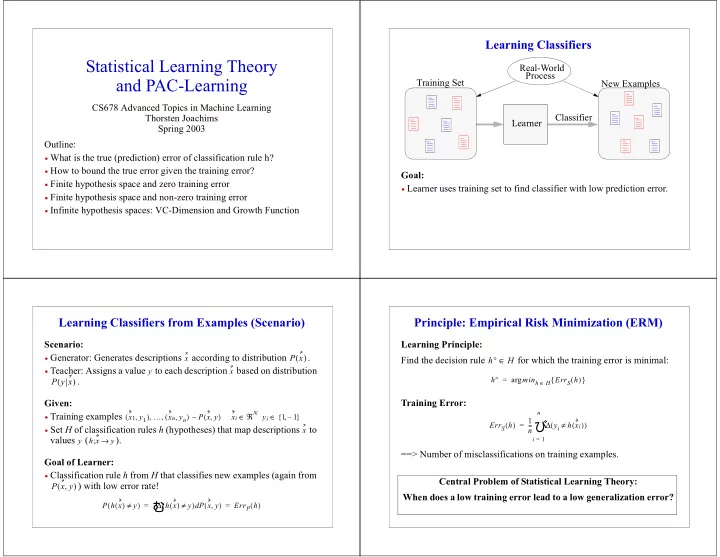

Learning � Classifiers Statistical � Learning � Theory Real-World Process and � PAC-Learning Training � Set New � Examples CS678 � Advanced � Topics � in � Machine � Learning Thorsten � Joachims Classifier Learner Spring � 2003 Outline: • What � is � the � true � (prediction) � error � of � classification � rule � h? • How � to � bound � the � true � error � given � the � training � error? Goal: � • Finite � hypothesis � space � and � zero � training � error � • Learner � uses � training � set � to � find � classifier � with � low � prediction � error. • Finite � hypothesis � space � and � non-zero � training � error • Infinite � hypothesis � spaces: � VC-Dimension � and � Growth � Function Learning � Classifiers � from � Examples � (Scenario) Principle: � Empirical � Risk � Minimization � (ERM) Scenario: Learning � Principle: • Generator: � Generates � descriptions � � according � to � distribution � . P x x ( ) Find � the � decision � rule � � for � which � the � training � error � is � minimal: h ° H ∈ • Teacher: � Assigns � a � value � � to � each � description � � based � on � distribution � y x h ° = arg min h Err S h . { ( ) } P y x ( ) H ∈ Given: Training � Error: ℜ N y n • Training � examples � x 1 y 1 x n y n P x y x i { , 1 – 1 } ( , ) … , , ( , ) ∼ ( , ) ∈ ∈ i 1 � Err S h = - - - y i h x i ( ) ∆ ( ≠ ( ) ) • Set � H � of � classification � rules � h � (hypotheses) � that � map � descriptions � � to � x n values � � ( ). y h x ; y i = 1 → ==> � Number � of � misclassifications � on � training � examples. Goal � of � Learner: • Classification � rule � h � from � H � that � classifies � new � examples � (again � from � Central � Problem � of � Statistical � Learning � Theory: ) � with � low � error � rate! P x y ( , ) When � does � a � low � training � error � lead � to � a � low � generalization � error? � P h x y = ∆ h x y ) P x y d = Err P h ( ( ) ≠ ) ( ( ) ≠ ( , ) ( )

����� Sources � of � Variation What � is � the � true � error � of � classification � rule � h? Learning � Task: Includes � variation � from � different � test � sets. • Generator: � Generates � descriptions � � according � to � distribution � . x P x ( ) Problem � Setting: • Teacher: � Assigns � a � value � � to � each � description � � based � on �� . y x P y x ( ) • given � rule � h => � Learning � Task: � • given � (independent) � test � sample � � of � size � k P x y = P y x ) P x S = x 1 y 1 x k y k ( , ) ( ( ) ( , ) , … , ( , ) estimate Process: � P h x y = ∆ h x y ) P x y d = Err P h ( ( ) ≠ ) ( ( ) ≠ ( , ) ( ) • Select � task � P x y ( , ) • Training � sample � S � (depends � on � ) P x y ( , ) Approach: � measure � error � of � h � on � test � set • Train � learning � algorithm � A � (e.g. � randomized � search) n • Test � sample � V � (depends � on � ) P x y ( , ) 1 � Err V h = - - - y i h x i ( ) ∆ ( ≠ ( ) ) • Apply � classification � rule � h � (e.g. � randomized � prediction) k i = 1 Binomial � Distribution Cross-Validation � Estimation The � probability � of � observing � x � heads � in � a � sample � of � n � independent � coin � Given: � tosses, � when � the � probability � of � heads � is � p � in � each � toss, � is • training � set � S � of � size � n n ! - p r 1 ) n – r Method: P X = x p n = - - - - - - - - - - - - - - - - - - - - - - - – p ( , ) ( r ! n – r ) ! ( • partition � S � into � m � subsets � of � equal � size • for � i � from � 1 � to � m • train � learner � on � all � subsets � except � the � i’ th Confidence � interval: • test � learner � on � i’ th � subset Given � x � observed � heads, � with � at � leat � 95% � confidence � the � true � value � of � p � • record � error � rates � on � test � set fulfills � => � Result: � average � over � recorded � error � rates P X x p n 0.025 and P X x p n 0.025 ( ≥ , ) ≥ ( ≤ , ) ≥ Bias � of � estimate: � see � leave-one-out Warning: � Test � sets � are � independent, � but � not � the � training � sets! => � no � strictly � valid � hypothesis � test � is � known � for � general � learning � algorithms � (see � [Dietterich/97])

Psychic � Game How � can � You � Convince � Me � of � Your � Psychic � Abilities? • I � guess � a � 4 � bit � code Setting: • You � all � guess � a � 4 � bit � code • n � bits => � The � student � who � guesses � my � code � clearly � has � telepathic � abilities � - � • |H| � players right!? Question: � For � which � n � and � |H| � is � prediction � of � zero-error � player � significantly � different � from � random � ( ) � with � probability � ? p = 0.5 1 – δ => � Hypothesis � test � for � P h 1 correct h H correct allnonpsychic ( ∨ … ∨ , ) < δ or P h H E ; rr s h = 0 h H E ; rr P h = 0.5 ( ∃ ∈ ( ) , ∀ ∈ ( ) ) < δ PAC � Learning Case: � Finite � H, � Zero � Error Definition: • The � hypothesis � space � H � is � finite • C � = � class � of � concepts � � (functions � to � be � learned) • There � is � always � some � h � with � zero � training � error � (A � returns � one � such � h) c X ; 1 – 1 → { , } • H � = � class � of � hypotheses � � (functions � used � by � learner � A) • Probability � that � a � (single) � h � with � � has � training � error � of � zero h X ; 1 – 1 Err P h → { , } ( ) ≥ ε • S � = � training � set � (of � size � n ) ) n 1 – ( ε • � = � desired � error � rate � of � learned � hypothesis ε • � = � probability, � with � which � the � learner � A � is � allowed � to � fail δ • Probability � that � there � exists � h � in � H � with �� � that � has � training � Err P h ( ) ≥ ε C � is � PAC-learnable � by � Algorithm � A � using � H � and � n � examples, � if error � of � zero ) n – ε n P Err h A S 1 – P h H E ; rr s h = 0 Err P h H 1 – H e ( ( ) ≤ ε ) ≥ ( δ ) ( ∃ ∈ ( ) , ( ) > ε ) ≤ ( ε ≤ ( ) for � all � , � , � , � and � P(X) � so � that � A � runs � in � polynomial � time � c C ∈ ε δ dependent � on � , � , � the � size � of � the � training � examples � and � the � size � of � the � ε δ concepts. => � only � polynomially � many � training � examples � allowed.

Case: � Finite � H, � Non-Zero � Error Case: � Infinite � H Goal: • union � bound � does � no � longer � work. • maybe � not � all � hypotheses � are � really � different?! P Err S h A S – Err D h A S 1 – ( ( ) ( ) ≤ ε ) ≥ ( δ ) ( ) ( ) <= P sup H Err S h i – Err S h i 1 – ( ( ) ( ) ≤ ε ) ≥ ( δ ) • Probability � that � for � a � fixed � h, � training � error � and � test � error � differ � by � more � than � � (Hoeffding � / � Chernoff � Bound) ε n � � n ε 2 P 1 � � � – 2 - - - x i – p 2 e > ε ≤ � � n � � i = 1 • Probability � over � all � h � in � H: � union � bound � => � multiply � by � |H| How � Many � Dichotomies � for � Fixed � Sample? Vapnik/Chervonenkis � Dimension • Sample � S � of � size � n Definition: � The � VC-dimension � of � H � is � equal � to � the � maximal � number � d � of � examples � that � can � be � split � into � two � sets � in � all � 2 d � ways � using � • Hypothesis � class � H functions � from � H � (shattering). Π H S = h x 1 ) h x 2 ) … h x n ) h ; H ( ) { ( ( , ( , , ( ) ∈ } x 1 x 2 x 3 ... x d h 1 + + + ... + h 2 - + + ... + 2 n Definition: � H � shatters � S, � if � � (i.e. � hypotheses � from � H � can � Π H S = ( ) h 3 + - + ... + split � S � in � all � possible � ways). h 4 - - + ... + ... ... ... ... ... ... h N - - - ... - Growth � function � : � For � all � S Φ d S ( ) VCdim H en ( ) � � Π H S ) n - - - - - - - - - - - - - - - - - - - - - - - - - - - ( ) ≤ Φ VCdim H ( ) ≤ � � ( VCdim H ( )

Recommend

More recommend