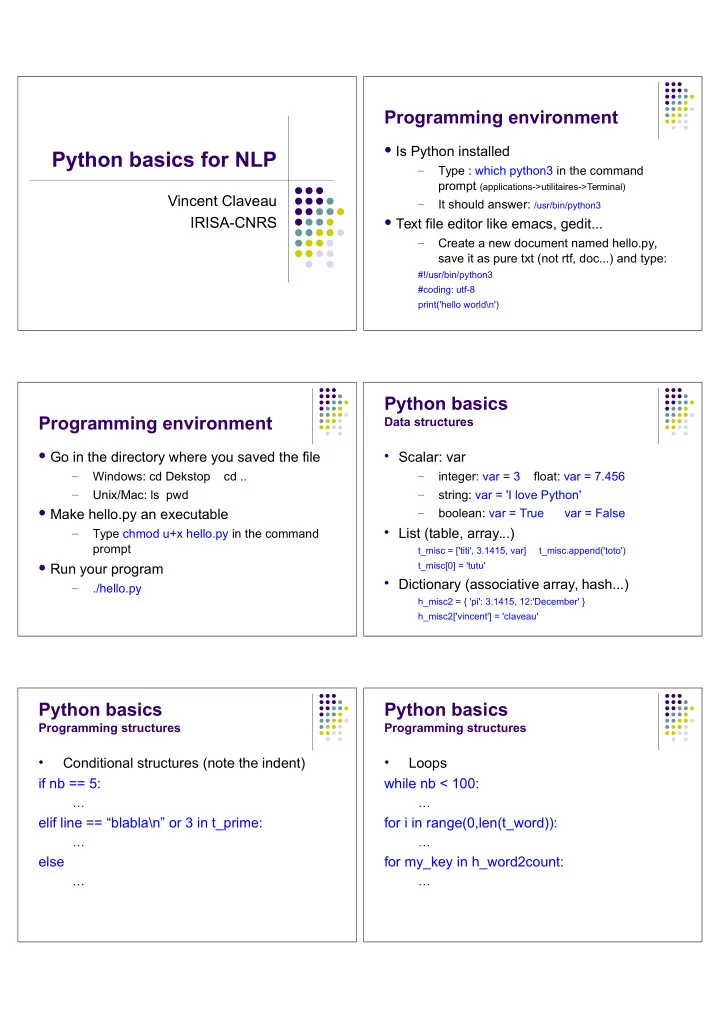

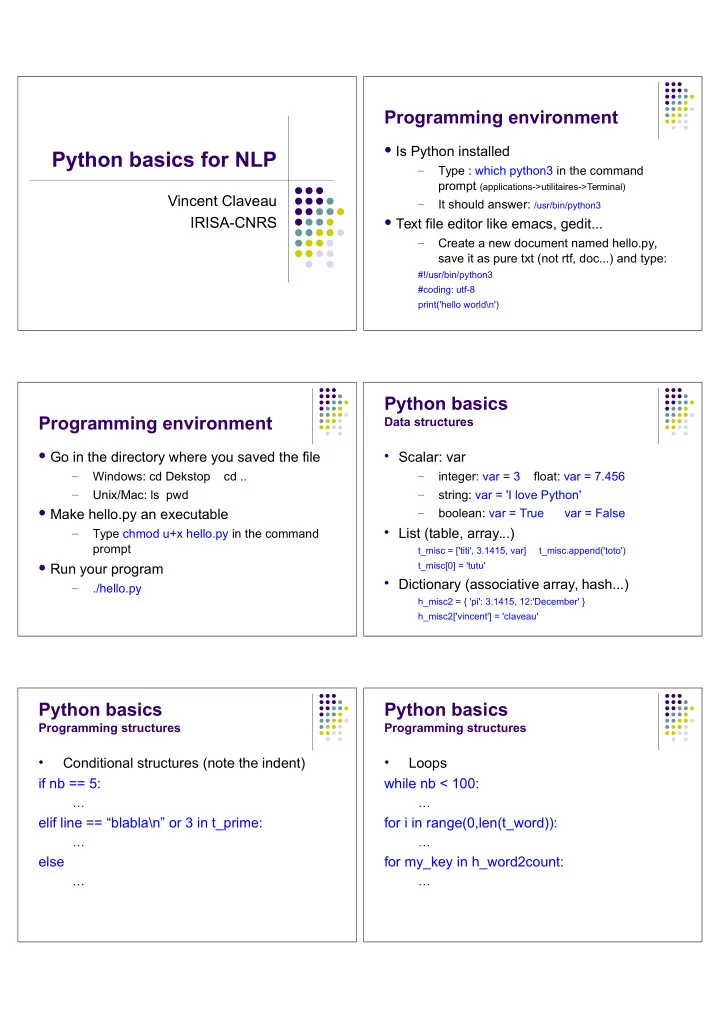

Programming environment Is Python installed Python basics for NLP – Type : which python3 in the command prompt (applications->utilitaires->Terminal) Vincent Claveau – It should answer: /usr/bin/python3 IRISA-CNRS Text file editor like emacs, gedit... – Create a new document named hello.py, save it as pure txt (not rtf, doc...) and type: #!/usr/bin/python3 #coding: utf-8 print('hello world\n') Python basics Programming environment Data structures Scalar: var Go in the directory where you saved the file – – Windows: cd Dekstop cd .. integer: var = 3 float: var = 7.456 – – Unix/Mac: ls pwd string: var = 'I love Python' Make hello.py an executable – boolean: var = True var = False List (table, array...) – Type chmod u+x hello.py in the command prompt t_misc = ['titi', 3.1415, var] t_misc.append('toto') Run your program t_misc[0] = 'tutu' Dictionary (associative array, hash...) – ./hello.py h_misc2 = { 'pi': 3.1415, 12:'December' } h_misc2['vincent'] = 'claveau' Python basics Python basics Programming structures Programming structures • • Conditional structures (note the indent) Loops if nb == 5: while nb < 100: … … elif line == “blabla\n” or 3 in t_prime: for i in range(0,len(t_word)): … … else for my_key in h_word2count: … …

Python basics Python basics • • Regular expressions (regex) About lists t_integer = [1,2,3,4,5,6,7,8,9,10] t_even = [ num for num in t_integer if num%2 == 0 ] m = re.search( '^(D|d)upon.', line) t_decreasing = sorted( t_integer, key=lambda a: -a) if m is not None: … t_word_count = [ ('toto',2), ('titi',32), ('tata',12) ] m = re.search( '^[^\t]*\t(.+)$', line) t_dec_pair = sorted( t_word_count, key=lambda a,b: (-b,a) ) if m is not None: h_count[ m.group(1) ] += 1 var = re.sub( '[ \t]{2,8}([A-Z])', '\t\g<1>', var) Python basics Python basics • • About dictionary functions def myScore( tf , df , N = 1): h_word2count = { 'toto': 2, 'titi': 32, 'tata': 12 } if df > 0: for (w,c) in h_word2count.items( ) : …. score = tf**2 * log( N / df ) else: h_doc2word2count = defaultdict(lambda: defaultdict(lambda: 0) ) print('something strange happens here\n') h_doc2word2count[ 'doc_450' ][ 'toto' ] += 1 score = 0 t_doc = h_doc2word2count.keys( ) return score t_count = h_doc2word2count[ 'doc_450' ].values( ) num = 540 for w in h_word2info: for (doc_id, freq) in h_word2info[w]: print myScore(freq, h_word2df[w],num) Python examples Python examples Read an (existing) file my_file.txt Explore a file : count lines fh = open('my_file.txt', 'r') line_nb = 0 your operations on file handle fh for line in open('mon_fichier.txt', 'r'): fh.close() line_nb += 1 or for line in codecs.open('my_file.txt', 'r', 'utf-8'): … or with open(...) as fh: print('There are' , line_nb , 'lines\n') for line in fh: …. Write (create) a file output.txt fh = codecs.open('my_file.txt', 'w', 'utf-8') fh.write('I write strings !\n' + 'I convert if needed ' + str(10/3) + '\n') fh.close()

Python examples Python examples Explore a file: put lines in an array – reverse Explore a file : count lines with a dictionary – printing of the file frequency of beginning letter of line t_lines = [ ] h_line2count = defaultdict(lambda: 0) # better idea than { } for line in open( 'my_file.txt', 'r' ): re_letter = re.compile('^[^A-Za-z]*([A-Za-z])') t_lines.append( line.rstrip('\r\n') ) for line in open('my_file.txt', 'r' ) : ## h_line2count[ line.rstrip('\r\n') ] += 1 for i in range(len(t_lines)): m = re_letter.search(line) print t_lines[len(t_lines)-1-i] if m is not None: h_line2count[ m.group(1) ]+=1 print sorted(h_line2count.items(), key=lambda (c,v): (-v,c)) Python typical headers Python typical headers Load common libraries Read arguments from command line import argparse from __future__ import division parser = argparse.ArgumentParser() import os, subprocess, codecs, sys, glob, re, getopt, random, operator, pickle parser.add_argument("-t", "--train", dest="file_train", from math import * help="file containing the training docs", metavar="FILE") reload(sys) parser.add_argument("-s", "--stop", dest="file_stop", sys.setdefaultencoding('utf-8') default='common_words.total_fr.txt', help="FILE contains stop words", metavar="FILE") from collections import defaultdict parser.add_argument("-v", "--verbose", action="store_false", prg = sys.argv[0] dest="verbose", default=True, help="print status messages to stdout") def P(output=''): input(output+"\nDebug point; Press ENTER to continue") args = parser.parse_args() def Info(output=''): sys.stderr.write(output+'\n') NLTK Python exercises Count the number of words in the PoS tagged Natural Language Tool Kit corpus → ~4400 – Contains corpus, resources Count how many times each word appears → – Contains basic tools: tagger, chunker... the:202 Use a local easy-install Count the average occurrence of common nouns – easy-install --instal-dir monRepPython (NN, NNS, NNP) → 2.61099 – Count word co-occurrences (2 words occurring Check your PYTHONPATH in a same sentence) Install the needed data/tools import nltk nltk.download()

Python debugging Python debugging Compiling errors Runtime errors First focus on the first syntax error Common causes – syntax error at ./my_prg.py line 1209, near "}" – Uninitialised variables: h_occ['Le'], – common causes: wrong indent or paren ('(','{') mismatch t_count[502] Then process the other errors as they – Division by 0, log 0 appears – Global symbol "df" unknown – Common causes : forgot to declare/initialize a variable (toto = 0)

Recommend

More recommend