Published @ ICCV 2017

Context
• Many methods try to explain CNN predictions
• Good overview: CVPR 2018 tutorial on Interpretable ML for CV (https://interpretablevision.github.io/)
• Studies show that existing gradient-based methods are problematic
• Today: a 'good' explanation method

What is an explanation?
• A rule that predicts the response of f to certain inputs
• Examples:
  • f(x) = +1 if x contains a cat
  • f(x) = f(x') if x and x' are related by a rotation, where x' is a perturbed version of x
• Rules are tested using data
• Quality of a rule: how well it generalizes to unseen data
• Rules can be discovered and learned

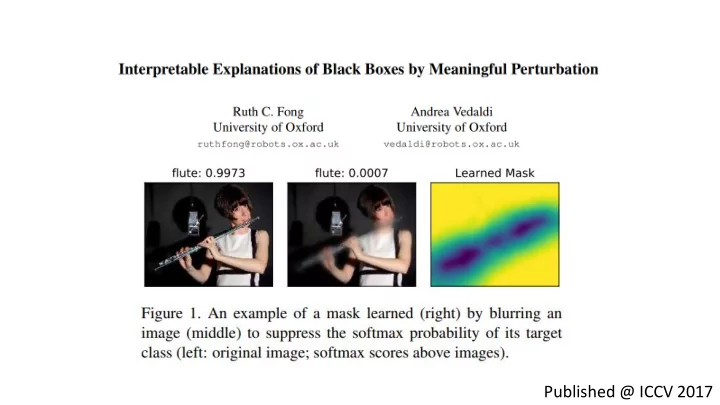

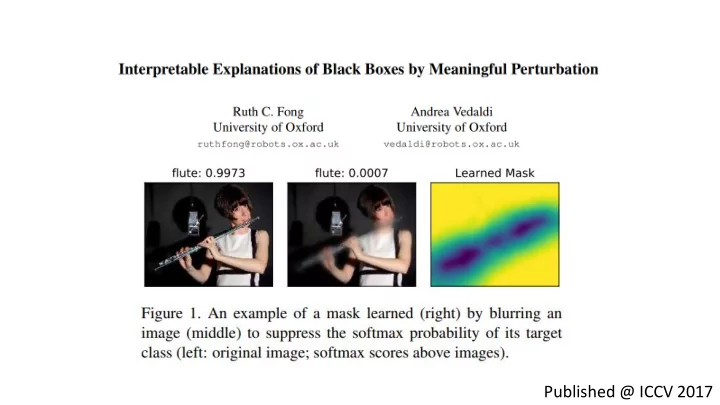

Explanations for CNNs: Saliency
• Which region of the image is important for the decision f(x)?
• Idea: delete parts of x until the posterior drops
• Deletion = blurring
• Task: find the smallest mask m that significantly lowers f(x)
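The task on this slide can be written as a trade-off between the deleted area and the remaining class score. The snippet below is a minimal sketch of that idea on a 1-D toy signal, not the paper's implementation. The names `perturb`, `deletion_objective`, `toy_score` and the weight `lam` are hypothetical, and the convention that mask = 1 keeps a pixel while mask = 0 blurs it is an assumption made for the illustration.

```python
def perturb(x, blurred, mask):
    """Blend original and blurred signals: mask=1 keeps a pixel, mask=0 deletes (blurs) it."""
    return [m * xi + (1.0 - m) * bi for xi, bi, m in zip(x, blurred, mask)]


def deletion_objective(score_fn, x, blurred, mask, lam=0.1):
    """Penalty on the deleted area plus the class score of the perturbed input."""
    deleted_area = sum(1.0 - m for m in mask)
    return lam * deleted_area + score_fn(perturb(x, blurred, mask))


# Toy setup (all values hypothetical): the "classifier" only looks at pixel 2.
x = [0.0, 0.0, 5.0, 0.0]
blurred = [1.25] * 4              # stand-in for a heavily blurred copy of x
toy_score = lambda v: v[2] / 5.0  # stand-in for the class posterior f(x)

candidates = {
    "keep_all": [1.0, 1.0, 1.0, 1.0],
    "delete_pixel_2": [1.0, 1.0, 0.0, 1.0],
    "delete_all": [0.0, 0.0, 0.0, 0.0],
}
for name, mask in candidates.items():
    print(name, deletion_objective(toy_score, x, blurred, mask))
```

In this toy case the mask that deletes only pixel 2 gets the lowest objective: it drops the score as much as deleting everything does, while paying for a much smaller deleted area.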

Artefacts
• Naively learning the mask introduces artefacts
• Remember: an explanation should generalize! If the image x changes slightly, the explanation should still hold.
• Solution 1: apply the mask with random offsets during optimization
• Solution 2: regularize the mask towards smoother, more natural perturbations
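Both solutions can be illustrated on a 1-D toy signal. This is a rough sketch under stated assumptions, not the authors' code. `total_variation`, `shift` and `jittered_score` are hypothetical helpers, the exponent `beta=2` is an illustrative choice, and a fixed set of offsets replaces random sampling so the result is reproducible.

```python
def total_variation(mask, beta=2.0):
    """Smoothness penalty: sum of |difference between neighbouring mask values| ** beta."""
    return sum(abs(b - a) ** beta for a, b in zip(mask, mask[1:]))


def shift(mask, offset, fill=1.0):
    """Move the mask right by `offset` positions; positions shifted in are kept (fill=1)."""
    n = len(mask)
    return [mask[i - offset] if 0 <= i - offset < n else fill for i in range(n)]


def jittered_score(score_fn, x, blurred, mask, offsets=(-1, 0, 1)):
    """Average class score when the mask is applied at several offsets."""
    total = 0.0
    for offset in offsets:
        shifted = shift(mask, offset)
        perturbed = [m * xi + (1.0 - m) * bi for xi, bi, m in zip(x, blurred, shifted)]
        total += score_fn(perturbed)
    return total / len(offsets)


# Toy setup (hypothetical): the "classifier" only looks at pixel 2.
x = [0.0, 0.0, 5.0, 0.0, 0.0]
blurred = [1.0] * 5
toy_score = lambda v: v[2] / 5.0

narrow_mask = [1.0, 1.0, 0.0, 1.0, 1.0]  # deletes exactly one pixel
wide_mask = [1.0, 0.0, 0.0, 0.0, 1.0]    # deletes a small neighbourhood

print(jittered_score(toy_score, x, blurred, narrow_mask))
print(jittered_score(toy_score, x, blurred, wide_mask))
print(total_variation([1.0, 1.0, 0.0, 0.0, 1.0]), total_variation([1.0, 0.5, 0.0, 0.5, 1.0]))
```

The one-pixel mask only lowers the score when it is aligned exactly, so its jittered score stays high, while the wider mask keeps working under every offset. The smoothness penalty in turn prefers the mask with gradual transitions over the one with hard edges.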

Better interpretability
• Mask highlights only essential evidence.
• Other methods often find 'irrelevant' evidence.

Spurious correlation
• Method finds CNN errors

Better understanding
• Use extra ImageNet annotations together with the masks to improve understanding
• Animal faces are more important than feet for CNNs

Adversarial images have strange masks

Detecting adversarial images
• After blurring the image region under the 'adversarial' mask, the CNN recovers its original prediction in 40% of cases
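The recovery check described here can be sketched on a 1-D toy signal: blur only where the mask says "delete", then ask the classifier again. Everything below is a hypothetical illustration (`box_blur`, `blur_masked_region`, `recovers_prediction` and the toy classifier are made-up names, and a box blur stands in for whatever blur is actually used).

```python
def box_blur(x, radius=1):
    """Simple 1-D box blur; windows are truncated at the borders."""
    out = []
    for i in range(len(x)):
        window = x[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out


def blur_masked_region(x, mask, radius=1):
    """Replace pixels with their blurred value where mask=0, keep them where mask=1."""
    blurred = box_blur(x, radius)
    return [m * xi + (1.0 - m) * bi for xi, bi, m in zip(x, blurred, mask)]


def recovers_prediction(classify_fn, x_adv, mask, original_label):
    """True if blurring the masked region brings back the original label."""
    return classify_fn(blur_masked_region(x_adv, mask)) == original_label


# Toy setup (hypothetical): a spike at pixel 2 flips the label from "cat" to "dog".
toy_classifier = lambda v: "cat" if max(v) < 5 else "dog"
x_adv = [1.0, 1.0, 9.0, 1.0, 1.0]
adv_mask = [1.0, 1.0, 0.0, 1.0, 1.0]  # mask found for the adversarial image

print(toy_classifier(x_adv))
print(recovers_prediction(toy_classifier, x_adv, adv_mask, "cat"))
```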

Take home
• Saliency != gradient
• Proposed method can be used to diagnose and understand CNNs
• Paper with extensive, proper evaluation
• Proposed method can be slow (requires 300 iterations of Adam)
