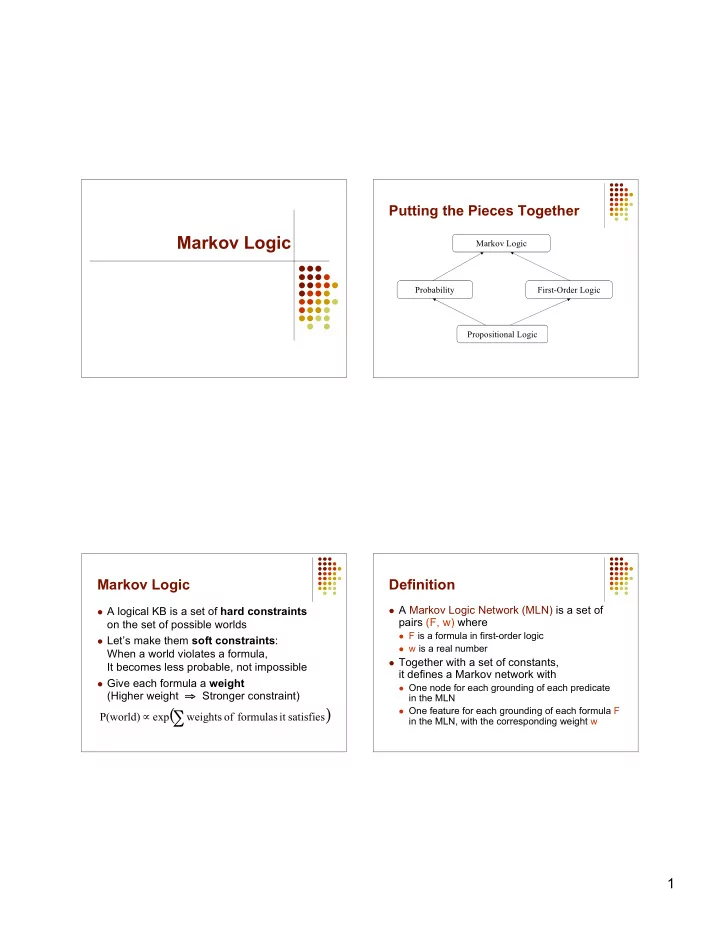

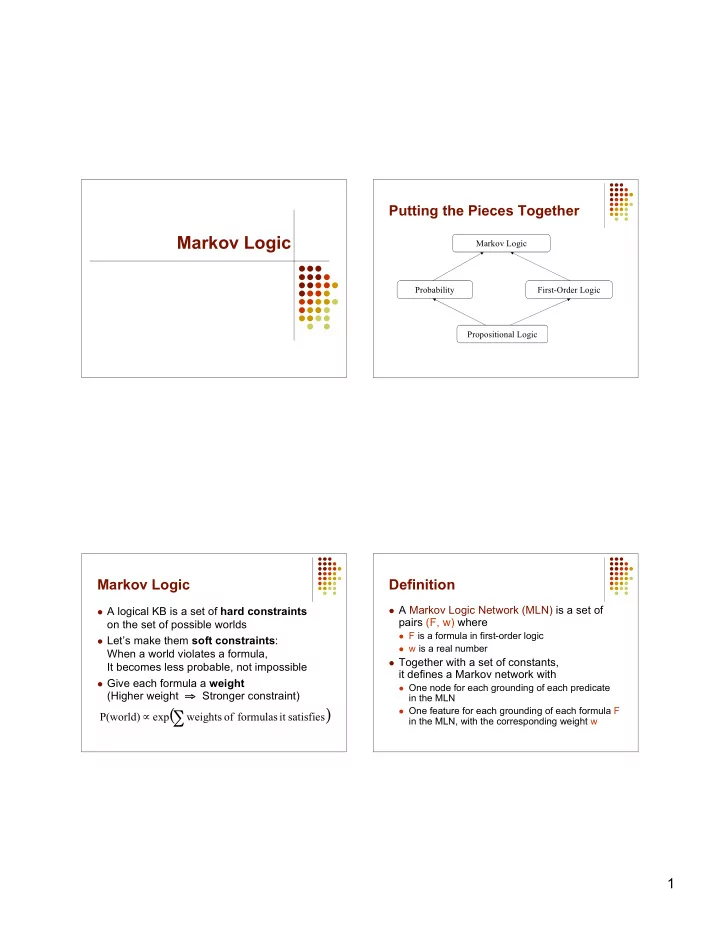

**Putting the Pieces Together**

[Figure: Markov Logic shown as the combination of Probability and First-Order Logic, both of which extend Propositional Logic.]

**Markov Logic**

- A logical KB is a set of hard constraints on the set of possible worlds
- Let's make them soft constraints: when a world violates a formula, it becomes less probable, not impossible
- Give each formula a weight (higher weight ⇒ stronger constraint)

$$P(\text{world}) \propto \exp\left( \sum \text{weights of formulas it satisfies} \right)$$

**Markov Logic: Definition**

- A Markov Logic Network (MLN) is a set of pairs (F, w) where
  - F is a formula in first-order logic
  - w is a real number
- Together with a set of constants, it defines a Markov network with
  - One node for each grounding of each predicate in the MLN
  - One feature for each grounding of each formula F in the MLN, with the corresponding weight w

**Example: Friends & Smokers**

- Smoking causes cancer.
- Friends have similar smoking habits.

In first-order logic, with weights attached:

$$1.5 \quad \forall x \;\; \mathit{Smokes}(x) \Rightarrow \mathit{Cancer}(x)$$

$$1.1 \quad \forall x, y \;\; \mathit{Friends}(x, y) \Rightarrow \left( \mathit{Smokes}(x) \Leftrightarrow \mathit{Smokes}(y) \right)$$

Two constants: Anna (A) and Bob (B)

**Example: Friends & Smokers (ground network)**

With the two constants Anna (A) and Bob (B), grounding the two weighted formulas (weight 1.5 for "smoking causes cancer", weight 1.1 for "friends have similar smoking habits") gives a ground Markov network with one node per ground atom:

- Smokes(A), Smokes(B)
- Cancer(A), Cancer(B)
- Friends(A,A), Friends(A,B), Friends(B,A), Friends(B,B)

[Figure: ground Markov network over these eight atoms, built up step by step across the slides.]
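The grounding step in the Friends & Smokers example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `ground_atoms`, `PREDICATES`, and `ground_formulas`, and the string encoding of atoms and formulas, are hypothetical choices made here, not part of any MLN toolkit.

```python
from itertools import product

# Constants and predicate arities from the Friends & Smokers example.
CONSTANTS = ["A", "B"]  # Anna and Bob
PREDICATES = {"Smokes": 1, "Cancer": 1, "Friends": 2}  # name -> arity


def ground_atoms(predicates, constants):
    """One ground atom (network node) per predicate and argument tuple."""
    atoms = []
    for name, arity in predicates.items():
        for args in product(constants, repeat=arity):
            atoms.append(f"{name}({','.join(args)})")
    return atoms


atoms = ground_atoms(PREDICATES, CONSTANTS)

# One weighted feature per grounding of each formula.
ground_formulas = []
for x in CONSTANTS:
    ground_formulas.append((1.5, f"Smokes({x}) => Cancer({x})"))
for x, y in product(CONSTANTS, repeat=2):
    ground_formulas.append(
        (1.1, f"Friends({x},{y}) => (Smokes({x}) <=> Smokes({y}))")
    )

for weight, formula in ground_formulas:
    print(weight, formula)
```

With two constants this produces eight ground atoms and six ground formulas (two groundings of the first formula, four of the second).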

**Markov Logic Networks**

- MLN is template for ground Markov nets
- Probability of a world x:

$$P(x) = \frac{1}{Z} \exp\left( \sum_i w_i \, n_i(x) \right)$$

  where $w_i$ is the weight of formula i and $n_i(x)$ is the number of true groundings of formula i in x
- Typed variables and constants greatly reduce size of ground Markov net
- Functions, existential quantifiers, etc.
- Open question: Infinite domains

**Relation to Statistical Models**

- Special cases:
  - Markov networks
  - Markov random fields
  - Bayesian networks
  - Log-linear models
  - Exponential models
  - Max. entropy models
  - Gibbs distributions
  - Boltzmann machines
  - Logistic regression
  - Hidden Markov models
  - Conditional random fields
- Obtained by making all predicates zero-arity
- Markov logic allows objects to be interdependent (non-i.i.d.)
- Discrete distributions

**Relation to First-Order Logic**

- Infinite weights ⇒ First-order logic
- Satisfiable KB, positive weights ⇒ Satisfying assignments = Modes of distribution
- Markov logic allows contradictions between formulas

**MAP/MPE Inference**

- Problem: Find most likely state of world given evidence

$$\max_y P(y \mid x)$$

  where y is the query and x is the evidence
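The world probability from the Markov Logic Networks slide, a normalized exponential of the weighted counts of true groundings, can be checked by brute force on the Friends & Smokers example, since eight ground atoms give only 256 possible worlds. The sketch below makes assumptions that should be read as such: atoms are encoded as `(predicate, args)` tuples, and `true_grounding_counts`, `score`, and `probability` are hypothetical helper names. Enumerating all worlds to get Z is only feasible for toy domains.

```python
import math
from itertools import product

CONSTANTS = ["A", "B"]
WEIGHTS = (1.5, 1.1)  # weights of the two formulas in the example

# Ground atoms encoded as (predicate, args) tuples (an illustrative choice).
ATOMS = (
    [("Smokes", (c,)) for c in CONSTANTS]
    + [("Cancer", (c,)) for c in CONSTANTS]
    + [("Friends", (a, b)) for a, b in product(CONSTANTS, repeat=2)]
)


def implies(p, q):
    return (not p) or q


def true_grounding_counts(world):
    """Return (n_1(x), n_2(x)): true groundings of each formula in a world."""
    n1 = sum(
        implies(world[("Smokes", (x,))], world[("Cancer", (x,))])
        for x in CONSTANTS
    )
    n2 = sum(
        implies(
            world[("Friends", (x, y))],
            world[("Smokes", (x,))] == world[("Smokes", (y,))],
        )
        for x, y in product(CONSTANTS, repeat=2)
    )
    return n1, n2


def score(world):
    """Unnormalized weight exp(sum_i w_i * n_i(x))."""
    counts = true_grounding_counts(world)
    return math.exp(sum(w * n for w, n in zip(WEIGHTS, counts)))


def all_worlds():
    """Every truth assignment to the ground atoms."""
    for values in product([False, True], repeat=len(ATOMS)):
        yield dict(zip(ATOMS, values))


Z = sum(score(w) for w in all_worlds())  # partition function


def probability(world):
    return score(world) / Z
```

For instance, a world in which Anna smokes but nothing else is true violates one grounding of the first formula, so it is less probable than the all-false world by a factor of exp(-1.5), but it is not impossible.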

**MAP/MPE Inference**

Expanding the objective for the most likely state of world given evidence:

$$\max_y \frac{1}{Z_x} \exp\left( \sum_i w_i \, n_i(x, y) \right)$$

Since $Z_x$ does not depend on y and exp is monotonic, this is equivalent to

$$\max_y \sum_i w_i \, n_i(x, y)$$

- This is just the weighted MaxSAT problem
- Use weighted SAT solver (e.g., MaxWalkSAT [Kautz et al., 1997])
- Potentially faster than logical inference (!)

**The MaxWalkSAT Algorithm**

    for i ← 1 to max-tries do
        solution = random truth assignment
        for j ← 1 to max-flips do
            if ∑ weights(sat. clauses) > threshold then
                return solution
            c ← random unsatisfied clause
            with probability p
                flip a random variable in c
            else
                flip variable in c that maximizes ∑ weights(sat. clauses)
    return failure, best solution found
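The MaxWalkSAT pseudocode above translates fairly directly into Python. The following is a minimal sketch, not a reference implementation: the clause encoding (a weight paired with a list of `(variable, is_positive)` literals), the function names, the default parameter values, and the optional `rng` argument are all assumptions made for illustration. It also assumes positive clause weights.

```python
import random


def satisfied(clause, assignment):
    """A clause holds if any literal matches the current assignment."""
    return any(assignment[var] == positive for var, positive in clause)


def sat_weight(clauses, assignment):
    """Sum of weights of satisfied clauses."""
    return sum(w for w, clause in clauses if satisfied(clause, assignment))


def max_walk_sat(clauses, variables, threshold,
                 max_tries=10, max_flips=1000, p=0.5, rng=None):
    """Return (True, solution) on success, else (False, best solution found)."""
    rng = rng or random.Random()
    best, best_weight = None, float("-inf")
    for _ in range(max_tries):
        # Start each try from a random truth assignment.
        assignment = {v: rng.random() < 0.5 for v in variables}
        for _ in range(max_flips):
            weight = sat_weight(clauses, assignment)
            if weight > best_weight:
                best, best_weight = dict(assignment), weight
            if weight > threshold:
                return True, assignment
            unsat = [c for _, c in clauses if not satisfied(c, assignment)]
            if not unsat:
                break  # all clauses satisfied, threshold cannot be reached
            clause = rng.choice(unsat)
            if rng.random() < p:
                # Random-walk step: flip a random variable in the clause.
                var = rng.choice(clause)[0]
            else:
                # Greedy step: flip the variable giving the highest weight.
                def weight_after_flip(v):
                    assignment[v] = not assignment[v]
                    w = sat_weight(clauses, assignment)
                    assignment[v] = not assignment[v]
                    return w
                var = max((v for v, _ in clause), key=weight_after_flip)
            assignment[var] = not assignment[var]
    return False, best


# Toy weighted clauses: a, (not a or b), not b.
clauses = [
    (1.5, [("a", True)]),
    (1.1, [("a", False), ("b", True)]),
    (0.5, [("b", False)]),
]
found, solution = max_walk_sat(clauses, ["a", "b"], threshold=2.5,
                               rng=random.Random(0))
```

Recomputing the full satisfied weight for every candidate flip keeps the sketch short; a practical solver would update the weight incrementally.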

**But … Memory Explosion**

- Problem: If there are n constants and the highest clause arity is c, the ground network requires $O(n^c)$ memory
- Solution: Exploit sparseness; ground clauses lazily → LazySAT algorithm [Singla & Domingos, 2006]

**Computing Probabilities**

- P(Formula | MLN, C) = ?
- MCMC: Sample worlds, check formula holds
- P(Formula1 | Formula2, MLN, C) = ?
- If Formula2 = Conjunction of ground atoms
  - First construct min subset of network necessary to answer query (generalization of KBMC)
  - Then apply MCMC (or other)
- Can also do lifted inference [Braz et al, 2005]

**Ground Network Construction**

    network ← Ø
    queue ← query nodes
    repeat
        node ← front(queue)
        remove node from queue
        add node to network
        if node not in evidence then
            add neighbors(node) to queue
    until queue = Ø

**But … Insufficient for Logic**

- Problem:
  - Deterministic dependencies break MCMC
  - Near-deterministic ones make it very slow
- Solution: Combine MCMC and WalkSAT → MC-SAT algorithm [Poon & Domingos, 2006]
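The ground network construction loop above is a breadth-first expansion from the query nodes that stops at evidence nodes. A minimal Python sketch follows, assuming nodes are strings and `neighbors` is a caller-supplied function; the name `build_query_network` and the adjacency table `ADJ` (hand-written here for the Friends & Smokers ground network) are illustrative. The check that skips already-visited nodes is an addition not spelled out in the pseudocode; without it the loop would not terminate on a cyclic graph.

```python
from collections import deque


def build_query_network(query_nodes, evidence, neighbors):
    """Collect the part of the ground network needed to answer a query."""
    network = set()
    queue = deque(query_nodes)
    while queue:
        node = queue.popleft()
        if node in network:
            continue  # visited guard (assumption, not in the pseudocode)
        network.add(node)
        # Evidence nodes block further expansion.
        if node not in evidence:
            queue.extend(n for n in neighbors(node) if n not in network)
    return network


# Adjacency of the Friends & Smokers ground network: atoms are linked
# when they appear together in some ground formula.
ADJ = {
    "Cancer(A)": ["Smokes(A)"],
    "Cancer(B)": ["Smokes(B)"],
    "Smokes(A)": ["Cancer(A)", "Smokes(B)", "Friends(A,A)",
                  "Friends(A,B)", "Friends(B,A)"],
    "Smokes(B)": ["Cancer(B)", "Smokes(A)", "Friends(B,B)",
                  "Friends(A,B)", "Friends(B,A)"],
    "Friends(A,A)": ["Smokes(A)"],
    "Friends(B,B)": ["Smokes(B)"],
    "Friends(A,B)": ["Smokes(A)", "Smokes(B)"],
    "Friends(B,A)": ["Smokes(A)", "Smokes(B)"],
}

# Query Cancer(A) given evidence about Smokes(A).
sub_network = build_query_network(["Cancer(A)"], {"Smokes(A)"},
                                  ADJ.__getitem__)
```

With Smokes(A) observed, only Cancer(A) and Smokes(A) are needed; without evidence, the expansion reaches all eight atoms.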
