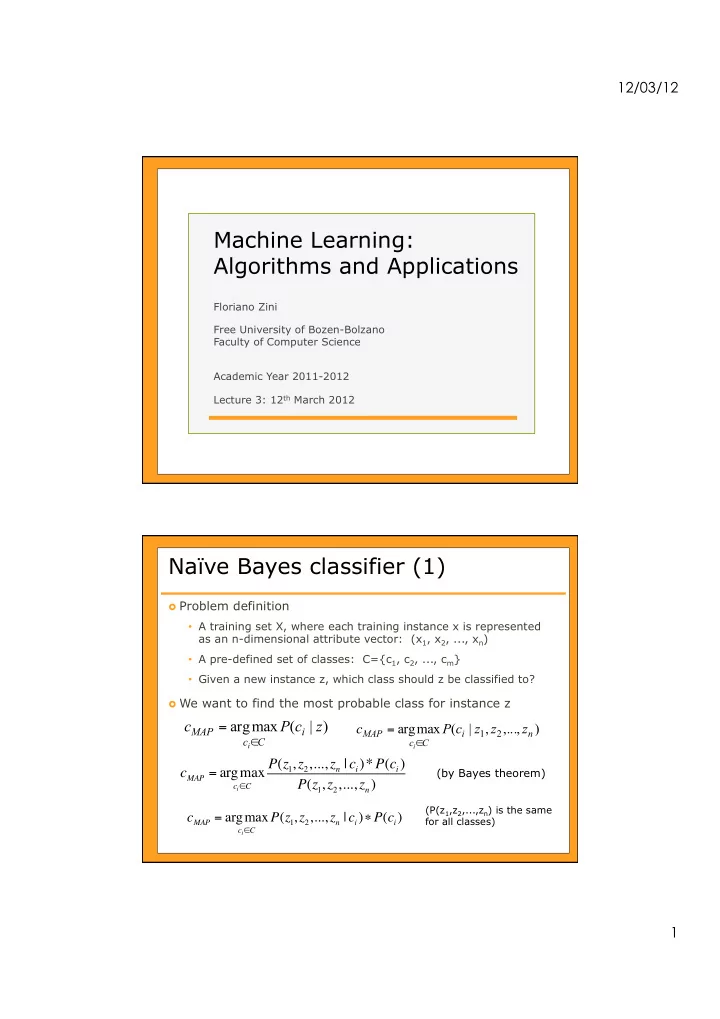

12/03/12

Machine Learning: Algorithms and Applications
Floriano Zini
Free University of Bozen-Bolzano, Faculty of Computer Science
Academic Year 2011-2012
Lecture 3: 12th March 2012

Naïve Bayes classifier (1)

Problem definition
• A training set X, where each training instance x is represented as an n-dimensional attribute vector $(x_1, x_2, \ldots, x_n)$
• A predefined set of classes $C = \{c_1, c_2, \ldots, c_m\}$
• Given a new instance z, which class should z be classified to?

We want to find the most probable class for instance z:

$$c_{MAP} = \arg\max_{c_i \in C} P(c_i \mid z) = \arg\max_{c_i \in C} P(c_i \mid z_1, z_2, \ldots, z_n)$$

By Bayes' theorem:

$$c_{MAP} = \arg\max_{c_i \in C} \frac{P(z_1, z_2, \ldots, z_n \mid c_i) \cdot P(c_i)}{P(z_1, z_2, \ldots, z_n)}$$

Since $P(z_1, z_2, \ldots, z_n)$ is the same for all classes:

$$c_{MAP} = \arg\max_{c_i \in C} P(z_1, z_2, \ldots, z_n \mid c_i) \cdot P(c_i)$$

Naïve Bayes classifier (2)

Assumption in the Naïve Bayes classifier: the attributes are conditionally independent given the classification:

$$P(z_1, z_2, \ldots, z_n \mid c_i) = \prod_{j=1}^{n} P(z_j \mid c_i)$$

The Naïve Bayes classifier finds the most probable class for z:

$$c_{NB} = \arg\max_{c_i \in C} P(c_i) \cdot \prod_{j=1}^{n} P(z_j \mid c_i)$$

Naïve Bayes classifier – Algorithm

The learning (training) phase (given a training set)
For each class $c_i \in C$:
• Estimate the prior probability $P(c_i)$
• For each attribute value $z_j$, estimate the probability of that attribute value given class $c_i$: $P(z_j \mid c_i)$

The classification phase
• For each class $c_i \in C$, compute $P(c_i) \cdot \prod_{j=1}^{n} P(z_j \mid c_i)$
• Select the most probable class $c^*$:

$$c^* = \arg\max_{c_i \in C} P(c_i) \cdot \prod_{j=1}^{n} P(z_j \mid c_i)$$
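
The two phases above can be sketched in Python for categorical attributes, using plain relative-frequency (maximum likelihood) estimates. This is a minimal illustration, not the lecture's own code: the function names `train_nb` and `classify_nb` and the tiny weather-style dataset are hypothetical.

```python
from collections import Counter, defaultdict


def train_nb(instances, labels):
    """Learning phase: estimate P(c_i) and P(x_j | c_i) by relative frequencies."""
    class_counts = Counter(labels)
    priors = {c: k / len(labels) for c, k in class_counts.items()}
    # value_counts[c][j][v]: number of class-c instances whose j-th attribute equals v
    value_counts = defaultdict(lambda: defaultdict(Counter))
    for x, c in zip(instances, labels):
        for j, v in enumerate(x):
            value_counts[c][j][v] += 1

    def cond_prob(v, j, c):
        # Counter returns 0 for unseen values, so this estimate can be exactly 0.0
        return value_counts[c][j][v] / class_counts[c]

    return priors, cond_prob


def classify_nb(z, priors, cond_prob):
    """Classification phase: argmax over c_i of P(c_i) * prod_j P(z_j | c_i)."""
    def score(c):
        s = priors[c]
        for j, v in enumerate(z):
            s *= cond_prob(v, j, c)
        return s
    return max(priors, key=score)


# Hypothetical toy data: (Outlook, Temperature) -> Play
instances = [("Sunny", "Hot"), ("Sunny", "Mild"), ("Rainy", "Mild"),
             ("Rainy", "Cool"), ("Sunny", "Cool")]
labels = ["No", "No", "Yes", "Yes", "Yes"]
priors, cond_prob = train_nb(instances, labels)
print(classify_nb(("Rainy", "Mild"), priors, cond_prob))  # Yes
```

Training is a single counting pass over the data, and classification multiplies one factor per attribute. Because unseen attribute values get probability 0 here, one zero factor wipes out a class score; this is the issue the lecture later addresses with a Bayesian estimate of $P(x_j \mid c_i)$.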

Naïve Bayes classifier – Example (1)

| Rec. ID | Age | Income | Student | Credit_Rating | Buy_Computer |
|---|---|---|---|---|---|
| 1 | Young | High | No | Fair | No |
| 2 | Young | High | No | Excellent | No |
| 3 | Medium | High | No | Fair | Yes |
| 4 | Old | Medium | No | Fair | Yes |
| 5 | Old | Low | Yes | Fair | Yes |
| 6 | Old | Low | Yes | Excellent | No |
| 7 | Medium | Low | Yes | Excellent | Yes |
| 8 | Young | Medium | No | Fair | No |
| 9 | Young | Low | Yes | Fair | Yes |
| 10 | Old | Medium | Yes | Fair | Yes |
| 11 | Young | Medium | Yes | Excellent | Yes |
| 12 | Medium | Medium | No | Excellent | Yes |
| 13 | Medium | High | Yes | Fair | Yes |
| 14 | Old | Medium | No | Excellent | No |

Will a young student with medium income and fair credit rating buy a computer?

http://www.cs.sunysb.edu/~cse634/lecture_notes/07classification.pdf

Naïve Bayes classifier – Example (2)

Representation of the problem
• z = (Age=Young, Income=Medium, Student=Yes, Credit_Rating=Fair)
• Two classes: $c_1$ (buys a computer) and $c_2$ (does not buy a computer)

Compute the prior probability of each class
• $P(c_1) = 9/14$
• $P(c_2) = 5/14$

Compute the probability of each attribute value given each class
• $P(\text{Age=Young} \mid c_1) = 2/9$; $P(\text{Age=Young} \mid c_2) = 3/5$
• $P(\text{Income=Medium} \mid c_1) = 4/9$; $P(\text{Income=Medium} \mid c_2) = 2/5$
• $P(\text{Student=Yes} \mid c_1) = 6/9$; $P(\text{Student=Yes} \mid c_2) = 1/5$
• $P(\text{Credit\_Rating=Fair} \mid c_1) = 6/9$; $P(\text{Credit\_Rating=Fair} \mid c_2) = 2/5$
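
The priors and conditional probabilities above can be recomputed by counting rows of the table. The sketch below uses exact fractions so the values can be compared directly with the slide; the helper names `prior` and `cond` are illustrative, and "Yes"/"No" stand for $c_1$/$c_2$.

```python
from fractions import Fraction

# Rows of the training table: (Age, Income, Student, Credit_Rating, Buy_Computer)
rows = [
    ("Young", "High", "No", "Fair", "No"),
    ("Young", "High", "No", "Excellent", "No"),
    ("Medium", "High", "No", "Fair", "Yes"),
    ("Old", "Medium", "No", "Fair", "Yes"),
    ("Old", "Low", "Yes", "Fair", "Yes"),
    ("Old", "Low", "Yes", "Excellent", "No"),
    ("Medium", "Low", "Yes", "Excellent", "Yes"),
    ("Young", "Medium", "No", "Fair", "No"),
    ("Young", "Low", "Yes", "Fair", "Yes"),
    ("Old", "Medium", "Yes", "Fair", "Yes"),
    ("Young", "Medium", "Yes", "Excellent", "Yes"),
    ("Medium", "Medium", "No", "Excellent", "Yes"),
    ("Medium", "High", "Yes", "Fair", "Yes"),
    ("Old", "Medium", "No", "Excellent", "No"),
]
z = ("Young", "Medium", "Yes", "Fair")  # the query instance


def prior(c):
    """P(c) = (number of rows with class c) / (number of rows)."""
    return Fraction(sum(r[-1] == c for r in rows), len(rows))


def cond(j, value, c):
    """P(attribute j = value | c), counted among the rows of class c."""
    in_class = [r for r in rows if r[-1] == c]
    return Fraction(sum(r[j] == value for r in in_class), len(in_class))


for c in ("Yes", "No"):
    print(c, prior(c), [str(cond(j, v, c)) for j, v in enumerate(z)])
# Yes 9/14 ['2/9', '4/9', '2/3', '2/3']
# No 5/14 ['3/5', '2/5', '1/5', '2/5']
```

`Fraction` reduces automatically, so the slide's 6/9 is printed as 2/3.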

Naïve Bayes classifier – Example (3)

Compute the likelihood of instance z given each class
• For class $c_1$:
$$P(z \mid c_1) = P(\text{Age=Young} \mid c_1) \cdot P(\text{Income=Medium} \mid c_1) \cdot P(\text{Student=Yes} \mid c_1) \cdot P(\text{Credit\_Rating=Fair} \mid c_1) = \frac{2}{9} \cdot \frac{4}{9} \cdot \frac{6}{9} \cdot \frac{6}{9} \approx 0.044$$
• For class $c_2$:
$$P(z \mid c_2) = P(\text{Age=Young} \mid c_2) \cdot P(\text{Income=Medium} \mid c_2) \cdot P(\text{Student=Yes} \mid c_2) \cdot P(\text{Credit\_Rating=Fair} \mid c_2) = \frac{3}{5} \cdot \frac{2}{5} \cdot \frac{1}{5} \cdot \frac{2}{5} \approx 0.019$$

Find the most probable class
• For class $c_1$: $P(c_1) \cdot P(z \mid c_1) = (9/14) \cdot 0.044 \approx 0.028$
• For class $c_2$: $P(c_2) \cdot P(z \mid c_2) = (5/14) \cdot 0.019 \approx 0.007$

→ Conclusion: the classifier predicts that z (a young student with medium income and fair credit rating) will buy a computer!

Naïve Bayes classifier – Issues (1)

What happens if no training instance associated with class $c_i$ has attribute value $x_j$? E.g., in the "buy computer" example, no training instance of class $c_2$ has Age=Medium. Then

$$P(x_j \mid c_i) = \frac{n(c_i, x_j)}{n(c_i)} = 0, \quad \text{and hence} \quad P(c_i) \cdot \prod_{j=1}^{n} P(x_j \mid c_i) = 0$$

Solution: use a Bayesian approach (the m-estimate) to estimate $P(x_j \mid c_i)$:

$$P(x_j \mid c_i) = \frac{n(c_i, x_j) + m p}{n(c_i) + m}$$

• $n(c_i)$: number of training instances associated with class $c_i$
• $n(c_i, x_j)$: number of training instances associated with class $c_i$ that have attribute value $x_j$
• $p$: a prior estimate for $P(x_j \mid c_i)$
→ Assume uniform priors: $p = 1/k$ if attribute $f_j$ has $k$ possible values
• $m$: a weight given to the prior
→ This augments the $n(c_i)$ actual observations with $m$ additional virtual samples distributed according to $p$
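
The arithmetic of Example (3) and the effect of the smoothed estimate can be checked with exact fractions. In this sketch `m_estimate` is a hypothetical helper name, and m = 3 is an illustrative choice, not one made in the lecture: with $p = 1/3$ for the three Age values it gives $m p = 1$, i.e. add-one (Laplace) smoothing.

```python
from fractions import Fraction
from math import prod

# Example (3): likelihoods and unnormalized posteriors from the Example (2) probabilities
lik_c1 = prod([Fraction(2, 9), Fraction(4, 9), Fraction(6, 9), Fraction(6, 9)])
lik_c2 = prod([Fraction(3, 5), Fraction(2, 5), Fraction(1, 5), Fraction(2, 5)])
score_c1 = Fraction(9, 14) * lik_c1
score_c2 = Fraction(5, 14) * lik_c2
print(f"{float(lik_c1):.3f} {float(lik_c2):.3f}")      # 0.044 0.019
print(f"{float(score_c1):.3f} {float(score_c2):.3f}")  # 0.028 0.007


def m_estimate(n_ci_xj, n_ci, m, p):
    """(n(c_i, x_j) + m*p) / (n(c_i) + m): smoothed estimate of P(x_j | c_i)."""
    return (n_ci_xj + m * p) / (n_ci + m)


# Age has k = 3 values, so p = 1/3; m = 3 is an assumed, illustrative weight
p = Fraction(1, 3)
print(m_estimate(0, 5, 3, p))  # P(Age=Medium | c_2): 1/8 instead of 0
print(m_estimate(3, 5, 3, p))  # P(Age=Young | c_2): 1/2 instead of 3/5
```

The smoothed estimate is never 0, and it moves the observed frequencies toward the uniform prior $p$; larger m pulls them further.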

Naïve Bayes classifier – Issues (2)

• $P(x_j \mid c_i) < 1$ for every attribute value $x_j$ and class $c_i$
• So, when the number of attributes n is very large, the product of these probabilities tends to 0 (in floating-point arithmetic it can even underflow to exactly 0):

$$\lim_{n \to \infty} \prod_{j=1}^{n} P(x_j \mid c_i) = 0$$

Solution: use a logarithmic function of the probability. Since log is monotonically increasing, the selected class does not change:

$$c_{NB} = \arg\max_{c_i \in C} \log\left[P(c_i) \cdot \prod_{j=1}^{n} P(x_j \mid c_i)\right]$$

$$c_{NB} = \arg\max_{c_i \in C} \left(\log P(c_i) + \sum_{j=1}^{n} \log P(x_j \mid c_i)\right)$$

Naïve Bayes classifier – Summary
• One of the most practical learning methods
• Based on Bayes' theorem
• Parameter estimation for Naïve Bayes models uses maximum likelihood estimation
• Computationally very fast
→ Training: only one pass over the training set
→ Classification: linear in the number of attributes
• Despite its conditional independence assumption, the Naïve Bayes classifier performs well in several application domains

When to use?
• A moderate or large training set is available
• Instances are represented by a large number of attributes
• The attributes that describe instances are conditionally independent given the classification
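
The underflow problem and the log-space fix can be seen in a few lines of Python. The 400 attributes with probability 0.1 each are a hypothetical case chosen to force underflow in double precision; `log_score` is an illustrative helper name, and it assumes every probability is strictly positive (e.g. after smoothing).

```python
import math


def log_score(prior, conditionals):
    """log P(c_i) + sum_j log P(x_j | c_i); every probability must be > 0."""
    return math.log(prior) + sum(math.log(p) for p in conditionals)


# Hypothetical case: 400 attributes, each with P(x_j | c_i) = 0.1
probs = [0.1] * 400
print(math.prod(probs))       # 0.0: the raw product underflows double precision
print(log_score(0.5, probs))  # about -921.7: finite and still comparable

# The log form keeps the decision of the buy-computer example (Example (3))
s1 = log_score(9 / 14, [2 / 9, 4 / 9, 6 / 9, 6 / 9])
s2 = log_score(5 / 14, [3 / 5, 2 / 5, 1 / 5, 2 / 5])
print("c1" if s1 > s2 else "c2")  # c1
```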

Linear regression

Linear regression – Introduction

Goal: to predict a real-valued output given an input instance.
A simple but effective learning technique when the target function is a linear function.
The learning problem is to learn (i.e., approximate) a real-valued function f:
f: X → Y
• X: the input domain (an n-dimensional vector space, $\mathbb{R}^n$)
• Y: the output domain (the real numbers, $\mathbb{R}$)
• f: the target function to be learned (a linear mapping function)

$$f(x) = w_0 + w_1 x_1 + w_2 x_2 + \cdots + w_n x_n = w_0 + \sum_{i=1}^{n} w_i x_i \quad (w_i, x_i \in \mathbb{R})$$

Essentially, to learn the weights vector $w = (w_0, w_1, w_2, \ldots, w_n)$.

Linear regression – Example

What is the linear function f(x)?

[Figure: plot of the training points, f(x) against x]

| x | f(x) |
|---|---|
| 0.13 | -0.91 |
| 1.02 | -0.17 |
| 3.17 | 1.61 |
| -2.76 | -3.31 |
| 1.44 | 0.18 |
| 5.28 | 3.36 |
| -1.74 | -2.46 |
| 7.93 | 5.56 |
| ... | ... |

E.g., f(x) = -1.02 + 0.83x

Linear regression – Training / test instances

For each training instance $x = (x_1, x_2, \ldots, x_n) \in X$, where $x_i \in \mathbb{R}$:
• The desired (target) output value $c_x$ ($\in \mathbb{R}$)
• The actual output value $y_x = w_0 + \sum_{i=1}^{n} w_i x_i$
→ Here, $w_i$ are the system's current estimates of the weights
→ The actual output value $y_x$ should (approximately) equal $c_x$

For a test instance $z = (z_1, z_2, \ldots, z_n)$:
• Predict its output value by applying the learned target function f
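
For a one-dimensional input the actual output is simply $y_x = w_0 + w_1 x$. The sketch below assumes the example weights $w_0 = -1.02$ and $w_1 = 0.83$ and compares $y_x$ with the target $c_x$ for each table row; the function name `f` and its default arguments are illustrative.

```python
# Training points from the example table: inputs x and targets c_x
xs = [0.13, 1.02, 3.17, -2.76, 1.44, 5.28, -1.74, 7.93]
cs = [-0.91, -0.17, 1.61, -3.31, 0.18, 3.36, -2.46, 5.56]


def f(x, w0=-1.02, w1=0.83):
    """Candidate linear function f(x) = w0 + w1 * x (the example weights)."""
    return w0 + w1 * x


# Desired output c_x minus actual output y_x, for every training point
residuals = [c - f(x) for x, c in zip(xs, cs)]
print(max(abs(r) for r in residuals))  # below 0.01: the table values match f up to rounding
```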

Linear regression – Error function

The learning algorithm requires an error function
→ To measure the error made by the system in the training phase

Definition of the training square error E
• Error computed on each training example x:
$$E(x) = \frac{1}{2}(c_x - y_x)^2 = \frac{1}{2}\left(c_x - w_0 - \sum_{i=1}^{n} w_i x_i\right)^2$$
• Error computed on the entire training set X:
$$E = \sum_{x \in X} E(x) = \frac{1}{2}\sum_{x \in X}(c_x - y_x)^2 = \frac{1}{2}\sum_{x \in X}\left(c_x - w_0 - \sum_{i=1}^{n} w_i x_i\right)^2$$

Least-square linear regression

Learning the target function f is equivalent to learning the weights vector w that minimizes the training square error E
→ This is why the approach is called "Least-Square Linear Regression"

Training phase
• Initialize the weights vector w (small random values)
• Compute the training error E
• Update the weights vector w according to the delta rule
• Repeat until converging to a (locally) minimal error E

Prediction phase
For a new instance z, the (predicted) output value is:
$$f(z) = w^*_0 + \sum_{i=1}^{n} w^*_i z_i$$
where $w^* = (w^*_0, w^*_1, \ldots, w^*_n)$ is the learned weights vector.
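
The training square error E can be computed directly from its definition. This sketch reuses the eight points of the regression example and compares the all-zero weights with the example weights (-1.02, 0.83); `predict` and `training_error` are illustrative names, and w is stored as `[w_0, w_1, ..., w_n]`.

```python
xs = [0.13, 1.02, 3.17, -2.76, 1.44, 5.28, -1.74, 7.93]
cs = [-0.91, -0.17, 1.61, -3.31, 0.18, 3.36, -2.46, 5.56]


def predict(w, x):
    """y_x = w_0 + sum_i w_i x_i, with w = [w_0, w_1, ..., w_n]."""
    return w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))


def training_error(w, X, targets):
    """E = sum over x in X of E(x) = 1/2 * sum (c_x - y_x)^2."""
    return 0.5 * sum((c - predict(w, x)) ** 2 for x, c in zip(X, targets))


X = [[x] for x in xs]  # each instance is a 1-dimensional attribute vector
print(training_error([0.0, 0.0], X, cs))     # about 31.35 for the all-zero weights
print(training_error([-1.02, 0.83], X, cs))  # far smaller for the example weights
```

The factor 1/2 only rescales E; it is there so that the derivative of $(c_x - y_x)^2$ produces no factor 2, which keeps the delta rule update in its simple form.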

The delta rule

The delta rule updates the weights vector w in the direction that decreases the training error E
• η is the learning rate (a small positive constant)
→ It determines how much the weights are changed at each training step
• Instance-to-instance update: $w_i \leftarrow w_i + \eta (c_x - y_x) x_i$
• Batch update: $w_i \leftarrow w_i + \eta \sum_{x \in X} (c_x - y_x) x_i$

Other names of the delta rule
• LMS (least mean square) rule
• Adaline rule
• Widrow-Hoff rule

```
LSLR_batch(X, η)
  for each attribute i              // i = 0..n, with x_0 = 1 so that w_0 is learned too
    w_i ← an initial (small) random value
  while not CONVERGENCE
    for each attribute i
      delta_w_i ← 0
    for each training example x ∈ X
      compute the actual output value y_x
      for each attribute i
        delta_w_i ← delta_w_i + η(c_x − y_x)x_i
    for each attribute i
      w_i ← w_i + delta_w_i
  end while
  return w
```
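
The LSLR_batch pseudocode can be turned into runnable Python as a minimal sketch. The names `lslr_batch`, `tol`, `max_epochs` and `seed`, the initialization range, and the stopping test (largest weight change below `tol`) are assumptions standing in for the unspecified CONVERGENCE condition. Run on the eight points of the regression example, it should recover weights close to f(x) = -1.02 + 0.83x.

```python
import random


def lslr_batch(X, targets, eta=0.01, tol=1e-9, max_epochs=10_000, seed=0):
    """Batch least-square linear regression trained with the delta rule.

    X: list of input vectors, each a list of n floats; targets: the values c_x.
    Returns [w_0, w_1, ..., w_n]; w_0 is paired with a constant input x_0 = 1.
    """
    rng = random.Random(seed)
    n = len(X[0])
    # Initialize every weight with a small random value
    w = [rng.uniform(-0.05, 0.05) for _ in range(n + 1)]
    for _ in range(max_epochs):
        delta_w = [0.0] * (n + 1)
        for x, c in zip(X, targets):
            xs = [1.0] + list(x)  # prepend x_0 = 1 for the bias weight w_0
            y = sum(wi * xi for wi, xi in zip(w, xs))  # actual output y_x
            for i in range(n + 1):
                delta_w[i] += eta * (c - y) * xs[i]
        for i in range(n + 1):
            w[i] += delta_w[i]
        # Assumed stand-in for CONVERGENCE: the largest weight change is tiny
        if max(abs(d) for d in delta_w) < tol:
            break
    return w


# The eight training points of the regression example
points_x = [0.13, 1.02, 3.17, -2.76, 1.44, 5.28, -1.74, 7.93]
points_c = [-0.91, -0.17, 1.61, -3.31, 0.18, 3.36, -2.46, 5.56]
w = lslr_batch([[x] for x in points_x], points_c)
print([round(v, 2) for v in w])  # expected to be close to [-1.02, 0.83]
```

The learning rate matters in batch mode because the update sums over all examples: on these points η = 0.01 converges, while a larger value such as η = 0.05 makes the updates overshoot and diverge. The instance-to-instance variant would instead apply `w[i] += eta * (c - y) * xs[i]` inside the loop over examples, without accumulating `delta_w`.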
