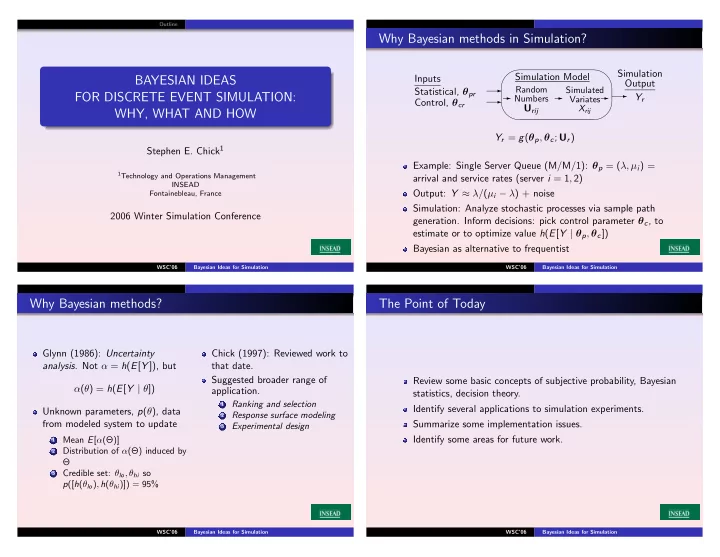

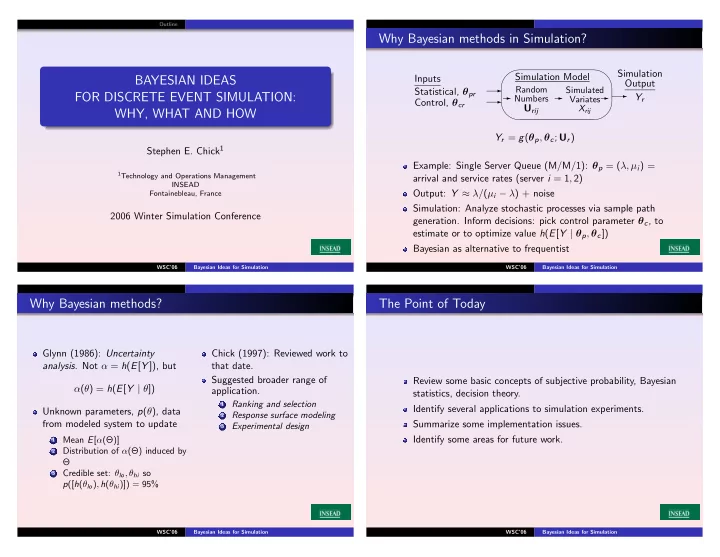

BAYESIAN IDEAS FOR DISCRETE EVENT SIMULATION: WHY, WHAT AND HOW

Stephen E. Chick
Technology and Operations Management, INSEAD, Fontainebleau, France
2006 Winter Simulation Conference (WSC'06)

Why Bayesian methods in Simulation?

[Diagram: the simulation inputs (statistical $\theta_{pr}$, control $\theta_{cr}$) feed the simulation model, which turns random numbers $U_{rij}$ into simulated variates $X_{rij}$ and produces the output $Y_r$.]

$$Y_r = g(\theta_p, \theta_c; U_r)$$

- Example: single-server queue (M/M/1). $\theta_p = (\lambda, \mu_i)$ = arrival and service rates (server $i = 1, 2$). Output: $Y \approx \lambda / (\mu_i - \lambda)$ + noise.
- Simulation: analyze stochastic processes via sample path generation.
- Inform decisions: pick the control parameter $\theta_c$, to estimate or to optimize the value $h(E[Y \mid \theta_p, \theta_c])$.
- Bayesian as an alternative to frequentist.

Why Bayesian methods?

Glynn (1986): Uncertainty analysis. Not $\alpha = h(E[Y])$, but $\alpha(\theta) = h(E[Y \mid \theta])$. Unknown parameters, $p(\theta)$; data from the modeled system to update.

1. Mean $E[\alpha(\Theta)]$
2. Distribution of $\alpha(\Theta)$ induced by $\Theta$
3. Credible set: $\theta_{lo}, \theta_{hi}$ so that $p([h(\theta_{lo}), h(\theta_{hi})]) = 95\%$

Chick (1997): Reviewed work to that date. Suggested a broader range of application.

1. Ranking and selection
2. Response surface modeling
3. Experimental design

The Point of Today

- Review some basic concepts of subjective probability, Bayesian statistics, decision theory.
- Identify several applications to simulation experiments.
- Summarize some implementation issues.
- Identify some areas for future work.
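The uncertainty analysis on the "Why Bayesian methods?" slide can be sketched for the M/M/1 example. This is a minimal illustration under stated assumptions, not the method from the talk: the Gamma priors, the made-up data summaries (100 arrivals in 50 time units, 100 services in 25), and every function name are hypothetical, and the closed form $\lambda / (\mu - \lambda)$ stands in for a simulation run of $g$. It reports the mean of $\alpha(\Theta)$ and an equal-tailed 95% interval of its induced distribution.

```python
import random
import statistics


def posterior_rate_draw(rng, n_obs, total_time, a0=1.0, b0=1.0):
    """Draw a rate from a Gamma(a0 + n_obs, rate=b0 + total_time) posterior.

    Assumes exponential observations and a Gamma(a0, b0) prior; both are
    illustrative choices, not taken from the slides.
    """
    shape = a0 + n_obs
    rate = b0 + total_time
    return rng.gammavariate(shape, 1.0 / rate)  # second argument is a scale


def mean_in_system(lam, mu):
    """alpha(theta) for a stable M/M/1 queue: lambda / (mu - lambda)."""
    return lam / (mu - lam) if lam < mu else float("inf")


def uncertainty_analysis(n_draws=5000, seed=1):
    """Propagate posterior uncertainty about (lambda, mu) through alpha."""
    rng = random.Random(seed)
    alphas = []
    for _ in range(n_draws):
        lam = posterior_rate_draw(rng, n_obs=100, total_time=50.0)  # hypothetical data
        mu = posterior_rate_draw(rng, n_obs=100, total_time=25.0)   # hypothetical data
        alphas.append(mean_in_system(lam, mu))
    alphas.sort()
    stable = [a for a in alphas if a != float("inf")]
    lo = alphas[int(0.025 * n_draws)]   # equal-tailed 95% interval
    hi = alphas[int(0.975 * n_draws)]
    return statistics.mean(stable), lo, hi, len(stable) / n_draws


if __name__ == "__main__":
    mean_alpha, lo, hi, frac_stable = uncertainty_analysis()
    print(f"E[alpha] ~ {mean_alpha:.3f}, 95% interval ~ [{lo:.3f}, {hi:.3f}]")
    print(f"fraction of draws with a stable queue: {frac_stable:.4f}")
```

In a real study each draw of $(\lambda, \mu)$ would drive one or more simulation replications rather than a formula, so the spread of the results would mix parameter uncertainty with simulation noise.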

Outline

1. Getting down to brass tacks
   - Subjective and Bayesian methods
   - Assessing prior probability
   - Asymptotic Theorems
   - Decisions, loss, and value of information
2. Applications
   - Uncertainty Analysis
   - Selecting from Multiple Candidate Distributions
   - Selecting the Best System
   - Metamodels
3. Implementation
4. Summary

Related work

See the WSC (2006) paper and the chapter in the Henderson and Nelson book for a long (but incomplete) citation list for work over the last 10 years on:

- Formal Bayes or decision theoretic theory
- Entropy and Kullback-Leibler Discrepancy
- Applications: scheduling, insurance, finance, traffic modeling, public health, waterway safety, supply chain and other areas
- Bayes and deterministic simulations
- Favorite books on subjective and Bayesian probability and decision theory

Public Policy and Health Economics: increasingly uses simulation (in addition to decision trees, Markov chains), and increasingly requires probabilistic sensitivity analysis.

Getting down to brass tacks

Probability of 7 heads in the first 10 flips? How to approach the problem...

Comte d'Alembert (18th cent.)

- Indifference says maybe 1/11?
- But wait, for one flip, probability of heads is 1/2?

See Savage (1972) and Kreps (1988).

Getting down to brass tacks

Probability of 7 heads in the first 10 flips?

Dwight (an unreconstructed frequentist)

$$\frac{10!}{7!\,3!}\,\theta^{7}(1-\theta)^{3}, \quad \text{where } \theta = \lim_{n \to \infty} \frac{X_1 + \dots + X_n}{n} \text{ (a.e.)}.$$

- If we rent Madison Square Garden and flip the tack repeatedly, I can estimate $\theta$ for you.
- What confidence and how accurately do you need to know $\theta$?

Hmmm... Let's reformulate the question...

Why am I a Bayesian?

Will you accept the following bet now? You get \$100 if there are 7 heads, but you pay \$5 if not.

More from Dwight:

- I can't answer until I have a good idea of what $\theta$ is.
- Guessing wouldn't be scientific.

Why am I a Bayesian?

Ralph

Probability of 7 heads in the first 10 flips?

- I'm willing to use probability for personal judgments:

$$\int_0^1 \frac{10!}{7!\,3!}\,\theta^{7}(1-\theta)^{3}\,\pi(\theta)\,d\theta, \quad \text{where } \pi(\theta) \text{ is a prior probability}.$$

- I'll update with Bayes' rule, to get the posterior probability, writing $\mathbf{x}_n = (x_1, \dots, x_n)$:

$$p(\theta \mid \mathbf{x}_n) = \frac{\pi(\theta)\,p(\mathbf{x}_n \mid \theta)}{p(\mathbf{x}_n)} = \frac{\pi(\theta)\prod_{i=1}^{n} p(x_i \mid \theta)}{\int p(\mathbf{x}_n \mid \theta)\,d\pi(\theta)}$$
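Ralph's integral has a closed form when the prior is a Beta distribution, which makes the bet easy to evaluate. The sketch below assumes a Beta(a, b) prior (a choice not stated on the slides), and the helper names and the observed data (3 heads, 1 tail) are hypothetical. With the uniform prior Beta(1, 1) the integral equals 1/11, the indifference answer from the d'Alembert slide, and the bet has positive expected value.

```python
from math import comb, exp, lgamma


def log_beta(a, b):
    """Log of the Beta function B(a, b), computed via log-gamma."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)


def prob_k_heads(k, n, a=1.0, b=1.0):
    """Integral of C(n,k) theta^k (1-theta)^(n-k) against a Beta(a, b) prior."""
    return comb(n, k) * exp(log_beta(a + k, b + n - k) - log_beta(a, b))


def update(a, b, heads, tails):
    """Bayes' rule for a Beta prior and coin-flip data (conjugate update)."""
    return a + heads, b + tails


def bet_value(p, win=100.0, lose=5.0):
    """Expected payoff of the bet: win with probability p, lose otherwise."""
    return p * win - (1.0 - p) * lose


p_uniform = prob_k_heads(7, 10)                 # uniform prior, Beta(1, 1)
a1, b1 = update(1.0, 1.0, heads=3, tails=1)     # hypothetical observed flips
p_updated = prob_k_heads(7, 10, a1, b1)         # 7 heads in the next 10 flips

if __name__ == "__main__":
    print(f"uniform prior: p = {p_uniform:.6f}, bet value = {bet_value(p_uniform):.2f}")
    print(f"after 3 heads, 1 tail: p = {p_updated:.6f}, bet value = {bet_value(p_updated):.2f}")
```

Unlike Dwight, Ralph can answer the bet question immediately; data only changes the answer through the posterior.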

Why am I a Bayesian?

Lenny

Probability of 7 heads in the first 10 flips?

- Fair bets: I set $p(E_1) > p(E_2)$ if I prefer the first bet.

[Figure: two bets, labeled 1) and 2).]

- Exchangeability (weaker than i.i.d.):

$$p(x_1, x_2, \dots, x_n) = p(x_{s_1}, x_{s_2}, \dots, x_{s_n})$$

for permutations $s$ on $\{1, 2, \dots, n\}$, for arbitrary $n$.

Why am I a Bayesian?

Lenny

- Exchangeability plus conceptually infinite $N$ imply

$$\lim_{N \to \infty} p(\text{7 heads in first 10 flips}) = \int_0^1 \frac{10!}{7!\,3!}\,\theta^{7}(1-\theta)^{3}\,dF(\theta)$$

- de Finetti (1990)-like representation.
- Ralph assumed conditional i.i.d., while Lenny derives the formula from exchangeability.
- Probability defined by bet preferences, not repeated outcomes.
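The claim that exchangeability is weaker than i.i.d. can be checked numerically on a small case. The sketch below assumes a hypothetical two-point mixing distribution $F$ (mass 1/2 each on $\theta = 0.2$ and $\theta = 0.8$); the names are illustrative only. Under the mixture every reordering of a sequence has the same probability, yet the flips are not independent.

```python
from itertools import permutations

# Hypothetical mixing distribution F: theta -> probability mass.
F = {0.2: 0.5, 0.8: 0.5}


def seq_prob(xs, mixing=F):
    """p(x_1, ..., x_n) under a mixture of i.i.d. Bernoulli(theta) sequences."""
    total = 0.0
    for theta, weight in mixing.items():
        p = 1.0
        for x in xs:
            p *= theta if x == 1 else 1.0 - theta
        total += weight * p
    return total


xs = (1, 1, 0, 1)
perm_probs = [seq_prob(p) for p in permutations(xs)]
spread = max(perm_probs) - min(perm_probs)   # zero up to rounding: exchangeable

p_one = seq_prob((1,))       # p(heads on one flip)
p_two = seq_prob((1, 1))     # p(heads on both of two flips)

if __name__ == "__main__":
    print(f"spread over permutations: {spread:.2e}")
    print(f"p(H) = {p_one:.2f}, p(H, H) = {p_two:.2f}, p(H)^2 = {p_one ** 2:.2f}")
```

Here `p_two` exceeds `p_one ** 2` because an early head makes the larger $\theta$ more plausible, which is the learning that an i.i.d. model with known $\theta$ rules out.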
Implication for Simulation: $Y_r = g(\theta_p, \theta_e, \theta_c; U_r)$

[Diagram: the simulation inputs (statistical $\theta_{pr}$, control $\theta_{cr}$, environmental $\theta_{er}$) feed the simulation model, which turns random numbers $U_{rij}$ into simulated variates $X_{rij}$ and produces the output $Y_r$. Below it, a metamodel with parameters $\Psi$ maps the inputs (statistical $\theta_p$, control $\theta_c$, environmental $\theta_e$) to the output $Y$.]

- Input selection: infinite exchangeable sequence $X_{ij}$ from the modeled system to infer the $i$th statistical input, $\theta_{pi}$.
- Metamodeling: infinite exchangeable $Y_r$ to infer $\Psi$.
