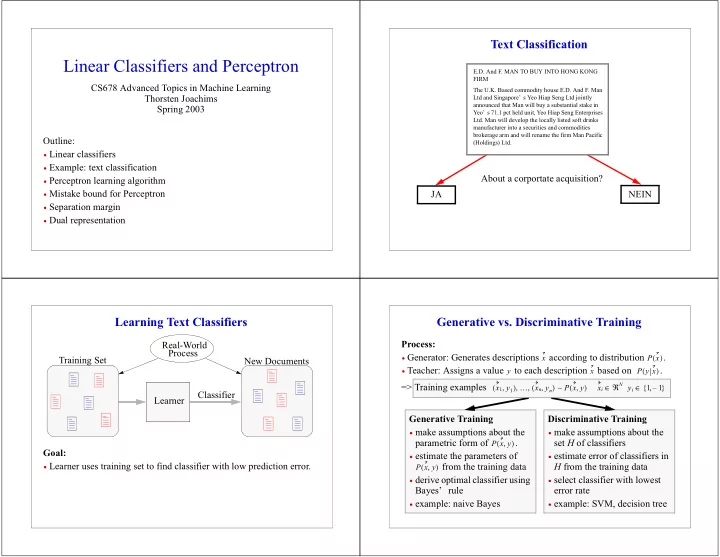

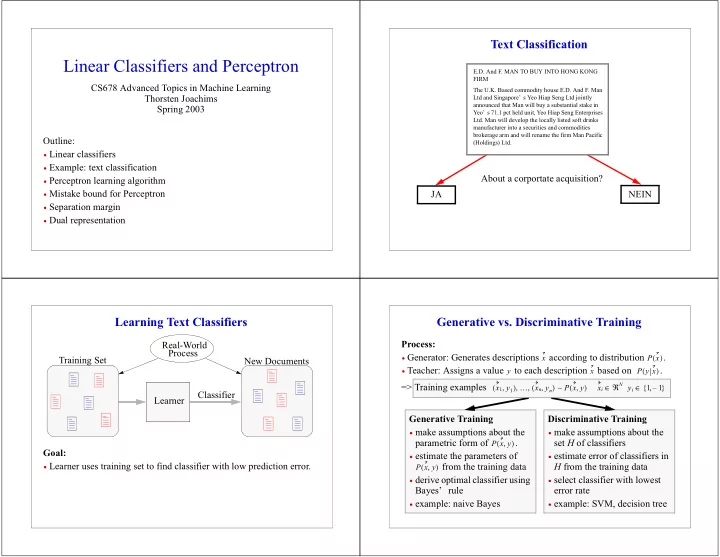

Linear Classifiers and Perceptron

CS678 Advanced Topics in Machine Learning
Thorsten Joachims
Spring 2003

Outline:
• Linear classifiers
• Example: text classification
• Perceptron learning algorithm
• Mistake bound for Perceptron
• Separation margin
• Dual representation

Text Classification

E.D. And F. MAN TO BUY INTO HONG KONG FIRM

The U.K. Based commodity house E.D. And F. Man Ltd and Singapore's Yeo Hiap Seng Ltd jointly announced that Man will buy a substantial stake in Yeo's 71.1 pct held unit, Yeo Hiap Seng Enterprises Ltd. Man will develop the locally listed soft drinks manufacturer into a securities and commodities brokerage arm and will rename the firm Man Pacific (Holdings) Ltd.

About a corporate acquisition? YES / NO

Learning Text Classifiers

Process: Real-World Process → Training Set → Learner → Classifier, which is then applied to New Documents.

Goal: The learner uses the training set to find a classifier with low prediction error.

Generative vs. Discriminative Training

Process:
• Generator: Generates descriptions $x$ according to distribution $P(x)$.
• Teacher: Assigns a value $y$ to each description $x$ based on $P(y \mid x)$.

=> Training examples $(x_1, y_1), \ldots, (x_n, y_n) \sim P(x, y)$ with $x_i \in \mathbb{R}^N$ and $y_i \in \{1, -1\}$.

Generative Training:
• make assumptions about the parametric form of $P(x, y)$
• estimate the parameters of $P(x, y)$ from the training data
• derive the optimal classifier using Bayes' rule
• example: naive Bayes

Discriminative Training:
• make assumptions about the set $H$ of classifiers
• estimate the error of the classifiers in $H$ from the training data
• select the classifier with the lowest error rate
• example: SVM, decision tree
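
To make the generative route concrete, here is a minimal sketch of multinomial naive Bayes: it estimates $P(y)$ and $P(\text{word} \mid y)$ from counts and then classifies with Bayes' rule in log space. The add-one (Laplace) smoothing, the function names, and the toy physics/recipe token lists are assumptions made only for this illustration, not part of the original slides.

```python
import math
from collections import Counter


def train_naive_bayes(docs, labels, alpha=1.0):
    """Generative training: estimate P(y) and P(word | y) from the data.

    docs:   list of token lists (bag of words, order ignored)
    labels: list of class labels, e.g. +1 / -1
    alpha:  add-alpha (Laplace) smoothing constant, an assumption of this sketch
    """
    vocab = {t for d in docs for t in d}
    prior, cond = {}, {}
    for c in sorted(set(labels)):
        class_docs = [d for d, y in zip(docs, labels) if y == c]
        prior[c] = len(class_docs) / len(docs)
        counts = Counter(t for d in class_docs for t in d)
        total = sum(counts.values())
        cond[c] = {t: (counts[t] + alpha) / (total + alpha * len(vocab)) for t in vocab}
    return prior, cond


def predict_naive_bayes(model, doc):
    """Bayes' rule: return argmax_y [log P(y) + sum_t log P(t | y)].

    Tokens not seen during training are ignored (a simplification).
    """
    prior, cond = model

    def log_joint(c):
        return math.log(prior[c]) + sum(math.log(cond[c][t]) for t in doc if t in cond[c])

    return max(prior, key=log_joint)


# Hypothetical toy data: physics (+1) versus recipes (-1).
docs = [
    ["nuclear", "atom", "atom", "water", "water"],
    ["pepper", "pepper", "heat", "salt"],
    ["atom", "salt", "nuclear"],
    ["salt", "pepper", "water", "heat"],
]
labels = [+1, -1, +1, -1]
model = train_naive_bayes(docs, labels)
print(predict_naive_bayes(model, ["atom", "nuclear"]))  # physics-like query
print(predict_naive_bayes(model, ["pepper", "salt"]))   # recipe-like query
```

The parametric assumption here is that words are drawn independently given the class; a discriminative learner would instead search a set $H$ of classifiers directly, without modelling $P(x, y)$.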

Representing Text as Attribute Vectors

• Attributes: Words (Word-Stems)
• Values: Occurrence-Frequencies

> From: xxx@sciences.sdsu.edu
>
> Newsgroups: comp.graphics
>
> Subject: Need specs on Apple QT
>
> I need to get the specs, or at least a very verbose interpretation of the specs, for QuickTime. Technical articles from magazines and references to books would be nice, too.
>
> I also need the specs in a fromat usable on a Unix or MS-Dos system. I can't do much with the QuickTime stuff they have on ...

Attribute vector: baseball 0, specs 3, graphics 0, references 1, hockey 0, car 0, clinton 0, ..., unix 1, space 0, quicktime 2, computer 0

==> The ordering of words is ignored!

Linear Classifiers (Example)

Text Classification: Physics (+1) versus Recipes (-1)

| ID | nuclear ($x_1$) | atom ($x_2$) | salt ($x_3$) | pepper ($x_4$) | water ($x_5$) | heat ($x_6$) | and ($x_7$) | $y$ |
|---|---|---|---|---|---|---|---|---|
| D1 | 1 | 2 | 0 | 0 | 2 | 0 | 2 | +1 |
| D2 | 0 | 0 | 0 | 3 | 0 | 1 | 1 | -1 |
| D3 | 0 | 2 | 1 | 0 | 0 | 0 | 3 | +1 |
| D4 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | -1 |
| $w$ | 2 | 3 | -1 | -3 | -1 | -1 | 0 | $b = 1$ |

D1: $$\sum_{i=1}^{7} w_i x_i + b = [2 \cdot 1 + 3 \cdot 2 + (-1) \cdot 0 + (-3) \cdot 0 + (-1) \cdot 2 + (-1) \cdot 0 + 0 \cdot 2] + 1 = 7$$

D2: $$\sum_{i=1}^{7} w_i x_i + b = [2 \cdot 0 + 3 \cdot 0 + (-1) \cdot 0 + (-3) \cdot 3 + (-1) \cdot 0 + (-1) \cdot 1 + 0 \cdot 1] + 1 = -9$$

Linear Classifiers

Rules of the form: weight vector $w$, threshold $b$

$$h(x) = \mathrm{sign}\left(\sum_{i=1}^{N} w_i x_i + b\right) = \begin{cases} 1 & \text{if } \sum_{i=1}^{N} w_i x_i + b > 0 \\ -1 & \text{else} \end{cases}$$

Geometric Interpretation (Hyperplane): [Figure: separating hyperplane with normal vector $w$ and offset $b$.]

Perceptron (Rosenblatt)

Input: $S = ((x_1, y_1), \ldots, (x_n, y_n))$, $x_i \in \mathbb{R}^N$, $y_i \in \{1, -1\}$ (linearly separable)

• $w_0 \leftarrow 0$; $b_0 \leftarrow 0$; $k \leftarrow 0$
• $R = \max_i \|x_i\|$
• repeat
  • for i = 1 to n
    • if $y_i (w_k \cdot x_i + b_k) \le 0$
      • $w_{k+1} \leftarrow w_k + \eta y_i x_i$
      • $b_{k+1} \leftarrow b_k + \eta y_i R^2$
      • $k \leftarrow k + 1$
    • endif
  • endfor
• until no mistakes made in the for loop
• return $(w_k, b_k)$
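
The attribute-vector representation and the linear rule $h(x)$ can be checked in a few lines of Python. This sketch recomputes the scores of D1 to D4 with the weights $w$ and threshold $b = 1$ from the Physics vs. Recipes table. The lowercase regex tokenizer without stemming (the slides use word stems), the helper names, and the extra sample sentence are assumptions made for illustration.

```python
import re
from collections import Counter


def bag_of_words(text, vocabulary):
    """Occurrence frequencies of each vocabulary word; word order is discarded.

    Tokenization is a lowercase [a-z]+ regex without stemming (an assumption).
    """
    counts = Counter(re.findall(r"[a-z]+", text.lower()))
    return [counts[word] for word in vocabulary]


def linear_score(w, b, x):
    """Compute sum_i w_i x_i + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def h(w, b, x):
    """Linear classifier: +1 if the score is > 0, else -1."""
    return 1 if linear_score(w, b, x) > 0 else -1


# Attributes x1..x7 and documents D1..D4 from the Physics vs. Recipes table.
VOCAB = ["nuclear", "atom", "salt", "pepper", "water", "heat", "and"]
DOCS = {
    "D1": ([1, 2, 0, 0, 2, 0, 2], +1),
    "D2": ([0, 0, 0, 3, 0, 1, 1], -1),
    "D3": ([0, 2, 1, 0, 0, 0, 3], +1),
    "D4": ([0, 0, 1, 1, 1, 1, 1], -1),
}
W = [2, 3, -1, -3, -1, -1, 0]
B = 1

for name, (x, y) in DOCS.items():
    print(name, linear_score(W, B, x), h(W, B, x), y)
# D1 7 1 1
# D2 -9 -1 -1
# D3 6 1 1
# D4 -5 -1 -1

# A new (made-up) document is mapped to the same attributes before scoring.
x_new = bag_of_words("Salt and pepper, then heat the water and salt.", VOCAB)
print(x_new, h(W, B, x_new))  # [0, 0, 2, 1, 1, 1, 2] -1
```

All four documents land on the correct side of the hyperplane, and the word order of the new sentence has no influence on its vector.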

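
A direct Python transcription of the primal perceptron pseudocode, as a sketch. It uses $R^2 = \max_i \|x_i\|^2$ and defaults to $\eta = 1$. Starting from $w = 0$, $b = 0$, any $\eta > 0$ yields the same sequence of mistakes, because every update, and therefore every $(w_k, b_k)$, is simply scaled by $\eta$. The function name, the extra returned update count $k$, and the use of the D1 to D4 table as input are illustrative choices.

```python
def perceptron(S, eta=1.0):
    """Primal perceptron (Rosenblatt) with the R^2-scaled threshold update.

    S:   list of (x, y) pairs, x a list of numbers, y in {+1, -1}
    eta: learning rate eta > 0
    Assumes S is linearly separable; otherwise the outer loop never terminates.
    Returns (w, b, k), where k is the number of updates (mistakes).
    """
    w = [0.0] * len(S[0][0])
    b = 0.0
    k = 0
    R2 = max(sum(xi * xi for xi in x) for x, _ in S)  # R^2 = max_i ||x_i||^2
    while True:  # repeat ... until no mistakes made in the for loop
        mistake_made = False
        for x, y in S:
            if y * (sum(wi * xi for wi, xi in zip(w, x)) + b) <= 0:
                w = [wi + eta * y * xi for wi, xi in zip(w, x)]
                b = b + eta * y * R2
                k += 1
                mistake_made = True
        if not mistake_made:
            return w, b, k


# Documents D1..D4 (nuclear, atom, salt, pepper, water, heat, and) with labels.
S = [
    ([1, 2, 0, 0, 2, 0, 2], +1),
    ([0, 0, 0, 3, 0, 1, 1], -1),
    ([0, 2, 1, 0, 0, 0, 3], +1),
    ([0, 0, 1, 1, 1, 1, 1], -1),
]
w, b, k = perceptron(S)
print(w, b, k)  # [1.0, 2.0, 0.0, -3.0, 2.0, -1.0, 1.0] 0.0 2
```

The learned hyperplane differs from the hand-set weights in the table, yet it also separates D1 to D4. The perceptron returns the first separating hyperplane it reaches, not a particular one.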
Analysis of Perceptron

Definition (Margin of an Example): The margin of an example $(x_i, y_i)$ with respect to the hyperplane $(w, b)$ is
$$\delta_i = y_i (w \cdot x_i + b).$$

Definition (Margin of a Training Set): The margin of a training set $S = ((x_1, y_1), \ldots, (x_n, y_n))$ with respect to the hyperplane $(w, b)$ is
$$\delta = \min_i y_i (w \cdot x_i + b).$$

Theorem (Novikoff): If for a training set $S$ there exists a weight vector $w$ with $\|w\| = 1$ and a threshold $b$ such that the margin is $\delta > 0$, then the perceptron makes at most
$$\frac{4 R^2}{\delta^2}$$
mistakes before returning a separating hyperplane, where $R = \max_i \|x_i\|$.

Dual Perceptron

• For each example $(x_i, y_i)$, count with $\alpha_i$ the number of times the perceptron algorithm makes a mistake on it. Then (for $\eta = 1$)
$$w = \sum_{i=1}^{n} \alpha_i y_i x_i$$

• $\alpha \leftarrow 0$; $b \leftarrow 0$
• $R = \max_i \|x_i\|$
• repeat
  • for i = 1 to n
    • if $y_i \left( \sum_{j=1}^{n} \alpha_j y_j (x_j \cdot x_i) + b \right) \le 0$
      • $\alpha_i \leftarrow \alpha_i + 1$
      • $b \leftarrow b + y_i R^2$
    • endif
  • endfor
• until no mistakes made in the for loop
• return $(\alpha, b)$

Experiment: Perceptron for Text Classification

[Figure: "Perceptron with eta=0.1". Percent training/testing errors versus iterations, with curves for perceptron training error, perceptron test error, and hard-margin SVM test error.]

Train on 1000 pos / 1000 neg examples for "acq" (Reuters-21578).
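
As a worked check of the two margin definitions and Novikoff's bound, the sketch below reuses the four-document Physics vs. Recipes example with $w = (2, 3, -1, -3, -1, -1, 0)$ and $b = 1$. Since $\|w\| = 5$ there, it rescales $(w, b)$ by $1/5$ to meet the normalization $\|w\| = 1$ under which the bound $4R^2/\delta^2$ is stated. The helper name is illustrative.

```python
import math


def example_margins(S, w, b):
    """delta_i = y_i (w . x_i + b) for every example (x_i, y_i) in S."""
    return [y * (sum(wi * xi for wi, xi in zip(w, x)) + b) for x, y in S]


S = [
    ([1, 2, 0, 0, 2, 0, 2], +1),
    ([0, 0, 0, 3, 0, 1, 1], -1),
    ([0, 2, 1, 0, 0, 0, 3], +1),
    ([0, 0, 1, 1, 1, 1, 1], -1),
]
w = [2, 3, -1, -3, -1, -1, 0]
b = 1

print(example_margins(S, w, b))  # [7, 9, 6, 5]

# Normalize the hyperplane so that ||w|| = 1 before applying the theorem.
norm_w = math.sqrt(sum(wi * wi for wi in w))  # sqrt(25) = 5.0
w_unit = [wi / norm_w for wi in w]
b_unit = b / norm_w

delta = min(example_margins(S, w_unit, b_unit))  # margin of the training set: 5 / 5 = 1
R = max(math.sqrt(sum(xi * xi for xi in x)) for x, _ in S)  # sqrt(14), attained by D3
bound = 4 * R ** 2 / delta ** 2
print(round(delta, 6), round(R ** 2, 6), round(bound, 6))  # 1.0 14.0 56.0
```

The bound of 56 mistakes is a worst case over all orderings of the examples; a particular run may need far fewer updates.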

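
The dual form touches the data only through dot products $x_j \cdot x_i$. The sketch below therefore computes a Gram matrix once and then runs the dual loop from the slide, which corresponds to $\eta = 1$. Afterwards it recovers $w = \sum_i \alpha_i y_i x_i$, showing that the dual solution describes an ordinary primal hyperplane. The function name and the use of the D1 to D4 documents as input are illustrative.

```python
def dual_perceptron(S):
    """Dual perceptron: alpha_i counts the mistakes made on example i.

    S: list of (x, y) pairs, x a list of numbers, y in {+1, -1}.
    Assumes S is linearly separable; otherwise the outer loop never terminates.
    Returns (alpha, b).
    """
    xs = [x for x, _ in S]
    ys = [y for _, y in S]
    n = len(S)
    # Gram matrix G[j][i] = x_j . x_i; the algorithm accesses the data only here.
    G = [[sum(a * c for a, c in zip(xj, xi)) for xi in xs] for xj in xs]
    R2 = max(G[i][i] for i in range(n))  # R^2 = max_i ||x_i||^2
    alpha = [0] * n
    b = 0
    while True:  # repeat ... until no mistakes made in the for loop
        mistake_made = False
        for i in range(n):
            score = sum(alpha[j] * ys[j] * G[j][i] for j in range(n)) + b
            if ys[i] * score <= 0:
                alpha[i] += 1
                b += ys[i] * R2
                mistake_made = True
        if not mistake_made:
            return alpha, b


# Documents D1..D4 (nuclear, atom, salt, pepper, water, heat, and) with labels.
S = [
    ([1, 2, 0, 0, 2, 0, 2], +1),
    ([0, 0, 0, 3, 0, 1, 1], -1),
    ([0, 2, 1, 0, 0, 0, 3], +1),
    ([0, 0, 1, 1, 1, 1, 1], -1),
]
alpha, b = dual_perceptron(S)
# Recover the primal weight vector w = sum_i alpha_i y_i x_i.
w = [sum(a * y * x[f] for a, (x, y) in zip(alpha, S)) for f in range(len(S[0][0]))]
print(alpha, b, w)  # [1, 1, 0, 0] 0 [1, 2, 0, -3, 2, -1, 1]
```

Because only `G` is used inside the loop, replacing the dot product with another similarity function requires changing just the line that builds the Gram matrix.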
