CS 472 - Perceptron 1

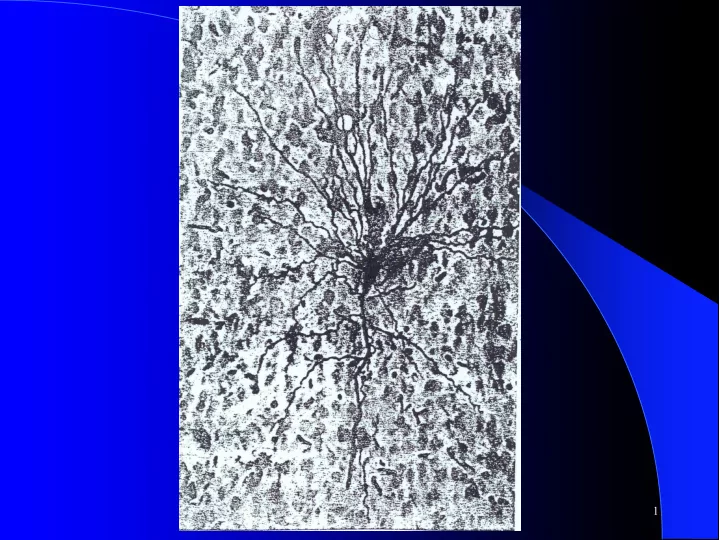

Basic Neuron

Expanded Neuron

Perceptron Learning Algorithm

- First neural network learning model in the 1960s
- Simple and limited (single-layer models)
- Basic concepts are similar for multi-layer models, so this is a good learning tool
- Still used in current applications (modems, etc.)

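Perceptron Node – Threshold Logic Unit

Inputs $x_1, \dots, x_n$ with weights $w_1, \dots, w_n$ feed a single node with threshold $\theta$ and output $z$:

$$
z = \begin{cases} 1 & \text{if } \sum_{i=1}^{n} x_i w_i \ge \theta \\ 0 & \text{if } \sum_{i=1}^{n} x_i w_i < \theta \end{cases}
$$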
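A minimal Python sketch of the threshold logic unit defined above. The function name `tlu_output` is hypothetical, and the weights (1, 1) with θ = 1.5 are illustrative values chosen so that the node computes logical AND; they are not from the slides.

```python
from typing import Sequence


def tlu_output(x: Sequence[float], w: Sequence[float], theta: float) -> int:
    """Threshold logic unit: return 1 if sum_i x_i * w_i >= theta, else 0."""
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    net = sum(xi * wi for xi, wi in zip(x, w))
    return 1 if net >= theta else 0


# Illustrative (hypothetical) weights and threshold that make the node compute AND.
w, theta = [1.0, 1.0], 1.5
for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, tlu_output(x, w, theta))
```

Note the `>=`: a net input exactly equal to θ produces 1, matching the first case of the formula.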

Perceptron Node – Threshold Logic Unit

- Learn weights such that an objective function is maximized.
- What objective function should we use?
- What learning algorithm should we use?

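Perceptron Learning Algorithm

Example node: input $x_1$ with weight $.4$, input $x_2$ with weight $-.2$, and threshold $\theta = .1$; the output is $z = 1$ if $\sum_{i=1}^{n} x_i w_i \ge \theta$, else $0$.

| $x_1$ | $x_2$ | $t$ |
|---|---|---|
| .8 | .3 | 1 |
| .4 | .1 | 0 |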

First Training Instance

Input $(x_1, x_2) = (.8, .3)$, target $t = 1$:

$$net = .8 \cdot .4 + .3 \cdot (-.2) = .26$$

Since $.26 \ge \theta = .1$, the output is $z = 1$, which matches the target, so the weights do not change.

Second Training Instance

Input $(x_1, x_2) = (.4, .1)$, target $t = 0$:

$$net = .4 \cdot .4 + .1 \cdot (-.2) = .14$$

Since $.14 \ge .1$, the output is $z = 1$, but the target is 0, so the weights must change:

$$\Delta w_i = (t - z) \cdot c \cdot x_i$$
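The two training instances can be checked numerically. The slides do not give the learning rate, so this sketch assumes a hypothetical c = 0.1, and it holds θ fixed, since the threshold is not yet being treated as a learnable weight at this point. `net_and_output` is a hypothetical helper name.

```python
# Example node from the slides: w = (.4, -.2), theta = .1.
# The learning rate c is NOT given on the slides; 0.1 is an assumed value.
w = [0.4, -0.2]
theta = 0.1
c = 0.1


def net_and_output(x, w, theta):
    """Return the net input and the thresholded output z (1 if net >= theta)."""
    net = sum(xi * wi for xi, wi in zip(x, w))
    return net, (1 if net >= theta else 0)


# First instance: x = (.8, .3), t = 1 -> correct, no update.
net1, z1 = net_and_output([0.8, 0.3], w, theta)

# Second instance: x = (.4, .1), t = 0 -> z = 1 is an error.
x2, t2 = [0.4, 0.1], 0
net2, z2 = net_and_output(x2, w, theta)

# Perceptron rule: delta w_i = (t - z) * c * x_i (theta held fixed here).
delta = [(t2 - z2) * c * xi for xi in x2]
w_new = [wi + dwi for wi, dwi in zip(w, delta)]

print(round(net1, 2), z1)              # 0.26 1
print(round(net2, 2), z2)              # 0.14 1
print([round(d, 2) for d in delta])    # [-0.04, -0.01]
print([round(wi, 2) for wi in w_new])  # [0.36, -0.21]
```

With these assumed values, the second pattern now gives net = .4 · .36 + .1 · (-.21) = .123, which is still at least .1, so it is still misclassified. A small c moves the weights toward the target without necessarily fixing the current pattern in one step.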

Perceptron Rule Learning

$$\Delta w_i = c\,(t - z)\,x_i$$

where $w_i$ is the weight from input $i$ to the perceptron node, $c$ is the learning rate, $t$ is the target for the current instance, $z$ is the current output, and $x_i$ is the $i$th input.

- Least perturbation principle
  - Only change weights if there is an error
  - Use a small $c$ rather than changing the weights enough to make the current pattern correct
  - Scale by $x_i$
- Create a perceptron node with $n$ inputs
- Iteratively apply a pattern from the training set and apply the perceptron rule
- Each iteration through the training set is an epoch
- Continue training until total training set error ceases to improve
- Perceptron Convergence Theorem: guaranteed to find a solution in finite time if a solution exists (i.e., if the training set is linearly separable)
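The training loop described above can be sketched in Python. Assumptions: each pattern already includes a constant bias input of 1 (as on the Augmented Pattern Vectors slide), so the node outputs 1 if net > 0; the error for an epoch is counted as the number of updates made during that pass; and `train_perceptron`, `perceptron_epoch`, and the `patience` parameter (how many non-improving epochs to allow before stopping) are hypothetical names for this sketch.

```python
from typing import List, Optional, Sequence, Tuple


def perceptron_epoch(
    patterns: Sequence[Sequence[float]], targets: Sequence[int],
    w: List[float], c: float,
) -> Tuple[List[float], int]:
    """One epoch: apply the perceptron rule to every pattern in order."""
    w = list(w)
    errors = 0
    for x, t in zip(patterns, targets):
        net = sum(xi * wi for xi, wi in zip(x, w))
        z = 1 if net > 0 else 0
        if z != t:  # only change weights if there is an error
            errors += 1
            w = [wi + c * (t - z) * xi for wi, xi in zip(w, x)]
    return w, errors


def train_perceptron(
    patterns: Sequence[Sequence[float]], targets: Sequence[int],
    c: float = 1.0, max_epochs: int = 100, patience: int = 10,
) -> Tuple[List[float], int]:
    """Run epochs until one has no errors or the error stops improving."""
    w = [0.0] * len(patterns[0])
    best: Optional[int] = None
    stale = 0
    epoch = 0
    for epoch in range(1, max_epochs + 1):
        w, errors = perceptron_epoch(patterns, targets, w, c)
        if errors == 0:
            break  # a full pass with no updates: training set is learned
        if best is None or errors < best:
            best, stale = errors, 0
        else:
            stale += 1
            if stale >= patience:
                break  # total training set error has ceased to improve
    return w, epoch


# Usage: logical AND, with a bias input of 1 appended to each pattern.
and_patterns = [[0, 0, 1], [0, 1, 1], [1, 0, 1], [1, 1, 1]]
and_targets = [0, 0, 0, 1]
weights, epochs = train_perceptron(and_patterns, and_targets, c=1.0)
print(weights, epochs)  # [2.0, 1.0, -2.0] 6
```

AND is linearly separable, so by the convergence theorem the loop ends with a zero-error epoch rather than by the patience rule.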


Augmented Pattern Vectors

| Original | Augmented version |
|---|---|
| 1 0 1 -> 0 | 1 0 1 1 -> 0 |
| 1 0 0 -> 1 | 1 0 0 1 -> 1 |

- Treat the threshold like any other weight, with no special case. Call it a bias, since it biases the output up or down.
- Rewriting $\sum_i x_i w_i \ge \theta$ as $\sum_i x_i w_i - \theta \ge 0$ shows that the threshold acts as a weight of $-\theta$ on a constant input of 1. Since we start with random weights anyway, we can ignore the $-\theta$ notion and just think of the bias as an extra available weight. (Note: the author uses a -1 input.)
- Always use a bias weight.
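A short Python sketch of pattern augmentation. `augment` is a hypothetical helper; it appends a constant bias input (1 by default, or -1 for the author's convention, in which case the learned bias weight plays the role of $+\theta$). The loop checks, for the earlier two-input example node, that comparing the weighted sum with θ gives the same decision as adding a weight of $-\theta$ on the bias input and comparing with 0.

```python
from typing import List, Sequence


def augment(pattern: Sequence[float], bias_input: float = 1) -> List[float]:
    """Append a constant bias input so the threshold becomes an ordinary weight."""
    return list(pattern) + [bias_input]


print([augment(p) for p in ([1, 0, 1], [1, 0, 0])])  # [[1, 0, 1, 1], [1, 0, 0, 1]]

# Threshold form vs. bias form: a weight of -theta on a constant input of 1
# gives the same decision as comparing the weighted sum with theta.
w, theta = [0.4, -0.2], 0.1
for x in ([0.8, 0.3], [0.4, 0.1], [0.0, 1.0]):
    threshold_z = 1 if sum(a * b for a, b in zip(x, w)) >= theta else 0
    bias_z = 1 if sum(a * b for a, b in zip(augment(x), w + [-theta])) >= 0 else 0
    print(x, threshold_z, bias_z)
```

The later examples output 1 if net > 0 rather than net ≥ 0; the two conventions differ only when net is exactly 0.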

Perceptron Rule Example

- Assume a 3-input perceptron plus bias (it outputs 1 if net > 0, else 0)
- Assume a learning rate $c$ of 1 and initial weights all 0: $\Delta w_i = c(t - z)x_i$
- Training set:
  - 0 0 1 -> 0
  - 1 1 1 -> 1
  - 1 0 1 -> 1
  - 0 1 1 -> 0

Patterns in the table are augmented with a bias input of 1.

| Pattern | Target | Weight Vector | Net | Output | ΔW |
|---|---|---|---|---|---|
| 0 0 1 1 | 0 | 0 0 0 0 | | | |

Example

| Pattern | Target | Weight Vector | Net | Output | ΔW |
|---|---|---|---|---|---|
| 0 0 1 1 | 0 | 0 0 0 0 | 0 | 0 | 0 0 0 0 |
| 1 1 1 1 | 1 | 0 0 0 0 | | | |

Example

| Pattern | Target | Weight Vector | Net | Output | ΔW |
|---|---|---|---|---|---|
| 0 0 1 1 | 0 | 0 0 0 0 | 0 | 0 | 0 0 0 0 |
| 1 1 1 1 | 1 | 0 0 0 0 | 0 | 0 | 1 1 1 1 |
| 1 0 1 1 | 1 | 1 1 1 1 | | | |

Peer Instruction – Zoom Version

- I pose a challenge question (usually multiple choice), which will help solidify understanding of topics we have studied
  - There might be more than one correct answer
- You each get some time (1-2 minutes) to come up with your answer and vote, using a Zoom polling question
- Then we put you in random groups with Zoom breakout rooms, and you get time to discuss and find a solution together
  - Learn from and teach each other!
- When finished, leave your breakout room and vote again with your updated answer. If you want, you may return to the breakout room for more discussion while waiting for everyone to finish.
- Finally, we discuss the different responses together, show the votes, give you the opportunity to justify your thinking, and give you further insights

Peer Instruction (PI): Why

- Studies show this approach improves learning
- Learn by doing, discussing, and teaching each other
  - Curse of knowledge/expert blind spot
  - By contrast, a peer who just figured it out can explain it in your own jargon
  - You never really know something until you can teach it to someone else
  - Even better learning!
- It is just as important to understand why wrong answers are wrong, or how they could be changed to be right
  - Learn to reason about your thinking and answers
- More enjoyable: you are involved and active in the learning

How Groups Interact

- Best if group members have different initial answers
- 3 is the "magic" group number
  - May have 2-4 on a given day to make sure everyone is involved
- Teach and learn from each other: discuss, reason, articulate
- If you know the answer, first listen to where your colleagues are coming from, then be a great, humble teacher. You will also learn by doing that, and you'll be on the other side in the future.
  - I can't do that as well, because every small group has different misunderstandings and you get to focus on your particular questions
- Be ready to justify your answer and reasoning to the class!

**Challenge Question** - Perceptron

Same setup as the Perceptron Rule Example: a 3-input perceptron plus bias (outputs 1 if net > 0, else 0), learning rate $c = 1$, initial weights all 0, $\Delta w_i = c(t - z)x_i$, and training set 0 0 1 -> 0, 1 1 1 -> 1, 1 0 1 -> 1, 0 1 1 -> 0.

| Pattern | Target | Weight Vector | Net | Output | ΔW |
|---|---|---|---|---|---|
| 0 0 1 1 | 0 | 0 0 0 0 | 0 | 0 | 0 0 0 0 |
| 1 1 1 1 | 1 | 0 0 0 0 | 0 | 0 | 1 1 1 1 |
| 1 0 1 1 | 1 | 1 1 1 1 | | | |

Once this converges, the final weight vector will be:

- A. 1 1 1 1
- B. -1 0 1 0
- C. 0 0 0 0
- D. 1 0 0 0
- E. None of the above

Example

| Pattern | Target | Weight Vector | Net | Output | ΔW |
|---|---|---|---|---|---|
| 0 0 1 1 | 0 | 0 0 0 0 | 0 | 0 | 0 0 0 0 |
| 1 1 1 1 | 1 | 0 0 0 0 | 0 | 0 | 1 1 1 1 |
| 1 0 1 1 | 1 | 1 1 1 1 | 3 | 1 | 0 0 0 0 |
| 0 1 1 1 | 0 | 1 1 1 1 | | | |

Example

| Pattern | Target | Weight Vector | Net | Output | ΔW |
|---|---|---|---|---|---|
| 0 0 1 1 | 0 | 0 0 0 0 | 0 | 0 | 0 0 0 0 |
| 1 1 1 1 | 1 | 0 0 0 0 | 0 | 0 | 1 1 1 1 |
| 1 0 1 1 | 1 | 1 1 1 1 | 3 | 1 | 0 0 0 0 |
| 0 1 1 1 | 0 | 1 1 1 1 | 3 | 1 | 0 -1 -1 -1 |
| 0 0 1 1 | 0 | 1 0 0 0 | | | |

Example

| Pattern | Target | Weight Vector | Net | Output | ΔW |
|---|---|---|---|---|---|
| 0 0 1 1 | 0 | 0 0 0 0 | 0 | 0 | 0 0 0 0 |
| 1 1 1 1 | 1 | 0 0 0 0 | 0 | 0 | 1 1 1 1 |
| 1 0 1 1 | 1 | 1 1 1 1 | 3 | 1 | 0 0 0 0 |
| 0 1 1 1 | 0 | 1 1 1 1 | 3 | 1 | 0 -1 -1 -1 |
| 0 0 1 1 | 0 | 1 0 0 0 | 0 | 0 | 0 0 0 0 |
| 1 1 1 1 | 1 | 1 0 0 0 | 1 | 1 | 0 0 0 0 |
| 1 0 1 1 | 1 | 1 0 0 0 | 1 | 1 | 0 0 0 0 |
| 0 1 1 1 | 0 | 1 0 0 0 | 0 | 0 | 0 0 0 0 |

The second epoch produces no errors, so training has converged with final weight vector 1 0 0 0.
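The trace in the table can be reproduced with a short Python sketch. `trace_perceptron` is a hypothetical helper; it assumes, as in the example, augmented patterns with a bias input of 1, output 1 if net > 0, c = 1, and initial weights all 0, and it stops after the first epoch with no errors.

```python
from typing import List, Sequence, Tuple

# (pattern, target, weights before update, net, output, delta_w)
Row = Tuple[List[int], int, List[int], int, int, List[int]]


def trace_perceptron(
    patterns: Sequence[Sequence[int]], targets: Sequence[int],
    w: Sequence[int], c: int = 1, max_epochs: int = 10,
) -> Tuple[List[Row], List[int]]:
    """Record one table row per pattern presentation until an error-free epoch."""
    w = list(w)
    rows: List[Row] = []
    for _ in range(max_epochs):
        errors = 0
        for x, t in zip(patterns, targets):
            net = sum(xi * wi for xi, wi in zip(x, w))
            z = 1 if net > 0 else 0
            dw = [c * (t - z) * xi for xi in x]  # perceptron rule
            rows.append((list(x), t, list(w), net, z, dw))
            w = [wi + d for wi, d in zip(w, dw)]
            errors += int(z != t)
        if errors == 0:
            break  # a full epoch with no errors: converged
    return rows, w


# Augmented patterns (last input is the bias input 1), c = 1, weights start at 0.
patterns = [[0, 0, 1, 1], [1, 1, 1, 1], [1, 0, 1, 1], [0, 1, 1, 1]]
targets = [0, 1, 1, 0]
rows, final_w = trace_perceptron(patterns, targets, [0, 0, 0, 0])
for row in rows:
    print(*row)
print("final weights:", final_w)  # final weights: [1, 0, 0, 0]
```

Each printed row lists pattern, target, weights before the update, net, output, and ΔW, in the same order as the table columns.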

Perceptron Homework

- Assume a 3-input perceptron plus bias (it outputs 1 if net > 0, else 0)
- Assume a learning rate $c$ of 1 and initial weights all 1: $\Delta w_i = c(t - z)x_i$
- Show the weights after each pattern for just one epoch
- Training set:
  - 1 0 1 -> 0
  - 1 1 0 -> 0
  - 1 0 1 -> 1
  - 0 1 1 -> 1

| Pattern | Target | Weight Vector | Net | Output | ΔW |
|---|---|---|---|---|---|
| | | 1 1 1 1 | | | |

Training Sets and Noise

- Assume a probability of error at each input and output value each time a pattern is trained on
  - 0 0 1 0 1 1 0 0 1 1 0 -> 0 1 1 0
  - i.e., P(error) = .05
- Or a probability that the algorithm is occasionally applied wrong (opposite)
- Averages out over learning
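A small Python sketch of the input/output noise model, assuming each 0/1 value is flipped independently with P(error) = .05 every time a pattern is presented. `flip_bits` is a hypothetical helper and the seed is arbitrary; the sketch shows only the noise model and how its rate settles over many presentations, not its effect on learning.

```python
import random
from typing import List, Sequence


def flip_bits(bits: Sequence[int], p_error: float, rng: random.Random) -> List[int]:
    """Flip each 0/1 value independently with probability p_error."""
    return [1 - b if rng.random() < p_error else b for b in bits]


rng = random.Random(472)  # fixed (arbitrary) seed so runs are repeatable
inputs = [0, 0, 1, 0, 1, 1, 0, 0, 1, 1, 0]
outputs = [0, 1, 1, 0]

# One noisy presentation of the pattern from the slide.
print(flip_bits(inputs, 0.05, rng), "->", flip_bits(outputs, 0.05, rng))

# Over many presentations the observed flip rate settles near P(error).
trials = 10_000
values = inputs + outputs
flips = sum(
    a != b
    for _ in range(trials)
    for a, b in zip(values, flip_bits(values, 0.05, rng))
)
rate = flips / (trials * len(values))
print(f"observed flip rate: {rate:.4f}")
```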

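Linear Separability

[Figure: 2-d plot over axes $X_1$ and $X_2$ showing two classes of points, marked • and 0]

2-d case (two inputs):

$$W_1X_1 + W_2X_2 > \theta \quad (Z = 1)$$
$$W_1X_1 + W_2X_2 < \theta \quad (Z = 0)$$

So, what is the decision boundary?

$$W_1X_1 + W_2X_2 = \theta$$
$$X_2 + \frac{W_1X_1}{W_2} = \frac{\theta}{W_2}$$
$$X_2 = \left(-\frac{W_1}{W_2}\right)X_1 + \frac{\theta}{W_2}$$

which has the form $Y = MX + B$.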
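A Python sketch of the boundary derivation: it returns the slope $M = -W_1/W_2$ and intercept $B = \theta/W_2$, which requires $W_2 \ne 0$. The helper names are hypothetical, and the values $W_1 = .4$, $W_2 = -.2$, $\theta = .1$ are borrowed from the earlier two-input example.

```python
from typing import Tuple


def decision_boundary(w1: float, w2: float, theta: float) -> Tuple[float, float]:
    """Solve W1*X1 + W2*X2 = theta for X2; return (slope M, intercept B)."""
    if w2 == 0:
        raise ValueError("W2 = 0: the boundary cannot be written as X2 = M*X1 + B")
    return -w1 / w2, theta / w2


def output(x1: float, x2: float, w1: float, w2: float, theta: float) -> int:
    """Z = 1 if W1*X1 + W2*X2 > theta, else 0 (as on this slide)."""
    return 1 if w1 * x1 + w2 * x2 > theta else 0


# Weights and threshold from the earlier two-input example.
w1, w2, theta = 0.4, -0.2, 0.1
m, b = decision_boundary(w1, w2, theta)
print(m, b)  # 2.0 -0.5

# Because W2 < 0 here, dividing by W2 flips the inequality,
# so the Z = 1 points lie BELOW the line X2 = M*X1 + B.
for x1, x2 in [(0.8, 0.3), (0.0, 1.0)]:
    below = x2 < m * x1 + b
    print((x1, x2), "Z =", output(x1, x2, w1, w2, theta), "below line:", below)
```

The sign of $W_2$ decides which side is which: the $Z = 1$ region lies above the line when $W_2 > 0$ and below it when $W_2 < 0$.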
