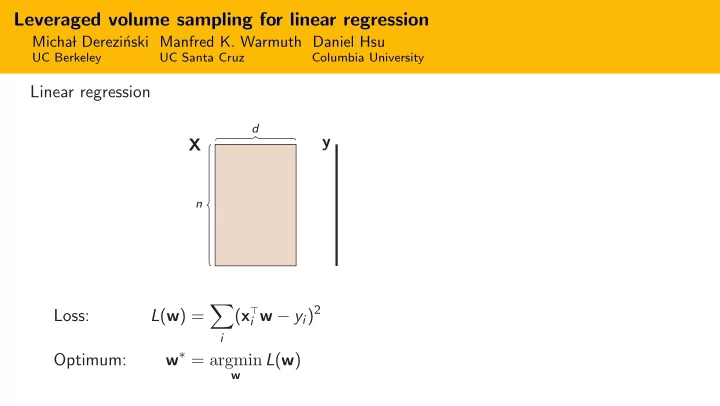

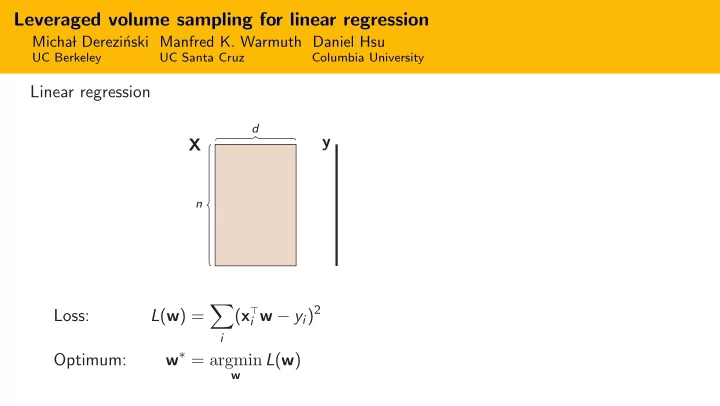

Leveraged volume sampling for linear regression Micha� l Derezi´ nski Manfred K. Warmuth Daniel Hsu UC Berkeley UC Santa Cruz Columbia University Linear regression d y X n � i w − y i ) 2 ( x ⊤ Loss: L ( w ) = i w ∗ = argmin Optimum: L ( w ) w

Leveraged volume sampling for linear regression Micha� l Derezi´ nski Manfred K. Warmuth Daniel Hsu UC Berkeley UC Santa Cruz Columbia University Linear regression with hidden responses d y X Sample S = { 4 , 6 , 9 } y 4 . x ⊤ 4 y 6 . x ⊤ 6 Receive x ⊤ y 9 9 y 4 , y 6 , y 9 � i w − y i ) 2 Loss: L ( w ) = ( x ⊤ i w ∗ = argmin Optimum: L ( w ) w

Leveraged volume sampling for linear regression Micha� l Derezi´ nski Manfred K. Warmuth Daniel Hsu UC Berkeley UC Santa Cruz Columbia University Linear regression with hidden responses d y X Sample Goal: Best unbiased estimator � w ( S ) S = { 4 , 6 , 9 } y 4 . x ⊤ �� � 4 y 6 . w ( S ) = w ∗ E x ⊤ 6 �� � Receive ≤ (1 + ǫ ) L ( w ∗ ) L w ( S ) x ⊤ y 9 9 y 4 , y 6 , y 9 � i w − y i ) 2 Existing sampling methods: Loss: L ( w ) = ( x ⊤ 1. leverage score sampling: i.i.d., biased i w ∗ = argmin Optimum: L ( w ) 2. volume sampling: joint, unbiased w

Leveraged volume sampling Volume sampling Jointly choose set S of k ≥ d indices s.t. � � � Pr ( S ) ∝ det x i x ⊤ i i ∈ S

Leveraged volume sampling Volume sampling Jointly choose set S of k ≥ d indices s.t. � � � Pr ( S ) ∝ det x i x ⊤ i i ∈ S Theorem [DW17] �� � = w ∗ E w ( S ) � i w − y i ) 2 . w ( S ) = argmin � ( x ⊤ where w i ∈ S

Leveraged volume sampling Volume sampling Jointly choose set S of k ≥ d indices s.t. � � � Pr ( S ) ∝ det x i x ⊤ i i ∈ S Theorem [DW17] �� � = w ∗ w ( S ) E New Lower Bound Volume sampling may need a sample of size k = Ω( n ) to get a (3 / 2) -approximation � �� � ǫ =1 / 2

Leveraged volume sampling Solution: Use i.i.d. and joint sampling Volume sampling Jointly choose set S of k ≥ d indices s.t. leverage scores volume sampling � �� � � �� � � � � � � � � � � 1 Pr ( S ) ∝ det x i x ⊤ Pr ( S ) ∝ ℓ i det ℓ i x i x ⊤ i i i ∈ S i ∈ S i ∈ S Theorem [DW17] �� � = w ∗ w ( S ) E New Lower Bound Volume sampling may need a sample of size k = Ω( n ) to get a (3 / 2) -approximation � �� � ǫ =1 / 2

Leveraged volume sampling Solution: Use i.i.d. and joint sampling Volume sampling Jointly choose set S of k ≥ d indices s.t. leverage scores volume sampling � �� � � �� � � � � � � � � � � 1 Pr ( S ) ∝ det x i x ⊤ Pr ( S ) ∝ ℓ i det ℓ i x i x ⊤ i i i ∈ S i ∈ S i ∈ S � � � 2 Theorem [DW17] 1 x ⊤ w ( S ) = argmin � i w − y i �� � ℓ i w = w ∗ w ( S ) E i ∈ S New Lower Bound New Theorem For k = O ( d log d + d /ǫ ) Volume sampling may need a sample of size �� � = w ∗ w ( S ) and E k = Ω( n ) to get a (3 / 2) -approximation �� � � �� � w.h.p. w ( S ) ≤ (1 + ǫ ) L ( w ∗ ) L ǫ =1 / 2

New volume sampling algorithm Determinantal rejection sampling trick repeat Sample i 1 , . . . , i s i.i.d. ∼ ( ℓ 1 , . . . , ℓ n ) � det ( � s 1 x it x ⊤ it ) � t =1 ℓ it Sample Accept ∼ Bernoulli det( X ⊤ X ) until Accept = true preprocessing O ( nd 2 ) + sampling O ( d 4 ) � �� � � �� � improvable to � no dependence on n O ( nd +poly( d ))

New volume sampling algorithm Experiments – 7 datasets from Libsvm Determinantal rejection sampling trick repeat Sample i 1 , . . . , i s i.i.d. ∼ ( ℓ 1 , . . . , ℓ n ) � det ( � s 1 x it x ⊤ it ) � t =1 ℓ it Sample Accept ∼ Bernoulli det( X ⊤ X ) until Accept = true preprocessing O ( nd 2 ) + sampling O ( d 4 ) � �� � � �� � improvable to � no dependence on n O ( nd +poly( d )) Check out poster #151

Recommend

More recommend