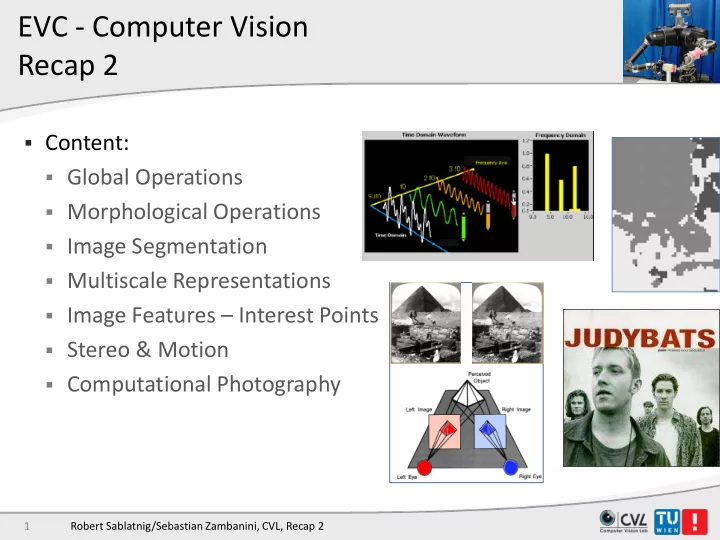

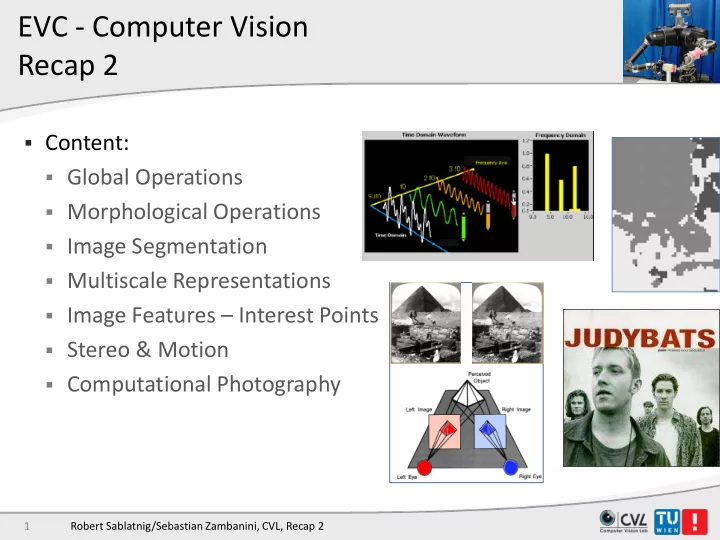

EVC - Computer Vision Recap 2
Content:
• Global Operations
• Morphological Operations
• Image Segmentation
• Multiscale Representations
• Image Features – Interest Points
• Stereo & Motion
• Computational Photography
Robert Sablatnig/Sebastian Zambanini, CVL, Recap 2

Global Operations - Fourier Transform
Fourier Transform:
$$G(\omega) = \frac{1}{2\pi} \int_{-\infty}^{\infty} g(t) \cdot e^{-i\omega t} \, dt$$
converts a function from the spatial (or time) domain to the frequency domain.

Time Domain and Frequency Domain
Time domain: tells us how properties (air pressure in a sound function, for example) change over time:
• Amplitude = 100
• Frequency = number of cycles in one second = 200 Hz

Time Domain and Frequency Domain
Frequency domain: tells us how properties (amplitudes) change over frequencies.
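
The relation between the two domains can be checked numerically. The following is a minimal sketch, assuming a pure sine with the amplitude (100) and frequency (200 Hz) from the time-domain example; the sampling rate `fs` and the one-second duration are assumptions chosen so that 200 Hz falls exactly on a frequency bin.

```python
import numpy as np

fs = 2000                      # sampling rate in Hz (assumed, not from the slides)
t = np.arange(fs) / fs         # one second of sample times
signal = 100 * np.sin(2 * np.pi * 200 * t)   # time domain: amplitude 100, 200 Hz

# Frequency domain: one-sided discrete Fourier transform of the real signal.
spectrum = np.fft.rfft(signal)
freqs = np.fft.rfftfreq(len(signal), d=1 / fs)

# Scale the magnitudes so that a sine of amplitude A shows up as A.
amplitudes = 2 * np.abs(spectrum) / len(signal)

peak = np.argmax(amplitudes)
peak_freq = freqs[peak]        # frequency with the largest amplitude
peak_amp = amplitudes[peak]    # amplitude at that frequency
```

In the time domain the signal oscillates continuously; in the frequency domain it collapses to a single peak at `peak_freq` with height `peak_amp`.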

Fourier Transform
A Fourier Transform is an integral transform that re-expresses a function in terms of different sine waves of varying amplitudes, wavelengths, and phases. So what does this mean exactly? Let’s start with an example in 1-D: a wave function that can be represented by three sine waves. When you let these three waves interfere with each other, you get your original wave function!

Fourier Transform
Since this object can be made up of 3 fundamental frequencies, an ideal Fourier Transform would look something like this:
[Figure: spectrum with frequency increasing outward from the central point in both directions.]
Notice that it is symmetric around the central point and that the number of points radiating outward corresponds to the distinct frequencies used in creating the image.
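
A small numerical sketch of this idea, assuming three sine waves whose frequencies and amplitudes are made up for illustration (they are not the values behind the slide figure): the centred spectrum contains one pair of symmetric peaks per component.

```python
import numpy as np

n = 256
x = np.arange(n) / n

# (frequency in cycles per signal length, amplitude): hypothetical values.
components = [(3, 1.0), (10, 0.5), (25, 0.25)]
wave = sum(a * np.sin(2 * np.pi * f * x) for f, a in components)

# Centre the zero frequency so the symmetry around the middle is visible.
spectrum = np.fft.fftshift(np.fft.fft(wave))
magnitude = np.abs(spectrum) / n
freqs = np.fft.fftshift(np.fft.fftfreq(n, d=1 / n))

# Frequencies that carry a non-negligible share of the signal.
peaks = freqs[magnitude > 0.05]
```

Each sine of amplitude `a` contributes a peak of height `a / 2` at both `+f` and `-f`, which is the symmetry around the central point described above.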

Let’s Try it with Two Dimensions!
[Figure, left: this image exclusively has 32 cycles in the vertical direction.]
[Figure, right: this image exclusively has 8 cycles in the horizontal direction.]
You will notice that the second example is a little more smeared out. This is because the lines are more blurred, so more sine waves are required to build it. The transform is weighted, so brighter spots indicate sine waves that are used more frequently.
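
The 2-D case can be sketched the same way. This example assumes that "8 cycles in the horizontal direction" means a sinusoid that varies along x only; the image size is an arbitrary choice. An ideal, unblurred pattern like this yields exactly two bright spots, 8 bins to the left and right of the centre.

```python
import numpy as np

size = 128                                   # image width and height (assumed)
yy, xx = np.mgrid[0:size, 0:size]
image = np.sin(2 * np.pi * 8 * xx / size)    # 8 cycles across the image width

# 2-D transform with the zero frequency shifted to the centre of the array.
spectrum = np.fft.fftshift(np.fft.fft2(image))
magnitude = np.abs(spectrum)

# Locate the bright spots and express them as offsets from the centre.
rows, cols = np.nonzero(magnitude > 0.5 * magnitude.max())
center = size // 2
offsets = sorted((int(r - center), int(c - center)) for r, c in zip(rows, cols))
```

`offsets` holds (vertical, horizontal) frequency pairs; a blurred pattern would spread energy over additional bins around these two spots.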

Magnitude vs. Phase
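
As a brief sketch of what the two terms refer to: every Fourier coefficient is a complex number whose absolute value is the magnitude and whose angle is the phase. The random test image below is an assumption used only to show that both parts together reproduce the original image.

```python
import numpy as np

rng = np.random.default_rng(0)
image = rng.random((16, 16))          # stand-in image (assumed)

spectrum = np.fft.fft2(image)
magnitude = np.abs(spectrum)          # how strongly each sine wave contributes
phase = np.angle(spectrum)            # how each sine wave is shifted

# Recombining magnitude and phase restores the original coefficients.
reconstructed = np.fft.ifft2(magnitude * np.exp(1j * phase)).real
```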

Power Spectrum
[Figure: panels A, B, C and 1, 2, 3; axis label: fx (cycles/image pixel size).]

Hough-Transformation
Detection of straight lines in grayscale images

Hough Transformation
P.V.C. Hough 1962: US Patent
Line in parametric form (Hesse normal form): $r = x\cos\theta + y\sin\theta$
[Figure: a line in image space (x, y) with $r_0 = x\cos\theta_0 + y\sin\theta_0$ corresponds to the point $(\theta_0, r_0)$ in parameter space $(\theta, r)$.]

Hough Transform for Collinear Points
All lines that pass through a point P(x, y) are defined by: $r = x\cos\theta + y\sin\theta$
Collinear points are detected in parameter space: the curves of all points on the same line intersect at that line's $(\theta, r)$.
[Figure: example in image space and parameter space.]
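
The voting scheme behind this can be sketched as follows. This is a minimal illustration rather than a full line detector: the function name `hough_accumulator`, the 1-degree angle step, the rounding of r to whole pixels, and the sample points are all assumptions.

```python
import numpy as np

def hough_accumulator(points, n_theta=180, r_max=100):
    """Vote in (r, theta) space for every line through each point."""
    thetas = np.deg2rad(np.arange(n_theta))          # 0 to 179 degrees
    acc = np.zeros((2 * r_max + 1, n_theta), dtype=int)
    for x, y in points:
        # r = x cos(theta) + y sin(theta), evaluated for all angles at once.
        r = np.round(x * np.cos(thetas) + y * np.sin(thetas)).astype(int)
        acc[r + r_max, np.arange(n_theta)] += 1      # offset so negative r fits
    return acc, thetas

# Ten collinear points on the horizontal line y = 20.
points = [(x, 20) for x in range(0, 50, 5)]
acc, thetas = hough_accumulator(points)

# The accumulator cell with the most votes identifies the common line.
r_idx, t_idx = np.unravel_index(np.argmax(acc), acc.shape)
best_r = r_idx - 100
best_theta_deg = np.rad2deg(thetas[t_idx])
```

For these points the maximum lies at r = 20 and θ = 90°, which is the Hesse normal form of the line y = 20.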

A simple example
[Figure: example with axes labelled φ and p.]

Morphology
Morph means “Shape”.
We do Morphology for Shape Analysis & Shape Study.
Shape analysis is easy in the case of binary images, where pixel locations describe the shape.
Digital Morphology is a way to describe or analyze the shape of objects in digital images.

Structuring Element
An essential part of morphological operations is the Structuring Element used to probe the input image.
Two-dimensional structuring elements consist of a matrix, much smaller than the image being processed.
• Structuring elements can have varying sizes
• Element values are 0, 1 and none (!)
• Structuring elements have an origin (anchor pixel)

Erosion
Brief Description
To delete or to reduce something. Pixels not matching a given pattern are deleted from the image.
Basic effect
• Erode away the boundaries of regions of foreground pixels (i.e. white pixels, typically).
Common names: Erode, Shrink, Reduce

Erosion
Effect of erosion using a 3 × 3 square structuring element.
A 3 × 3 square structuring element: set of coordinate points = { (-1, -1), (0, -1), (1, -1), (-1, 0), (0, 0), (1, 0), (-1, 1), (0, 1), (1, 1) }
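
A minimal NumPy sketch of binary erosion with this 3 × 3 square. It assumes an odd-sized structuring element with its origin at the centre and treats everything outside the image as background; the function name `erode` and the test image are illustrative only.

```python
import numpy as np

def erode(image, se):
    """Keep a pixel only if the structuring element fits entirely
    inside the foreground when centred on that pixel."""
    h, w = se.shape
    rows, cols = image.shape
    # Zero padding: pixels outside the image count as background (assumption).
    padded = np.pad(image, ((h // 2, h // 2), (w // 2, w // 2)), constant_values=0)
    out = np.ones_like(image)
    for dy in range(h):
        for dx in range(w):
            if se[dy, dx]:
                # AND with the image shifted by this element's offset.
                out &= padded[dy:dy + rows, dx:dx + cols]
    return out

square_se = np.ones((3, 3), dtype=np.uint8)
image = np.zeros((7, 7), dtype=np.uint8)
image[1:6, 1:6] = 1                    # 5 x 5 foreground square

eroded = erode(image, square_se)       # boundary layer is removed
```

The 5 × 5 square shrinks to 3 × 3: one layer of boundary pixels is eroded away on every side.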

Dilation
Dilation is the set of all points in the image where the (reflected) structuring element “touches” the foreground.
• Consider each pixel belonging to the foreground
• Replicate the structuring element at that pixel

Dilation
Effect of dilation using a 3 × 3 square structuring element.
A 3 × 3 square structuring element: set of coordinate points = { (-1, -1), (0, -1), (1, -1), (-1, 0), (0, 0), (1, 0), (-1, 1), (0, 1), (1, 1) }
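
Dilation can be sketched in the same style. As before, the centred origin, the zero padding, and the name `dilate` are assumptions; the element is reflected so that the result equals a copy of the structuring element stamped onto every foreground pixel.

```python
import numpy as np

def dilate(image, se):
    """Set a pixel if the reflected structuring element, centred on it,
    touches at least one foreground pixel."""
    h, w = se.shape
    rows, cols = image.shape
    padded = np.pad(image, ((h // 2, h // 2), (w // 2, w // 2)), constant_values=0)
    reflected = se[::-1, ::-1]         # reflection about the origin
    out = np.zeros_like(image)
    for dy in range(h):
        for dx in range(w):
            if reflected[dy, dx]:
                # OR with the image shifted by this element's offset.
                out |= padded[dy:dy + rows, dx:dx + cols]
    return out

square_se = np.ones((3, 3), dtype=np.uint8)
image = np.zeros((5, 5), dtype=np.uint8)
image[2, 2] = 1                        # a single foreground pixel

dilated = dilate(image, square_se)     # grows into a 3 x 3 block
```

For a symmetric element such as the 3 × 3 square the reflection changes nothing, but it matters for asymmetric elements.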

Combining Dilation and Erosion
Morphological Opening: opening is defined as an erosion, followed by a dilation.
Morphological Closing: closing is defined as a dilation, followed by an erosion.
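
Both compositions can be sketched with two small helpers. The helper names, the centred origin, the zero padding, and the two test images are assumptions for illustration: opening removes an isolated noise pixel, closing fills a one-pixel hole.

```python
import numpy as np

def _shifted_views(image, se):
    """One shifted copy of the image per set element of the structuring element."""
    h, w = se.shape
    rows, cols = image.shape
    padded = np.pad(image, ((h // 2, h // 2), (w // 2, w // 2)), constant_values=0)
    return [padded[dy:dy + rows, dx:dx + cols]
            for dy in range(h) for dx in range(w) if se[dy, dx]]

def erode(image, se):
    return np.logical_and.reduce(_shifted_views(image, se)).astype(image.dtype)

def dilate(image, se):
    return np.logical_or.reduce(_shifted_views(image, se[::-1, ::-1])).astype(image.dtype)

def opening(image, se):
    return dilate(erode(image, se), se)    # erosion, then dilation

def closing(image, se):
    return erode(dilate(image, se), se)    # dilation, then erosion

se = np.ones((3, 3), dtype=np.uint8)

noisy = np.zeros((9, 9), dtype=np.uint8)
noisy[2:7, 2:7] = 1
noisy[8, 8] = 1                            # isolated noise pixel
opened = opening(noisy, se)

holed = np.zeros((9, 9), dtype=np.uint8)
holed[2:7, 2:7] = 1
holed[4, 4] = 0                            # one-pixel hole
closed = closing(holed, se)
```

In both cases the 5 × 5 square itself is left unchanged, which is the reason for combining the two operations instead of applying only one.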

Opening
Structuring element: 3x3 square
1 1 1
1 1 1
1 1 1

Closing
Structuring element: 3x3 square
1 1 1
1 1 1
1 1 1

Hit-and-miss Transform
• Used to look for particular patterns of foreground and background pixels
• Very simple object recognition
• All other morphological operations can be derived from it!!
Input:
• Binary Image
• Structuring Element, containing 0s, 1s and “don’t cares”
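
A minimal sketch of the transform, assuming that "don't care" entries are encoded as -1 and that pixels outside the image count as background. The corner pattern below is one plausible structuring element for a convex corner and is not taken from the slides.

```python
import numpy as np

def hit_and_miss(image, se):
    """Mark pixels whose neighbourhood matches the pattern exactly.
    se entries: 1 = foreground, 0 = background, -1 = don't care (assumed encoding)."""
    h, w = se.shape
    rows, cols = image.shape
    padded = np.pad(image, ((h // 2, h // 2), (w // 2, w // 2)), constant_values=0)
    out = np.ones(image.shape, dtype=bool)
    for dy in range(h):
        for dx in range(w):
            if se[dy, dx] == -1:
                continue                       # this position may be anything
            window = padded[dy:dy + rows, dx:dx + cols]
            out &= (window == se[dy, dx])      # must match 0 or 1 exactly
    return out

# Hypothetical pattern for a bottom-left convex corner.
corner_se = np.array([[-1, 1, -1],
                      [ 0, 1,  1],
                      [ 0, 0, -1]])

image = np.zeros((8, 8), dtype=np.uint8)
image[2:6, 2:6] = 1                            # 4 x 4 square

corners = hit_and_miss(image, corner_se)
corner_positions = np.argwhere(corners).tolist()
```

Only the bottom-left corner of the square matches; rotating the pattern by 90° three times would give elements for the remaining corners.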

Hit-and-Miss Transform
Effect of the hit-and-miss based right angle convex corner detector.
Four structuring elements are used for corner finding in binary images.

Thinning
[Figure: original image, thresholded image, thinning result.]
• A simple way to obtain the skeleton of the character is to thin the image until convergence.
• The line is broken at some locations, which might cause problems during the recognition process.

Example Thinning
We use two Hit-and-miss Transforms

Introduction to Image Segmentation
Image Segmentation
[Figure: image with regions labelled Sky, Tree, Tree, ?, ?, Grass.]

How can we divide an Image into Uniform Regions?
Segmentation techniques can be classified as either contextual or non-contextual.
Non-contextual techniques ignore the relationships that exist between features in an image; pixels are simply grouped together on the basis of some global attribute, such as grey level.
Contextual techniques additionally exploit the relationships between image features. Thus, a contextual technique might group together pixels that have similar grey levels and are close to one another.

Greylevel Histogram-based Segmentation
First, we will look at two very simple non-contextual image segmentation techniques that are based on the greylevel histogram of an image:
• Thresholding
• Clustering

Greylevel Thresholding
We can easily understand segmentation based on thresholding by looking at the histogram of the low-noise object/background image. There is a clear ‘valley’ between the two peaks.
[Figure: histogram with peaks labelled Object and Background, and threshold T.]
We can define the greylevel thresholding algorithm as follows:
If the greylevel of pixel p <= T then
    pixel p is an object pixel
else
    pixel p is a background pixel
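
The rule above translates directly into a vectorised comparison. In this sketch the function name, the sample grey levels, and the value of `T` are assumptions; in practice T would be read from the valley of the histogram.

```python
import numpy as np

def threshold_segment(image, T):
    """True marks object pixels (grey level <= T), False marks background."""
    return image <= T

# Dark object on a bright background (made-up grey levels).
image = np.array([[ 20,  30, 200],
                  [ 25, 210, 220],
                  [ 15, 205, 215]])
T = 128                                # hypothetical threshold in the valley

object_mask = threshold_segment(image, T)
n_object = int(object_mask.sum())      # number of object pixels
```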

Pixel Classification by Threshold Histogram
Problem: connected image regions do not always have the same intensity, and the histogram carries no information about where pixels are located.
[Figure: original, histogram, segmentation.]

Local Thresholding
A more complex thresholding algorithm uses a spatially varying threshold. This approach is very useful to compensate for the effects of non-uniform illumination. If T depends on the coordinates x and y, this is referred to as Dynamic, Adaptive or Local Thresholding.

Local Thresholding
To find the local threshold, statistically examine the intensity values of the local neighborhood of each pixel. The statistic which is most appropriate depends largely on the input image. Simple and fast functions include:
1. The mean of the local intensity distribution: $T = \text{mean}$
2. The median value: $T = \text{median}$
3. The mean of the minimum and maximum values: $T = \frac{\min + \max}{2}$
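
The three statistics can be computed per pixel with a sliding window. This is a sketch under assumptions: the function name `local_threshold`, the square window, and the edge-replicating border handling are illustrative choices, and `sliding_window_view` requires NumPy 1.20 or newer.

```python
import numpy as np

def local_threshold(image, size=3, statistic="mean"):
    """Return a per-pixel threshold T(x, y) from a size x size neighbourhood."""
    pad = size // 2
    # Replicate border pixels so every pixel has a full window (assumption).
    padded = np.pad(image.astype(float), pad, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    if statistic == "mean":
        return windows.mean(axis=(-2, -1))
    if statistic == "median":
        return np.median(windows, axis=(-2, -1))
    if statistic == "midrange":
        return (windows.min(axis=(-2, -1)) + windows.max(axis=(-2, -1))) / 2
    raise ValueError(f"unknown statistic: {statistic}")

image = np.array([[0, 0, 0],
                  [0, 9, 0],
                  [0, 0, 0]])

T_mean = local_threshold(image, 3, "mean")
T_median = local_threshold(image, 3, "median")
T_midrange = local_threshold(image, 3, "midrange")
```

For the centre pixel the three statistics give 1.0, 0.0 and 4.5, which shows how strongly the choice of statistic can change the resulting threshold for the same neighbourhood.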
