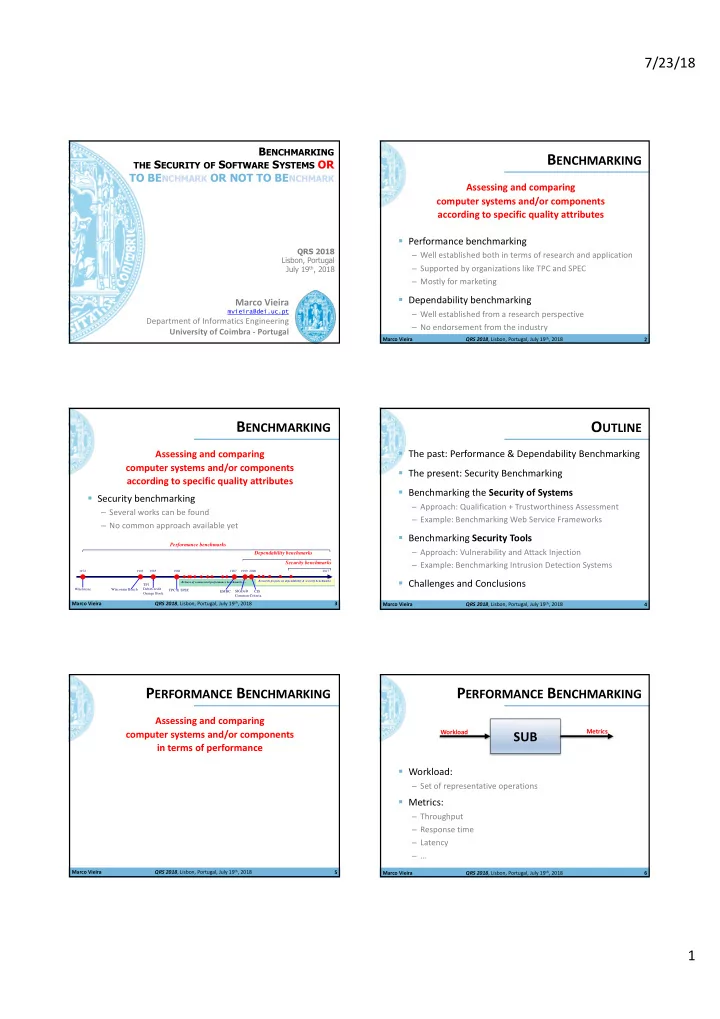

7/23/18

BENCHMARKING THE SECURITY OF SOFTWARE SYSTEMS
OR TO BENCHMARK OR NOT TO BENCHMARK

QRS 2018
Lisbon, Portugal
July 19th, 2018

Marco Vieira
mvieira@dei.uc.pt
Department of Informatics Engineering
University of Coimbra - Portugal

BENCHMARKING
Assessing and comparing computer systems and/or components according to specific quality attributes
§ Performance benchmarking
– Well established both in terms of research and application
– Supported by organizations like TPC and SPEC
– Mostly for marketing
§ Dependability benchmarking
– Well established from a research perspective
– No endorsement from the industry

OUTLINE
§ The past: Performance & Dependability Benchmarking
§ The present: Security Benchmarking
§ Benchmarking the Security of Systems
– Approach: Qualification + Trustworthiness Assessment
– Example: Benchmarking Web Service Frameworks
§ Benchmarking Security Tools
– Approach: Vulnerability and Attack Injection
– Example: Benchmarking Intrusion Detection Systems
§ Challenges and Conclusions

BENCHMARKING
Assessing and comparing computer systems and/or components according to specific quality attributes
§ Security benchmarking
– Several works can be found
– No common approach available yet
[Figure: timeline (1972 to 2017) of performance, dependability and security benchmarks. It marks the release of commercial performance benchmarks and research projects on dependability & security benchmarks. Milestones include Whetstone, Wisconsin Bench, DebitCredit, TP1, TPC & SPEC, the Orange Book, Common Criteria, SIGDeB, EMBC and CIS.]

PERFORMANCE BENCHMARKING
Assessing and comparing computer systems and/or components in terms of performance
[Diagram: Workload → SUB (System Under Benchmarking) → Metrics]
§ Workload:
– Set of representative operations
§ Metrics:
– Throughput
– Response time
– Latency
– …
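The slides name throughput, response time and latency as typical performance metrics, but they do not fix how these are computed. The Python sketch below shows one common way to derive throughput and response-time statistics from a run. It assumes that each completed operation is logged as a hypothetical `Operation(start, end)` record in seconds. The nearest-rank percentile is an assumed choice, not something the benchmark prescribes.

```python
import math
from dataclasses import dataclass
from statistics import mean


@dataclass
class Operation:
    """Hypothetical log record for one workload operation (times in seconds)."""
    start: float  # when the request was submitted to the SUB
    end: float    # when the response was received


def throughput(ops: list[Operation]) -> float:
    """Completed operations per second over the whole measurement interval."""
    interval = max(o.end for o in ops) - min(o.start for o in ops)
    if interval <= 0:
        raise ValueError("measurement interval must be positive")
    return len(ops) / interval


def mean_response_time(ops: list[Operation]) -> float:
    """Average time between submitting a request and receiving its response."""
    return mean(o.end - o.start for o in ops)


def percentile_response_time(ops: list[Operation], p: float) -> float:
    """Nearest-rank p-th percentile of the response times (0 < p <= 100)."""
    times = sorted(o.end - o.start for o in ops)
    rank = max(1, math.ceil(p / 100 * len(times)))
    return times[rank - 1]


if __name__ == "__main__":
    run = [Operation(0.0, 0.5), Operation(0.5, 1.0),
           Operation(1.0, 2.0), Operation(1.5, 2.0)]
    print(throughput(run))                    # 2.0 operations per second
    print(mean_response_time(run))            # 0.625 seconds
    print(percentile_response_time(run, 95))  # 1.0 seconds
```

Tail percentiles are reported alongside the mean because a few slow operations can hide behind a good average.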

TPC-C (1992)
[Diagram: Workload → DBMS → Metrics]
§ Workload:
– Database transactions
§ Metrics:
– Transaction rate (tpmC)
– Price per transaction ($/tpmC)
Although some integrity tests are performed, it assumes that nothing fails.

DEPENDABILITY BENCHMARKING
Assessing and comparing computer systems and/or components considering dependability attributes

DEPENDABILITY BENCHMARKING
[Diagram: Workload and Faultload → SUB → Experimental metrics; Models + Parameters (fault rates, MTBF, etc.) → Unconditional metrics]
§ Faultload:
– Set of representative faults, injected into the system
§ Metrics:
– Performance and/or dependability
• Both baseline and in the presence of faults
– Unconditional and/or direct

DBENCH-OLTP (2005)
[Diagram: Workload and Faultload → SUB → Experimental metrics]
§ Workload:
– TPC-C transactions
§ Faultload:
– Operator faults + Software faults + HW component failures
§ Metrics:
– Performance: tpmC, $/tpmC, Tf, $/Tf
– Dependability: Ne, AvtS, AvtC

DBENCH-OLTP (2005)
[Figure: results for systems A to K with a faultload of operator faults. It shows baseline performance (tpmC, $/tpmC), performance with faults (Tf, $/Tf) and % availability (AvtS for the server, AvtC for the clients).]
Does not take into account malicious behaviors (faults = vulnerability + attack)
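TPC-C and DBench-OLTP both report price-performance ratios. $/tpmC divides the system price by the baseline transaction rate, and $/Tf does the same for the rate measured while the faultload is applied. The sketch below computes these ratios together with availability percentages. As a simplifying assumption, it approximates AvtS and AvtC as the share of the run during which the server (or the clients) were served, given as lists of `(start, end)` up-intervals. The names `summarize`, `availability_pct` and `DBenchResult`, and this interval representation, are hypothetical rather than taken from the DBench-OLTP specification. Ne is left out. The example inputs are made up.

```python
from dataclasses import dataclass


@dataclass
class DBenchResult:
    """Hypothetical container for the metrics of one DBench-OLTP style run."""
    tpmc: float            # baseline transactions per minute (no faults)
    price_per_tpmc: float
    tf: float              # transactions per minute with the faultload applied
    price_per_tf: float
    avt_server: float      # % of the run the server was available (assumed definition)
    avt_clients: float     # % of the run the clients were served (assumed definition)


def availability_pct(up_intervals: list[tuple[float, float]], duration: float) -> float:
    """Percentage of the run covered by non-overlapping (start, end) up-intervals."""
    up_time = sum(end - start for start, end in up_intervals)
    return 100.0 * up_time / duration


def summarize(system_price: float, tpmc: float, tf: float,
              server_up: list[tuple[float, float]],
              clients_up: list[tuple[float, float]],
              duration: float) -> DBenchResult:
    """Combine raw measurements into performance and dependability metrics."""
    return DBenchResult(
        tpmc=tpmc,
        price_per_tpmc=system_price / tpmc,
        tf=tf,
        price_per_tf=system_price / tf,
        avt_server=availability_pct(server_up, duration),
        avt_clients=availability_pct(clients_up, duration),
    )


if __name__ == "__main__":
    r = summarize(system_price=100_000, tpmc=4000, tf=3200,
                  server_up=[(0, 50), (60, 100)],
                  clients_up=[(0, 40), (70, 100)],
                  duration=100)
    print(r.price_per_tpmc, r.price_per_tf)  # 25.0 31.25
    print(r.avt_server, r.avt_clients)       # 90.0 70.0
```

Comparing $/tpmC with $/Tf shows how much of the price-performance a system keeps once faults are injected.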

SECURITY BENCHMARKING
Assessing and comparing computer systems and/or components considering security aspects
§ Benchmarking the Security of Systems / Components
– Systems that should implement security requirements
– OS, middleware, server software, etc.
§ Benchmarking Security Tools
– Tools used to improve the security of systems
– Penetration testers, static analyzers, IDS, etc.

BENCHMARKING SECURITY OF SYSTEMS
[Diagram: Workload and Attackload → SUB → Experimental metrics; Models + Parameters (vulnerability exposure, mean time between attacks, etc.) → Unconditional metrics]
§ Attackload:
– Representative attacks
§ Metrics:
– Performance + dependability
– Security (e.g., number of vulnerabilities, attack detection)
Attacking what? Do we know the vulnerabilities? What are representative attacks?
Does not work if one wants to benchmark how secure different systems are! E.g., does the number of vulnerabilities of a system represent anything?

A DIFFERENT APPROACH…
[Diagram: SUBs → Security Qualification → Acceptable / Unacceptable (Security = 0)]
§ Security Qualification:
– Apply state-of-the-art techniques and tools to detect vulnerabilities
– SUBs with vulnerabilities are:
• Disqualified!
• Or vulnerabilities are fixed…

A DIFFERENT APPROACH…
[Diagram: SUBs → Security Qualification → Acceptable → Trustworthiness Assessment → Metrics; Unacceptable → Security = 0]
§ Trustworthiness Assessment:
– Gather evidence of how much the SUBs can be trusted
– e.g., best coding practices, development process, bad smells

A DIFFERENT APPROACH…
[Diagram: SUBs → Security Qualification → Acceptable → Trustworthiness Assessment → Metrics; Unacceptable → Security = 0]
§ Metrics:
– Portray trust from a user perspective
– Dynamic: may change over time
– Depend on the type of evidence gathered

EXAMPLE: WEB SERVICE FRAMEWORKS
[Diagram: WSFs → Qualification (testing) → Acceptable → Trust. Assessment (CPU + mem.) → Score; Unacceptable → Security = 0]
§ Qualification:
– DoS Attacks
– Coercive Parsing, Malformed XML, Malicious Attachment, etc.
§ Trustworthiness Assessment:
– Different metrics for different attack vectors
– Quality model to compute a score
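The two-phase approach can be sketched as a filter followed by a scoring step. Any SUB in which the qualification phase found vulnerabilities gets Security = 0, and the remaining SUBs are ranked by a trustworthiness score. The slides only say that a quality model computes this score. The weighted average of normalized evidence values below is therefore a hypothetical stand-in for that model, as are the `cpu`/`memory` evidence names (chosen to echo the WSF example) and all class and function names.

```python
from dataclasses import dataclass, field


@dataclass
class SystemUnderBenchmark:
    name: str
    vulnerabilities_found: int  # result of the security qualification phase
    # Hypothetical evidence values, normalized to [0, 1] (1 = most trustworthy).
    evidence: dict[str, float] = field(default_factory=dict)


def is_acceptable(sub: SystemUnderBenchmark) -> bool:
    """Qualification: a SUB with any detected vulnerability is disqualified."""
    return sub.vulnerabilities_found == 0


def trust_score(evidence: dict[str, float], weights: dict[str, float]) -> float:
    """Assumed quality model: weighted average of the normalized evidence values."""
    total_weight = sum(weights.values())
    return sum(w * evidence.get(metric, 0.0) for metric, w in weights.items()) / total_weight


def benchmark(subs: list[SystemUnderBenchmark],
              weights: dict[str, float]) -> list[tuple[str, float]]:
    """Return (name, score) pairs ranked from most to least trustworthy."""
    scores = [(s.name, trust_score(s.evidence, weights) if is_acceptable(s) else 0.0)
              for s in subs]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    weights = {"cpu": 1.0, "memory": 1.0}  # hypothetical, equal weights
    subs = [
        SystemUnderBenchmark("A", 0, {"cpu": 0.5, "memory": 1.0}),
        SystemUnderBenchmark("B", 2, {"cpu": 1.0, "memory": 1.0}),
        SystemUnderBenchmark("C", 0, {"cpu": 1.0, "memory": 1.0}),
    ]
    print(benchmark(subs, weights))  # [('C', 1.0), ('A', 0.75), ('B', 0.0)]
```

In this sketch, B scores 0 despite good evidence because it failed qualification. If its vulnerabilities were fixed, it would simply be re-qualified with `vulnerabilities_found == 0` and then scored like the others.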

QUALITY MODEL
[Figure]

SYSTEMS UNDER BENCHMARKING
[Figure]

TRUSTWORTHINESS RESULTS
[Figure]

BENCHMARKING SECURITY TOOLS
[Diagram: Workload and Faultload (vulnerabilities + attacks) → SUB; Sec. Tool → Data → Experimental metrics]
§ Faultload:
– Vulnerabilities are injected
– Attacks target the injected vulnerabilities
§ Data can be collected for benchmarking security tools
– Penetration testers, static analyzers, IDS, etc.

VULNERABILITY AND ATTACK INJECTION
[Figure]

EXAMPLE: BENCHMARKING IDS
§ Security requires a defense-in-depth approach
– Coding best practices
– Testing
– Static analysis
– …
§ Vulnerability-free code is hard (or even impossible) to achieve…
§ Intrusion detection tools support a post-deployment approach
– For protecting against known and unknown attacks
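The attacks in the faultload target vulnerabilities that were deliberately injected, so the benchmark knows which requests are malicious. The alerts raised by the tool under test can then be scored against that ground truth. The slides do not name the metrics used for IDS. The sketch below assumes the conventional detection rate (recall), false-positive rate and precision, and the `ids_metrics` function is hypothetical.

```python
def ids_metrics(is_attack: list[bool], alerted: list[bool]) -> dict[str, float]:
    """Score IDS alerts against the ground truth known from attack injection.

    is_attack[i]: True if request i was an injected attack.
    alerted[i]: True if the IDS raised an alert for request i.
    """
    if len(is_attack) != len(alerted):
        raise ValueError("both lists must describe the same requests")
    pairs = list(zip(is_attack, alerted))
    tp = sum(1 for a, d in pairs if a and d)          # attacks detected
    fn = sum(1 for a, d in pairs if a and not d)      # attacks missed
    fp = sum(1 for a, d in pairs if not a and d)      # false alarms
    tn = sum(1 for a, d in pairs if not a and not d)  # benign, no alert

    def ratio(num: int, den: int) -> float:
        # Report 0.0 when a denominator is empty instead of dividing by zero.
        return num / den if den else 0.0

    return {
        "detection_rate": ratio(tp, tp + fn),
        "false_positive_rate": ratio(fp, fp + tn),
        "precision": ratio(tp, tp + fp),
    }


if __name__ == "__main__":
    truth = [True, True, False, False, False]
    alerts = [True, False, True, False, False]
    print(ids_metrics(truth, alerts))
    # {'detection_rate': 0.5, 'false_positive_rate': 0.3333333333333333, 'precision': 0.5}
```

Reporting false positives next to detections matters because an IDS that alerts on everything would reach a perfect detection rate.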
