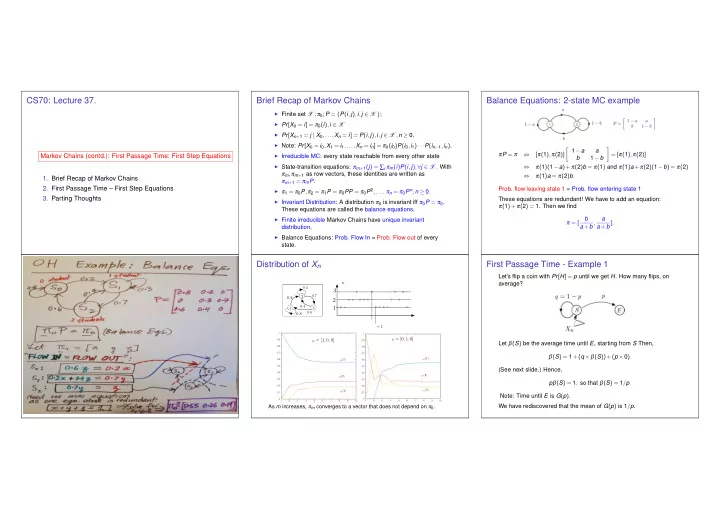

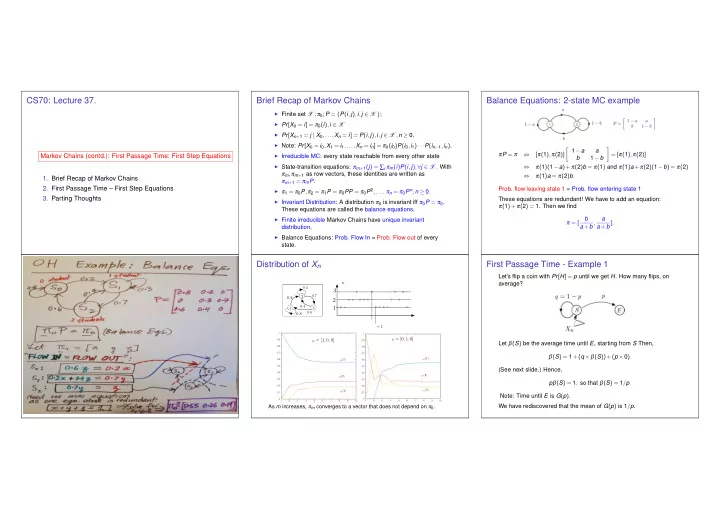

CS70: Lecture 37. Markov Chains (contd.): First Passage Time: First Step Equations

1. Brief Recap of Markov Chains
2. First Passage Time – First Step Equations
3. Parting Thoughts

Brief Recap of Markov Chains

◮ Finite set $\mathcal{X}$; $\pi_0$; $P = \{P(i,j), i, j \in \mathcal{X}\}$;
◮ $\Pr[X_0 = i] = \pi_0(i)$, $i \in \mathcal{X}$
◮ $\Pr[X_{n+1} = j \mid X_0, \ldots, X_n = i] = P(i,j)$, $i, j \in \mathcal{X}$, $n \geq 0$.
◮ Note: $\Pr[X_0 = i_0, X_1 = i_1, \ldots, X_n = i_n] = \pi_0(i_0) P(i_0, i_1) \cdots P(i_{n-1}, i_n)$.
◮ Irreducible MC: every state is reachable from every other state.
◮ State-transition equations: $\pi_{m+1}(j) = \sum_i \pi_m(i) P(i,j)$, $\forall j \in \mathcal{X}$. With $\pi_m$, $\pi_{m+1}$ as row vectors, these identities are written as $\pi_{m+1} = \pi_m P$.
◮ $\pi_1 = \pi_0 P$, $\pi_2 = \pi_1 P = \pi_0 P P = \pi_0 P^2, \ldots$, $\pi_n = \pi_0 P^n$, $n \geq 0$.
◮ Invariant Distribution: A distribution $\pi_0$ is invariant iff $\pi_0 P = \pi_0$. These equations are called the balance equations.
◮ Finite irreducible Markov Chains have a unique invariant distribution.
◮ Balance Equations: Prob. flow in = Prob. flow out, for every state.

Balance Equations: 2-state MC example

(State 1 moves to state 2 with probability $a$; state 2 moves to state 1 with probability $b$.)

$$\pi P = \pi \Leftrightarrow [\pi(1), \pi(2)] \begin{bmatrix} 1-a & a \\ b & 1-b \end{bmatrix} = [\pi(1), \pi(2)]$$
$$\Leftrightarrow \pi(1)(1-a) + \pi(2) b = \pi(1) \text{ and } \pi(1) a + \pi(2)(1-b) = \pi(2)$$
$$\Leftrightarrow \pi(1) a = \pi(2) b.$$

Prob. flow leaving state 1 = Prob. flow entering state 1.

These equations are redundant! We have to add an equation: $\pi(1) + \pi(2) = 1$. Then we find
$$\pi = \left[\frac{b}{a+b}, \frac{a}{a+b}\right].$$

Distribution of $X_n$

[Figure: a three-state Markov chain, and plots of $\pi_m(1)$, $\pi_m(2)$, $\pi_m(3)$ versus $m$ for $\pi_0 = [0, 1, 0]$ and for $\pi_0 = [1, 0, 0]$.]

As $m$ increases, $\pi_m$ converges to a vector that does not depend on $\pi_0$.

First Passage Time - Example 1

Let's flip a coin with $\Pr[H] = p$ until we get $H$. How many flips, on average?

Let $\beta(S)$ be the average time until $E$, starting from $S$. Then, with $q = 1 - p$,
$$\beta(S) = 1 + (q \times \beta(S)) + (p \times 0).$$
(See next slide.) Hence, $p\beta(S) = 1$, so that $\beta(S) = 1/p$.

Note: Time until $E$ is $G(p)$. We have rediscovered that the mean of $G(p)$ is $1/p$.
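The 2-state balance equations and the recursion $\pi_{m+1} = \pi_m P$ can be checked numerically. Below is a minimal Python sketch, assuming hypothetical values $a = 1/10$ and $b = 3/10$ chosen only for illustration; the helper names `step`, `distribution_at`, and `two_state_invariant` are likewise hypothetical. Exact rationals from `fractions.Fraction` let the check $\pi P = \pi$ be an exact equality rather than a floating-point comparison.

```python
from fractions import Fraction


def step(pi, P):
    """One step of the state-transition equations:
    pi_{m+1}(j) = sum_i pi_m(i) * P(i, j), with pi as a row vector (list)."""
    n = len(P)
    return [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]


def distribution_at(pi0, P, n):
    """pi_n = pi_0 P^n, computed by applying step() n times."""
    pi = list(pi0)
    for _ in range(n):
        pi = step(pi, P)
    return pi


def two_state_invariant(a, b):
    """Invariant distribution of the 2-state chain, from
    pi(1) a = pi(2) b together with pi(1) + pi(2) = 1."""
    return [b / (a + b), a / (a + b)]


# Hypothetical parameters, for illustration only: a = P(1, 2), b = P(2, 1).
# List index 0 is state 1 and index 1 is state 2.
a, b = Fraction(1, 10), Fraction(3, 10)
P = [[1 - a, a],
     [b, 1 - b]]

pi = two_state_invariant(a, b)
assert step(pi, P) == pi         # balance equations: pi P = pi
assert pi[0] * a == pi[1] * b    # flow 1 -> 2 equals flow 2 -> 1

print([float(x) for x in pi])    # [0.75, 0.25]
# pi_50 from two different starting distributions: both end up close to pi.
for pi0 in ([1, 0], [0, 1]):
    print([float(x) for x in distribution_at(pi0, P, 50)])
```

For this 2-state chain, $\pi_{m+1}(1) - \pi(1) = (1 - a - b)(\pi_m(1) - \pi(1))$, so the gap shrinks by the factor $|1 - a - b|$ at every step, which is why $\pi_{50}$ barely depends on $\pi_0$ here.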

First Passage Time - Example 1

Let's flip a coin with $\Pr[H] = p$ until we get $H$. Average no. of flips?

Let $\beta(S)$ be the average time until $E$. Then,
$$\beta(S) = 1 + (q \times \beta(S)) + (p \times 0).$$

Justification: Let $N$ be the random number of steps until $E$, starting from $S$. Let also $N'$ be the number of steps until $E$, after the second visit to $S$. Finally, let $Z = 1\{\text{first flip} = H\}$, i.e., $Z = 1$ if the first flip is $H$ and $0$ else. Then,
$$N = 1 + (1 - Z) \times N' + Z \times 0.$$
Now, $Z$ and $N'$ are independent. Also, $E[N'] = E[N] = \beta(S)$. Hence, taking expectation of both sides of the equation, we get:
$$\beta(S) = E[N] = 1 + ((1 - p) \times E[N']) + (p \times 0) = 1 + (q \times \beta(S)) + (p \times 0).$$

First Passage Time - Example 2

Let's flip a coin with $\Pr[H] = p$ until we get two consecutive $H$s. How many flips, on average?

Here is a picture: [Figure: Markov chain with states $S$ (start), $H$ and $T$ (result of the last flip), and $E$ (two consecutive $H$s).]

Let $\beta(i)$ be the average time from state $i$ until the MC hits state $E$. We claim that (these are called the first step equations)
$$\beta(S) = 1 + p\beta(H) + q\beta(T)$$
$$\beta(H) = 1 + p \cdot 0 + q\beta(T)$$
$$\beta(T) = 1 + p\beta(H) + q\beta(T).$$
Solving, we find $\beta(S) = 2 + 3qp^{-1} + q^2p^{-2}$. (E.g., $\beta(S) = 6$ if $p = 1/2$.)

First Passage Time - Example 2

Let us justify the first step equation for $\beta(T)$. The others are similar.

Let $N(T)$ be the random number of steps, starting from $T$, until the MC hits $E$. Let also $N(H)$ be defined similarly. Finally, let $N'(T)$ be the number of steps after the second visit to $T$ until the MC hits $E$. Then,
$$N(T) = 1 + Z \times N(H) + (1 - Z) \times N'(T)$$
where $Z = 1\{\text{first flip in } T \text{ is } H\}$. Since $Z$ and $N(H)$ are independent, and $Z$ and $N'(T)$ are independent, taking expectations, we get
$$E[N(T)] = 1 + pE[N(H)] + qE[N'(T)],$$
i.e., since $E[N(H)] = \beta(H)$ and $E[N'(T)] = E[N(T)] = \beta(T)$,
$$\beta(T) = 1 + p\beta(H) + q\beta(T).$$

First Passage Time - Example 3: Practice Exercise

You keep rolling a fair six-sided die until the sum of the last two rolls is 8.

Question: How many times do you have to roll the die before you stop, on average?

Spoiler Alert: Solution on next slide (but don't look: try to do it yourself first!)

Example 3: Practice Exercise Solution

(State $S$: no roll yet; state $i$: the last roll was $i$.)

$$\beta(S) = 1 + \frac{1}{6}\sum_{j=1}^{6}\beta(j); \quad \beta(1) = 1 + \frac{1}{6}\sum_{j=1}^{6}\beta(j); \quad \beta(i) = 1 + \frac{1}{6}\sum_{j = 1, \ldots, 6;\ j \neq 8-i} \beta(j), \quad i = 2, \ldots, 6.$$

Symmetry: $\beta(2) = \cdots = \beta(6) =: \gamma$. Also, $\beta(1) = \beta(S)$. Thus,
$$\beta(S) = 1 + (5/6)\gamma + \beta(S)/6; \quad \gamma = 1 + (4/6)\gamma + (1/6)\beta(S).$$
$$\Rightarrow \cdots \quad \beta(S) = 8.4.$$

First Step Equations

Let $X_n$ be a MC on $\mathcal{X}$ and $A \subset \mathcal{X}$. Define $T_A = \min\{n \geq 0 \mid X_n \in A\}$. Let $\beta(i) = E[T_A \mid X_0 = i]$, $i \in \mathcal{X}$. The FSE are
$$\beta(i) = 0, \quad i \in A$$
$$\beta(i) = 1 + \sum_j P(i,j)\beta(j), \quad i \notin A.$$
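The first step equations are a linear system: for $i \notin A$, $\beta(i) - \sum_{j \notin A} P(i,j)\beta(j) = 1$. Below is a minimal Python sketch that solves this system exactly (Gauss-Jordan elimination over `fractions.Fraction`) and re-derives Examples 1, 2, and 3. The dict-of-dicts representation of $P$ and the function names `first_passage_times`, `coin_until_heads`, `coin_until_two_heads`, and `die_until_sum_eight` are assumptions made for this illustration, not part of the slides.

```python
from fractions import Fraction


def first_passage_times(P, A):
    """Solve the first step equations
        beta(i) = 0                             for i in A,
        beta(i) = 1 + sum_j P(i, j) * beta(j)   for i not in A.
    P[i][j] is the transition probability from i to j (missing entries are 0);
    every state outside A must be a key of P. Assumes E[T_A | X_0 = i] is
    finite for all i, so the linear system has a unique solution."""
    unknown = [s for s in P if s not in A]
    idx = {s: k for k, s in enumerate(unknown)}
    n = len(unknown)
    # Augmented matrix of (I - Q) beta = 1, where Q is P restricted to states outside A.
    M = [[Fraction(0)] * (n + 1) for _ in range(n)]
    for s in unknown:
        r = idx[s]
        M[r][r] += 1
        for t, prob in P[s].items():
            if t in idx:  # moves into A contribute beta = 0
                M[r][idx[t]] -= Fraction(prob)
        M[r][n] = Fraction(1)
    # Gauss-Jordan elimination in exact rational arithmetic.
    for c in range(n):
        pivot = next(r for r in range(c, n) if M[r][c] != 0)
        M[c], M[pivot] = M[pivot], M[c]
        pv = M[c][c]
        M[c] = [x / pv for x in M[c]]
        for r in range(n):
            if r != c and M[r][c] != 0:
                f = M[r][c]
                M[r] = [x - f * y for x, y in zip(M[r], M[c])]
    beta = {s: Fraction(0) for s in A}
    beta.update({s: M[idx[s]][n] for s in unknown})
    return beta


def coin_until_heads(p):
    """Example 1: flip until the first H. States: S (start), E (done)."""
    q = 1 - p
    return first_passage_times({"S": {"S": q, "E": p}}, {"E"})["S"]


def coin_until_two_heads(p):
    """Example 2: flip until two consecutive H's.
    States: S (start), H / T (last flip), E (done)."""
    q = 1 - p
    P = {"S": {"H": p, "T": q},
         "H": {"E": p, "T": q},
         "T": {"H": p, "T": q}}
    return first_passage_times(P, {"E"})["S"]


def die_until_sum_eight():
    """Example 3: roll a fair die until the last two rolls sum to 8.
    States: S (no roll yet), i = value of the last roll, E (done)."""
    sixth = Fraction(1, 6)
    P = {"S": {j: sixth for j in range(1, 7)}}
    for i in range(1, 7):
        P[i] = {}
        for j in range(1, 7):
            nxt = "E" if i + j == 8 else j
            P[i][nxt] = P[i].get(nxt, 0) + sixth
    return first_passage_times(P, {"E"})["S"]


print(coin_until_heads(Fraction(1, 3)))      # 3
print(coin_until_two_heads(Fraction(1, 2)))  # 6
print(die_until_sum_eight())                 # 42/5
```

The solver does not use the symmetry argument of Example 3; it solves all seven equations directly and still returns $42/5 = 8.4$. Exact fractions make it easy to compare against the closed forms $1/p$ and $2 + 3qp^{-1} + q^2p^{-2}$.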

Summary

Markov Chains
◮ Markov Chain: $\Pr[X_{n+1} = j \mid X_0, \ldots, X_n = i] = P(i,j)$
◮ First Passage Time:
  ◮ $A \subset \mathcal{X}$; $\beta(i) = E[T_A \mid X_0 = i]$;
  ◮ FSE: $\beta(i) = 1 + \sum_j P(i,j)\beta(j)$;
◮ $\pi_n = \pi_0 P^n$
◮ $\pi$ is invariant iff $\pi P = \pi$
◮ Irreducible $\Rightarrow$ one and only one invariant distribution $\pi$

Probability part of the course: key takeaways?

What should I take away about probability from this course? I mean, after the final?
◮ Given the uncertainty around us, we should understand some probability.
◮ "Being precise about being imprecise."
◮ 4 key concepts:
  1. Learn from observations to revise our biases, given by the role of the prior; Bayes' Theorem;
  2. Confidence Intervals: CLT, Chebyshev Bounds, WLLN.
  3. Regression/Estimation: $L[Y \mid X]$, $E[Y \mid X]$
  4. Markov Chains: Sequence of RVs, $P[X_{n+1} = x_{n+1} \mid X_n = x_n, X_{n-1} = x_{n-1}, X_{n-2} = x_{n-2}, \ldots] = P[X_{n+1} = x_{n+1} \mid X_n = x_n]$, Balance Equations.
◮ Quantifying our degree of certainty. This clear thinking invites us to question vague statements, and to convert them into precise ideas.

Random Thoughts

Famous Quotes: French mathematician Pierre-Simon Laplace (Translated from French):
"The theory of probabilities is basically just common sense reduced to calculus."

Famous Quotes: Attributed by Mark Twain to British Prime Minister Benjamin Disraeli:
"There are three kinds of lies: lies, damned lies, and statistics."

Confusing Statistics: Simpson's Paradox

The numbers are applications and admissions of males and females to the two colleges of a university.

Overall, the admission rate of male students is 80% whereas it is only 51% for female students.

A closer look shows that the admission rate is larger for female students in both colleges...

Female students happen to apply more to the college that admits fewer students.

More on Confusing Statistics

Statistics are often confusing:
◮ The average household annual income in the US is $72k. Yes, but the median is $52k.
◮ The false alarm rate for prostate cancer is only 1%. Great, but only 1 person in 8,000 has that cancer. So, there are 80 false alarms for each actual case.
◮ The Texas sharpshooter fallacy. Look at people living close to power lines. You find clusters of cancers. You will also find such clusters when looking at people eating kale.
◮ False causation: "Vaccines cause autism." Both vaccination and autism rates increased...
◮ Beware of statistics reported in the media!

Confirmation Bias

Confirmation bias is the tendency to search for, interpret, and recall information in a way that confirms one's beliefs or hypotheses, while giving disproportionately less consideration to alternative possibilities.

Confirmation biases contribute to overconfidence in personal beliefs and can maintain or strengthen beliefs in the face of contrary evidence.

Three aspects:
◮ Biased search for information. E.g., ignoring articles that dispute your beliefs.
◮ Biased interpretation. E.g., putting more weight on confirmation than on contrary evidence.
◮ Biased memory. E.g., remembering facts that confirm your beliefs and forgetting others.
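The "80 false alarms for each actual case" claim is a base-rate calculation: out of 8,000 people, about 7,999 are healthy, and 1% of them trigger an alarm. Below is a minimal Python sketch of that arithmetic and of the matching Bayes' Theorem computation. It assumes, since the slide does not say, that every actual case triggers an alarm; the function names are hypothetical.

```python
from fractions import Fraction


def false_alarms_per_case(false_alarm_rate, prevalence):
    """Expected false alarms per actual case:
    (healthy fraction * false alarm rate) / (fraction with the disease)."""
    return false_alarm_rate * (1 - prevalence) / prevalence


def prob_disease_given_alarm(false_alarm_rate, prevalence, detection_rate=Fraction(1)):
    """Bayes' Theorem: Pr[disease | alarm].
    detection_rate = Pr[alarm | disease] is an assumption (not given in the slide);
    the default 1 means every actual case triggers an alarm."""
    true_alarms = detection_rate * prevalence
    false_alarms = false_alarm_rate * (1 - prevalence)
    return true_alarms / (true_alarms + false_alarms)


rate, prevalence = Fraction(1, 100), Fraction(1, 8000)  # numbers from the slide
print(float(false_alarms_per_case(rate, prevalence)))   # 79.99
print(prob_disease_given_alarm(rate, prevalence))       # 100/8099
```

So roughly 80 false alarms accompany each actual case, and under the stated assumption only about 1 alarm in 81 corresponds to someone who has the cancer.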
