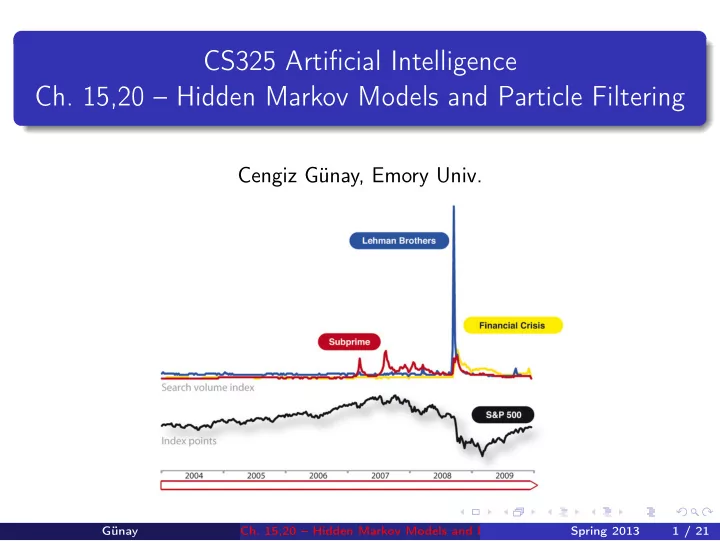

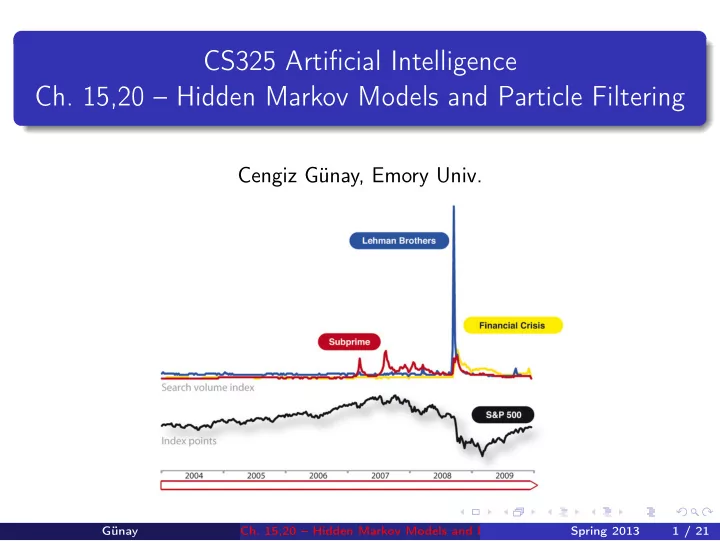

CS325 Artificial Intelligence

Ch. 15,20 – Hidden Markov Models and Particle Filtering

Cengiz Günay, Emory Univ.

Spring 2013

Get Rich Fast!

Or go bankrupt?

So, how can we predict time-series data?

Hidden Markov Models

Entry/Exit Surveys

Exit survey: Reinforcement Learning

- What’s the difference between MDPs and Reinforcement Learning?
- What is the dilemma between exploration and exploitation?

Entry survey: Hidden Markov Models (0.25 points of final grade)

- What previous algorithm would you use for time series prediction?
- What time series do you wish you could predict?

Time Series Prediction?

Have we done this before?

- Belief states with action schemas?
  - Not for continuous variables
  - Goal-based
- MDPs and RL?
  - Goal-based
  - No time sequence

Time Series Prediction with Hidden Markov Models (HMMs)

Dr. Thrun is very happy – HMMs are his specialty.

HMMs:

- analyze & predict time series data
- can deal with noisy sensors

Example domains:

- finance (get rich fast!)
- robotics
- medical
- speech and language

Alternatives:

- Recurrent neural networks (not probabilistic)

What are HMMs?

Markov chain:

$$\begin{array}{lccccccc}
\text{Hidden states:} & S_1 & \to & S_2 & \to & \cdots & \to & S_n \\
 & \downarrow & & & & & & \downarrow \\
\text{Measurements:} & Z_1 & & & & \cdots & & Z_n
\end{array}$$

It’s essentially a Bayes Net!

Implementations:

- Kalman Filter (see Ch. 15)
- Particle Filter
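
Reading the chain as a Bayes net, the joint distribution factorizes along its arrows. The expression below is the standard HMM factorization, included as a sketch; it does not appear on the slide, and it assumes every hidden state $S_t$ emits one measurement $Z_t$:

$$P(S_1, \dots, S_n, Z_1, \dots, Z_n) = P(S_1) \prod_{t=2}^{n} P(S_t \mid S_{t-1}) \prod_{t=1}^{n} P(Z_t \mid S_t)$$

Each hidden state depends only on its predecessor, and each measurement depends only on the hidden state at the same time step.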

Video: Lost Robots, Speech Recognition

Future Prediction with Markov Chains

Is tomorrow going to be Rainy or Sunny?

State diagram (R = rainy, S = sunny): $P(S \mid R) = 0.4$, $P(R \mid R) = 0.6$, $P(S \mid S) = 0.8$, $P(R \mid S) = 0.2$

Start with “today is rainy”: $P(R_0) = 1$, then $P(S_0) = 0$

What’s

- $P(S_1) = 0.4$
- $P(S_2) = 0.56$
- $P(S_3) = 0.624$

$P(S_{t+1}) = 0.4 \times P(R_t) + 0.8 \times P(S_t)$
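
The update rule above can be iterated directly. The following is a minimal Python sketch, not code from the lecture; the function name `predict_sunny` and its parameter names are illustrative assumptions, and the defaults are the two transition probabilities used in the rule.

```python
def predict_sunny(p_sunny_today, steps, p_s_given_r=0.4, p_s_given_s=0.8):
    """Return [P(S_1), ..., P(S_steps)] for the two-state weather chain.

    Hypothetical helper: the name and signature are assumptions made
    for this example, not part of the lecture material.
    """
    history = []
    p_sunny = p_sunny_today
    for _ in range(steps):
        p_rainy = 1.0 - p_sunny
        # P(S_{t+1}) = P(S|R) * P(R_t) + P(S|S) * P(S_t)
        p_sunny = p_s_given_r * p_rainy + p_s_given_s * p_sunny
        history.append(p_sunny)
    return history


# "Today is rainy": P(R_0) = 1, so P(S_0) = 0.
forecast = predict_sunny(0.0, 3)
for day, p in enumerate(forecast, start=1):
    print(f"P(S_{day}) = {p:.3f}")
```

Run with `P(S_0) = 0`, the three printed values match the slide: 0.400, 0.560, 0.624.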

Back to the Future?

How far can we see into the future? $P(A_\infty) = ?$

- Until it reaches a stationary state (or limit cycle)
- Use calculus: $\lim_{t \to \infty} P(A_{t+1}) = P(A_t)$

State diagram (R = rainy, S = sunny): $P(S \mid R) = 0.4$, $P(R \mid R) = 0.6$, $P(S \mid S) = 0.8$, $P(R \mid S) = 0.2$

$P(S_\infty) = 2/3$

$\lim_{t \to \infty} P(S_{t+1}) = 0.4 \times P(R_t) + 0.8 \times P(S_t)$; substitute $x = P(S_{t+1}) = P(S_t) = 1 - P(R_t) = \cdots$
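
The substitution can be carried through in a few lines. This is a worked sketch of the step the slide leaves elided, using only the update rule and the condition $P(S_{t+1}) = P(S_t) = x$, so that $P(R_t) = 1 - x$:

$$\begin{aligned}
x &= 0.4\,(1 - x) + 0.8\,x \\
x &= 0.4 + 0.4\,x \\
0.6\,x &= 0.4 \\
x &= \tfrac{2}{3}
\end{aligned}$$

So $P(S_\infty) = 2/3$ and $P(R_\infty) = 1/3$, whatever the starting distribution.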

And How Do We Get The Transition Probabilities?

State diagram: R and S, with all four transition probabilities unknown (?)

Observed sequence in Atlanta: RRSRRRSR

Use Maximum Likelihood:

$P(S \mid S) = \dfrac{\text{observed transitions}}{\text{total transitions from } S} = \dfrac{0}{2}$

$P(R \mid S) = 2/2$, $P(S \mid R) = 2/5$, $P(R \mid R) = 3/5$

Edge effects? $P(S \mid S) = 0$?
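
The maximum-likelihood counts above can be reproduced by tallying adjacent pairs in the observed sequence. This is a minimal Python sketch, not code from the lecture; the function name `estimate_transitions` is a hypothetical helper introduced for illustration.

```python
from collections import Counter


def estimate_transitions(sequence):
    """Maximum-likelihood estimates of P(next | current) from one sequence.

    Hypothetical helper for illustration. Returns a dict keyed by
    (current, next) state pairs.
    """
    # Count each adjacent pair, e.g. ("R", "S") for a rainy -> sunny step.
    pair_counts = Counter(zip(sequence, sequence[1:]))
    # The last symbol has no outgoing transition, so it is excluded here.
    from_counts = Counter(sequence[:-1])
    states = sorted(set(sequence))
    return {
        (cur, nxt): pair_counts[(cur, nxt)] / from_counts[cur]
        for cur in states
        for nxt in states
        if from_counts[cur] > 0
    }


probs = estimate_transitions("RRSRRRSR")
for (cur, nxt), p in sorted(probs.items()):
    print(f"P({nxt}|{cur}) = {p:.2f}")
```

For the sequence RRSRRRSR this gives 5 transitions out of R (3 to R, 2 to S) and 2 out of S (both to R), so the estimate for $P(S \mid S)$ is exactly 0, which is the problem the slide points out.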

Overcoming Overfitting: Remember Laplacian Smoothing?

Observed sequence in Atlanta: RRSRRRSR

Laplacian smoothing, $K = 1$:

$P(S \mid S) = \dfrac{\text{observed transitions}}{\text{total transitions from } S}$
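
As a sketch of how smoothing changes the estimates, the Python below applies the usual add-$K$ form of Laplacian smoothing, (count + K) / (total + K × number of states), to the same sequence. The slide does not show the smoothed formula or its results at this point, so both the formula and the helper name `smoothed_transitions` are assumptions made for this example.

```python
from collections import Counter
from fractions import Fraction


def smoothed_transitions(sequence, k=1):
    """Add-k (Laplacian) smoothed estimates of P(next | current).

    Assumed formula: (count + k) / (total_from_current + k * num_states).
    Hypothetical helper for illustration only.
    """
    pair_counts = Counter(zip(sequence, sequence[1:]))
    from_counts = Counter(sequence[:-1])  # last symbol has no outgoing step
    states = sorted(set(sequence))
    return {
        (cur, nxt): Fraction(
            pair_counts[(cur, nxt)] + k,
            from_counts[cur] + k * len(states),
        )
        for cur in states
        for nxt in states
    }


smoothed = smoothed_transitions("RRSRRRSR", k=1)
for (cur, nxt), p in sorted(smoothed.items()):
    print(f"P({nxt}|{cur}) = {p}")
```

Under this assumed formula with $K = 1$ and two states, $P(S \mid S)$ becomes $(0 + 1)/(2 + 2) = 1/4$ instead of 0, so a transition that was never observed is no longer treated as impossible.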
