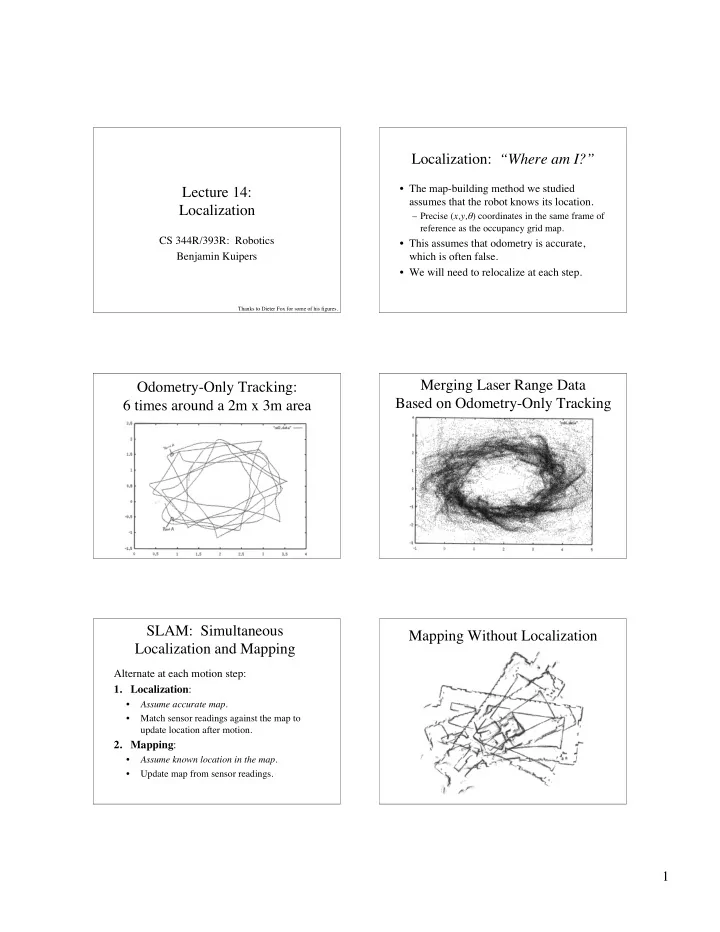

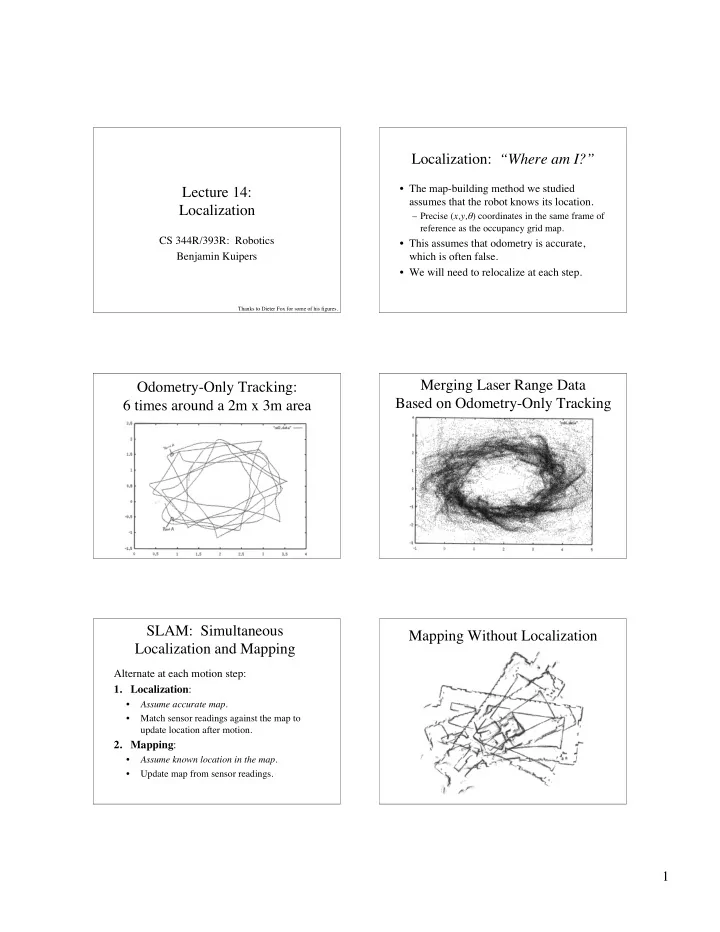

Lecture 14: Localization

CS 344R/393R: Robotics

Benjamin Kuipers

Thanks to Dieter Fox for some of his figures.

Localization: “Where am I?”

• The map-building method we studied assumes that the robot knows its location.
  – Precise $(x, y, \theta)$ coordinates in the same frame of reference as the occupancy grid map.
• This assumes that odometry is accurate, which is often false.
• We will need to relocalize at each step.

[Figure: Odometry-Only Tracking: 6 times around a 2m x 3m area]

[Figure: Merging Laser Range Data Based on Odometry-Only Tracking]

[Figure: Mapping Without Localization]

SLAM: Simultaneous Localization and Mapping

Alternate at each motion step:

1. Localization:
  • Assume accurate map.
  • Match sensor readings against the map to update location after motion.
2. Mapping:
  • Assume known location in the map.
  • Update map from sensor readings.

[Figure: Mapping With Localization]

Modeling Action and Sensing

[Figure: dynamic Bayesian network over states $X_{t-1}, X_t, X_{t+1}$, actions $u_{t-1}, u_t$, and observations $Z_{t-1}, Z_t, Z_{t+1}$]

• Action model: $P(x_t \mid x_{t-1}, u_{t-1})$
• Sensor model: $P(z_t \mid x_t)$
• What we want to know is Belief:

$$Bel(x_t) = P(x_t \mid u_1, z_2, \ldots, u_{t-1}, z_t)$$

  the posterior probability distribution of $x_t$, given the past history of actions and sensor inputs.

Dynamic Bayesian Network

• The well-known DBN for local SLAM.

[Figure: the same dynamic Bayesian network over $X$, $u$, and $Z$]

The Markov Assumption

• Given the present, the future is independent of the past.
• Given the state $x_t$, the observation $z_t$ is independent of the past.

$$P(z_t \mid x_t) = P(z_t \mid x_t, u_1, z_2, \ldots, u_{t-1})$$

Law of Total Probability (marginalizing)

Discrete case:

$$\sum_y P(y) = 1, \qquad P(x) = \sum_y P(x, y), \qquad P(x) = \sum_y P(x \mid y)\, P(y)$$

Continuous case:

$$\int p(y)\, dy = 1, \qquad p(x) = \int p(x, y)\, dy, \qquad p(x) = \int p(x \mid y)\, p(y)\, dy$$

Bayes Law

• We can treat the denominator in Bayes Law as a normalizing constant:

$$P(x \mid y) = \frac{P(y \mid x)\, P(x)}{P(y)} = \eta\, P(y \mid x)\, P(x), \qquad \eta = P(y)^{-1} = \frac{1}{\sum_x P(y \mid x)\, P(x)}$$

• We will apply it in the following form:

$$Bel(x_t) = P(x_t \mid u_1, z_2, \ldots, u_{t-1}, z_t) = \eta\, P(z_t \mid x_t, u_1, z_2, \ldots, u_{t-1})\, P(x_t \mid u_1, z_2, \ldots, u_{t-1})$$
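Bayes Law with the denominator treated as a normalizing constant can be sketched for a discrete state as follows. The function name `bayes_update` and the two-state door/wall numbers are hypothetical, chosen only to show how $\eta$ is computed from the law of total probability.

```python
def bayes_update(prior, likelihood):
    """Return the posterior P(x | y) = eta * P(y | x) * P(x) over discrete x.

    prior[i] is P(x = i); likelihood[i] is P(y | x = i) for the observed y.
    """
    unnormalized = [l * p for l, p in zip(likelihood, prior)]
    # By the law of total probability, this sum is P(y).
    p_y = sum(unnormalized)
    if p_y == 0.0:
        raise ValueError("observation has zero probability under the prior")
    eta = 1.0 / p_y
    return [eta * u for u in unnormalized]

# Hypothetical example: the robot is either in front of a door or a wall.
prior = [0.5, 0.5]        # P(door), P(wall)
likelihood = [0.6, 0.2]   # P(sensor reports "door" | door), P(... | wall)
posterior = bayes_update(prior, likelihood)
print(posterior)
```

Note that $P(y)$ never has to be modeled separately: it falls out of summing the unnormalized products over all states.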

Bayes Filter

$$
\begin{aligned}
Bel(x_t) &= P(x_t \mid u_1, z_2, \ldots, u_{t-1}, z_t) \\
&= \eta\, P(z_t \mid x_t, u_1, z_2, \ldots, u_{t-1})\, P(x_t \mid u_1, z_2, \ldots, u_{t-1}) && \text{(Bayes)} \\
&= \eta\, P(z_t \mid x_t)\, P(x_t \mid u_1, z_2, \ldots, u_{t-1}) && \text{(Markov)} \\
&= \eta\, P(z_t \mid x_t) \int P(x_t \mid u_1, z_2, \ldots, u_{t-1}, x_{t-1})\, P(x_{t-1} \mid u_1, z_2, \ldots, u_{t-1})\, dx_{t-1} && \text{(Total prob.)} \\
&= \eta\, P(z_t \mid x_t) \int P(x_t \mid u_{t-1}, x_{t-1})\, P(x_{t-1} \mid u_1, z_2, \ldots, u_{t-1})\, dx_{t-1} && \text{(Markov)} \\
&= \eta\, P(z_t \mid x_t) \int P(x_t \mid u_{t-1}, x_{t-1})\, Bel(x_{t-1})\, dx_{t-1}
\end{aligned}
$$

Markov Localization

$$Bel(x_t) = \eta\, P(z_t \mid x_t) \int P(x_t \mid u_{t-1}, x_{t-1})\, Bel(x_{t-1})\, dx_{t-1}$$

• $Bel(x_{t-1})$ and $Bel(x_t)$ are prior and posterior probabilities of location $x$.
• $P(x_t \mid u_{t-1}, x_{t-1})$ is the action model, giving the probability distribution over the result of $u_{t-1}$ at $x_{t-1}$.
• $P(z_t \mid x_t)$ is the sensor model, giving the probability distribution over sense images $z_t$ at $x_t$.
• $\eta$ is a normalization constant, ensuring that total probability mass over $x_t$ is 1.

Markov Localization

• Evaluate $Bel(x_t)$ for every possible state $x_t$.
• Prediction phase:

$$Bel^{-}(x_t) = \int P(x_t \mid u_{t-1}, x_{t-1})\, Bel(x_{t-1})\, dx_{t-1}$$

  – Integrate over every possible state $x_{t-1}$ to apply the probability that action $u_{t-1}$ could reach $x_t$ from there.
• Correction phase:

$$Bel(x_t) = \eta\, P(z_t \mid x_t)\, Bel^{-}(x_t)$$

  – Weight each state $x_t$ with likelihood of observation $z_t$.

[Figure: Uniform prior probability $Bel^{-}(x_0)$]

[Figure: Sensor information $P(z_0 \mid x_0)$: $Bel(x_0) = \eta\, P(z_0 \mid x_0)\, Bel^{-}(x_0)$]

[Figure: Apply the action model $P(x_1 \mid u_0, x_0)$: $Bel^{-}(x_1) = \int P(x_1 \mid u_0, x_0)\, Bel(x_0)\, dx_0$]
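The prediction and correction phases can be sketched on a discretized state space, where the integral over $x_{t-1}$ becomes a sum over grid cells. Everything specific here is an assumption for illustration: the 10-cell circular corridor, the door positions, the 80%-accurate door sensor, and the "move one cell right" action model expressed as probabilities over cell offsets.

```python
def predict(belief, action_model):
    """Prediction: Bel^-(x_t) = sum over x_{t-1} of P(x_t | u, x_{t-1}) Bel(x_{t-1}).

    action_model maps a cell offset to its probability for the action taken.
    The corridor is treated as circular, so indices wrap around.
    """
    n = len(belief)
    predicted = [0.0] * n
    for x_prev, b in enumerate(belief):
        for offset, p in action_model.items():
            predicted[(x_prev + offset) % n] += p * b
    return predicted

def correct(predicted, likelihood):
    """Correction: Bel(x_t) = eta * P(z_t | x_t) * Bel^-(x_t)."""
    unnormalized = [l * b for l, b in zip(likelihood, predicted)]
    eta = 1.0 / sum(unnormalized)
    return [eta * u for u in unnormalized]

# Hypothetical map: 1 marks a cell with a door.
doors = [1, 1, 0, 0, 0, 1, 0, 0, 0, 0]

def door_likelihood(sees_door):
    """Hypothetical sensor model P(z | x): the door detector is right 80% of the time."""
    return [0.8 if bool(d) == sees_door else 0.2 for d in doors]

# Hypothetical action model for "move one cell right": may undershoot or overshoot.
move_right = {0: 0.1, 1: 0.8, 2: 0.1}

belief = [1.0 / len(doors)] * len(doors)          # uniform prior Bel^-(x_0)
belief = correct(belief, door_likelihood(True))   # observe z_0 = "door"
belief = predict(belief, move_right)              # apply action u_0
belief = correct(belief, door_likelihood(True))   # observe z_1 = "door"

best = max(range(len(belief)), key=belief.__getitem__)
print("most likely cell:", best)
print([round(b, 3) for b in belief])
```

Seeing a door, moving one cell, and seeing a door again is best explained by starting at cell 0 and arriving at cell 1, so the belief concentrates there while keeping smaller mass at the other door cells. The cost of each prediction step grows with the number of cells times the number of offsets, which is one reason grid-based Markov localization becomes expensive on fine grids.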

[Figure: Combine with observation $P(z_1 \mid x_1)$: $Bel(x_1) = \eta\, P(z_1 \mid x_1)\, Bel^{-}(x_1)$]

[Figure: Action model again, $P(x_2 \mid u_1, x_1)$: $Bel^{-}(x_2) = \int P(x_2 \mid u_1, x_1)\, Bel(x_1)\, dx_1$]

Local and Global Localization

• Most localization is local:
  – Incrementally correct belief in position after each action.
• Global localization is more dramatic.
  – Where in the entire environment am I?
• The “kidnapped robot problem”
  – Includes detecting that I am lost.

[Figure: Initial belief $Bel(x_0)$]

[Figure: Intermediate belief $Bel(x_t)$]

[Figure: Final belief $Bel(x_t)$]

[Movie: Global Localization]

Future Attractions

• Sensor and action models
• Particle filtering
  – elegant, simple algorithm
  – Monte Carlo simulation
