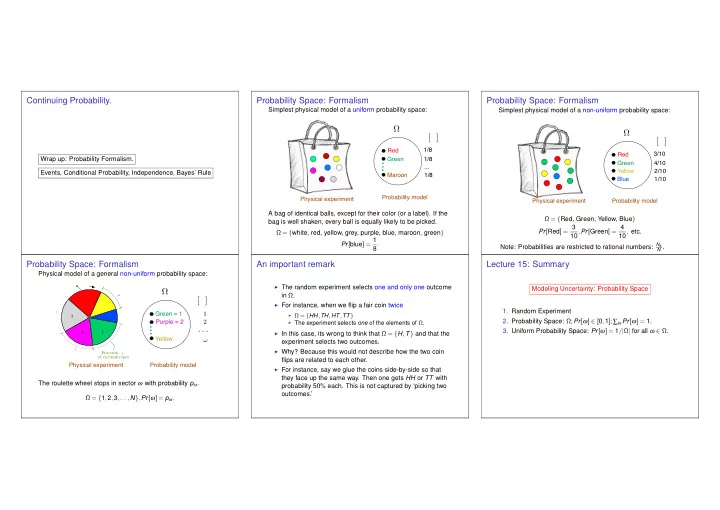

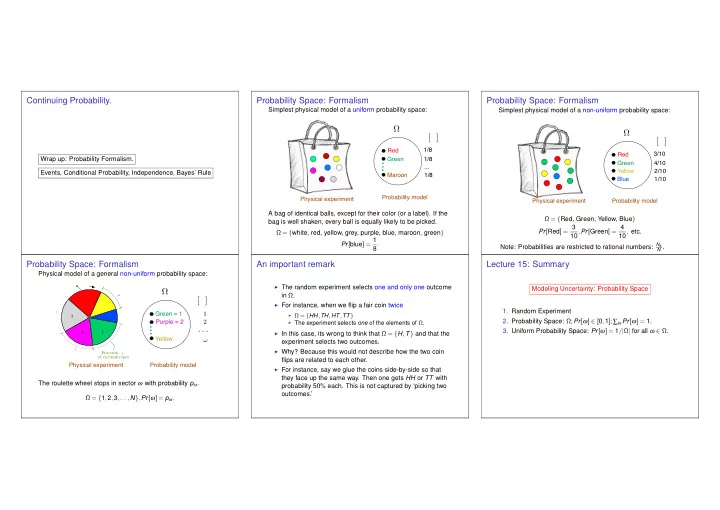

Continuing Probability.

Wrap up: Probability Formalism.
Events, Conditional Probability, Independence, Bayes' Rule

Probability Space: Formalism

Simplest physical model of a uniform probability space: a bag of identical balls, except for their color (or a label). If the bag is well shaken, every ball is equally likely to be picked.

[Figure: physical experiment (a bag of balls) next to its probability model, a table over $\Omega$ in which every color (Red, Green, ..., Maroon) has $\Pr[\omega] = 1/8$.]

$\Omega = \{\text{white, red, yellow, grey, purple, blue, maroon, green}\}$, $\Pr[\text{blue}] = \frac{1}{8}$.

Probability Space: Formalism

Simplest physical model of a non-uniform probability space:

| $\omega$ | $\Pr[\omega]$ |
|---|---|
| Red | 3/10 |
| Green | 4/10 |
| Yellow | 2/10 |
| Blue | 1/10 |

$\Omega = \{\text{Red, Green, Yellow, Blue}\}$, $\Pr[\text{Red}] = \frac{3}{10}$, $\Pr[\text{Green}] = \frac{4}{10}$, etc.

Note: Probabilities are restricted to rational numbers: $\frac{N_k}{N}$.

Probability Space: Formalism

Physical model of a general non-uniform probability space:

[Figure: physical experiment (a roulette wheel in which sector $\omega$ covers a fraction $p_\omega$ of the circumference) next to its probability model, a table over $\Omega$ listing Green = 1, Purple = 2, ..., Yellow with probabilities $p_1, p_2, \dots, p_\omega$.]

The roulette wheel stops in sector $\omega$ with probability $p_\omega$.

$\Omega = \{1, 2, 3, \dots, N\}$, $\Pr[\omega] = p_\omega$.

An important remark

◮ The random experiment selects one and only one outcome in $\Omega$.
◮ For instance, when we flip a fair coin twice
  ◮ $\Omega = \{HH, TH, HT, TT\}$
  ◮ The experiment selects one of the elements of $\Omega$.
◮ In this case, it's wrong to think that $\Omega = \{H, T\}$ and that the experiment selects two outcomes.
◮ Why? Because this would not describe how the two coin flips are related to each other.
◮ For instance, say we glue the coins side-by-side so that they face up the same way. Then one gets $HH$ or $TT$ with probability 50% each. This is not captured by 'picking two outcomes.'

Lecture 15: Summary

Modeling Uncertainty: Probability Space

1. Random Experiment
2. Probability Space: $\Omega$; $\Pr[\omega] \in [0, 1]$; $\sum_{\omega} \Pr[\omega] = 1$.
3. Uniform Probability Space: $\Pr[\omega] = 1/|\Omega|$ for all $\omega \in \Omega$.
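The two conditions on a probability space, and the glued-coins remark, can be checked mechanically. The following is a minimal sketch, assuming a probability space is represented as a Python dictionary from outcomes to exact fractions; the names `is_probability_space`, `fair`, and `glued` are illustrative and do not come from the slides.

```python
from fractions import Fraction

def is_probability_space(pr):
    """Check 0 <= Pr[w] <= 1 for every outcome and that the values sum to 1."""
    return all(0 <= p <= 1 for p in pr.values()) and sum(pr.values()) == 1

# Two fair coin flips: a uniform space over four outcomes.
fair = {w: Fraction(1, 4) for w in ("HH", "HT", "TH", "TT")}

# Glued coins: the same sample space, but only HH and TT can occur.
glued = {"HH": Fraction(1, 2), "HT": Fraction(0),
         "TH": Fraction(0), "TT": Fraction(1, 2)}

print(is_probability_space(fair), is_probability_space(glued))
```

Both models share the sample space {HH, HT, TH, TT} and differ only in the probabilities assigned to its outcomes, which is the information that "two picks from {H, T}" fails to record.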

CS70: On to Calculation.

Events, Conditional Probability, Independence, Bayes' Rule

1. Probability Basics Review
2. Events
3. Conditional Probability
4. Independence of Events
5. Bayes' Rule

Probability Basics Review

Setup:

◮ Random Experiment. Flip a fair coin twice.
◮ Probability Space.
  ◮ Sample Space: Set of outcomes, $\Omega$. $\Omega = \{HH, HT, TH, TT\}$ (Note: Not $\Omega = \{H, T\}$ with two picks!)
  ◮ Probability: $\Pr[\omega]$ for all $\omega \in \Omega$. $\Pr[HH] = \cdots = \Pr[TT] = 1/4$
    1. $0 \le \Pr[\omega] \le 1$.
    2. $\sum_{\omega \in \Omega} \Pr[\omega] = 1$.

Set notation review

[Figures: Venn diagrams in $\Omega$ showing two events $A$ and $B$; union (or) $A \cup B$; difference ($A$, not $B$) $A \setminus B$; complement (not) $\bar{A}$; intersection (and) $A \cap B$; symmetric difference (only one) $A \Delta B$.]

Probability of exactly one 'heads' in two coin flips?

Idea: Sum the probabilities of all the different outcomes that have exactly one 'heads': $HT$, $TH$.

This leads to a definition!

Definition:

◮ An event, $E$, is a subset of outcomes: $E \subset \Omega$.
◮ The probability of $E$ is defined as $\Pr[E] = \sum_{\omega \in E} \Pr[\omega]$.

Event: Example

| $\omega$ | $\Pr[\omega]$ |
|---|---|
| Red | 3/10 |
| Green | 4/10 |
| Yellow | 2/10 |
| Blue | 1/10 |

$\Omega = \{\text{Red, Green, Yellow, Blue}\}$, $\Pr[\text{Red}] = \frac{3}{10}$, $\Pr[\text{Green}] = \frac{4}{10}$, etc.

$E = \{\text{Red}, \text{Green}\} \Rightarrow \Pr[E] = \frac{3 + 4}{10} = \frac{3}{10} + \frac{4}{10} = \Pr[\text{Red}] + \Pr[\text{Green}]$.

Probability of exactly one heads in two coin flips?

Sample Space, $\Omega = \{HH, HT, TH, TT\}$.

Uniform probability space: $\Pr[HH] = \Pr[HT] = \Pr[TH] = \Pr[TT] = \frac{1}{4}$.

Event, $E$, "exactly one heads": $\{TH, HT\}$.

$\Pr[E] = \sum_{\omega \in E} \Pr[\omega] = \frac{|E|}{|\Omega|} = \frac{2}{4} = \frac{1}{2}$.
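The definition of the probability of an event as a sum over its outcomes translates directly into code. This is a small sketch under the assumption that a probability space is stored as a dictionary and an event as a set of outcomes; `pr_event`, `coins`, and `balls` are hypothetical names chosen for illustration.

```python
from fractions import Fraction

def pr_event(pr, event):
    """Pr[E]: sum of Pr[w] over the outcomes w in the event E."""
    return sum((pr[w] for w in event), Fraction(0))

# Uniform space: two fair coin flips.
coins = {w: Fraction(1, 4) for w in ("HH", "HT", "TH", "TT")}
exactly_one_heads = {w for w in coins if w.count("H") == 1}

# Non-uniform space: the colored-balls table.
balls = {"Red": Fraction(3, 10), "Green": Fraction(4, 10),
         "Yellow": Fraction(2, 10), "Blue": Fraction(1, 10)}

print(pr_event(coins, exactly_one_heads))   # probability of exactly one heads
print(pr_event(balls, {"Red", "Green"}))    # probability of Red or Green
```

In the uniform case the sum reduces to counting, |E| / |Ω|, while the same function handles the non-uniform table without change.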

Example: 20 coin tosses.

20 coin tosses

Sample space: $\Omega$ = set of 20 fair coin tosses.

$\Omega = \{T, H\}^{20} \equiv \{0, 1\}^{20}$; $|\Omega| = 2^{20}$.

◮ What is more likely?
  ◮ $\omega_1 := (1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1)$, or
  ◮ $\omega_2 := (1, 0, 1, 1, 0, 0, 0, 1, 0, 1, 0, 1, 1, 0, 1, 1, 1, 0, 0, 0)$?

  Answer: Both are equally likely: $\Pr[\omega_1] = \Pr[\omega_2] = \frac{1}{|\Omega|}$.

◮ What is more likely?
  ($E_1$) Twenty Hs out of twenty, or
  ($E_2$) Ten Hs out of twenty?

  Answer: Ten Hs out of twenty.

  Why? There are many sequences of 20 tosses with ten Hs; only one with twenty Hs. $\Rightarrow \Pr[E_1] = \frac{1}{|\Omega|} \ll \Pr[E_2] = \frac{|E_2|}{|\Omega|}$.

  $|E_2| = \binom{20}{10} = 184{,}756$.

Probability of n heads in 100 coin tosses.

$\Omega = \{H, T\}^{100}$; $|\Omega| = 2^{100}$.

Event $E_n$ = '$n$ heads'; $|E_n| = \binom{100}{n}$

$p_n := \Pr[E_n] = \frac{|E_n|}{|\Omega|} = \frac{\binom{100}{n}}{2^{100}}$

[Figure: plot of $p_n$ against $n$.]

Observe:

◮ Concentration around mean: Law of Large Numbers;
◮ Bell-shape: Central Limit Theorem.

Roll a red and a blue die.

Exactly 50 heads in 100 coin tosses.

Sample space: $\Omega$ = set of 100 coin tosses = $\{H, T\}^{100}$.

$|\Omega| = 2 \times 2 \times \cdots \times 2 = 2^{100}$.

Uniform probability space: $\Pr[\omega] = \frac{1}{2^{100}}$.

Event $E$ = "100 coin tosses with exactly 50 heads"

$|E|$? Choose 50 positions out of 100 to be heads.

$|E| = \binom{100}{50}$.

$\Pr[E] = \frac{\binom{100}{50}}{2^{100}}$.

Exactly 50 heads in 100 coin tosses.

Calculation.

Stirling formula (for large $n$):

$$n! \approx \sqrt{2\pi n}\left(\frac{n}{e}\right)^n.$$

$$\binom{2n}{n} \approx \frac{\sqrt{4\pi n}\,(2n/e)^{2n}}{\left[\sqrt{2\pi n}\,(n/e)^n\right]^2} \approx \frac{4^n}{\sqrt{\pi n}}.$$

With $n = 50$:

$$\Pr[E] = \frac{|E|}{|\Omega|} = \frac{|E|}{2^{2n}} \approx \frac{1}{\sqrt{\pi n}} = \frac{1}{\sqrt{50\pi}} \approx 0.08.$$
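The counts and the Stirling estimate can be compared against exact values. The sketch below uses only the standard library (`math.comb`, available in Python 3.8 and later); the helper name `p_heads` is an illustrative choice, not notation from the lecture.

```python
from math import comb, pi, sqrt

def p_heads(n_heads, n_tosses):
    """Pr[exactly n_heads heads in n_tosses fair tosses] = C(n, k) / 2^n."""
    return comb(n_tosses, n_heads) / 2 ** n_tosses

# Number of 20-toss sequences with exactly ten heads.
ten_of_twenty = comb(20, 10)

# Exactly 50 heads in 100 tosses: exact value versus the Stirling estimate.
exact = p_heads(50, 100)
stirling = 1 / sqrt(50 * pi)

print(ten_of_twenty)
print(f"exact = {exact:.4f}, Stirling estimate = {stirling:.4f}")
```

Both values round to 0.08, which suggests that the approximation is already quite accurate at n = 50.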

Probability is Additive

Theorem

(a) If events $A$ and $B$ are disjoint, i.e., $A \cap B = \emptyset$, then
$\Pr[A \cup B] = \Pr[A] + \Pr[B]$.

(b) If events $A_1, \dots, A_n$ are pairwise disjoint, i.e., $A_k \cap A_m = \emptyset$, $\forall k \ne m$, then
$\Pr[A_1 \cup \cdots \cup A_n] = \Pr[A_1] + \cdots + \Pr[A_n]$.

Proof: Obvious.

Consequences of Additivity

Theorem

(a) $\Pr[A \cup B] = \Pr[A] + \Pr[B] - \Pr[A \cap B]$; (inclusion-exclusion property)

(b) $\Pr[A_1 \cup \cdots \cup A_n] \le \Pr[A_1] + \cdots + \Pr[A_n]$; (union bound)

(c) If $A_1, \dots, A_N$ are a partition of $\Omega$, i.e., pairwise disjoint and $\cup_{m=1}^{N} A_m = \Omega$, then
$\Pr[B] = \Pr[B \cap A_1] + \cdots + \Pr[B \cap A_N]$. (law of total probability)

Proof: (b) is obvious. Proofs for (a) and (c)? Next...

Inclusion/Exclusion

$\Pr[A \cup B] = \Pr[A] + \Pr[B] - \Pr[A \cap B]$

Another view. Any $\omega \in A \cup B$ is in exactly one of $A \cap \bar{B}$, $A \cap B$, or $\bar{A} \cap B$. So, add it up.

Total probability

Assume that $\Omega$ is the union of the disjoint sets $A_1, \dots, A_N$.

Then, $\Pr[B] = \Pr[A_1 \cap B] + \cdots + \Pr[A_N \cap B]$.

Indeed, $B$ is the union of the disjoint sets $A_n \cap B$ for $n = 1, \dots, N$.

In "math": $\omega \in B$ is in exactly one of $A_i \cap B$. Adding up probability of them, get $\Pr[\omega]$ in sum.

..Did I say... Add it up.

Roll a Red and a Blue Die.

$E_1$ = 'Red die shows 6'; $E_2$ = 'Blue die shows 6'

$E_1 \cup E_2$ = 'At least one die shows 6'

$\Pr[E_1] = \frac{6}{36}$, $\Pr[E_2] = \frac{6}{36}$, $\Pr[E_1 \cup E_2] = \frac{11}{36}$.

Conditional probability: example.

Two coin flips. First flip is heads. Probability of two heads?

$\Omega = \{HH, HT, TH, TT\}$; Uniform probability space.

Event $A$ = first flip is heads: $A = \{HH, HT\}$.

New sample space: $A$; uniform still.

Event $B$ = two heads.

The probability of two heads if the first flip is heads.

The probability of $B$ given $A$ is $1/2$.
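Inclusion-exclusion and the law of total probability can both be confirmed on the two-dice example by brute-force enumeration. This is a sketch that assumes a uniform space of ordered pairs (red, blue); the names `omega`, `pr`, `e1`, `e2`, and `partition` are illustrative.

```python
from fractions import Fraction
from itertools import product

# Uniform sample space: all 36 ordered pairs (red, blue).
omega = set(product(range(1, 7), repeat=2))

def pr(event):
    """Probability of an event in the uniform space: |E| / |Omega|."""
    return Fraction(len(event), len(omega))

e1 = {w for w in omega if w[0] == 6}   # red die shows 6
e2 = {w for w in omega if w[1] == 6}   # blue die shows 6

# Inclusion-exclusion: Pr[E1 or E2] = Pr[E1] + Pr[E2] - Pr[E1 and E2].
union_direct = pr(e1 | e2)
union_by_formula = pr(e1) + pr(e2) - pr(e1 & e2)

# Total probability: partition Omega by the value of the red die.
partition = [{w for w in omega if w[0] == k} for k in range(1, 7)]
total = sum((pr(e2 & a_k) for a_k in partition), Fraction(0))

print(union_direct, union_by_formula, total)
```

The subtraction of Pr[E1 ∩ E2] accounts for the single outcome (6, 6) that would otherwise be counted twice, which is why the union has probability 11/36 rather than 12/36.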

A similar example.

Two coin flips. At least one of the flips is heads. $\rightarrow$ Probability of two heads?

$\Omega = \{HH, HT, TH, TT\}$; uniform.

Event $A$ = at least one flip is heads. $A = \{HH, HT, TH\}$.

New sample space: $A$; uniform still.

Event $B$ = two heads.

The probability of two heads if at least one flip is heads.

The probability of $B$ given $A$ is $1/3$.

Conditional Probability: A non-uniform example

| $\omega$ | $\Pr[\omega]$ |
|---|---|
| Red | 3/10 |
| Green | 4/10 |
| Yellow | 2/10 |
| Blue | 1/10 |

$\Omega = \{\text{Red, Green, Yellow, Blue}\}$

$$\Pr[\text{Red} \mid \text{Red or Green}] = \frac{3}{7} = \frac{\Pr[\text{Red} \cap (\text{Red or Green})]}{\Pr[\text{Red or Green}]}.$$

Another non-uniform example

Consider $\Omega = \{1, 2, \dots, N\}$ with $\Pr[n] = p_n$.

Let $A = \{3, 4\}$, $B = \{1, 2, 3\}$.

$$\Pr[A \mid B] = \frac{p_3}{p_1 + p_2 + p_3} = \frac{\Pr[A \cap B]}{\Pr[B]}.$$

Yet another non-uniform example

Consider $\Omega = \{1, 2, \dots, N\}$ with $\Pr[n] = p_n$.

Let $A = \{2, 3, 4\}$, $B = \{1, 2, 3\}$.

$$\Pr[A \mid B] = \frac{p_2 + p_3}{p_1 + p_2 + p_3} = \frac{\Pr[A \cap B]}{\Pr[B]}.$$

Conditional Probability.

Definition: The conditional probability of $B$ given $A$ is

$$\Pr[B \mid A] = \frac{\Pr[A \cap B]}{\Pr[A]}.$$

[Figure: Venn diagram of $A$, $B$, and $A \cap B$. In $A$! In $B$? Must be in $A \cap B$.]

More fun with conditional probability.

Toss a red and a blue die; the sum is 4. What is the probability that red is 1?

Let $A$ = 'sum is 4' $= \{(1,3), (2,2), (3,1)\}$ and $B$ = 'red is 1', so $A \cap B = \{(1,3)\}$.

$\Pr[B \mid A] = \frac{|B \cap A|}{|A|} = \frac{1}{3}$; versus $\Pr[B] = 1/6$.

$B$ is more likely given $A$.
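The definition Pr[B | A] = Pr[A ∩ B] / Pr[A] covers the uniform and non-uniform examples alike. The following sketch assumes probability spaces stored as dictionaries and events as sets; `pr_event` and `pr_given` are hypothetical helper names introduced here for illustration.

```python
from fractions import Fraction
from itertools import product

def pr_event(pr, event):
    """Pr[E]: sum of Pr[w] over the outcomes w in E."""
    return sum((pr[w] for w in event), Fraction(0))

def pr_given(pr, b, a):
    """Pr[B | A] = Pr[A and B] / Pr[A]; assumes Pr[A] > 0."""
    return pr_event(pr, a & b) / pr_event(pr, a)

# Two fair coin flips, conditioning on "at least one heads".
coins = {w: Fraction(1, 4) for w in ("HH", "HT", "TH", "TT")}
two_heads_given_some = pr_given(coins, {"HH"}, {"HH", "HT", "TH"})

# Non-uniform colored balls, conditioning on "Red or Green".
balls = {"Red": Fraction(3, 10), "Green": Fraction(4, 10),
         "Yellow": Fraction(2, 10), "Blue": Fraction(1, 10)}
red_given_red_or_green = pr_given(balls, {"Red"}, {"Red", "Green"})

# Red and blue dice, conditioning on "sum is 4".
dice = {w: Fraction(1, 36) for w in product(range(1, 7), repeat=2)}
sum_is_4 = {w for w in dice if sum(w) == 4}
red_is_1 = {w for w in dice if w[0] == 1}
red_1_given_sum_4 = pr_given(dice, red_is_1, sum_is_4)

print(two_heads_given_some, red_given_red_or_green, red_1_given_sum_4)
```

Conditioning on A amounts to restricting the space to A and rescaling by Pr[A], so in a uniform space the ratio reduces to |A ∩ B| / |A|.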
