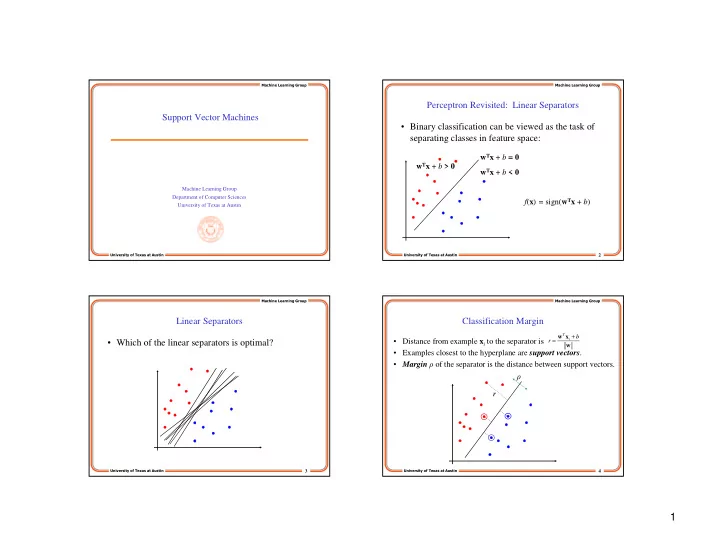

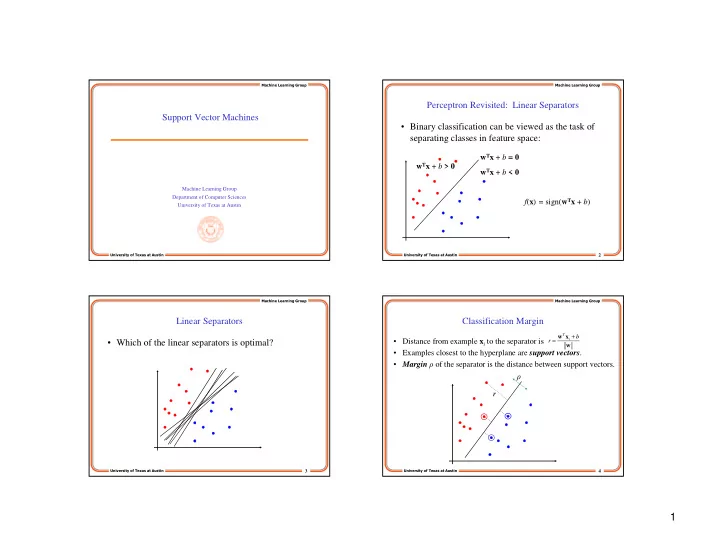

Support Vector Machines

Machine Learning Group
Department of Computer Sciences
University of Texas at Austin

Perceptron Revisited: Linear Separators

• Binary classification can be viewed as the task of separating classes in feature space. The separator is the hyperplane $\mathbf{w}^T\mathbf{x} + b = 0$, with $\mathbf{w}^T\mathbf{x} + b > 0$ on one side and $\mathbf{w}^T\mathbf{x} + b < 0$ on the other:

$$f(\mathbf{x}) = \operatorname{sign}(\mathbf{w}^T\mathbf{x} + b)$$

Linear Separators

• Which of the linear separators is optimal?

Classification Margin

• Distance from example $\mathbf{x}_i$ to the separator is

$$r = \frac{\mathbf{w}^T\mathbf{x}_i + b}{\|\mathbf{w}\|}$$

• Examples closest to the hyperplane are support vectors.
• Margin $\rho$ of the separator is the distance between support vectors.
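The decision rule $f(\mathbf{x}) = \operatorname{sign}(\mathbf{w}^T\mathbf{x} + b)$ and the distance from a point to the separator can be sketched in a few lines of plain Python. The function names and the example separator $3x_1 + 4x_2 - 5 = 0$ are assumptions chosen for illustration, not part of the slides.

```python
import math


def decision_value(w, b, x):
    """Return w^T x + b for plain Python sequences w and x."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(w, b, x):
    """Return sign(w^T x + b): +1, -1, or 0 exactly on the hyperplane."""
    value = decision_value(w, b, x)
    return (value > 0) - (value < 0)


def signed_distance(w, b, x):
    """Return (w^T x + b) / ||w||; positive on the w^T x + b > 0 side."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return decision_value(w, b, x) / norm


# Hypothetical separator 3*x1 + 4*x2 - 5 = 0, so ||w|| = 5.
w, b = [3.0, 4.0], -5.0

for point in ([3.0, 4.0], [0.0, 0.0], [1.0, 0.5]):
    print(point, classify(w, b, point), signed_distance(w, b, point))
```

The sign of the distance matches the predicted class, and its magnitude is what the margin is measured in.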

Maximum Margin Classification

• Maximizing the margin is good according to intuition and PAC theory.
• Implies that only support vectors matter; other training examples are ignorable.

Linear SVM Mathematically

• Let training set $\{(\mathbf{x}_i, y_i)\}_{i=1..n}$, $\mathbf{x}_i \in \mathbb{R}^d$, $y_i \in \{-1, 1\}$ be separated by a hyperplane with margin $\rho$. Then for each training example $(\mathbf{x}_i, y_i)$:

$$\begin{aligned}\mathbf{w}^T\mathbf{x}_i + b &\le -\rho/2 && \text{if } y_i = -1\\ \mathbf{w}^T\mathbf{x}_i + b &\ge \rho/2 && \text{if } y_i = 1\end{aligned} \quad\Longleftrightarrow\quad y_i(\mathbf{w}^T\mathbf{x}_i + b) \ge \rho/2$$

• For every support vector $\mathbf{x}_s$ the above inequality is an equality. After rescaling $\mathbf{w}$ and $b$ by $\rho/2$ in the equality, we obtain that the distance between each $\mathbf{x}_s$ and the hyperplane is

$$r = \frac{y_s(\mathbf{w}^T\mathbf{x}_s + b)}{\|\mathbf{w}\|} = \frac{1}{\|\mathbf{w}\|}$$

• Then the margin can be expressed through the (rescaled) $\mathbf{w}$ and $b$ as:

$$\rho = 2r = \frac{2}{\|\mathbf{w}\|}$$

Linear SVMs Mathematically (cont.)

• Then we can formulate the quadratic optimization problem:

Find $\mathbf{w}$ and $b$ such that
$\rho = \dfrac{2}{\|\mathbf{w}\|}$ is maximized
and for all $(\mathbf{x}_i, y_i)$, $i = 1..n$: $y_i(\mathbf{w}^T\mathbf{x}_i + b) \ge 1$

Which can be reformulated as:

Find $\mathbf{w}$ and $b$ such that
$\Phi(\mathbf{w}) = \|\mathbf{w}\|^2 = \mathbf{w}^T\mathbf{w}$ is minimized
and for all $(\mathbf{x}_i, y_i)$, $i = 1..n$: $y_i(\mathbf{w}^T\mathbf{x}_i + b) \ge 1$

Solving the Optimization Problem

Find $\mathbf{w}$ and $b$ such that
$\Phi(\mathbf{w}) = \mathbf{w}^T\mathbf{w}$ is minimized
and for all $(\mathbf{x}_i, y_i)$, $i = 1..n$: $y_i(\mathbf{w}^T\mathbf{x}_i + b) \ge 1$

• Need to optimize a quadratic function subject to linear constraints.
• Quadratic optimization problems are a well-known class of mathematical programming problems for which several (non-trivial) algorithms exist.
• The solution involves constructing a dual problem where a Lagrange multiplier $\alpha_i$ is associated with every inequality constraint in the primal (original) problem. (The dual below is the one obtained from the objective $\frac{1}{2}\mathbf{w}^T\mathbf{w}$; the constant factor does not change the minimizing $\mathbf{w}$ and $b$.)

Find $\alpha_1 \ldots \alpha_n$ such that
$Q(\boldsymbol{\alpha}) = \sum_i \alpha_i - \frac{1}{2}\sum_i\sum_j \alpha_i\alpha_j y_i y_j \mathbf{x}_i^T\mathbf{x}_j$ is maximized and
(1) $\sum_i \alpha_i y_i = 0$
(2) $\alpha_i \ge 0$ for all $\alpha_i$
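As a small check of the primal and dual formulations, the sketch below evaluates the constraints, the margin $2/\|\mathbf{w}\|$, and the dual objective $Q(\boldsymbol{\alpha})$ on a two-point toy set. The data, the hand-derived solution, and all function names are assumptions for illustration; no optimizer is run.

```python
import math


def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(ui * vi for ui, vi in zip(u, v))


def margin(w):
    """Margin rho = 2 / ||w|| for a rescaled hyperplane."""
    return 2.0 / math.sqrt(dot(w, w))


def satisfies_constraints(w, b, X, y, tol=1e-9):
    """Check y_i (w^T x_i + b) >= 1 for every training example."""
    return all(yi * (dot(w, xi) + b) >= 1.0 - tol for xi, yi in zip(X, y))


def dual_objective(alpha, X, y):
    """Q(alpha) = sum_i a_i - 1/2 sum_i sum_j a_i a_j y_i y_j x_i^T x_j."""
    n = len(X)
    quad = sum(
        alpha[i] * alpha[j] * y[i] * y[j] * dot(X[i], X[j])
        for i in range(n)
        for j in range(n)
    )
    return sum(alpha) - 0.5 * quad


# Toy training set (assumed for illustration): one point per class.
X = [[1.0, 1.0], [-1.0, -1.0]]
y = [1, -1]

# Solution worked out by hand for this toy set; both points are support vectors.
w, b = [0.5, 0.5], 0.0
alpha = [0.25, 0.25]

print("constraints hold:", satisfies_constraints(w, b, X, y))
print("margin:", margin(w))
print("dual objective:", dual_objective(alpha, X, y))
print("1/2 w^T w:", 0.5 * dot(w, w))
```

On this toy set the margin equals the distance between the two points, and the dual objective at the optimum matches $\frac{1}{2}\mathbf{w}^T\mathbf{w}$, as expected when the dual is derived from that scaling of the primal.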

The Optimization Problem Solution

• Given a solution $\alpha_1 \ldots \alpha_n$ to the dual problem, the solution to the primal is:

$$\mathbf{w} = \sum_i \alpha_i y_i \mathbf{x}_i \qquad b = y_k - \sum_i \alpha_i y_i \mathbf{x}_i^T\mathbf{x}_k \quad \text{for any } \alpha_k > 0$$

• Each non-zero $\alpha_i$ indicates that the corresponding $\mathbf{x}_i$ is a support vector.
• Then the classifying function is (note that we don’t need $\mathbf{w}$ explicitly):

$$f(\mathbf{x}) = \sum_i \alpha_i y_i \mathbf{x}_i^T\mathbf{x} + b$$

• Notice that it relies on an inner product between the test point $\mathbf{x}$ and the support vectors $\mathbf{x}_i$; we will return to this later.
• Also keep in mind that solving the optimization problem involved computing the inner products $\mathbf{x}_i^T\mathbf{x}_j$ between all training points.

Soft Margin Classification

• What if the training set is not linearly separable?
• Slack variables $\xi_i$ can be added to allow misclassification of difficult or noisy examples; the resulting margin is called soft.

Soft Margin Classification Mathematically

• The old formulation:

Find $\mathbf{w}$ and $b$ such that
$\Phi(\mathbf{w}) = \mathbf{w}^T\mathbf{w}$ is minimized
and for all $(\mathbf{x}_i, y_i)$, $i = 1..n$: $y_i(\mathbf{w}^T\mathbf{x}_i + b) \ge 1$

• Modified formulation incorporates slack variables:

Find $\mathbf{w}$ and $b$ such that
$\Phi(\mathbf{w}) = \mathbf{w}^T\mathbf{w} + C\sum_i \xi_i$ is minimized
and for all $(\mathbf{x}_i, y_i)$, $i = 1..n$: $y_i(\mathbf{w}^T\mathbf{x}_i + b) \ge 1 - \xi_i$, $\xi_i \ge 0$

• Parameter $C$ can be viewed as a way to control overfitting: it “trades off” the relative importance of maximizing the margin and fitting the training data.

Soft Margin Classification – Solution

• Dual problem is identical to the separable case except for the upper bound $C$ on each $\alpha_i$ (it would not be identical if the 2-norm penalty for slack variables $C\sum_i \xi_i^2$ were used in the primal objective; we would then need additional Lagrange multipliers for the slack variables):

Find $\alpha_1 \ldots \alpha_n$ such that
$Q(\boldsymbol{\alpha}) = \sum_i \alpha_i - \frac{1}{2}\sum_i\sum_j \alpha_i\alpha_j y_i y_j \mathbf{x}_i^T\mathbf{x}_j$ is maximized and
(1) $\sum_i \alpha_i y_i = 0$
(2) $0 \le \alpha_i \le C$ for all $\alpha_i$

• Again, $\mathbf{x}_i$ with non-zero $\alpha_i$ will be support vectors.
• Solution to the dual problem is:

$$\mathbf{w} = \sum_i \alpha_i y_i \mathbf{x}_i \qquad b = y_k(1 - \xi_k) - \sum_i \alpha_i y_i \mathbf{x}_i^T\mathbf{x}_k \quad \text{for any } k \text{ s.t. } \alpha_k > 0$$

• Again, we don’t need to compute $\mathbf{w}$ explicitly for classification:

$$f(\mathbf{x}) = \sum_i \alpha_i y_i \mathbf{x}_i^T\mathbf{x} + b$$
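The recovery of $\mathbf{w}$ and $b$ from the multipliers, the inner-product form of $f(\mathbf{x})$, and the slack values $\xi_i$ can be sketched as follows. The toy data, the hand-derived multipliers, and the helper names are assumptions for illustration; `recover_b` uses a support vector with zero slack, for which the soft-margin formula for $b$ reduces to the separable one.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(ui * vi for ui, vi in zip(u, v))


def recover_w(alpha, X, y):
    """w = sum_i alpha_i y_i x_i."""
    dim = len(X[0])
    return [sum(a * yi * xi[d] for a, yi, xi in zip(alpha, y, X))
            for d in range(dim)]


def recover_b(alpha, X, y, k):
    """b = y_k - sum_i alpha_i y_i x_i^T x_k, for a support vector k with zero slack."""
    return y[k] - sum(a * yi * dot(xi, X[k]) for a, yi, xi in zip(alpha, y, X))


def decision(alpha, X, y, b, x):
    """f(x) = sum_i alpha_i y_i x_i^T x + b, using inner products only."""
    return sum(a * yi * dot(xi, x) for a, yi, xi in zip(alpha, y, X)) + b


def slacks(w, b, X, y):
    """xi_i = max(0, 1 - y_i (w^T x_i + b)) for a fixed hyperplane."""
    return [max(0.0, 1.0 - yi * (dot(w, xi) + b)) for xi, yi in zip(X, y)]


# Toy data (assumed): the third point lies outside the margin, so alpha_3 = 0.
X = [[1.0, 1.0], [-1.0, -1.0], [3.0, 3.0]]
y = [1, -1, 1]
alpha = [0.25, 0.25, 0.0]  # worked out by hand for this toy set

w = recover_w(alpha, X, y)
b = recover_b(alpha, X, y, k=0)
print("w:", w, "b:", b)
print("f([3, 3]):", decision(alpha, X, y, b, [3.0, 3.0]))
print("slacks on training set:", slacks(w, b, X, y))

# A hypothetical noisy example on the wrong side of the separator.
print("slack of noisy point:", slacks(w, b, [[0.5, 0.5]], [-1]))
```

A slack above 1 corresponds to a misclassified point, and a slack between 0 and 1 to a point inside the margin but on the correct side.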

Theoretical Justification for Maximum Margins

• Vapnik has proved the following: the class of optimal linear separators has VC dimension $h$ bounded from above as

$$h \le \min\left\{\left\lceil \frac{D^2}{\rho^2} \right\rceil, m_0\right\} + 1$$

where $\rho$ is the margin, $D$ is the diameter of the smallest sphere that can enclose all of the training examples, and $m_0$ is the dimensionality.
• Intuitively, this implies that regardless of dimensionality $m_0$ we can minimize the VC dimension by maximizing the margin $\rho$.
• Thus, complexity of the classifier is kept small regardless of dimensionality.

Linear SVMs: Overview

• The classifier is a separating hyperplane.
• Most “important” training points are support vectors; they define the hyperplane.
• Quadratic optimization algorithms can identify which training points $\mathbf{x}_i$ are support vectors with non-zero Lagrangian multipliers $\alpha_i$.
• Both in the dual formulation of the problem and in the solution, training points appear only inside inner products:

Find $\alpha_1 \ldots \alpha_n$ such that
$Q(\boldsymbol{\alpha}) = \sum_i \alpha_i - \frac{1}{2}\sum_i\sum_j \alpha_i\alpha_j y_i y_j \mathbf{x}_i^T\mathbf{x}_j$ is maximized and
(1) $\sum_i \alpha_i y_i = 0$
(2) $0 \le \alpha_i \le C$ for all $\alpha_i$

$$f(\mathbf{x}) = \sum_i \alpha_i y_i \mathbf{x}_i^T\mathbf{x} + b$$

Non-linear SVMs

• Datasets that are linearly separable with some noise work out great:

[Figure: one-dimensional data along the $x$ axis]

• But what are we going to do if the dataset is just too hard?

[Figure: one-dimensional data along the $x$ axis]

• How about… mapping data to a higher-dimensional space:

[Figure: the data plotted with axes $x$ and $x^2$]

Non-linear SVMs: Feature spaces

• General idea: the original feature space can always be mapped to some higher-dimensional feature space where the training set is separable:

$$\Phi: \mathbf{x} \to \varphi(\mathbf{x})$$
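The idea of mapping one-dimensional data to a higher-dimensional space can be sketched with the map $x \to (x, x^2)$. The data set, the hand-picked separator in the mapped space, and the function names are assumptions for illustration.

```python
def phi(x):
    """Map a scalar x to the two-dimensional feature vector (x, x^2)."""
    return (x, x * x)


def separable_by_threshold(xs, ys):
    """Return True if a single threshold on x separates the two classes."""
    pos = [x for x, label in zip(xs, ys) if label == 1]
    neg = [x for x, label in zip(xs, ys) if label == -1]
    return max(pos) < min(neg) or max(neg) < min(pos)


def classify(w, b, z):
    """Linear classifier sign(w^T z + b) in the mapped space (ties go to -1)."""
    value = sum(wi * zi for wi, zi in zip(w, z)) + b
    return 1 if value > 0 else -1


# Toy 1-D data (assumed): the positive class sits on both sides of the negative one.
xs = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
ys = [1, 1, -1, -1, -1, 1, 1]

print("separable in 1-D:", separable_by_threshold(xs, ys))

# Hand-picked hyperplane x^2 = 2.5 in the (x, x^2) plane.
w, b = (0.0, 1.0), -2.5
predictions = [classify(w, b, phi(x)) for x in xs]
print("all correct after mapping:", predictions == ys)
```

No threshold on $x$ alone separates these classes, but a horizontal line in the $(x, x^2)$ plane does.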
