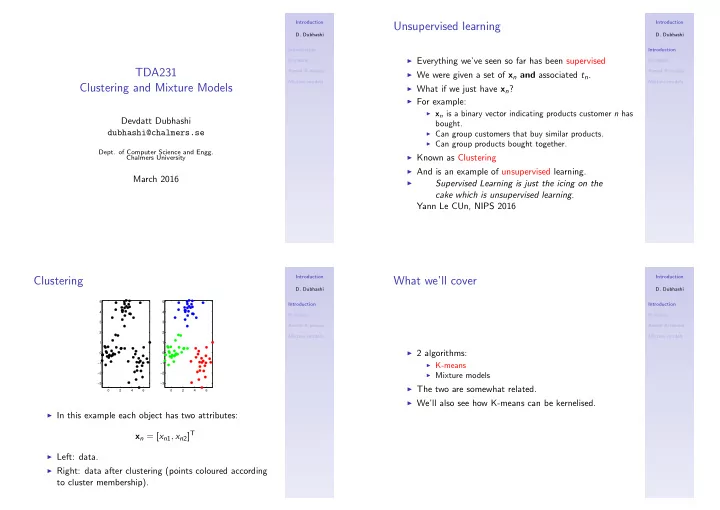

Introduction Introduction Unsupervised learning D. Dubhashi D. Dubhashi Introduction Introduction ◮ Everything we’ve seen so far has been supervised K-means K-means TDA231 Kernel K-means Kernel K-means ◮ We were given a set of x n and associated t n . Mixture models Mixture models Clustering and Mixture Models ◮ What if we just have x n ? ◮ For example: ◮ x n is a binary vector indicating products customer n has Devdatt Dubhashi bought. ◮ Can group customers that buy similar products. dubhashi@chalmers.se ◮ Can group products bought together. Dept. of Computer Science and Engg. ◮ Known as Clustering Chalmers University ◮ And is an example of unsupervised learning. March 2016 Supervised Learning is just the icing on the ◮ cake which is unsupervised learning. Yann Le CUn, NIPS 2016 Introduction Introduction Clustering What we’ll cover D. Dubhashi D. Dubhashi 5 5 Introduction Introduction 4 4 K-means K-means 3 3 Kernel K-means Kernel K-means 2 2 Mixture models Mixture models 1 1 ◮ 2 algorithms: 0 0 ◮ K-means −1 −1 ◮ Mixture models −2 −2 −3 −3 ◮ The two are somewhat related. 0 2 4 6 0 2 4 6 ◮ We’ll also see how K-means can be kernelised. ◮ In this example each object has two attributes: x n = [ x n 1 , x n 2 ] T ◮ Left: data. ◮ Right: data after clustering (points coloured according to cluster membership).

Introduction Introduction K-means How do we find µ k ? D. Dubhashi D. Dubhashi ◮ Assume that there are K clusters. Introduction Introduction ◮ Each cluster is defined by a position in the input space: ◮ No analytical solution – we can’t write down µ k as a K-means K-means µ k = [ µ k 1 , µ k 2 ] T Kernel K-means function of X . Kernel K-means Mixture models Mixture models ◮ Use an iterative algorithm: ◮ Each x n is assigned to its closest cluster: 1. Guess µ 1 , µ 2 , . . . , µ K 6 2. Assign each x n to its closest µ k 3. z nk = 1 if x n assigned to µ k (0 otherwise) 4 4. Update µ k to average of x n s assigned to µ k : 2 x 2 0 � N n =1 z nk x n µ k = −2 � N n =1 z nk −4 −6 5. Return to 2 until assignments do not change. −2 0 2 4 6 x 1 ◮ Algorithm will converge....it will reach a point where the ◮ Distance is normally Euclidean distance: assignments don’t change. d nk = ( x n − µ k ) T ( x n − µ k ) Introduction Introduction K-means – example K-means – example D. Dubhashi D. Dubhashi Introduction Introduction K-means K-means 6 Kernel K-means Kernel K-means 6 4 Mixture models Mixture models 4 2 2 x 2 x 2 0 0 −2 −2 −4 −4 −6 −2 0 2 4 6 −6 x 1 −2 0 2 4 6 x 1 ◮ Cluster means randomly assigned (top left). ◮ Cluster means updated to mean of assigned points. ◮ Points assigned to their closest mean.

Introduction Introduction K-means – example K-means – example D. Dubhashi D. Dubhashi Introduction Introduction K-means K-means Kernel K-means Kernel K-means 6 6 Mixture models Mixture models 4 4 2 2 x 2 x 2 0 0 −2 −2 −4 −4 −6 −6 −2 0 2 4 6 −2 0 2 4 6 x 1 x 1 ◮ Points re-assigned to closest mean. ◮ Cluster means updated to mean of assigned points. Introduction Introduction K-means – example Two Issues with K-Means D. Dubhashi D. Dubhashi Introduction Introduction K-means K-means Kernel K-means Kernel K-means 6 Mixture models Mixture models 4 2 ◮ What value of k should we use? x 2 0 ◮ How should we pick the initial centers? −2 ◮ Both these significantly affect resulting clustering. −4 −6 −2 0 2 4 6 x 1 ◮ Solution at convergence.

Introduction Introduction Initializing Centers k–Means++ (D. Arthur and S. Vassilvitskii D. Dubhashi D. Dubhashi (2007) Introduction Introduction K-means K-means Kernel K-means Kernel K-means ◮ Start with C 1 := { x } where x is chosen at random from Mixture models Mixture models ◮ Pick k random points. input points. ◮ Pick k points at random from input points. ◮ For i ≥ 2, pick a point x according to a probability ◮ Assign points at random to k groups and then take distribution ν i : centers of these groups. d 2 ( x , C i − 1 ) ◮ Pick a random input point for first center, next center ν i ( x ) = y d 2 ( y , C i − 1 ) � at a point as far away from this as possible, next as far away from first two ... and set C i := C i − 1 ∪ { x } . Gives a provably good O (log n ) approximation to optimal clustering. Introduction Introduction Choosing k Sum of Norms (SON) Convex Relaxation D. Dubhashi D. Dubhashi Introduction Introduction SON Relaxation (Lindsten et al 2011) K-means K-means µ � x i − µ i � 2 + λ � Kernel K-means Kernel K-means min � µ i − µ j � 2 . Mixture models Mixture models ◮ Intra-cluster variance: i < j 1 � ( x − µ k ) 2 . ◮ If you take only first term ... W k := | C k | x ∈ C k ◮ ... µ i = x i for all i . ◮ If you take only second term ... ◮ W := � k W k . ◮ ... µ i = µ j for all i , j . ◮ Pick k to minimize W k ◮ By varying λ , we steer between these two extremes. ◮ Elbow heuristic, Gap Statistic ... ◮ Do not need to know k in advance and do not need to do careful intialization. ◮ Fast scalable algorithm with guarantees under submission later today to ICML ...

Introduction Introduction When does K-means break? When does K-means break? D. Dubhashi D. Dubhashi Introduction Introduction K-means K-means 1.5 1.5 Kernel K-means Kernel K-means 1 1 Mixture models Mixture models 0.5 0.5 x 2 x 2 0 0 −0.5 −0.5 −1 −1 −1.5 −1.5 −1.5 −1 −0.5 0 0.5 1 1.5 −1.5 −1 −0.5 0 0.5 1 1.5 x 1 x 1 ◮ Data has clear cluster structure. ◮ Data has clear cluster structure. ◮ Outer cluster can not be represented as a single point. ◮ Outer cluster can not be represented as a single point. Introduction Introduction Kernelising K-means Kernelising K-means D. Dubhashi D. Dubhashi Introduction Introduction ◮ Maybe we can kernelise K-means? K-means K-means Kernel K-means Kernel K-means ◮ Distances: ◮ Multiply out: Mixture models Mixture models ( x n − µ k ) T ( x n − µ k ) N ◮ Cluster means: n x n − 2 N − 1 � m x n + N − 2 � x T z mk x T z mk z lk x T m x l k k m =1 m , l � N m =1 z mk x m µ k = � N ◮ Kernel substitution: m =1 z mk N N ◮ Distances can be written as (defining N k = � n z nk ): � � k ( x n , x n ) − 2 N − 1 z mk k ( x n , x m )+ N − 2 z mk z lk k ( x m , x l ) k k m =1 m , l =1 � T � � N N � ( x n − µ k ) T ( x n − µ k ) = x n − N − 1 � x n − N − 1 � z mk x m z mk x m k k m =1 m =1

Introduction Introduction Kernel K-means Kernel K-means – example D. Dubhashi D. Dubhashi Introduction Introduction ◮ Algorithm: K-means K-means 1.5 1. Choose a kernel and any necessary parameters. Kernel K-means Kernel K-means 1 2. Start with random assignments z nk . Mixture models Mixture models 3. For each x n assign it to the nearest ‘center’ where 0.5 distance is defined as: x 2 0 N N k ( x n , x n ) − 2 N − 1 � z mk k ( x n , x m )+ N − 2 � z mk z lk k ( x m , x l ) k k −0.5 m =1 m , l =1 −1 4. If assignments have changed, return to 3. −1.5 −1.5 −1 −0.5 0 0.5 1 1.5 ◮ Note – no µ k . This would be N − 1 x 1 � n z nk φ ( x n ) but we k don’t know φ ( x n ) for kernels. We only know φ ( x n ) T φ ( x m ) (last week)... ◮ Continue re-assigning until convergence. Introduction Introduction Kernel K-means – example Kernel K-means – example D. Dubhashi D. Dubhashi Introduction Introduction K-means K-means 1.5 1.5 Kernel K-means Kernel K-means 1 1 Mixture models Mixture models 0.5 0.5 x 2 x 2 0 0 −0.5 −0.5 −1 −1 −1.5 −1.5 −1.5 −1 −0.5 0 0.5 1 1.5 −1.5 −1 −0.5 0 0.5 1 1.5 x 1 x 1 ◮ Continue re-assigning until convergence. ◮ Continue re-assigning until convergence.

Introduction Introduction Kernel K-means K-means – summary D. Dubhashi D. Dubhashi Introduction Introduction K-means K-means Kernel K-means Kernel K-means ◮ Simple (and effective) clustering strategy. Mixture models Mixture models ◮ Converges to (local) minima of: ◮ Makes simple K-means algorithm more flexible. � � z nk ( x n − µ k ) T ( x n − µ k ) ◮ But, have to now set additional parameters. n k ◮ Very sensitive to initial conditions – lots of local optima. ◮ Sensitive to initialisation. ◮ How do we choose K ? ◮ Tricky: Quantity above always decreases as K increases. ◮ Can use CV if we have a measure of ‘goodness’. ◮ For clustering these will be application specific. Introduction Introduction Mixture models – thinking generatively A generative model D. Dubhashi D. Dubhashi Introduction Introduction 6 ◮ Assumption:Each x n comes from one of different K K-means K-means 4 distributions. Kernel K-means Kernel K-means Mixture models ◮ To generate X : Mixture models 2 ◮ For each n : x 2 0 1. Pick one of the K components. −2 2. Sample x n from this distribution. −4 ◮ We already have X −6 −2 −1 0 1 2 3 4 5 ◮ Define parameters of all these distributions as ∆. x 1 ◮ We’d like to reverse-engineer this process learn ∆ which we can then use to find which component each point ◮ Could we hypothesis a model that could have created came from. this data? ◮ Maximise the likelihood! ◮ Each x n seems to have come from one of three distributions.

Recommend

More recommend