Unit 7: Multiple Linear Regression, Lecture 1: Introduction to MLR


Unit 7: Multiple Linear Regression
Lecture 1: Introduction to MLR

Statistics 101
Thomas Leininger
June 20, 2013

Many variables in a model: Weights of books

| book | weight (g) | volume (cm³) | cover |
|---:|---:|---:|:---:|
| 1 | 800 | 885 | hc |
| 2 | 950 | 1016 | hc |
| 3 | 1050 | 1125 | hc |
| 4 | 350 | 239 | hc |
| 5 | 750 | 701 | hc |
| 6 | 600 | 641 | hc |
| 7 | 1075 | 1228 | hc |
| 8 | 250 | 412 | pb |
| 9 | 700 | 953 | pb |
| 10 | 650 | 929 | pb |
| 11 | 975 | 1492 | pb |
| 12 | 350 | 419 | pb |
| 13 | 950 | 1010 | pb |
| 14 | 425 | 595 | pb |
| 15 | 725 | 1034 | pb |

Statistics 101 (Thomas Leininger) U7 - L1: Multiple Linear Regression June 20, 2013 2 / 17

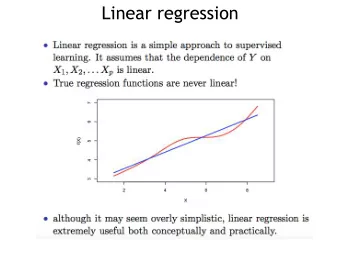

Many variables in a model: Weights of hardcover and paperback books

Can you identify a trend in the relationship between volume and weight of hardcover and paperback books?

[Figure: scatterplot of weight (g) against volume (cm³), with hardcover and paperback books marked separately]

Many variables in a model: Modeling weights of books using volume and cover type

```r
book_mlr = lm(weight ~ volume + cover, data = allbacks)
summary(book_mlr)
```

```
Coefficients:
             Estimate Std. Error t value Pr(>|t|)
(Intercept) 197.96284   59.19274   3.344 0.005841 **
volume        0.71795    0.06153  11.669  6.6e-08 ***
cover:pb   -184.04727   40.49420  -4.545 0.000672 ***

Residual standard error: 78.2 on 12 degrees of freedom
Multiple R-squared:  0.9275,  Adjusted R-squared:  0.9154
F-statistic: 76.73 on 2 and 12 DF,  p-value: 1.455e-07
```

Conditions for MLR?
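As a cross-check on the R output, the sketch below fits the same model (weight on volume plus a 0/1 paperback indicator) in plain Python by solving the normal equations (XᵀX)β = Xᵀy. This is a swapped-in technique, not what R does internally (`lm` uses a QR decomposition); it is fine for three coefficients but less numerically robust in general. The tuples pair each book's volume with its weight from the data table; with this pairing the fit should land on the coefficients, R², and residual standard error reported by `summary(book_mlr)`. The names `BOOKS`, `solve`, and `fit_ols` are illustrative.

```python
# (volume in cm^3, weight in g, cover) for the 15 books in the data table
BOOKS = [
    (885, 800, "hc"), (1016, 950, "hc"), (1125, 1050, "hc"), (239, 350, "hc"),
    (701, 750, "hc"), (641, 600, "hc"), (1228, 1075, "hc"),
    (412, 250, "pb"), (953, 700, "pb"), (929, 650, "pb"), (1492, 975, "pb"),
    (419, 350, "pb"), (1010, 950, "pb"), (595, 425, "pb"), (1034, 725, "pb"),
]


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_ols(rows):
    """Return (coefficients, R^2, residual standard error) for weight ~ volume + cover."""
    # Design matrix columns: intercept, volume, indicator that cover is paperback
    X = [[1.0, v, 1.0 if cover == "pb" else 0.0] for v, _, cover in rows]
    y = [float(w) for _, w, _ in rows]
    p = len(X[0])
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    beta = solve(xtx, xty)
    fitted = [sum(b * x for b, x in zip(beta, row)) for row in X]
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ybar = sum(y) / len(y)
    sst = sum((yi - ybar) ** 2 for yi in y)
    n, k = len(y), p - 1
    r2 = 1 - sse / sst
    rse = (sse / (n - k - 1)) ** 0.5  # residual standard error on n - k - 1 df
    return beta, r2, rse


beta, r2, rse = fit_ols(BOOKS)
print([round(b, 3) for b in beta], round(r2, 4), round(rse, 1))
```

If NumPy is available, `numpy.linalg.lstsq` would be the more idiomatic route; the pure-Python version keeps the example dependency-free.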

Many variables in a model: Linear model

| | Estimate | Std. Error | t value | Pr(>\|t\|) |
|---|---:|---:|---:|---:|
| (Intercept) | 197.96 | 59.19 | 3.34 | 0.01 |
| volume | 0.72 | 0.06 | 11.67 | 0.00 |
| cover:pb | -184.05 | 40.49 | -4.55 | 0.00 |

$$\widehat{\text{weight}} = 197.96 + 0.72\,\text{volume} - 184.05\,\text{cover:pb}$$

For hardcover books, plug in 0 for cover:pb:

$$\widehat{\text{weight}} = 197.96 + 0.72\,\text{volume} - 184.05 \times 0 = 197.96 + 0.72\,\text{volume}$$

For paperback books, plug in 1 for cover:pb:

$$\widehat{\text{weight}} = 197.96 + 0.72\,\text{volume} - 184.05 \times 1 = 13.91 + 0.72\,\text{volume}$$
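The plug-in step amounts to reading off one intercept per cover type while the slope stays the same. A minimal sketch using the rounded coefficients; `line_for_cover` is an illustrative name, and covers are coded `"hc"`/`"pb"` as in the data table.

```python
# Rounded coefficients from the book model
B0 = 197.96       # intercept
B_VOLUME = 0.72   # slope for volume (g per cm^3)
B_PB = -184.05    # coefficient of the cover:pb indicator


def line_for_cover(cover):
    """Return (intercept, slope) of the fitted line for cover type 'hc' or 'pb'."""
    pb = 1 if cover == "pb" else 0  # indicator: 0 for hardcover, 1 for paperback
    return B0 + B_PB * pb, B_VOLUME


for cover in ("hc", "pb"):
    intercept, slope = line_for_cover(cover)
    print(f"{cover}: weight-hat = {intercept:.2f} + {slope:.2f} * volume")
```

Both lines share the slope 0.72; only the intercept changes, so the model describes two parallel lines.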

Many variables in a model: Visualising the linear model

[Figure: weight (g) against volume (cm³) for hardcover and paperback books]

Many variables in a model: Interpretation of the regression coefficients

- Slope of volume: All else held constant, for each 1 cm³ increase in volume, we would expect weight to increase on average by 0.72 grams.
- Slope of cover: All else held constant, the model predicts that paperback books weigh 184.05 grams less than hardcover books, on average.
- Intercept: Hardcover books with no volume are expected on average to weigh about 198 grams. Obviously, the intercept does not make sense in context. It only serves to adjust the height of the line.
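Each interpretation can be checked by comparing two predictions that differ in only one input, which is what "all else held constant" means here. The sketch uses the rounded coefficients and an arbitrary volume of 700 cm³; because this model has no interaction term, the differences come out the same at any volume. `predict_weight` is an illustrative name.

```python
B0, B_VOLUME, B_PB = 197.96, 0.72, -184.05  # rounded coefficients


def predict_weight(volume, paperback):
    """Predicted weight in grams; paperback is 1 for paperback, 0 for hardcover."""
    return B0 + B_VOLUME * volume + B_PB * paperback


# Slope of volume: add 1 cm^3 while keeping the cover type fixed
volume_effect = predict_weight(701, 0) - predict_weight(700, 0)
# Slope of cover: switch hardcover to paperback while keeping volume fixed
cover_effect = predict_weight(700, 1) - predict_weight(700, 0)
# Intercept: a hardcover book with zero volume (an extrapolation)
intercept = predict_weight(0, 0)

print(round(volume_effect, 2), round(cover_effect, 2), round(intercept, 2))
```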

Many variables in a model: Prediction

Question: Which of the following is the correct calculation for the predicted weight of a paperback book that is 600 cm³?

- (a) 197.96 + 0.72 × 600 − 184.05 × 1
- (b) 184.05 + 0.72 × 600 − 197.96 × 1
- (c) 197.96 + 0.72 × 600 − 184.05 × 0
- (d) 197.96 + 0.72 × 1 − 184.05 × 600

Many variables in a model: A note on “interaction” variables

$$\widehat{\text{weight}} = 197.96 + 0.72\,\text{volume} - 184.05\,\text{cover:pb}$$

This model assumes that hardcover and paperback books have the same slope for the relationship between their volume and weight. If this isn’t reasonable, then we would include an “interaction” variable in the model (beyond the scope of this course).

[Figure: weight (g) against volume (cm³) for hardcover and paperback books]

Many variables in a model: Regression topics we may/may not cover

- Adjusted $R^2$
- Inference in MLR
- Collinearity in MLR
- Interactions between variables
- Model diagnostics and transformations
- Logistic/Poisson/other regression
- and many more

Adjusted $R^2$: $R^2$ vs. adjusted $R^2$

When any variable is added to the model, $R^2$ never decreases (in practice it almost always increases). If the added variable doesn’t really provide any new information, or is completely unrelated, adjusted $R^2$ does not increase and may even decrease.

$R^2_{adj}$ properties:

- $R^2_{adj}$ will always be smaller than $R^2$.
- $R^2_{adj}$ applies a penalty for the number of predictors included in the model.

Therefore, when comparing models, we choose the one with the higher $R^2_{adj}$.
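Adjusted $R^2$ is commonly computed as $R^2_{adj} = 1 - (1 - R^2)\frac{n - 1}{n - k - 1}$, where $n$ is the number of observations and $k$ the number of predictors; the factor $(n-1)/(n-k-1)$ is the penalty. The sketch applies it to the book model ($n = 15$, $k = 2$, $R^2 = 0.9275$), which should reproduce the Adjusted R-squared of 0.9154 in the R output, and then to a hypothetical third predictor that raises $R^2$ only to 0.9276 (a made-up value for illustration). `adjusted_r2` is an illustrative name.

```python
def adjusted_r2(r2, n, k):
    """Adjusted R^2 for a model with k predictors fit to n observations."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


# Book model: R^2 = 0.9275 with n = 15 books and k = 2 predictors
book_adj = adjusted_r2(0.9275, n=15, k=2)

# Hypothetical: an uninformative third predictor nudges R^2 up to 0.9276
padded_adj = adjusted_r2(0.9276, n=15, k=3)

print(round(book_adj, 4), round(padded_adj, 4))
```

$R^2$ rises slightly in the hypothetical case, but adjusted $R^2$ falls, which is the penalty at work.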

Collinearity and parsimony: Collinearity between explanatory variables

Two predictor variables are said to be collinear when they are correlated, and this collinearity (also called multicollinearity) complicates model estimation.

Remember: Predictors are also called explanatory or independent variables, so they should be independent of each other.

We don’t like adding predictors that are associated with each other to the model, because often the addition of such a variable brings nothing to the table. Instead, we prefer the simplest best model, i.e., the parsimonious model.

In addition, adding collinear variables can result in unreliable estimates of the slope parameters: their standard errors are inflated, and the estimates can shift substantially when a correlated predictor is added or removed.
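One quick check for collinearity between two predictors is their sample correlation. The sketch computes the Pearson correlation between volume and the paperback indicator for the book data (with a 0/1 variable this is the point-biserial correlation). A value near 0, as here (about 0.03), suggests the two predictors carry largely separate information; a value near ±1 would signal collinearity. This is only a pairwise check, and `pearson_r` is an illustrative name.

```python
import math

# Volume (cm^3) and cover for the 15 books in the data table, in table order
VOLUMES = [885, 1016, 1125, 239, 701, 641, 1228, 412, 953, 929, 1492, 419, 1010, 595, 1034]
COVERS = ["hc"] * 7 + ["pb"] * 8


def pearson_r(x, y):
    """Sample Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Encode cover as the same 0/1 indicator the model uses (1 = paperback)
pb = [1 if c == "pb" else 0 for c in COVERS]
r = pearson_r(VOLUMES, pb)
print(round(r, 3))
```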

Collinearity and parsimony: Inference for the model as a whole

Is the model as a whole significant?

$$H_0: \beta_1 = \beta_2 = \cdots = \beta_k = 0$$

$$H_A: \text{At least one of the } \beta_i \neq 0$$

`F-statistic: 29.74 on 4 and 429 DF, p-value: < 2.2e-16`

Since p-value < 0.05, the model as a whole is significant.

- The F test yielding a significant result doesn’t mean the model fits the data well; it just means at least one of the βs is non-zero.
- The F test not yielding a significant result doesn’t mean individual variables included in the model are not good predictors of y; it just means that the combination of these variables doesn’t yield a good model.
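The overall F statistic compares explained to unexplained variation, each divided by its degrees of freedom, and can be written in terms of $R^2$: $F = \frac{R^2/k}{(1 - R^2)/(n - k - 1)}$ on $k$ and $n - k - 1$ df. The kid's-score output here does not report $R^2$, so the sketch applies the formula to the book model ($R^2 = 0.9275$, $n = 15$, $k = 2$), where it should come out close to R's 76.73 on 2 and 12 DF (small differences come from the rounded $R^2$). Turning F into a p-value needs the F distribution's CDF, which the Python standard library lacks; `scipy.stats.f.sf` would provide it if SciPy is available. `overall_f` is an illustrative name.

```python
def overall_f(r2, n, k):
    """F statistic for H0: beta_1 = ... = beta_k = 0, computed from R^2."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))


# Book model: numerator df = k = 2, denominator df = n - k - 1 = 12
f_book = overall_f(0.9275, n=15, k=2)
print(round(f_book, 2), "on", 2, "and", 15 - 2 - 1, "DF")
```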

Collinearity and parsimony: Inference for the slope(s)

Is whether or not the mother went to high school a significant predictor of the kid’s cognitive test score, given all other variables in the model?

$$H_0: \beta_1 = 0, \text{ when all other variables are included in the model}$$

$$H_A: \beta_1 \neq 0, \text{ when all other variables are included in the model}$$

```
            Estimate Std. Error t value Pr(>|t|)
(Intercept) 19.59241    9.21906   2.125   0.0341
mom_hsyes    5.09482    2.31450   2.201   0.0282
mom_iq       0.56147    0.06064   9.259   <2e-16
mom_workyes  2.53718    2.35067   1.079   0.2810
mom_age      0.21802    0.33074   0.659   0.5101

Residual standard error: 18.14 on 429 degrees of freedom
```

$$T = 2.201, \quad df = n - k - 1 = 434 - 4 - 1 = 429, \quad \text{p-value} = 0.0282$$

Since p-value < 0.05, whether or not mom went to high school is a significant predictor of the kid’s test score, given all other variables in the model.
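The T and df on this slide follow from two lines of arithmetic: $T = b_i / SE_{b_i}$ and $df = n - k - 1$. The sketch reproduces them for the mom_hs slope. The exact p-value needs the t distribution's CDF, which Python's standard library lacks, so as a stated approximation the sketch uses the standard normal via `math.erfc`; with 429 df this lands near R's 0.0282. `2 * scipy.stats.t.sf(abs(t), df)` would give the exact t-based value if SciPy is available. `slope_t_test` is an illustrative name.

```python
import math


def slope_t_test(estimate, se, n, k):
    """t statistic, df, and an approximate two-sided p-value for H0: beta_i = 0."""
    t = estimate / se
    df = n - k - 1
    # Normal approximation to the t distribution: 2 * P(Z > |t|) = erfc(|t| / sqrt(2)).
    # Reasonable here because df = 429 is large; it is not the exact t-based p-value.
    p_approx = math.erfc(abs(t) / math.sqrt(2))
    return t, df, p_approx


# mom_hsyes row: estimate 5.09482, SE 2.31450; n = 434 kids, k = 4 predictors
t, df, p = slope_t_test(5.09482, 2.31450, n=434, k=4)
print(f"T = {t:.3f}, df = {df}, p-value ~ {p:.4f}")
```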

Collinearity and parsimony: Inference for the slope(s), Interpreting the slope

Question: What is the correct interpretation of the slope for mom_work?

| | Estimate | Std. Error | t value | Pr(>\|t\|) |
|---|---:|---:|---:|---:|
| (Intercept) | 19.59 | 9.22 | 2.13 | 0.03 |
| mom_hs:yes | 5.09 | 2.31 | 2.20 | 0.03 |
| mom_iq | 0.56 | 0.06 | 9.26 | 0.00 |
| mom_work:yes | 2.54 | 2.35 | 1.08 | 0.28 |
| mom_age | 0.22 | 0.33 | 0.66 | 0.51 |

All else being equal, kids whose moms worked during the first three years of the kid’s life

- (a) are estimated to score 2.54 points lower
- (b) are estimated to score 2.54 points higher

than those whose moms did not work.

Collinearity and parsimony: Inference for the slope(s), Application exercise: CI for slope in MLR

Construct a 95% confidence interval for the slope of mom_work.

$$b_i \pm t^\star_{df} \times SE_{b_i}$$

$$df = n - k - 1 = 434 - 4 - 1 = 429 \rightarrow 400$$

$$2.54 \pm 1.966 \times 2.35 = 2.54 \pm 4.62 \rightarrow (-2.08,\ 7.16)$$

We are 95% confident that, all else being equal, kids whose moms worked during the first three years of the kid’s life are estimated to score between 2.08 points lower and 7.16 points higher than those whose moms did not work.
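The interval arithmetic can be written as a small function. The sketch hardcodes $t^\star = 1.966$, the two-sided 95% critical value for 400 degrees of freedom from a t-table (an assumption, since the Python standard library has no t quantile function; `scipy.stats.t.ppf(0.975, 429)` would compute it directly if SciPy is available). `slope_ci` and `T_STAR` are illustrative names.

```python
def slope_ci(estimate, se, t_star):
    """Confidence interval estimate +/- t_star * se for a regression slope."""
    margin = t_star * se
    return estimate - margin, estimate + margin


T_STAR = 1.966  # assumed t-table value: 95% two-sided, df = 400

# mom_work slope from the rounded output: estimate 2.54, SE 2.35
low, high = slope_ci(2.54, 2.35, T_STAR)
print(f"({low:.2f}, {high:.2f})")
```

Because the interval contains 0, the data are consistent with no effect of mom_work, in line with the large p-value (0.28) for that slope.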
