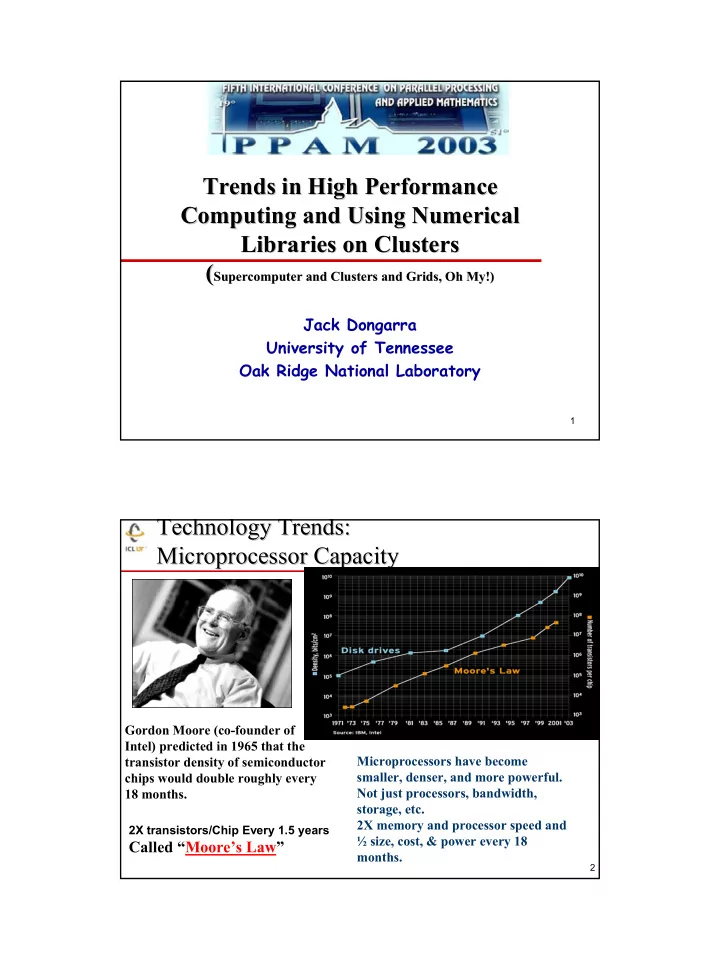

Trends in High Performance Computing and Using Numerical Libraries on Clusters
(Supercomputer and Clusters and Grids, Oh My!)

Jack Dongarra
University of Tennessee
Oak Ridge National Laboratory

Technology Trends: Microprocessor Capacity

♦ Gordon Moore (co-founder of Intel) predicted in 1965 that the transistor density of semiconductor chips would double roughly every 18 months.
♦ 2X transistors/chip every 1.5 years, called "Moore's Law".
♦ Microprocessors have become smaller, denser, and more powerful.
♦ Not just processors: bandwidth, storage, etc.
♦ 2X memory and processor speed and ½ size, cost, & power every 18 months.
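The doubling rule on this slide compounds quickly. A minimal sketch of the arithmetic, assuming the slide's 18-month doubling period holds exactly (the function name `growth_factor` is illustrative, not from the talk):

```python
def growth_factor(years: float, doubling_period_years: float = 1.5) -> float:
    """Multiplicative growth after `years` if a quantity doubles every period."""
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Tabulate the compounded factor for a few horizons.
    for years in (1.5, 3, 10, 15):
        print(f"{years:>4} years -> factor {growth_factor(years):,.0f}")
```

Under that assumption, 15 years is ten doublings, a factor of about a thousand.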

Moore's Law

[Figure: peak performance of the fastest machines, 1950 to 2010, log scale from 1 KFlop/s to 1 PFlop/s. Architecture eras labeled Scalar, Super Scalar, Vector, Parallel, and Super Scalar/Vector/Parallel. Machines marked: EDSAC 1, UNIVAC 1, IBM 7090, CDC 6600, CDC 7600, IBM 360/195, Cray 1, Cray X-MP, Cray 2, TMC CM-2, TMC CM-5, Cray T3D, ASCI Red, ASCI White Pacific, Earth Simulator.]

| Year | Floating point operations / second (Flop/s) |
|---|---|
| 1941 | 1 |
| 1945 | 100 |
| 1949 | 1,000 (1 KiloFlop/s, KFlop/s) |
| 1951 | 10,000 |
| 1961 | 100,000 |
| 1964 | 1,000,000 (1 MegaFlop/s, MFlop/s) |
| 1968 | 10,000,000 |
| 1975 | 100,000,000 |
| 1987 | 1,000,000,000 (1 GigaFlop/s, GFlop/s) |
| 1992 | 10,000,000,000 |
| 1993 | 100,000,000,000 |
| 1997 | 1,000,000,000,000 (1 TeraFlop/s, TFlop/s) |
| 2000 | 10,000,000,000,000 |
| 2003 | 35,000,000,000,000 (35 TFlop/s) |

H. Meuer, H. Simon, E. Strohmaier, & JD

- Listing of the 500 most powerful Computers in the World
- Yardstick: Rmax from LINPACK MPP (Ax=b, dense problem)
- Updated twice a year: SC'xy in the States in November, Meeting in Mannheim, Germany in June
- All data available from www.top500.org

[Figure: TPP performance, rate vs. size.]
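The yardstick named above is the rate achieved while solving a dense system Ax=b. The sketch below shows the idea only: it is not the LINPACK or HPL benchmark code, it runs in pure Python (so the rate it reports is far below what tuned libraries reach), and the names `solve_dense` and `linpack_style_rate` are hypothetical. It assumes the conventional LINPACK operation count of 2/3 n^3 + 2 n^2.

```python
import random
import time


def solve_dense(a, b):
    """Solve Ax=b by Gaussian elimination with partial pivoting (inputs are copied)."""
    n = len(b)
    a = [row[:] for row in a]
    b = b[:]
    for k in range(n):
        # Partial pivoting: bring the largest remaining entry of column k to the diagonal.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0.0:
            raise ValueError("matrix is singular")
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        # Eliminate column k below the diagonal.
        for i in range(k + 1, n):
            m = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= m * a[k][j]
            b[i] -= m * b[k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


def linpack_style_rate(n, seed=0):
    """Return (Flop/s, max error) for a random n-by-n system whose solution is all ones."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [sum(row) for row in a]  # right-hand side chosen so that x = (1, ..., 1)
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - start
    flops = (2.0 / 3.0) * n**3 + 2.0 * n**2  # assumed operation count
    return flops / elapsed, max(abs(xi - 1.0) for xi in x)


if __name__ == "__main__":
    rate, err = linpack_style_rate(100)
    print(f"{rate / 1e6:.2f} MFlop/s, max error {err:.2e}")
```

The reported rate depends on the problem size n, which is why the list quotes the best rate observed (Rmax) rather than a single fixed-size result.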

A Tour de Force in Engineering

♦ Homogeneous, Centralized, Proprietary, Expensive!
♦ Target Application: CFD-Weather, Climate, Earthquakes
♦ 640 NEC SX/6 Nodes (mod)
  - 5120 CPUs which have vector ops
  - Each CPU 8 Gflop/s Peak
♦ 40 TFlop/s (peak)
♦ $1/2 Billion for machine & building
♦ Footprint of 4 tennis courts
♦ 7 MWatts
  - Say 10 cent/KWhr - $16.8K/day = $6M/year!
♦ Expect to be on top of Top500 until 60-100 TFlop ASCI machine arrives
♦ From the Top500 (June 2003)
  - Performance of ESC ≈ Σ Next Top 4 Computers
  - ~ 10% of performance of all the Top500 machines

June 2003

| Rank | Manufacturer | Computer | Rmax (GFlop/s) | Installation Site | Year | # Proc | Rpeak (GFlop/s) |
|---|---|---|---|---|---|---|---|
| 1 | NEC | Earth-Simulator | 35860 | Earth Simulator Center, Yokohama | 2002 | 5120 | 40960 |
| 2 | Hewlett-Packard | ASCI Q - AlphaServer SC ES45/1.25 GHz | 13880 | Los Alamos National Laboratory, Los Alamos | 2002 | 8192 | 20480 |
| 3 | Linux NetworX | MCR Linux Cluster Xeon 2.4 GHz - Quadrics | 7634 | Lawrence Livermore National Laboratory, Livermore | 2002 | 2304 | 11060 |
| 4 | IBM | ASCI White, SP Power3 375 MHz | 7304 | Lawrence Livermore National Laboratory, Livermore | 2000 | 8192 | 12288 |
| 5 | IBM | SP Power3 375 MHz 16 way | 7304 | NERSC/LBNL, Berkeley | 2002 | 6656 | 9984 |
| 6 | IBM/Quadrics | xSeries Cluster Xeon 2.4 GHz - Quadrics | 6586 | Lawrence Livermore National Laboratory, Livermore | 2003 | 1920 | 9216 |
| 7 | Fujitsu | PRIMEPOWER HPC2500 (1.3 GHz) | 5406 | National Aerospace Lab, Tokyo | 2002 | 2304 | 11980 |
| 8 | Hewlett-Packard | rx2600 Itanium2 1 GHz Cluster - Quadrics | 4881 | Pacific Northwest National Laboratory, Richland | 2003 | 1540 | 6160 |
| 9 | Hewlett-Packard | AlphaServer SC ES45/1 GHz | 4463 | Pittsburgh Supercomputing Center, Pittsburgh | 2001 | 3016 | 6032 |
| 10 | Hewlett-Packard | AlphaServer SC ES45/1 GHz | 3980 | Commissariat a l'Energie Atomique (CEA), Bruyeres-le-Chatel | 2001 | 2560 | 5120 |
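The Earth Simulator figures quoted above can be checked with a few lines of arithmetic. This is a sketch using only numbers from the slide and the June 2003 table; the variable and function names are illustrative, and a 365-day year of continuous operation is assumed.

```python
def power_cost(megawatts: float, dollars_per_kwh: float):
    """Return (cost per day, cost per year) for a machine drawing constant power."""
    kilowatts = megawatts * 1000.0
    per_day = kilowatts * 24.0 * dollars_per_kwh
    return per_day, per_day * 365.0  # assumes year-round operation


# 7 MW at 10 cents per kWh, as on the slide.
per_day, per_year = power_cost(7.0, 0.10)

# LINPACK efficiency of the Earth Simulator: Rmax / Rpeak (both in GFlop/s).
es_rmax, es_rpeak = 35860.0, 40960.0
efficiency = es_rmax / es_rpeak

# Sum of Rmax for ranks 2 to 5, compared against the Earth Simulator alone.
next_four = 13880.0 + 7634.0 + 7304.0 + 7304.0

if __name__ == "__main__":
    print(f"power: ${per_day:,.0f}/day, ${per_year / 1e6:.2f}M/year")
    print(f"Rmax/Rpeak: {efficiency:.1%}")
    print(f"next four systems: {next_four:,.0f} GFlop/s vs {es_rmax:,.0f} GFlop/s")
```

The results agree with the slide's rounded values: about $16.8K per day, about $6M per year, and a sum for the next four systems close to the Earth Simulator's own Rmax.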

TOP500 - Performance - June 2003

[Figure: TOP500 performance from June 1993 to June 2003, log scale from 100 Mflop/s to 1 Pflop/s. SUM curve: 1.17 TF/s rising to 374 TF/s. N=1 curve: 59.7 GF/s rising to 35.8 TF/s, with systems marked Fujitsu 'NWT' NAL, Intel ASCI Red Sandia, IBM ASCI White LLNL, and NEC ES. N=500 curve: 0.4 GF/s rising to 244 GF/s. "My Laptop" marked for comparison.]

Performance Extrapolation

[Figure: Sum, N=1, and N=500 curves extrapolated from June 1993 to June 2010, log scale from 100 MFlop/s to 10 PFlop/s. Marked: ASCI P (12,544 proc), Blue Gene (130,000 proc), "TFlop/s to enter the list", and "PFlop/s computer".]
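The extrapolation can be approximated from the endpoint values printed on the performance chart. The sketch below assumes pure exponential growth between June 1993 and June 2003, and assumes the pairing of chart labels with curves (59.7 GF/s and 35.8 TF/s for N=1; 0.4 GF/s and 244 GF/s for N=500). It is a two-point estimate, not the fit used for the slide, and the helper names are illustrative.

```python
import math


def annual_growth(v_start: float, v_end: float, years: float) -> float:
    """Constant yearly growth factor that takes v_start to v_end in `years`."""
    return (v_end / v_start) ** (1.0 / years)


def years_until(v_now: float, target: float, growth: float) -> float:
    """Years needed to reach `target` from `v_now` at a constant yearly growth factor."""
    return math.log(target / v_now) / math.log(growth)


if __name__ == "__main__":
    # All values in GFlop/s; endpoints are June 1993 and June 2003 (assumed pairing).
    g_top = annual_growth(59.7, 35800.0, 10)
    g_500 = annual_growth(0.4, 244.0, 10)
    print(f"N=1 grows about x{g_top:.2f} per year")
    print(f"N=500 grows about x{g_500:.2f} per year")
    print(f"N=1 reaches 1 PFlop/s about {years_until(35800.0, 1e6, g_top):.1f} years after June 2003")
    print(f"N=500 reaches 1 TFlop/s about {years_until(244.0, 1e3, g_500):.1f} years after June 2003")
```

Both curves come out close to a doubling every year, which is faster than the 18-month doubling quoted for transistor counts.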

Performance Extrapolation

[Figure: Sum, N=1, and N=500 curves extrapolated from June 1993 to June 2010, log scale from 100 MFlop/s to 10 PFlop/s. Marked: ASCI P (12,544 proc), Blue Gene (130,000 proc), and "My Laptop".]

Excerpt from the Top500 - 21st list

| Rank | Manufacturer | Computer | Rmax [TF/s] | Installation Site | Country | # Proc |
|---|---|---|---|---|---|---|
| … | … | … | … | … | … | … |
| 3 | Linux Networx | MCR Linux Cluster Xeon - Quadrics | 7.634 | Lawrence Livermore National Laboratory | USA | 2304 |
| 6 | IBM | xSeries Cluster Xeon - Quadrics | 6.586 | Lawrence Livermore National Laboratory | USA | 1920 |
| 8 | Hewlett-Packard | rx2600 Itanium2 - Quadrics | 4.881 | Pacific Northwest National Laboratory | USA | 1540 |
| 11 | HPTi | Aspen Systems, Xeon - Myrinet2000 | 3.337 | Forecast Systems Laboratory - NOAA | USA | 1536 |
| 19 | Atipa Technology | P4 Xeon Cluster - Myrinet | 2.207 | Louisiana State University | USA | 1024 |
| 25 | Dell | PowerEdge 2650 P4 Xeon - Myrinet | 2.004 | University at Buffalo, SUNY, CCR | USA | 600 |
| 31 | IBM | Titan Cluster Itanium2 - Myrinet | 1.593 | NCSA | USA | 512 |
| 39 | Self-made | PowerRACK-HX Xeon GigE | 1.202 | University of Toronto | Canada | 512 |
| … | … | … | … | … | … | … |

♦ Not "bottom feeders"
♦ 149 Clusters on the Top500
♦ 119 are Intel based
♦ A substantial part of these are installed at industrial customers, especially in the oil industry.
♦ 23 of these clusters are labeled as 'Self-Made'.

SETI@home: Global Distributed Computing

♦ Running on 500,000 PCs, ~1300 CPU Years per Day
  - 1.3M CPU Years so far
♦ Sophisticated Data & Signal Processing Analysis
♦ Distributes Datasets from Arecibo Radio Telescope

SETI@home

♦ Use thousands of Internet-connected PCs to help in the search for extraterrestrial intelligence.
♦ When a participant's computer is idle or being wasted, this software will download a chunk of data of about half a MB for analysis. Each client performs about 3 Tflop (3 x 10^12 floating-point operations) in 15 hours.
♦ The results of this analysis are sent back to the SETI team and combined with those of thousands of other participants.
♦ Largest distributed computation project in existence
  - Averaging 55 Tflop/s
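The SETI@home numbers above can be related to one another with simple arithmetic. This sketch assumes every PC contributes 24 hours a day and that a year has 365 days. It reads "3 Tflops in 15 hours" as 3 x 10^12 operations per work unit, which is an interpretation of the slide, not something it states. The names are illustrative.

```python
PCS = 500_000                  # participating PCs, from the slide
OPS_PER_WORK_UNIT = 3e12       # assumed meaning of "3 Tflops for each client"
HOURS_PER_WORK_UNIT = 15.0

# One PC running for one day contributes 1/365 of a CPU year.
cpu_years_per_day = PCS / 365.0

# Sustained rate of a single client while it processes one work unit.
client_rate = OPS_PER_WORK_UNIT / (HOURS_PER_WORK_UNIT * 3600.0)

# Upper-bound aggregate if every PC sustained that rate at the same time.
aggregate_rate = PCS * client_rate

if __name__ == "__main__":
    print(f"CPU years per day: {cpu_years_per_day:,.0f}")
    print(f"per-client rate:   {client_rate / 1e6:.1f} Mflop/s")
    print(f"aggregate rate:    {aggregate_rate / 1e12:.1f} Tflop/s")
```

The CPU-years figure lands near the slide's ~1300 per day. The aggregate rate is the same order of magnitude as the quoted 55 Tflop/s average; the slide does not say how that average was measured, so the two need not match exactly.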

♦ Google query attributes
  - 150M queries/day (2000/second)
  - 100 countries
  - 3B documents in the index
♦ Data centers
  - 15,000 Linux systems in 6 data centers
  - 15 TFlop/s and 1000 TB total capability
  - 40-80 1U/2U servers/cabinet
  - 100 Mb Ethernet switches/cabinet with gigabit Ethernet uplink
  - growth from 4,000 systems (June 2000), 18M queries then
♦ Performance and operation
  - simple reissue of failed commands to new servers
  - no performance debugging: problems are not reproducible

♦ Eigenvalue problem
  - n = 2.7 x 10^9 (see: MathWorks Cleve's Corner)
  - Matrix entry is 1 if there's a hyperlink from page i to j (forward links and back links)
♦ Form a transition probability matrix of the Markov chain
  - Matrix is not sparse, but it is a rank one modification of a sparse matrix
♦ Largest eigenvalue is equal to one; want the corresponding eigenvector (the state vector of the Markov chain).
  - The elements of the eigenvector are Google's PageRank (Larry Page).
♦ When you search: they have an inverted index of the web pages
  - Words and links that have those words
♦ Your query of words: find links, then order lists of pages by their PageRank.

Source: Monika Henzinger, Google & Cleve Moler

Grid Computing is About …

Resource sharing & coordinated problem solving in dynamic, multi-institutional virtual organizations

[Figure: "Telescience Grid", courtesy of Mark Ellisman. Components labeled: data acquisition, advanced visualization, analysis, computational resources, imaging instruments, large-scale databases.]
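The PageRank eigenvector described on the Google slide can be approximated by power iteration on a toy graph. This is a minimal sketch, not Google's implementation. The damping value 0.85 is an assumption taken from common descriptions of PageRank and does not appear on the slide, and the function name `pagerank` and the four-page example graph are hypothetical. The rank-one modification is applied as a single scalar added to every entry, so the dense matrix is never formed.

```python
def pagerank(links, damping=0.85, tol=1e-12, max_iter=1000):
    """Power iteration for the dominant eigenvector of the damped link matrix.

    links[i] is the list of pages that page i links to.
    """
    n = len(links)
    rank = [1.0 / n] * n
    for _ in range(max_iter):
        new = [0.0] * n
        dangling = 0.0
        # Sparse part: each page splits its rank evenly over its forward links.
        for i, outs in enumerate(links):
            if outs:
                share = rank[i] / len(outs)
                for j in outs:
                    new[j] += share
            else:
                dangling += rank[i]  # pages without links spread rank uniformly
        # Rank-one part: the same constant is added to every page.
        base = (1.0 - damping) / n + damping * dangling / n
        new = [base + damping * v for v in new]
        if sum(abs(a - b) for a, b in zip(new, rank)) < tol:
            return new
        rank = new
    return rank


if __name__ == "__main__":
    # Hypothetical 4-page web: page 2 receives links from pages 0, 1, and 3.
    toy_links = [[1, 2], [2], [0], [2]]
    for page, score in enumerate(pagerank(toy_links)):
        print(f"page {page}: {score:.4f}")
```

The scores sum to one because the iteration preserves the total probability of the Markov chain's state vector. Ordering search hits by these scores is the last step the slide describes.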
