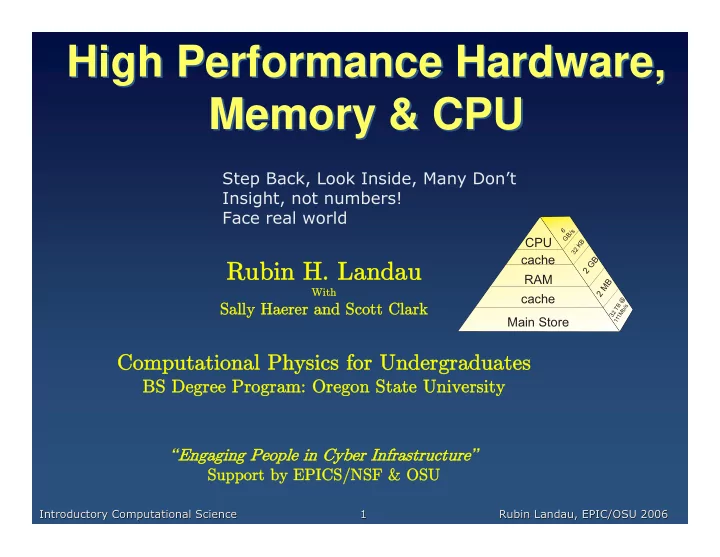

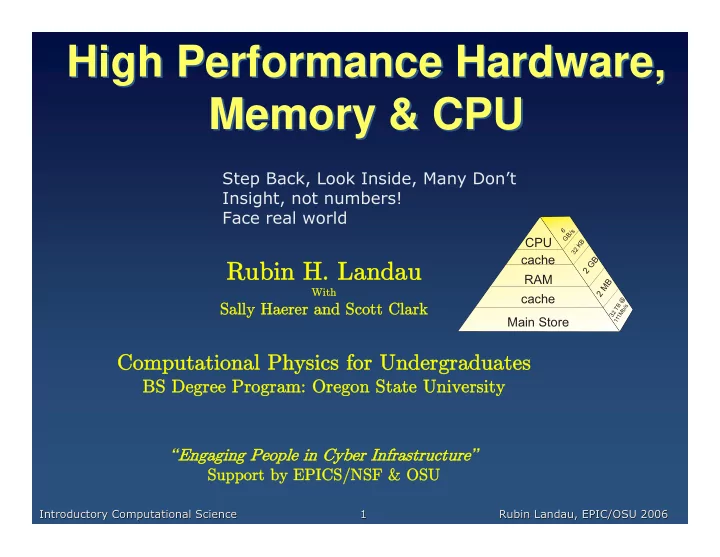

High Performance Hardware, Memory & CPU

Step back, look inside (many don't). Insight, not numbers! Face the real world.

[Figure: memory hierarchy: CPU, 32 KB cache, 2 MB cache, 2 GB RAM, 2 TB main store; bandwidths 6 GB/s and 111 Mb/s]

Rubin H. Landau, with Sally Haerer and Scott Clark
Computational Physics for Undergraduates
BS Degree Program: Oregon State University
“Engaging People in Cyber Infrastructure”
Support by EPICS/NSF & OSU

Introductory Computational Science 1, Rubin Landau, EPIC/OSU 2006

Problem: Optimize for Speedup
• Faster by being smarter (a better algorithm), not bigger (Fe, more hardware)
• Yet at the limit: tune the program to the architecture. First locate the hot spots, then speed them up.
• Negative side: hard work and time intensive (your time). Code tuned to the local hardware/software becomes less portable and less readable.
• CS view: “that's the compiler's job, not yours”
• CSE view: for large, complex, frequently run programs, tuning can gain 3-5X
• “CSE: tomorrow's problems, yesterday's hardware” (Press)

Theory: Rules of Optimization
1. “More computing sins are committed in the name of efficiency (without necessarily achieving it) than for any other single reason - including blind stupidity.” - W.A. Wulf
2. “We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil.” - D. Knuth
3. “The best is the enemy of the good.” - Voltaire
4. Do not do it.
5. (For experts only): “Do not do it yet.” - M.A. Jackson
6. “Do not optimize as you go.”
7. Remember the 80/20 rule: 80% of the results come from 20% of the effort (also 90/10).
8. Always run “before” and “after” benchmarks: fast wrong answers are not compatible with the search for truth, or with building bridges.
9. Use the right algorithms and data structures!

Source: Jonathan Hardwick, www.cs.cmu.edu/~jch

Theory: HPC Components
• Supercomputers = the fastest, most powerful machines
• Now: parallel machines, PC (workstation) based
• Linux/Unix ($$ if MS)
• HPC = a good balance of the major components:
  - multistaged (pipelined) units
  - multiple CPUs (parallel)
  - fast CPUs, but compatible
  - very large, very fast memories
  - very fast communications
  - vector, array processors (?)
  - software that integrates it all

[Figure: IBM Blue Gene packaging, from chip (2 processors, 2.8/5.6 Gflops, 4 MB) up to system (64 cabinets, 360 Tflops, 32 TB)]

Memory Hierarchy vs Arrays
• Ideal world: an array is stored as one contiguous block
• Real world: matrices are not blocks but broken lines, scattered over registers, data cache, RAM pages, and swap space

[Figure: A(1)...A(N) and M(1,1)...M(N,N) spread across CPU registers, the data cache (holding A(1),..., A(16) and A(2032),..., A(2048)), RAM pages 1...N, and swap space]

• Row major: C, Java; column major: Fortran (F90)
  - C, Java: m(0,0) m(0,1) m(0,2) m(1,0) m(1,1) m(1,2) m(2,0) m(2,1) m(2,2)
  - Fortran: m(1,1) m(2,1) m(3,1) m(1,2) m(2,2) m(3,2) m(1,3) m(2,3) m(3,3)

Memory Hierarchy: Cost vs Speed
• CPU: registers, instructions, FPA
• Cache: high-speed buffer
• Cache lines: latency issues
• RAM: random access memory
• RISC: reduced instruction set computer
• Hard disk: cheap and slow, 111 Mb/s
• Pages: length = 4K (386), 8-16K (Unix)
• Virtual memory ≈ RAM (32-bit addresses ≈ 4 GB). It takes little programming effort, but the price ($$) is paid in time (t) through page faults, e.g. with multitasking/windows.

[Figure: CPU, 32 KB cache, 2 MB cache, 2 GB RAM, 2 TB main store; bandwidths 8 GB/s, 6 GB/s, 5.5 GB/s and 111 Mb/s; pages A, B, C, D]

High Performance Hardware, Memory & CPU (part II): Examples

Central Processing Unit
• Interacting memories

[Figure: arrays A(1)...A(N) and M(1,1)...M(N,N) distributed over CPU registers, data cache, RAM pages, and swap space]

• Pipelines for speed: prepare the next step during the previous one (a bucket brigade)
  e.g. c = (a + b) / (d * f)

| Unit | Step 1 | Step 2 | Step 3 | Step 4 |
|------|---------|---------|----------|--------|
| A1 | Fetch a | Fetch b | Add | |
| A2 | Fetch d | Fetch f | Multiply | |
| A3 | | | | Divide |

CPU Design: RISC
• RISC = Reduced Instruction Set Computer (HPC)
• CISC = Complex Instruction Set Computer (previous):
  - high-level microcode on chip (1000's of instructions)
  - complex instructions are slow (~10 cycles/instruction)
• RISC: a smaller (simpler) instruction set on chip
  - F90 and C compilers translate for the RISC architecture
  - simpler (fewer cycles/instruction), cheaper, possibly faster
  - saved instruction space → more CPU registers
  - pipelines, memory conflicts, some parallelism
• Theory: $T_{\text{CPU}} = (\text{number of instructions}) \times \dfrac{\text{cycles}}{\text{instruction}} \times t_{\text{cycle}}$
  - CISC: fewer instructions executed
  - RISC: fewer cycles per instruction

[Figure: memory hierarchy from CPU registers and data cache to RAM pages and swap space]

Latest & Greatest: IBM Blue Gene
• A. Gara et al., IBM J
• Specific (genes) → general supercomputer
• Linux ($$ if MS)
• Designed by committee
• Extreme scale: 65,536 (2^16) nodes
• Balances cost/performance
• Peak = 360 teraflops (1 Tflop = 10^12 flop/s); performance/watt
• Medium speed, 5.6 Gflops per node (runs cool)
• On- and off-chip distributed memories
• 512 chips/card, 16 cards/board
• 2 cores per chip: 1 computes, 1 communicates
• Control: distributed-memory MPI

| Level | Contains | Peak speed | Memory |
|---------|--------------|----------------|----------|
| Chip | 2 processors | 2.8/5.6 Gflops | 4 MB |
| Card | 2 chips | 11.2 Gflops | 1 GB DDR |
| Board | 16 cards | 80 Gflops | 16 GB DDR |
| Cabinet | 32 boards | 5.7 Tflops | 512 GB |
| System | 64 cabinets | 360 Tflops | 32 TB |

(DDR = double data rate)

BG's 3 Communication Networks
• Fig (a): 64 × 32 × 32 3-D torus (2 × 2 × 2 shown)
  - links = chips that also compute
  - both nearest-neighbor and cut-through routing: ≈ the same effective bandwidth to all nodes
  - node-to-node: 1.4 Gb/s, 1 ns
  - program speed depends on local communication: 100 ns < latency < 6.4 μs (64 hops)
• Fig (b): global collective network, broadcasts to all processors
• Fig (c): control network + Gb Ethernet for I/O, switch, devices; > Tb/s
