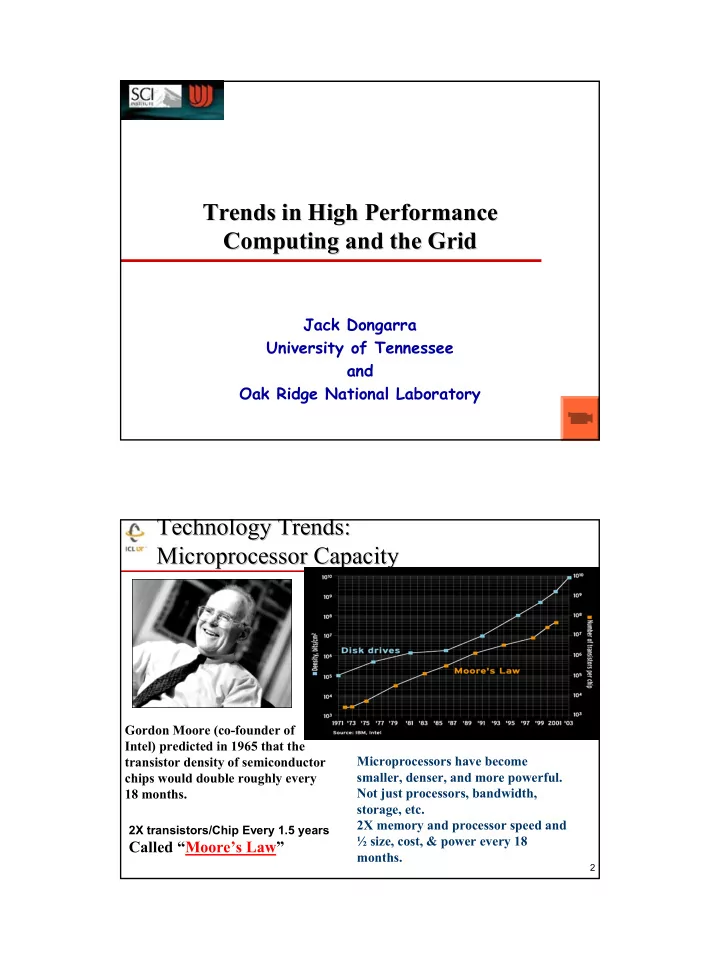

Trends in High Performance Computing and the Grid

Jack Dongarra
University of Tennessee and Oak Ridge National Laboratory

Technology Trends: Microprocessor Capacity

- Gordon Moore (co-founder of Intel) predicted in 1965 that the transistor density of semiconductor chips would double roughly every 18 months.
- 2X transistors/chip every 1.5 years, called “Moore’s Law”.
- Microprocessors have become smaller, denser, and more powerful.
- Not just processors: bandwidth, storage, etc.
- 2X memory and processor speed and ½ size, cost, & power every 18 months.
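The doubling rule above can be turned into a growth factor with one line of arithmetic. The sketch below assumes a clean exponential with an 18-month doubling period; the function name `moore_growth` is made up for illustration and is not from the slides.

```python
def moore_growth(years, doubling_months=18.0):
    """Growth factor after `years`, assuming one doubling every `doubling_months`."""
    return 2.0 ** (years * 12.0 / doubling_months)


if __name__ == "__main__":
    # 1.5 years is one doubling, 3 years is two, 15 years is ten.
    for years in (1.5, 3, 10, 15):
        print(f"{years:>4} years -> x{moore_growth(years):,.1f}")
```

Under this assumption a decade gives roughly a hundredfold increase and fifteen years gives a factor of 1024.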

Moore’s Law

[Figure: peak performance of leading computers, 1950 to 2010, on a log scale from 1 KFlop/s to 1 PFlop/s. Eras marked: Scalar, Super Scalar, Vector, Parallel. Machines plotted: EDSAC 1, UNIVAC 1, IBM 7090, CDC 6600, IBM 360/195, CDC 7600, Cray 1, Cray X-MP, Cray 2, TMC CM-2, TMC CM-5, Cray T3D, ASCI Red, ASCI White Pacific, Earth Simulator.]

| Year | Floating point operations / second (Flop/s) |
|------|---------------------------------------------|
| 1941 | 1 |
| 1945 | 100 |
| 1949 | 1,000 (1 KiloFlop/s, KFlop/s) |
| 1951 | 10,000 |
| 1961 | 100,000 |
| 1964 | 1,000,000 (1 MegaFlop/s, MFlop/s) |
| 1968 | 10,000,000 |
| 1975 | 100,000,000 |
| 1987 | 1,000,000,000 (1 GigaFlop/s, GFlop/s) |
| 1992 | 10,000,000,000 |
| 1993 | 100,000,000,000 |
| 1997 | 1,000,000,000,000 (1 TeraFlop/s, TFlop/s) |
| 2000 | 10,000,000,000,000 |
| 2003 | 35,000,000,000,000 (35 TFlop/s) |

H. Meuer, H. Simon, E. Strohmaier, & JD

- Listing of the 500 most powerful computers in the world
- Yardstick: Rmax from LINPACK MPP (Ax=b, dense problem)
- Updated twice a year: SC‘xy in the States in November, meeting in Mannheim, Germany in June
- All data available from www.top500.org

[Figure: TPP performance, rate versus problem size.]
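To make the yardstick concrete, here is a minimal sketch of what "Rmax from LINPACK, Ax=b, dense problem" measures: solve a dense system and divide a nominal operation count by the elapsed time. It is a toy in pure Python, not the benchmark code. The count 2/3·n³ + 2·n² is the conventional LINPACK figure and is not stated on the slide; the names `solve_dense`, `linpack_flops`, and `measure` are illustrative.

```python
import random
import time


def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    a = [row[:] for row in a]  # work on copies so the inputs stay intact
    b = b[:]
    for k in range(n):
        # Partial pivoting: bring the largest entry of column k to the diagonal.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0.0:
            raise ValueError("matrix is singular")
        if p != k:
            a[k], a[p] = a[p], a[k]
            b[k], b[p] = b[p], b[k]
        row_k = a[k]
        for i in range(k + 1, n):
            m = a[i][k] / row_k[k]
            row_i = a[i]
            for j in range(k + 1, n):
                row_i[j] -= m * row_k[j]
            b[i] -= m * b[k]
    # Back substitution on the upper triangle.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


def linpack_flops(n):
    """Nominal operation count used to convert solve time into a rate."""
    return 2.0 * n**3 / 3.0 + 2.0 * n**2


def measure(n=120, seed=0):
    """Return (Flop/s, max residual) for one random n-by-n system."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    t0 = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - t0
    residual = max(
        abs(sum(a[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n)
    )
    return linpack_flops(n) / elapsed, residual


if __name__ == "__main__":
    rate, residual = measure()
    print(f"{rate / 1e6:.2f} MFlop/s, max residual {residual:.2e}")
```

The rate printed by an interpreted toy like this is orders of magnitude below what tuned code reaches on the same hardware; the point is only the definition of the metric.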

A Tour de Force in Engineering

- Homogeneous, Centralized, Proprietary, Expensive!
- Target Application: CFD-Weather, Climate, Earthquakes
- 640 NEC SX/6 Nodes (mod)
  - 5120 CPUs which have vector ops
  - Each CPU 8 Gflop/s Peak
- 40 TFlop/s (peak)
- $1/2 Billion for machine & building
- Footprint of 4 tennis courts
- 7 MWatts
  - Say 10 cent/KWhr - $16.8K/day = $6M/year!
- Expect to be on top of Top500 until 60-100 TFlop ASCI machine arrives
- From the Top500 (June 2003)
  - Performance of ESC ≈ Σ Next Top 4 Computers
  - ~ 10% of performance of all the Top500 machines

June 2003

| Rank | Manufacturer | Computer | Rmax (GFlop/s) | Installation Site | Year | # Proc | Rpeak (GFlop/s) |
|------|--------------|----------|----------------|-------------------|------|--------|-----------------|
| 1 | NEC | Earth-Simulator | 35860 | Earth Simulator Center, Yokohama | 2002 | 5120 | 40960 |
| 2 | Hewlett-Packard | ASCI Q - AlphaServer SC ES45/1.25 GHz | 13880 | Los Alamos National Laboratory, Los Alamos | 2002 | 8192 | 20480 |
| 3 | Linux NetworX / Quadrics | MCR Linux Cluster Xeon 2.4 GHz - Quadrics | 7634 | Lawrence Livermore National Laboratory, Livermore | 2002 | 2304 | 11060 |
| 4 | IBM | ASCI White, SP Power3 375 MHz | 7304 | Lawrence Livermore National Laboratory, Livermore | 2000 | 8192 | 12288 |
| 5 | IBM | SP Power3 375 MHz 16 way | 7304 | NERSC/LBNL, Berkeley | 2002 | 6656 | 9984 |
| 6 | IBM/Quadrics | xSeries Cluster Xeon 2.4 GHz - Quadrics | 6586 | Lawrence Livermore National Laboratory, Livermore | 2003 | 1920 | 9216 |
| 7 | Fujitsu | PRIMEPOWER HPC2500 (1.3 GHz) | 5406 | National Aerospace Lab, Tokyo | 2002 | 2304 | 11980 |
| 8 | Hewlett-Packard | rx2600 Itanium2 1 GHz Cluster - Quadrics | 4881 | Pacific Northwest National Laboratory, Richland | 2003 | 1540 | 6160 |
| 9 | Hewlett-Packard | AlphaServer SC ES45/1 GHz | 4463 | Pittsburgh Supercomputing Center, Pittsburgh | 2001 | 3016 | 6032 |
| 10 | Hewlett-Packard | AlphaServer SC ES45/1 GHz | 3980 | Commissariat a l'Energie Atomique (CEA), Bruyeres-le-Chatel | 2001 | 2560 | 5120 |
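The Earth Simulator numbers above are simple enough to check by hand; the sketch below redoes the arithmetic. All inputs are figures quoted in the slides (the 374 TF/s total comes from the TOP500 performance chart), while the constant and function names are illustrative.

```python
# Figures quoted in the slides.
NODES = 640
CPUS = 5120
PEAK_PER_CPU_GFLOPS = 8.0
POWER_MW = 7.0
PRICE_PER_KWH = 0.10                        # "say 10 cent/KWhr"
ES_RMAX = 35860                             # GFlop/s, rank 1 in June 2003
NEXT_FOUR_RMAX = [13880, 7634, 7304, 7304]  # ranks 2 to 5, GFlop/s
TOP500_SUM_GFLOPS = 374_000                 # 374 TF/s over all 500 systems


def peak_tflops(cpus, gflops_per_cpu):
    """Theoretical peak in TFlop/s."""
    return cpus * gflops_per_cpu / 1000.0


def daily_power_cost(power_mw, price_per_kwh):
    """Electricity cost in dollars for 24 hours at constant load."""
    return power_mw * 1000.0 * 24.0 * price_per_kwh


if __name__ == "__main__":
    daily = daily_power_cost(POWER_MW, PRICE_PER_KWH)
    print(f"CPUs per node:    {CPUS // NODES}")
    print(f"Peak:             {peak_tflops(CPUS, PEAK_PER_CPU_GFLOPS):.2f} TFlop/s")
    print(f"Power cost:       ${daily:,.0f}/day, ${daily * 365:,.0f}/year")
    print(f"Sum of ranks 2-5: {sum(NEXT_FOUR_RMAX)} GFlop/s vs {ES_RMAX}")
    print(f"Share of Top500:  {ES_RMAX / TOP500_SUM_GFLOPS:.1%}")
```

The yearly cost assumes the machine draws 7 MW around the clock for 365 days, which is the reading implied by the "$6M/year" figure.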

TOP500 - Performance - June 2003

[Figure: TOP500 performance, June 1993 to June 2003, log scale from 100 Mflop/s to 1 Pflop/s. SUM grew from 1.17 TF/s to 374 TF/s. N=1 grew from 59.7 GF/s to 35.8 TF/s (NEC ES), with Fujitsu 'NWT' (NAL), Intel ASCI Red (Sandia), and IBM ASCI White (LLNL) labeled along the curve. N=500 grew from 0.4 GF/s to 244 GF/s. "My Laptop" is marked for comparison.]

Virginia Tech “Big Mac” G5 Cluster

- Apple G5 Cluster
  - Dual 2.0 GHz IBM Power PC 970s
  - 16 Gflop/s per node
    - 2 CPUs * 2 fma units/cpu * 2 GHz * 2 (mul-add)/cycle
  - 1100 Nodes or 2200 Processors
  - Theoretical peak 17.6 Tflop/s
  - Infiniband 4X primary fabric
  - Cisco Gigabit Ethernet secondary fabric
  - Linpack Benchmark using 2112 processors
    - Theoretical peak of 16.9 Tflop/s
    - Achieved 9.555 Tflop/s
    - Could be #3 on 11/03 Top500
  - Cost is $5.2 million, which includes the system itself, memory, storage, and communication fabrics
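The per-node peak on the Virginia Tech slide is a product of four factors, and the two cluster-level peaks follow from the node count. A small sketch of that derivation, using only numbers from the slide (the function names are illustrative):

```python
def node_peak_gflops(cpus=2, fma_units_per_cpu=2, clock_ghz=2.0, flops_per_fma=2):
    """Peak GFlop/s of one node: CPUs x FMA units x clock x (multiply + add)."""
    return cpus * fma_units_per_cpu * clock_ghz * flops_per_fma


def cluster_peak_tflops(processors, cpus_per_node=2):
    """Peak TFlop/s for a run that uses `processors` CPUs in dual-CPU nodes."""
    nodes = processors // cpus_per_node
    return nodes * node_peak_gflops(cpus=cpus_per_node) / 1000.0


if __name__ == "__main__":
    print(f"Per node:            {node_peak_gflops():.0f} GFlop/s")
    print(f"Full machine (2200): {cluster_peak_tflops(2200):.1f} TFlop/s")
    print(f"Linpack run (2112):  {cluster_peak_tflops(2112):.3f} TFlop/s")
```

The Linpack run used 2112 of the 2200 processors, which is why its theoretical peak (16.896, rounded to 16.9 on the slide) is below the 17.6 Tflop/s of the full machine.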

Detail on the Virginia Tech Machine

- Dual Power PC 970 2GHz
  - 4 GB DRAM.
  - 160 GB serial ATA mass storage.
  - 4.4 TB total main memory.
  - 176 TB total mass storage.
- Primary communications backplane based on Infiniband technology.
  - Each node can communicate with the network at 20 Gb/s, full duplex, "ultra-low" latency.
  - Switch consists of 24 96-port switches in fat-tree topology.
- Secondary Communications Network:
  - Gigabit fast Ethernet management backplane.
  - Based on 5 Cisco 4500 switches, each with 240 ports.
- Software:
  - Mac OSX.
  - MPIch-2
  - C, C++ compilers - IBM xlc and gcc 3.3
  - Fortran 95/90/77 compilers - IBM xlf and NAGWare

Top 5 Machines for the Linpack Benchmark

| Rank | Computer (Full Precision) | Number of Procs | Rmax (GFlop/s) | Rpeak (GFlop/s) |
|------|---------------------------|-----------------|----------------|-----------------|
| 1 | Earth Simulator | 5120 | 35860 | 40960 |
| 2 | ASCI Q AlphaServer EV-68 (1.25 GHz w/Quadrics) | 8160 | 13880 | 20480 |
| 3 | Apple G5 dual IBM Power PC (2 GHz, 970s, w/Infiniband 4X) | 2112 | 9555 | 16896 |
| 4 | HP RX2600 Itanium 2 (1.5GHz w/Quadrics) | 1936 | 8633 | 11616 |
| 5 | Linux NetworX (2.4 GHz Pentium 4 Xeon w/Quadrics) | 2304 | 7634 | 11059 |
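One way to read the top-5 table is by Linpack efficiency, the ratio Rmax/Rpeak. The slides do not compute this ratio; the sketch below derives it from the table rows, with shortened machine names chosen for illustration.

```python
# (name, processors, Rmax GFlop/s, Rpeak GFlop/s), copied from the table above.
TOP5 = [
    ("Earth Simulator", 5120, 35860, 40960),
    ("ASCI Q", 8160, 13880, 20480),
    ("Apple G5 (Virginia Tech)", 2112, 9555, 16896),
    ("HP RX2600 Itanium 2", 1936, 8633, 11616),
    ("Linux NetworX", 2304, 7634, 11059),
]


def efficiency(rmax, rpeak):
    """Fraction of theoretical peak achieved on Linpack."""
    return rmax / rpeak


def ranked_by_efficiency(rows):
    """Return (name, efficiency) pairs, most efficient first."""
    pairs = [(name, efficiency(rmax, rpeak)) for name, _, rmax, rpeak in rows]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for name, eff in ranked_by_efficiency(TOP5):
        print(f"{name:<26} {eff:.1%}")
```

Ordering by efficiency rather than by Rmax separates the vector machine from the commodity clusters: the Earth Simulator sustains the largest fraction of its peak, and the G5 cluster the smallest of the five.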

Performance Extrapolation

[Figure: TOP500 performance extrapolated from June 1993 to June 2010, log scale from 100 MFlop/s to 10 PFlop/s, with trend lines for Sum, N=1, and N=500. Annotations: Blue Gene (130,000 proc), ASCI P (12,544 proc), "TFlop/s to enter the list", "PFlop/s Computer", and "My Laptop".]
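The kind of extrapolation plotted here can be approximated with a straight line in log space. The sketch below is a deliberate simplification: it assumes constant exponential growth fitted through just two points per curve, the June 1993 and June 2003 values from the TOP500 performance chart, whereas the figure was presumably fitted to every list. The function names and the use of 2003.5 for June 2003 are assumptions.

```python
import math


def annual_growth(v_start, v_end, years):
    """Constant yearly growth factor that takes v_start to v_end in `years`."""
    return (v_end / v_start) ** (1.0 / years)


def year_reaching(target, v_now, year_now, growth):
    """Year at which a value growing by `growth` per year reaches `target`."""
    return year_now + math.log(target / v_now) / math.log(growth)


# name: (GFlop/s in June 1993, GFlop/s in June 2003, target in GFlop/s)
SERIES = {
    "N=1 reaches 1 PFlop/s": (59.7, 35_800.0, 1_000_000.0),
    "N=500 reaches 1 TFlop/s": (0.4, 244.0, 1_000.0),
}

if __name__ == "__main__":
    for label, (v1993, v2003, target) in SERIES.items():
        g = annual_growth(v1993, v2003, 10.0)
        when = year_reaching(target, v2003, 2003.5, g)
        print(f"{label}: x{g:.2f} per year, around {when:.1f}")
```

Both curves grow by a little under a factor of two per year under this fit, which is faster than the 18-month doubling of Moore's Law alone.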

To Exaflop/s (10^18 and Beyond)

[Figure: TOP500 performance extrapolated from 1993 to 2023, log scale from 100 Mflops to 10 Eflops, with trend lines for SUM, N=1, and N=500, and "My Laptop" marked for comparison.]

ASCI Purple & IBM Blue Gene/L

- Announced 11/19/02
  - One of 2 machines for LLNL
  - 360 TFlop/s
  - 130,000 proc
  - Linux
  - FY 2005
- Preliminary machine
  - IBM Research BlueGene/L
  - PowerPC 440, 500MHz w/custom proc/interconnect
  - 512 Nodes (1024 processors)
  - 1.435 Tflop/s (2.05 Tflop/s Peak)
- Plus ASCI Purple
  - IBM Power 5 based
  - 12K proc, 100 TFlop/s
