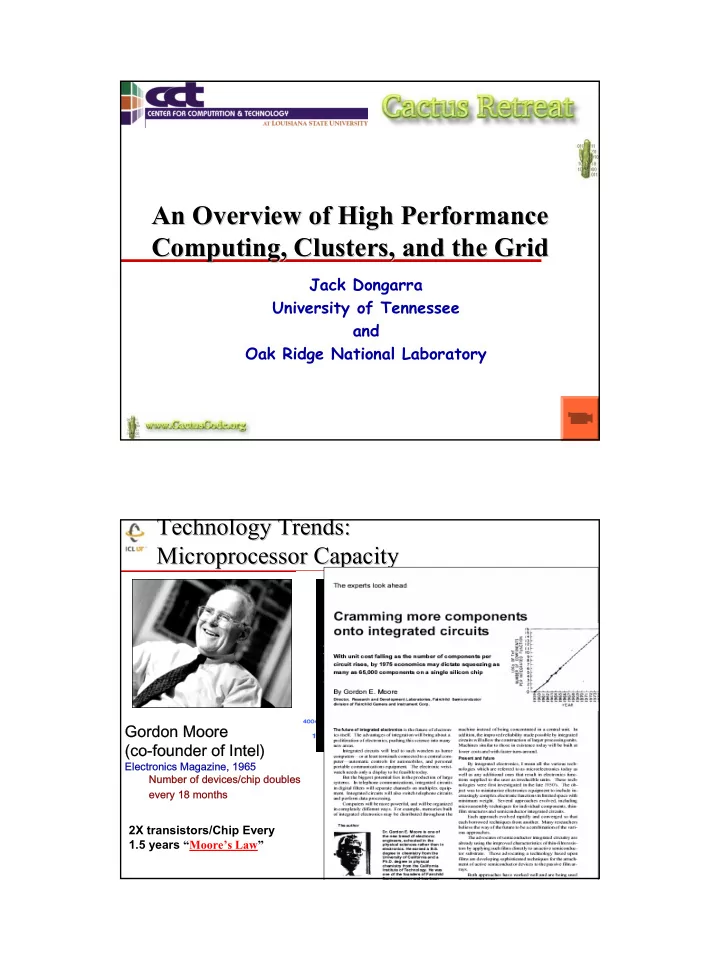

An Overview of High Performance Computing, Clusters, and the Grid

Jack Dongarra
University of Tennessee and Oak Ridge National Laboratory

Technology Trends: Microprocessor Capacity

Gordon Moore (co-founder of Intel), Electronics Magazine, 1965: the number of devices per chip doubles every 18 months.

"Moore's Law": 2X transistors/chip every 1.5 years.

Microprocessors have become smaller, denser, and more powerful. The trend is not limited to processors; it also covers bandwidth, storage, etc.: 2X memory and processor speed, and ½ the size, cost, and power, every 18 months.

Moore's Law

[Figure: peak performance in Flop/s, log scale from 1 KFlop/s (10^3) to 1 PFlop/s (10^15), versus year, 1950 to 2010. Eras: Scalar, Super Scalar, Vector, Parallel. Machines plotted: EDSAC 1, UNIVAC 1, IBM 7090, CDC 6600, IBM 360/195, CDC 7600, Cray 1, Cray X-MP, Cray 2, TMC CM-2, TMC CM-5, Cray T3D, ASCI Red, ASCI White Pacific, Earth Simulator.]

| Year | Floating point operations / second (Flop/s) |
|------|---------------------------------------------|
| 1941 | 1 |
| 1945 | 100 |
| 1949 | 1,000 (1 KiloFlop/s, KFlop/s) |
| 1951 | 10,000 |
| 1961 | 100,000 |
| 1964 | 1,000,000 (1 MegaFlop/s, MFlop/s) |
| 1968 | 10,000,000 |
| 1975 | 100,000,000 |
| 1987 | 1,000,000,000 (1 GigaFlop/s, GFlop/s) |
| 1992 | 10,000,000,000 |
| 1993 | 100,000,000,000 |
| 1997 | 1,000,000,000,000 (1 TeraFlop/s, TFlop/s) |
| 2000 | 10,000,000,000,000 |
| 2003 | 35,000,000,000,000 (35 TFlop/s) |

H. Meuer, H. Simon, E. Strohmaier, & JD

- Listing of the 500 most powerful computers in the world
- Yardstick: Rmax from LINPACK MPP (Ax=b, dense problem)
- Updated twice a year:
  - SC'xy in the States in November
  - Meeting in Mannheim, Germany in June
- All data available from www.top500.org

[Figure: TPP performance, rate versus size.]

What is a Supercomputer?

♦ A supercomputer is a hardware and software system that provides close to the maximum performance that can currently be achieved.
♦ Over the last 10 years the range for the Top500 has increased faster than Moore's Law.
♦ 1993:
  - #1 = 59.7 GFlop/s
  - #500 = 422 MFlop/s
♦ 2003:
  - #1 = 35.8 TFlop/s
  - #500 = 403 GFlop/s

Why do we need them? Computational fluid dynamics, protein folding, climate modeling, and national security (in particular cryptanalysis and simulating nuclear weapons), to name a few.

A Tour de Force in Engineering

♦ Homogeneous, Centralized, Proprietary, Expensive!
♦ Target Application: CFD-Weather, Climate, Earthquakes
♦ 640 NEC SX/6 Nodes (mod)
  - 5120 CPUs which have vector ops
  - Each CPU 8 Gflop/s peak
♦ 40 TFlop/s (peak)
♦ ~ 1/2 billion $ for machine, software, & building
♦ Footprint of 4 tennis courts
♦ 7 MWatts
  - Say 10 cents/kWh: $16.8K/day = $6M/year!
♦ Expected to stay on top of the Top500 until a 60-100 TFlop/s ASCI machine arrives
♦ From the Top500 (November 2003)
  - Performance of ESC > Σ Next Top 3 Computers
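The power and peak figures on the Earth Simulator slide can be reproduced with a few lines of arithmetic. The sketch below only restates the slide's own numbers (7 MW, 10 cents/kWh, 5120 CPUs at 8 Gflop/s each); the function names are illustrative, and a constant round-the-clock load is an assumption.

```python
# Back-of-the-envelope check of the Earth Simulator figures quoted on the slide.
POWER_MW = 7.0               # power draw from the slide
PRICE_PER_KWH = 0.10         # the slide's own "say 10 cents/kWh" assumption
CPUS = 5120                  # vector CPUs from the slide
PEAK_PER_CPU_GFLOPS = 8.0    # peak per CPU from the slide


def daily_power_cost(power_mw: float, price_per_kwh: float) -> float:
    """Dollars per day for a constant load (assumed to run 24 hours)."""
    kwh_per_day = power_mw * 1000.0 * 24.0
    return kwh_per_day * price_per_kwh


def peak_tflops(cpus: int, gflops_per_cpu: float) -> float:
    """Aggregate peak in TFlop/s, i.e. CPU count times per-CPU peak."""
    return cpus * gflops_per_cpu / 1000.0


per_day = daily_power_cost(POWER_MW, PRICE_PER_KWH)
per_year = per_day * 365

print(f"power cost: ${per_day:,.0f}/day, ${per_year / 1e6:.1f}M/year")
print(f"peak: {peak_tflops(CPUS, PEAK_PER_CPU_GFLOPS):.2f} TFlop/s")
```

The daily figure matches the slide's $16.8K, and the yearly figure rounds to the quoted $6M.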

November 2003

| Rank | Manufacturer | Computer | Rmax (Tflop/s) | Installation Site | Year | # Proc | Rpeak (Tflop/s) |
|------|--------------|----------|----------------|-------------------|------|--------|-----------------|
| 1 | NEC | Earth-Simulator | 35.8 | Earth Simulator Center, Yokohama | 2002 | 5120 | 40.90 |
| 2 | Hewlett-Packard | ASCI Q - AlphaServer SC ES45/1.25 GHz | 13.9 | Los Alamos National Laboratory, Los Alamos | 2002 | 8192 | 20.48 |
| 3 | Self | Apple G5 Power PC w/Infiniband 4X | 10.3 | Virginia Tech, Blacksburg, VA | 2003 | 2200 | 17.60 |
| 4 | Dell | PowerEdge 1750 P4 Xeon 3.6 GHz w/Myrinet | 9.82 | University of Illinois U/C, Urbana/Champaign | 2003 | 2500 | 15.30 |
| 5 | Hewlett-Packard | rx2600 Itanium2 1 GHz Cluster w/Quadrics | 8.63 | Pacific Northwest National Laboratory, Richland | 2003 | 1936 | 11.62 |
| 6 | Linux NetworX | Opteron 2 GHz, w/Myrinet | 8.05 | Lawrence Livermore National Laboratory, Livermore | 2003 | 2816 | 11.26 |
| 7 | Linux NetworX | MCR Linux Cluster Xeon 2.4 GHz w/Quadrics | 7.63 | Lawrence Livermore National Laboratory, Livermore | 2002 | 2304 | 11.06 |
| 8 | IBM | ASCI White, SP Power3 375 MHz | 7.30 | Lawrence Livermore National Laboratory, Livermore | 2000 | 8192 | 12.29 |
| 9 | IBM | SP Power3 375 MHz 16 way | 7.30 | NERSC/LBNL, Berkeley | 2002 | 6656 | 9.984 |
| 10 | IBM | xSeries Cluster Xeon 2.4 GHz w/Quadrics | 6.59 | Lawrence Livermore National Laboratory, Livermore | 2003 | 1920 | 9.216 |

50% of Top500 performance in top 9 machines; 131 systems > 1 TFlop/s; 210 machines are clusters.

TOP500 Performance, Nov 2003

[Figure: Top500 performance, log scale from 100 Mflop/s to 1 Pflop/s (10^15), versus list date, Jun-93 to Jun-03. SUM rises from 1.17 TF/s to 528 TF/s. N=1 rises from 59.7 GF/s (Fujitsu 'NWT', NAL) to 35.8 TF/s (NEC ES), passing Intel ASCI Red (Sandia) and IBM ASCI White (LLNL). N=500 rises from 0.4 GF/s to 403 GF/s. "My Laptop" is marked for comparison.]
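The claim that the Top500 has grown faster than Moore's Law can be checked from the 1993 and 2003 figures quoted in these slides. This is a minimal sketch: it treats the interval as ten years (the slides say "over the last 10 years") and takes Moore's Law as a doubling every 1.5 years; the helper names are illustrative.

```python
import math

YEARS = 10.0  # the slides' "last 10 years", 1993 to 2003 (approximation)


def growth_factor(start_flops: float, end_flops: float) -> float:
    """How many times faster the end value is than the start value."""
    return end_flops / start_flops


def doubling_time_years(start_flops: float, end_flops: float, years: float) -> float:
    """Doubling period implied by steady exponential growth over `years`."""
    return years * math.log(2.0) / math.log(end_flops / start_flops)


def moore_factor(years: float, doubling_years: float = 1.5) -> float:
    """Growth expected if performance doubles every `doubling_years`."""
    return 2.0 ** (years / doubling_years)


# (1993 value, 2003 value) in Flop/s, taken from the slides.
entries = {
    "#1":   (59.7e9, 35.8e12),
    "#500": (422e6, 403e9),
}

print(f"Moore's Law over {YEARS:.0f} years: x{moore_factor(YEARS):.0f}")
for name, (start, end) in entries.items():
    factor = growth_factor(start, end)
    period = doubling_time_years(start, end, YEARS)
    print(f"{name}: x{factor:.0f}, doubling every {period:.2f} years")
```

Under these assumptions both list positions grow several times faster than the Moore's Law factor, with an implied doubling time close to one year.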

Number of Systems on Top500 > 1 Tflop/s Over Time

[Figure: bar chart of the number of Top500 systems above 1 Tflop/s per list, Nov-96 through Nov-03. Bar values in increasing order: 1, 1, 1, 1, 2, 3, 5, 7, 12, 17, 23, 46, 59, 131.]

Since 1998 ~ doubling every 2 years.

Factoids on Machines > 1 TFlop/s

♦ 131 systems > 1 TFlop/s
♦ 80 clusters (61%)
♦ Average rate: 2.44 Tflop/s
♦ Median rate: 1.55 Tflop/s
♦ Sum of processors in Top131: 155,161
  - Sum for Top500: 267,789
♦ Average processor count: 1184
♦ Median processor count: 706
♦ Numbers of processors
  - Largest number of processors: 9632 (ASCI Red)
  - Fewest processors: 124 (Cray X1)

Year of Introduction for 131 Systems

| Year | Systems |
|------|---------|
| 1998 | 1 |
| 1999 | 3 |
| 2000 | 3 |
| 2001 | 7 |
| 2002 | 31 |
| 2003 | 86 |

[Figure: number of processors, log scale from 100 to 10000, versus rank, 1 to 131.]

Percent Of 131 Systems > 1 TFlop/s Which Use The Following Processors

About half are based on a 32 bit architecture. 9 (11) machines have vector instruction sets.

[Figure: pie chart by processor family. Legible shares: Pentium 48%, IBM 24%, Itanium 9%. Remaining slices: Alpha, AMD, Cray, Hitachi, NEC, SGI, Sparc, Fujitsu.]

Cut by Manufacturer of System

[Figure: pie chart by manufacturer. Legible shares: IBM 52%, HP 10%, Cray Inc. 4%. Remaining slices: Dell, Linux Networx, Self-made, SGI, NEC, Hitachi, Fujitsu, Intel, Promicro, Atipa Technology, Legend Group, HPTi, Visual Technology.]

This cut of the data distorts manufacturer counts, i.e. HP (14), IBM > 24%.

What About Efficiency?

♦ Talking about Linpack
♦ What should the efficiency of a machine on the Top131 be?
  - Percent of peak for Linpack: > 90%? > 80%? > 70%? > 60%? …
♦ Remember this is O(n^3) ops and O(n^2) data
  - Mostly matrix multiply
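Efficiency here means Linpack Rmax as a fraction of theoretical Rpeak. As a rough illustration, the sketch below computes it for the ten systems in the November 2003 table quoted in this deck, and also shows why an O(n^3) ops, O(n^2) data problem is friendly to high efficiency. The short system labels and the function names are illustrative, and the operation count uses only the leading 2n^3/3 term of a dense LU solve, which is an approximation.

```python
# (label, Rmax in Tflop/s, Rpeak in Tflop/s) from the November 2003 top 10.
TOP10 = [
    ("Earth Simulator", 35.8, 40.90),
    ("ASCI Q", 13.9, 20.48),
    ("Virginia Tech G5", 10.3, 17.60),
    ("Illinois PowerEdge", 9.82, 15.30),
    ("PNNL rx2600", 8.63, 11.62),
    ("Linux NetworX Opteron", 8.05, 11.26),
    ("MCR Linux Cluster", 7.63, 11.06),
    ("ASCI White", 7.30, 12.29),
    ("NERSC SP Power3", 7.30, 9.984),
    ("IBM xSeries Cluster", 6.59, 9.216),
]


def efficiency(rmax: float, rpeak: float) -> float:
    """Fraction of theoretical peak achieved on Linpack."""
    return rmax / rpeak


def flops_per_matrix_entry(n: int) -> float:
    """Leading-order ops (2n^3/3) divided by the n^2 matrix entries."""
    return (2.0 * n**3 / 3.0) / (n**2)


for label, rmax, rpeak in TOP10:
    print(f"{label:24s} {efficiency(rmax, rpeak):6.1%}")

# The work done per matrix entry grows linearly with n, so large problems
# can amortize memory and network traffic over many floating point ops.
for n in (1_000, 100_000):
    print(f"n={n}: about {flops_per_matrix_entry(n):,.0f} flops per entry")
```

The growing ratio of arithmetic to data is the reason a well-tuned Linpack run can, in principle, approach peak, which is what makes the spread of measured efficiencies informative.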

Efficiency of Systems > 1 Tflop/s

[Figure: Linpack efficiency, 0 to 1, versus rank, 1 to 131, with points grouped by processor family: AMD, Cray X1, Alpha, IBM, Hitachi, NEC SX, Pentium, Sparc, Itanium, SGI. Labeled systems: ES, ASCI Q, VT-Apple, NCSA, PNNL, LANL Lightning, LLNL MCR, ASCI White, NERSC, LLNL (6.6). Inset: performance, log scale, versus rank.]

Commodity Interconnects

♦ Gig Ethernet
♦ Myrinet
♦ Infiniband
♦ QsNet
♦ SCI

[Figure: topology diagrams for Clos, fat tree, and torus networks.]

| Interconnect | Switch topology | $ NIC | $ Sw/node | $ Node | MPI latency (µs) / bandwidth (MB/s) |
|--------------|-----------------|-------|-----------|--------|--------------------------------------|
| Gigabit Ethernet | Bus | $50 | $50 | $100 | 30 / 100 |
| SCI | Torus | $1,600 | $0 | $1,600 | 5 / 300 |
| QsNetII | Fat Tree | $1,200 | $1,700 | $2,900 | 3 / 880 |
| Myrinet (D card) | Clos | $700 | $400 | $1,100 | 6.5 / 240 |
| IB 4x | Fat Tree | $1,000 | $400 | $1,400 | 6 / 820 |
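One way to read the latency and bandwidth column is through a simple linear cost model, where sending a message takes the latency plus the message size divided by the bandwidth. The model is an assumption brought in for illustration and is not stated on the slide; the figures are the slide's, with 1 MB/s taken as 10^6 bytes per second (one byte per microsecond), and the function names are hypothetical.

```python
# name: (MPI latency in microseconds, bandwidth in MB/s), from the table above.
INTERCONNECTS = {
    "Gigabit Ethernet": (30.0, 100.0),
    "SCI": (5.0, 300.0),
    "QsNetII": (3.0, 880.0),
    "Myrinet (D card)": (6.5, 240.0),
    "IB 4x": (6.0, 820.0),
}


def transfer_time_us(size_bytes: float, latency_us: float, bandwidth_mb_s: float) -> float:
    """Linear model: time = latency + size / bandwidth.

    With 1 MB/s = 10^6 bytes/s, bandwidth in MB/s equals bytes per microsecond.
    """
    return latency_us + size_bytes / bandwidth_mb_s


def half_performance_bytes(latency_us: float, bandwidth_mb_s: float) -> float:
    """Message size at which half the time is latency and half is transfer."""
    return latency_us * bandwidth_mb_s


for name, (lat, bw) in INTERCONNECTS.items():
    small = transfer_time_us(64, lat, bw)          # latency-dominated message
    large = transfer_time_us(1_000_000, lat, bw)   # bandwidth-dominated message
    n_half = half_performance_bytes(lat, bw)
    print(f"{name:18s} 64 B: {small:7.1f} us   1 MB: {large:9.1f} us   "
          f"half-performance size: {n_half:6.0f} B")
```

Under this model small messages are governed almost entirely by latency, so for communication-heavy codes the latency figure can matter as much as the headline bandwidth.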

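Efficiency of Systems > 1 TFlop/s

[Figure: Linpack efficiency, 0 to 1, versus rank, 1 to 131, with points grouped by interconnect. Labeled systems: ES, ASCI Q, VT-Apple, NCSA, PNNL, LANL Lightning, LLNL MCR, ASCI White, NERSC, LLNL.]

| Interconnect | Systems |
|--------------|---------|
| GigE | 44 |
| Infiniband | 3 |
| Myrinet | 19 |
| Quadrics | 12 |
| Proprietary | 52 |
| SCI | 1 |

Interconnects Used

| Interconnect | Share of systems |
|--------------|------------------|
| Proprietary | 39% |
| GigE | 34% |
| Myrinet | 15% |
| Quadrics | 9% |
| Infiniband | 2% |
| SCI | 1% |

Efficiency for Linpack

| Interconnect | Largest node count | Min | Max | Average |
|--------------|--------------------|-----|-----|---------|
| GigE | 1024 | 17% | 63% | 37% |
| SCI | 120 | 64% | 64% | 64% |
| QsNetII | 2000 | 68% | 78% | 74% |
| Myrinet | 1250 | 36% | 79% | 59% |
| Infiniband 4x | 1100 | 58% | 69% | 64% |
| Proprietary | 9632 | 45% | 98% | 68% |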

Country Percent by Total Performance

[Figure: pie chart of total performance by country. Legible shares: United States 63%, Japan 15%, United Kingdom 5%. All other countries are at 3% or less: Australia, Canada, China, Finland, France, Germany, India, Israel, Italy, South Korea, Malaysia, Mexico, Netherlands, New Zealand, Saudi Arabia, Sweden, Switzerland.]

KFlop/s per Capita (Flops/Pop)

[Figure: bar chart of KFlop/s per capita by country, vertical axis 0 to 1000. One bar is annotated "WETA Digital (Lord of the Rings)".]
