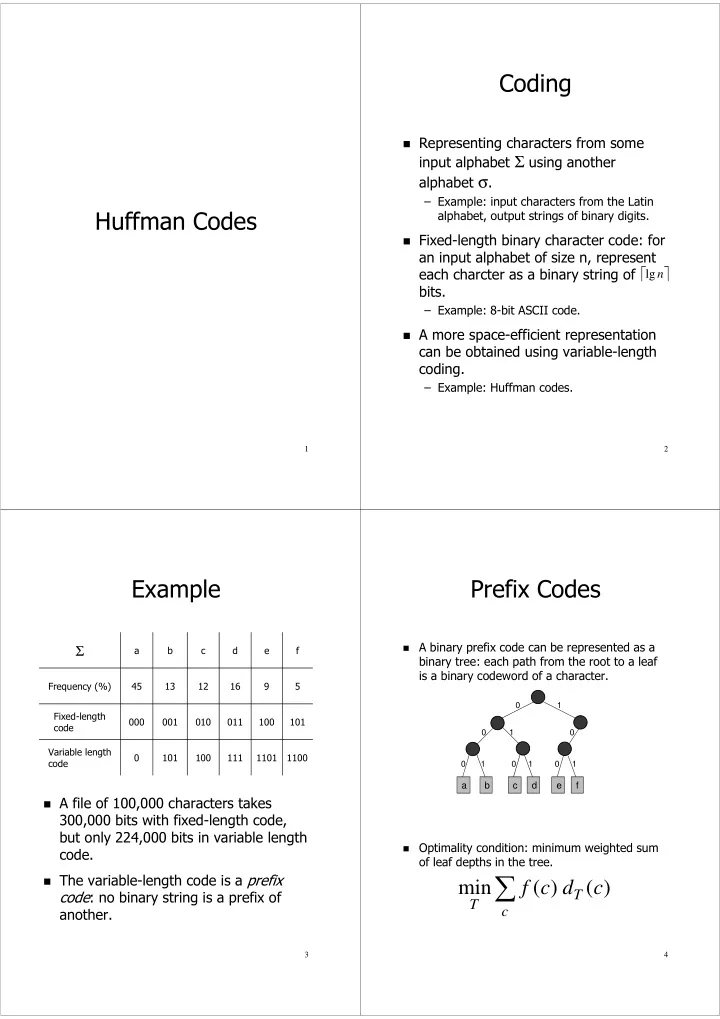

Huffman Codes

Coding

- Representing characters from some input alphabet Σ using another alphabet σ.
  - Example: input characters from the Latin alphabet, output strings of binary digits.
- Fixed-length binary character code: for an input alphabet of size n, represent each character as a binary string of ⌈lg n⌉ bits.
  - Example: 8-bit ASCII code.
- A more space-efficient representation can be obtained using variable-length coding.
  - Example: Huffman codes.

Example

| Σ | a | b | c | d | e | f |
|---|---|---|---|---|---|---|
| Frequency (%) | 45 | 13 | 12 | 16 | 9 | 5 |
| Fixed-length code | 000 | 001 | 010 | 011 | 100 | 101 |
| Variable-length code | 0 | 101 | 100 | 111 | 1101 | 1100 |

- A file of 100,000 characters takes 300,000 bits with the fixed-length code, but only 224,000 bits with the variable-length code.
- The variable-length code is a prefix code: no codeword is a prefix of another.

Prefix Codes

- A binary prefix code can be represented as a binary tree: each path from the root to a leaf is the binary codeword of a character.
- Optimality condition: minimum weighted sum of leaf depths in the tree,

  $$\min_T \sum_{c} f(c)\, d_T(c)$$

  where f(c) is the frequency of character c and d_T(c) is the depth of its leaf in tree T.

[Figure: binary coding tree with edges labeled 0 and 1 and leaves a, b, c, d, e, f]
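The 300,000 versus 224,000 bit comparison and the prefix property can be checked mechanically. The following is a minimal Java sketch, not part of the original slides; the class `CodeCost` and all of its method names are hypothetical, and the character counts assume the 100,000-character file with the frequencies from the table above.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper class, introduced only for illustration.
final class CodeCost {

    // Total number of bits: sum over characters of count * codeword length.
    static long totalBits(Map<Character, Integer> counts, Map<Character, String> code) {
        long bits = 0;
        for (Map.Entry<Character, Integer> e : counts.entrySet()) {
            bits += (long) e.getValue() * code.get(e.getKey()).length();
        }
        return bits;
    }

    // True if no codeword is a prefix of the codeword of a different character.
    static boolean isPrefixFree(Map<Character, String> code) {
        for (Map.Entry<Character, String> u : code.entrySet()) {
            for (Map.Entry<Character, String> v : code.entrySet()) {
                boolean differentChars = !u.getKey().equals(v.getKey());
                if (differentChars && v.getValue().startsWith(u.getValue())) {
                    return false;
                }
            }
        }
        return true;
    }

    // Character counts for a 100,000-character file with the table's frequencies.
    static Map<Character, Integer> exampleCounts() {
        int[] counts = {45000, 13000, 12000, 16000, 9000, 5000};
        Map<Character, Integer> m = new TreeMap<>();
        for (int i = 0; i < counts.length; i++) {
            m.put((char) ('a' + i), counts[i]);
        }
        return m;
    }

    // Assigns the given codewords to the characters a, b, c, ... in order.
    static Map<Character, String> codeTable(String... codewords) {
        Map<Character, String> m = new TreeMap<>();
        for (int i = 0; i < codewords.length; i++) {
            m.put((char) ('a' + i), codewords[i]);
        }
        return m;
    }

    static Map<Character, String> fixedCode() {
        return codeTable("000", "001", "010", "011", "100", "101");
    }

    static Map<Character, String> variableCode() {
        return codeTable("0", "101", "100", "111", "1101", "1100");
    }
}
```

Calling `CodeCost.totalBits(CodeCost.exampleCounts(), CodeCost.variableCode())` evaluates 45,000·1 + (13,000 + 12,000 + 16,000)·3 + (9,000 + 5,000)·4, which is the 224,000 figure quoted on the slide.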

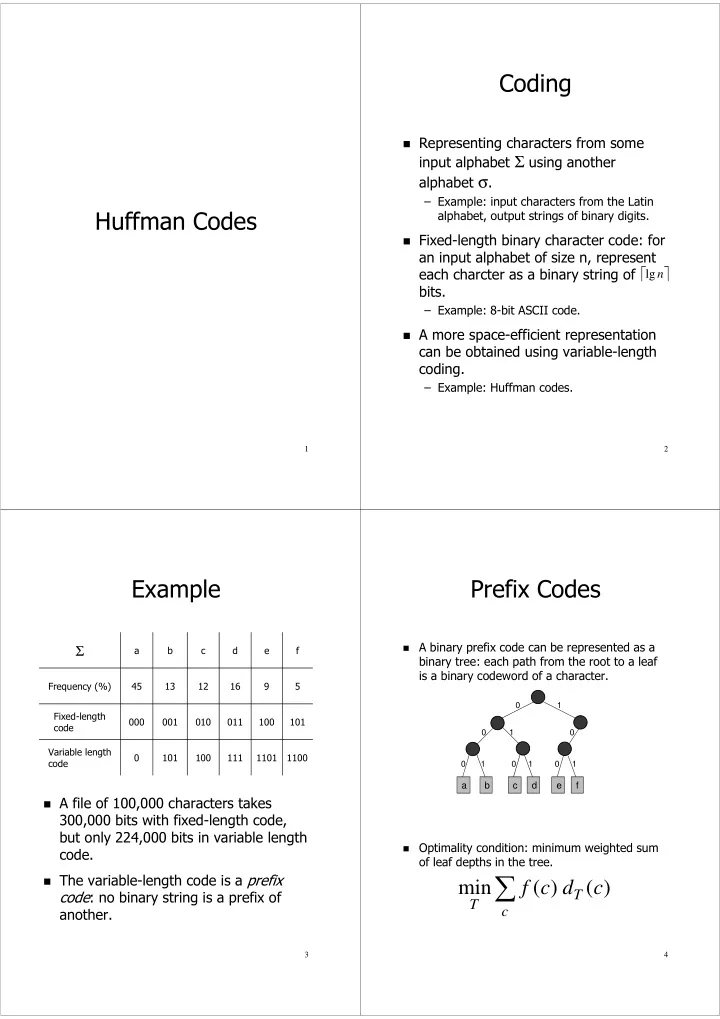

Optimality

- Note: an optimal code must correspond to a full tree!
- Fact: an optimal coding tree for an alphabet of n characters has (n-1) internal nodes.

[Figure: two coding trees over the leaves a, b, c, d, e, f, with edges labeled 0 and 1, separated by ">"]

Huffman Code construction

- Input: a set of n pairs (character, frequency)
- Init: create n coding trees (one per char)

```java
class CodingTree {
    private float frequency;
    private char letter;
    private CodingTree left, right;
}

PriorityQ Q = new PriorityQ(treeSet);
CodingTree node;
for (j = 1; j < n; j++) {
    node = new CodingTree();
    node.left = Q.deleteMin();
    node.right = Q.deleteMin();
    node.frequency = node.left.frequency + node.right.frequency;
    Q.insert(node);
}
return Q.deleteMin();
```

Example

In each merge, the tree removed first becomes the left child (edge 0) and the tree removed second becomes the right child (edge 1).

1. Initial forest: (45, a), (13, b), (12, c), (16, d), (9, e), (5, f).
2. Merge (5, f) and (9, e) into a node of weight 14. Forest: (45, a), (13, b), (12, c), (16, d), 14.
3. Merge (12, c) and (13, b) into a node of weight 25. Forest: (45, a), 25, (16, d), 14.

Example (continued)

4. Merge 14 and (16, d) into a node of weight 30. Forest: (45, a), 25, 30.
5. Merge 25 and 30 into a node of weight 55. Forest: (45, a), 55.
6. Merge (45, a) and 55 into the root of weight 100.
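The construction loop above can be made runnable with the standard library. The sketch below is one possible rendering, not the slides' own implementation: it swaps the slides' `PriorityQ` for `java.util.PriorityQueue` (with `poll` in place of `deleteMin` and `add` in place of `insert`), and the names `HuffmanSketch`, `Node`, `build`, `codes`, and `exampleFrequencies` are hypothetical.

```java
import java.util.Comparator;
import java.util.Map;
import java.util.PriorityQueue;
import java.util.TreeMap;

// Hypothetical class; mirrors the slides' CodingTree with java.util.PriorityQueue.
final class HuffmanSketch {

    static final class Node {
        final double frequency;
        final char letter;       // meaningful only for leaves
        final Node left, right;  // both null for a leaf

        Node(char letter, double frequency) {
            this.letter = letter;
            this.frequency = frequency;
            this.left = null;
            this.right = null;
        }

        Node(Node left, Node right) {
            this.letter = '\0';
            this.frequency = left.frequency + right.frequency;
            this.left = left;
            this.right = right;
        }

        boolean isLeaf() {
            return left == null && right == null;
        }
    }

    // Repeatedly merges the two lowest-frequency trees until one tree remains.
    // Returns null for an empty alphabet.
    static Node build(Map<Character, Double> freq) {
        PriorityQueue<Node> q =
                new PriorityQueue<>(Comparator.comparingDouble((Node n) -> n.frequency));
        for (Map.Entry<Character, Double> e : freq.entrySet()) {
            q.add(new Node(e.getKey(), e.getValue()));
        }
        while (q.size() > 1) {
            Node left = q.poll();   // smallest frequency: left child, edge 0
            Node right = q.poll();  // next smallest: right child, edge 1
            q.add(new Node(left, right));
        }
        return q.poll();
    }

    // Reads the codewords off the tree: 0 for a left edge, 1 for a right edge.
    static Map<Character, String> codes(Node root) {
        Map<Character, String> out = new TreeMap<>();
        collect(root, "", out);
        return out;
    }

    private static void collect(Node n, String prefix, Map<Character, String> out) {
        if (n == null) {
            return;
        }
        if (n.isLeaf()) {
            out.put(n.letter, prefix);
            return;
        }
        collect(n.left, prefix + "0", out);
        collect(n.right, prefix + "1", out);
    }

    // Frequencies (%) from the example slides.
    static Map<Character, Double> exampleFrequencies() {
        Map<Character, Double> f = new TreeMap<>();
        f.put('a', 45.0);
        f.put('b', 13.0);
        f.put('c', 12.0);
        f.put('d', 16.0);
        f.put('e', 9.0);
        f.put('f', 5.0);
        return f;
    }
}
```

On the example frequencies no two trees ever tie at the front of the queue, so `codes(build(exampleFrequencies()))` reproduces the variable-length code from the table: a=0, b=101, c=100, d=111, e=1101, f=1100. With tied frequencies the resulting tree depends on how the queue breaks ties, although the total cost stays optimal.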

Huffman Decoding

- Starting at the root of the coding tree, read input bits.
  - After reading 0 go left.
  - After reading 1 go right.
  - If a leaf node has been reached, output the character stored in the leaf, and return to the root of the tree.

Optimality Proof (1)

- Lemma 1 (17.2): Let C be an alphabet and x, y two characters in C with lowest frequencies. Then there exists an optimal prefix code tree in which x and y are sibling leaves.

[Figure: coding trees with leaves x, y, b, c shown in different positions]

Optimality Proof (2)

- Lemma 2 (17.3): Let T be an optimal prefix code tree for alphabet C. Consider any two sibling characters x and y in C and let z be their parent in T. Then, considering z as a character with frequency f[z] = f[x] + f[y], the tree T' = T − {x, y} represents an optimal prefix code for the alphabet C' = (C − {x, y}) ∪ {z}.

Optimality Proof (3)

- Theorem: Huffman's algorithm produces an optimal prefix code.
- Proof: By induction on the size of the alphabet C, using Lemmas 1 and 2.
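The decoding walk described under Huffman Decoding (go left on 0, go right on 1, emit at a leaf, return to the root) can be sketched in Java as follows. This is an illustration under stated assumptions rather than code from the slides: the class `PrefixDecoder` and its methods are hypothetical, the input is assumed to be a string of '0' and '1' characters, and the coding tree is rebuilt from the example's codeword table so that the block stays self-contained.

```java
// Hypothetical class, introduced only for illustration.
final class PrefixDecoder {

    static final class Node {
        Node left, right;
        char letter;
        boolean leaf;
    }

    // Builds a coding tree from a codeword table: '0' descends left, '1' descends right.
    static Node treeFrom(char[] letters, String[] codewords) {
        Node root = new Node();
        for (int i = 0; i < letters.length; i++) {
            Node cur = root;
            for (char bit : codewords[i].toCharArray()) {
                if (bit == '0') {
                    if (cur.left == null) cur.left = new Node();
                    cur = cur.left;
                } else {
                    if (cur.right == null) cur.right = new Node();
                    cur = cur.right;
                }
            }
            cur.leaf = true;
            cur.letter = letters[i];
        }
        return root;
    }

    // Walks the tree bit by bit; at each leaf, emits its letter and restarts at the root.
    static String decode(Node root, String bits) {
        StringBuilder out = new StringBuilder();
        Node cur = root;
        for (int i = 0; i < bits.length(); i++) {
            cur = (bits.charAt(i) == '0') ? cur.left : cur.right;
            if (cur == null) {
                throw new IllegalArgumentException("no codeword matches at bit " + i);
            }
            if (cur.leaf) {
                out.append(cur.letter);
                cur = root;
            }
        }
        if (cur != root) {
            throw new IllegalArgumentException("input ends in the middle of a codeword");
        }
        return out.toString();
    }

    // Tree for the variable-length code of the example slides.
    static Node exampleTree() {
        return treeFrom(
                new char[] {'a', 'b', 'c', 'd', 'e', 'f'},
                new String[] {"0", "101", "100", "111", "1101", "1100"});
    }
}
```

For instance, `PrefixDecoder.decode(PrefixDecoder.exampleTree(), "001011101")` splits the input as 0, 0, 101, 1101 and returns "aabe". Because the code is a prefix code, no separator between codewords is needed.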
